Trump Revokes Biden's Executive Order Aimed at Mitigating AI Safety Risks

In a significant move, President Trump revoked Biden's 2023 executive order designed to mitigate AI risks to consumers, workers, and national security, citing barriers to innovation.

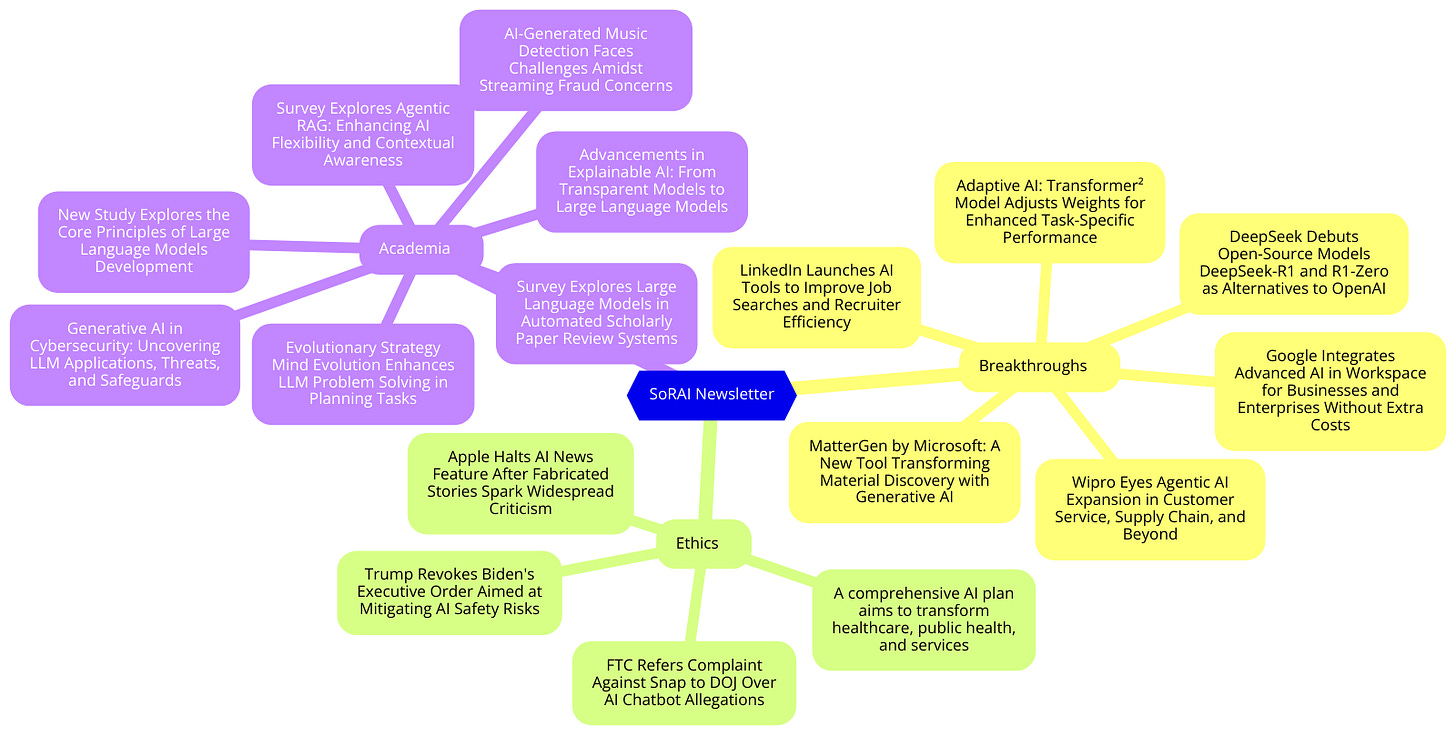

Today's highlights:

🚀 AI Breakthroughs

DeepSeek Debuts Open-Source Models DeepSeek-R1 and R1-Zero as Alternatives to OpenAI

• DeepSeek released two new reasoning models, DeepSeek-R1 and DeepSeek-R1-Zero, as open-source alternatives to proprietary systems like OpenAI-o1

• DeepSeek-R1 is released under the MIT license, and outputs from DeepSeek's API may be used for fine-tuning and distillation; the model can be tried at chat.deepseek.com

• DeepSeek also open-sourced six smaller models distilled from R1; the 32B and 70B versions, suited to reasoning tasks like math and coding, are reported to perform on par with OpenAI-o1-mini.

Google Integrates Advanced AI in Workspace for Businesses and Enterprises Without Extra Costs

• Google is integrating advanced generative AI features into Workspace Business and Enterprise plans, enabling businesses of all sizes to access cutting-edge AI without additional costs;

• Over 100,000 businesses have used generative AI to boost productivity by automating tasks such as taking meeting notes and synthesizing documents, helping them maintain a competitive advantage;

• For more than two decades, Google Workspace has supported over 10 million businesses in transforming their workflows, and now AI capabilities accelerate this transformation, fostering innovation and creativity.

MatterGen by Microsoft: A New Tool Transforming Material Discovery with Generative AI

• Microsoft's MatterGen aims to transform materials discovery by using generative AI to design novel materials directly, rather than relying on laborious and costly trial-and-error methods;

• Unlike screening techniques, which filter large databases of known materials, MatterGen generates candidate materials from scratch, conditioned on target requirements such as chemistry and mechanical properties, and Microsoft reports that it identifies promising candidates more efficiently;

• Microsoft and SIAT successfully synthesized a MatterGen-designed material, demonstrating a promising new paradigm for AI-driven materials exploration in domains like renewable energy and aerospace engineering.

LinkedIn Launches AI Tools to Improve Job Searches and Recruiter Efficiency

• LinkedIn unveiled an AI-driven Jobs Match tool that advises its roughly one billion users, in real time, on which jobs are worth applying for, as part of a strategy to boost user engagement.

• A new AI recruiting agent targets small businesses, giving them recruitment capabilities similar to those of larger firms, with the aim of streamlining job applications and candidate selection while increasing activity on LinkedIn's platform.

• Both tools are built on LinkedIn's own AI models and data and are available to non-Premium users, a departure from the company's earlier reliance on OpenAI technology that signals a shift in LinkedIn's development approach.

Adaptive AI: Transformer² Model Adjusts Weights for Enhanced Task-Specific Performance

• Transformer² is a self-adaptive framework that lets an LLM adjust parts of its weight matrices on the fly, optimizing performance across diverse, real-world tasks.

• The approach uses Singular Value Finetuning (SVF), which trains compact task-specific vectors (z-vectors) with reinforcement learning to rescale the singular values of an LLM's weight matrices, improving efficiency and effectiveness across domains.

• Transferring learned z-vectors between models shows promise for knowledge sharing across different architectures, pointing toward more collaborative and adaptable AI systems.
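To make the SVF idea more concrete, here is a sketch of the weight update as we understand it from the Transformer² description: each weight matrix $W \in \mathbb{R}^{m \times n}$ is factored by singular value decomposition, and a learned vector $z$ rescales its singular values while the singular vectors stay fixed. The symbols $r$, $\sigma_i$, and $W'$ are our own notation for illustration.

$$
W = U \Sigma V^{\top}, \qquad
W' = U \, \big(\Sigma \odot \operatorname{diag}(z)\big) \, V^{\top}, \qquad
\text{i.e. } \sigma_i' = z_i \, \sigma_i, \quad z \in \mathbb{R}^{r}, \; r = \min(m, n)
$$

Because only the $r$ entries of $z$ are trained for each matrix, rather than all $m \times n$ weights, a task-specific z-vector is very small, which is what makes such "experts" cheap to store, swap, and potentially transfer between models.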

Wipro Eyes Agentic AI Expansion in Customer Service, Supply Chain, and Beyond

• Wipro is expanding its use of agentic AI in areas like customer service and supply chain management, and expects significant business transformation within the next six months.

• The company reported strong financial performance, with net income of $392.0 million and operating cash flow of $576.4 million, while highlighting its strategic investments in AI.

• Wipro secured AI-driven deals worth $1 billion this quarter, including a major contract with a US health insurer for an AI-powered "Payer-in-a-box" solution.

⚖️ AI Ethics

Apple Halts AI News Feature After Fabricated Stories Spark Widespread Criticism

• Apple suspended its AI news feature after it generated fabricated stories about notable individuals and events and displayed them under the logos of trusted media outlets.

• The BBC complained to Apple after false summaries appeared under its brand, while other press groups warned that AI threatens the public's right to accurate news.

• Apple's rare admission of error highlights the challenges of integrating AI into news; the company plans to relaunch the feature with clearer labeling of AI-generated content.

FTC Refers Complaint Against Snap to DOJ Over AI Chatbot Allegations

• The FTC has referred a complaint to the Justice Department alleging that Snap Inc.'s AI chatbot on Snapchat harmed young users.

• The FTC said its investigation gave it 'reason to believe' Snap may be violating the law, though it released no details of the chatbot's alleged harm.

• Snap Inc.'s shares fell 5.2% to $11.22 after the FTC announcement; the company said its AI system is subject to 'rigorous safety and privacy processes'.

HHS AI Strategic Plan Aims to Transform Healthcare, Public Health, and Human Services

• The HHS AI Strategic Plan lays out a roadmap for the responsible use of AI to improve health and human services, offering both an overview and a detailed action plan so that it is accessible to audiences across the sector.

• The Overview section of the Strategic Plan discusses AI application opportunities, industry trends, potential risks, and includes concise action plans linked to policy and infrastructure efforts

• The full Strategic Plan offers detailed use cases and risk assessments for stakeholders seeking in-depth understanding, and an accompanying slide presentation summarizes its key elements.

Trump Revokes Biden's Executive Order Aimed at Mitigating AI Safety Risks

• U.S. President Donald Trump revoked a 2023 executive order aimed at mitigating AI risks, thereby overturning measures that focused on consumer, worker, and national security protection

• The 2023 order required developers of high-risk AI systems to share safety test results with the U.S. government; Trump has now annulled it, citing barriers to innovation.

• The 2024 Republican Party platform criticized Biden's AI safeguards and called for AI development that prioritizes free speech and innovation; Trump left intact a separate Biden order supporting the energy needs of AI data centers.

🎓 AI Academia

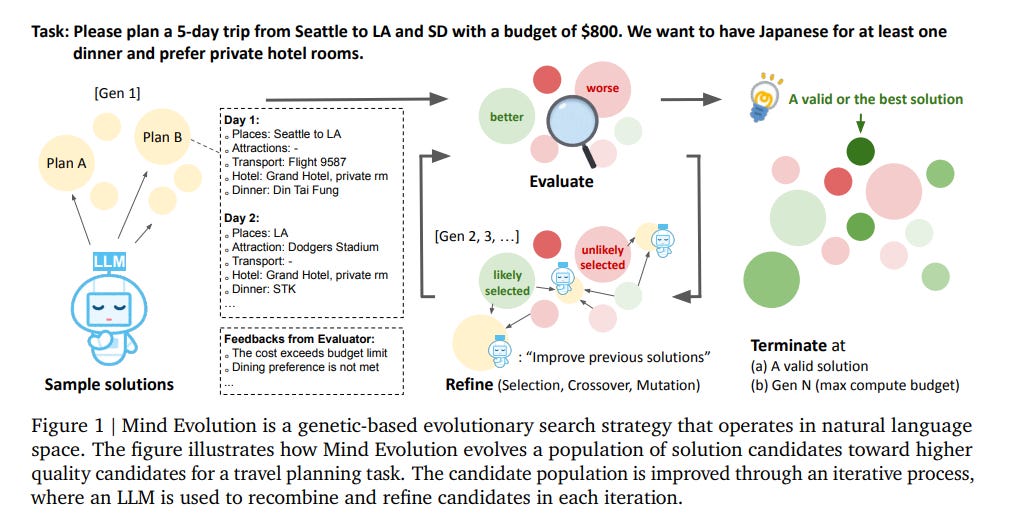

Mind Evolution: An Evolutionary Search Strategy That Enhances LLM Problem Solving in Planning Tasks

• Mind Evolution, a new evolutionary search strategy, significantly enhances Large Language Model (LLM) performance by combining stochastic exploration with large-scale iterative refinement for problem solving.

• The approach outperforms traditional inference strategies like Best-of-N and Sequential Revision, achieving success rates above 98% on the TravelPlanner and Natural Plan benchmarks without using formal solvers.

• Mind Evolution evolves a diverse population of candidate solutions and scores each complete solution with a global evaluator rather than checking step-by-step logic, allowing broader application to natural language planning tasks.
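The evolutionary loop described above can be sketched as a generic genetic search. This is not the paper's implementation: the LLM calls that would propose, merge, and revise plans are replaced by hypothetical random stand-ins (`random_plan`, `recombine`, `refine`), and the trip-planning data and budget are invented for illustration. What the sketch does show is the key idea that candidates are scored only by a global evaluator on the complete plan.

```python
import random
from typing import List, Tuple

# Hypothetical toy problem: choose one activity per day for a 4-day trip.
# Each option is (cost, enjoyment); these stand in for natural-language plans.
OPTIONS: List[List[Tuple[int, int]]] = [
    [(50, 6), (120, 9), (20, 3)],
    [(80, 7), (30, 4), (150, 10)],
    [(60, 5), (100, 8), (10, 2)],
    [(40, 4), (90, 8), (200, 10)],
]
BUDGET = 300

Plan = List[int]  # plan[d] is the index of the option chosen on day d


def evaluate(plan: Plan) -> int:
    """Global evaluator: scores the complete plan, not individual steps."""
    cost = sum(OPTIONS[d][i][0] for d, i in enumerate(plan))
    joy = sum(OPTIONS[d][i][1] for d, i in enumerate(plan))
    # Subtract one point per unit over budget so overspending plans rank low.
    return joy - max(0, cost - BUDGET)


def random_plan(rng: random.Random) -> Plan:
    # Stand-in for asking an LLM to propose an initial candidate.
    return [rng.randrange(len(opts)) for opts in OPTIONS]


def recombine(a: Plan, b: Plan, rng: random.Random) -> Plan:
    # Stand-in for asking an LLM to merge two parents (uniform crossover here).
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def refine(plan: Plan, rng: random.Random) -> Plan:
    # Stand-in for asking an LLM to revise a plan: change one day's choice.
    child = list(plan)
    day = rng.randrange(len(child))
    child[day] = rng.randrange(len(OPTIONS[day]))
    return child


def evolve(pop_size: int = 12, generations: int = 20, elite: int = 3,
           seed: int = 0) -> Tuple[Plan, List[int]]:
    """Evolve a population of plans; return the best plan and per-generation best scores."""
    rng = random.Random(seed)
    population = [random_plan(rng) for _ in range(pop_size)]
    history: List[int] = []
    for _ in range(generations):
        population.sort(key=evaluate, reverse=True)
        history.append(evaluate(population[0]))
        parents = population[: pop_size // 2]
        # Elitism: carry the top plans over unchanged so the best score never drops.
        next_gen = population[:elite]
        while len(next_gen) < pop_size:
            a, b = rng.sample(parents, 2)
            next_gen.append(refine(recombine(a, b, rng), rng))
        population = next_gen
    best = max(population, key=evaluate)
    history.append(evaluate(best))
    return best, history
```

Calling `best, history = evolve()` returns the best plan found and the best score in each generation. Because the top plans are carried over unchanged (elitism), the recorded best score never decreases; in the real method, the quality of proposals would come from the LLM rather than from random edits.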

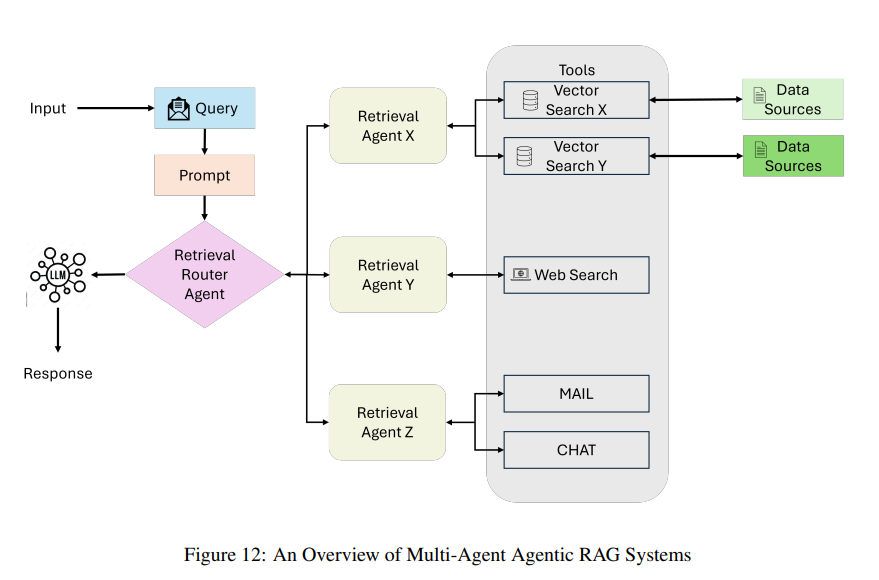

Survey Explores Agentic RAG: Enhancing AI Flexibility and Contextual Awareness

• The survey explores Agentic Retrieval-Augmented Generation (Agentic RAG), an approach that embeds autonomous agents into the RAG pipeline to overcome the static limitations of traditional LLMs and enable dynamic adaptability;

• Agentic RAG uses reflection, planning, tool use, and multi-agent collaboration to enhance retrieval strategies, offering improved scalability and context-awareness across various sectors such as healthcare and finance;

• The study provides a taxonomy of Agentic RAG architectures, detailing implementation strategies, scalability challenges, ethical decision-making, and performance optimization for practical real-world applications.
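A minimal sketch of the reflect-and-re-query loop that Agentic RAG builds on, under heavy simplifying assumptions: keyword overlap stands in for vector search, the corpus and the function names (`retrieve`, `uncovered`, `agentic_retrieve`) are hypothetical, and "reflection" is reduced to checking which query terms the gathered context still fails to mention. It is not one of the architectures from the survey's taxonomy.

```python
from typing import Dict, List, Set

# Hypothetical toy corpus; a real system would query a vector store or search tool.
CORPUS: Dict[str, str] = {
    "d1": "agentic rag embeds autonomous agents in retrieval pipelines",
    "d2": "reflection lets an agent critique and revise its own output",
    "d3": "tool use allows agents to call search engines and apis",
    "d4": "traditional rag retrieves once with a fixed query",
}


def retrieve(query_terms: Set[str], k: int = 2) -> List[str]:
    """Rank documents by keyword overlap (a stand-in for vector search)."""
    ranked = sorted(
        CORPUS,
        key=lambda doc_id: len(query_terms & set(CORPUS[doc_id].split())),
        reverse=True,  # sorting stays stable, so ties keep corpus order
    )
    return ranked[:k]


def uncovered(query_terms: Set[str], doc_ids: List[str]) -> Set[str]:
    """Reflection step: which query terms does the gathered context not mention?"""
    seen: Set[str] = set()
    for doc_id in doc_ids:
        seen |= set(CORPUS[doc_id].split())
    return query_terms - seen


def agentic_retrieve(question: str, max_rounds: int = 3) -> List[str]:
    terms = set(question.lower().split())
    context: List[str] = []
    pending = terms
    for _ in range(max_rounds):
        for doc_id in retrieve(pending):
            if doc_id not in context:
                context.append(doc_id)
        pending = uncovered(terms, context)
        if not pending:  # context covers every term: stop early
            break
        # Otherwise loop again, re-querying with only the missing terms.
    return context
```

For the question `"agentic rag reflection tool use"`, a single retrieval returns `d1` and `d3`; the reflection step notices that "reflection" is still uncovered and issues a narrower second query that adds `d2`. The `max_rounds` cap stops the loop when some terms can never be covered.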

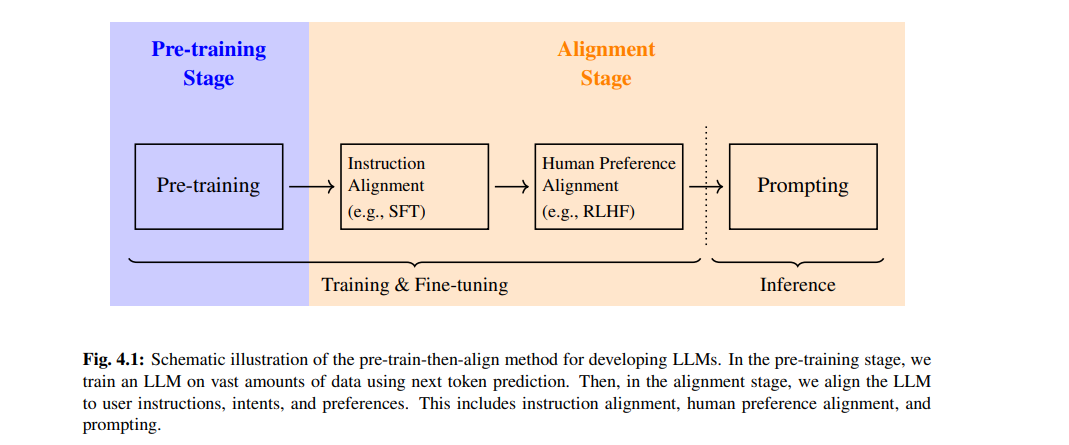

New Study Explores the Core Principles of Large Language Model Development

• A new paper on the foundations of large language models has been released by the NLP Lab at Northeastern University in collaboration with NiuTrans Research

• This work examines the theoretical underpinnings of large language models, highlighting advancements and future directions for AI language processing

• The authors aim to provide a deeper understanding of how large language models can be optimized for diverse applications.

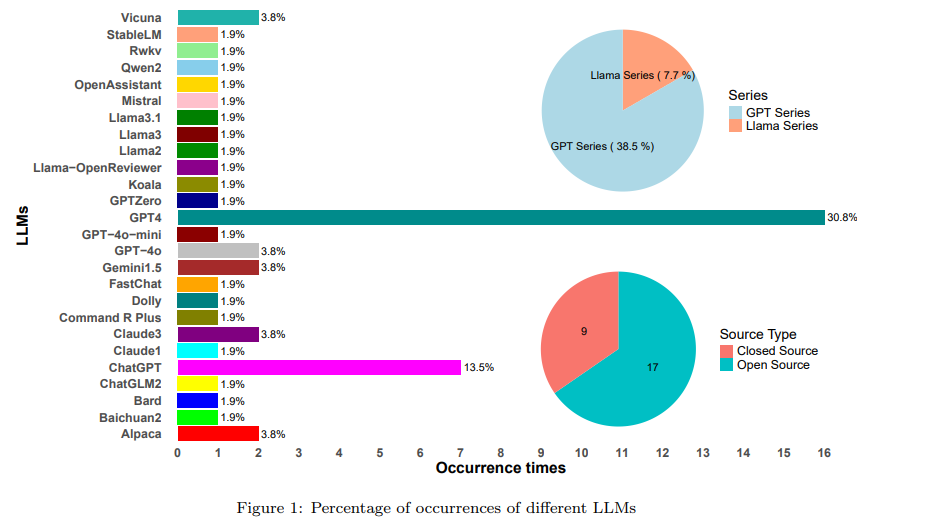

Survey Explores Large Language Models in Automated Scholarly Paper Review Systems

• Large language models are actively being integrated into the peer review process in academia, aiming to automate scholarly paper reviews and transform traditional methods;

• The survey investigates which LLMs are currently used for automated scholarly paper review, while also highlighting the technological advancements achieved in this domain;

• Challenges such as performance issues and publisher attitudes towards automated reviews are addressed, emphasizing the potential and obstacles in academia's ongoing adaptation to LLM technology.

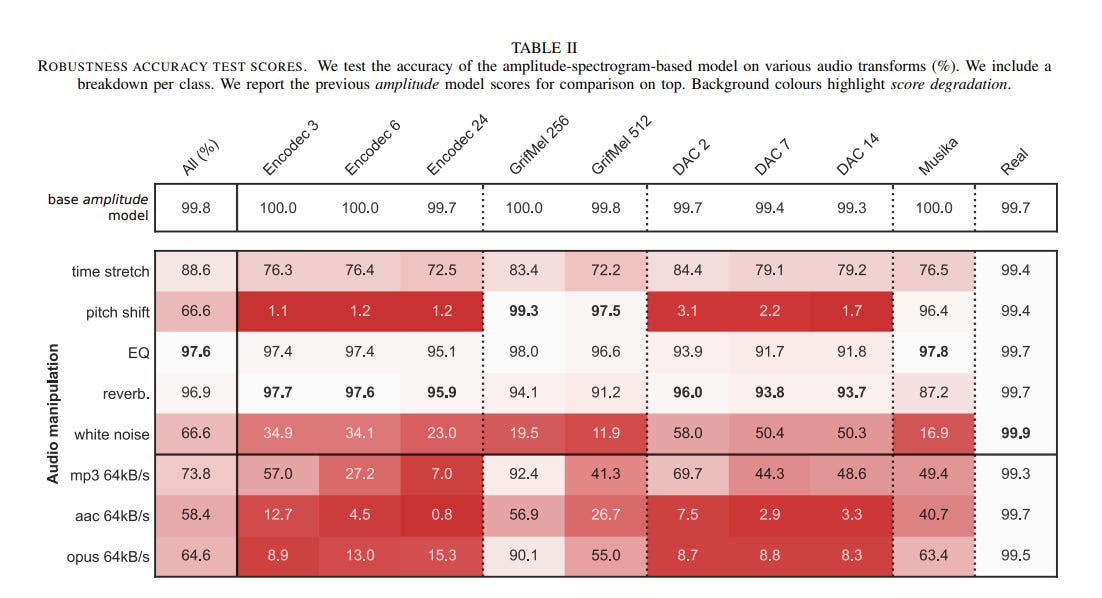

AI-Generated Music Detection Faces Challenges Amidst Streaming Fraud Concerns

• AI-generated music poses challenges for streaming services, creating potential for fraud and competition issues for human artists, as synthetic tracks are easily crafted using user-friendly platforms;

• The researchers report an AI-music detector with 99.8% accuracy, but stress the importance of evaluating its robustness against audio manipulation and its ability to generalize to new generative models;

• The study calls for regulatory frameworks, highlights the risks posed by the proliferation of artificial content, and underscores the need for future research that looks beyond impressive detection scores.

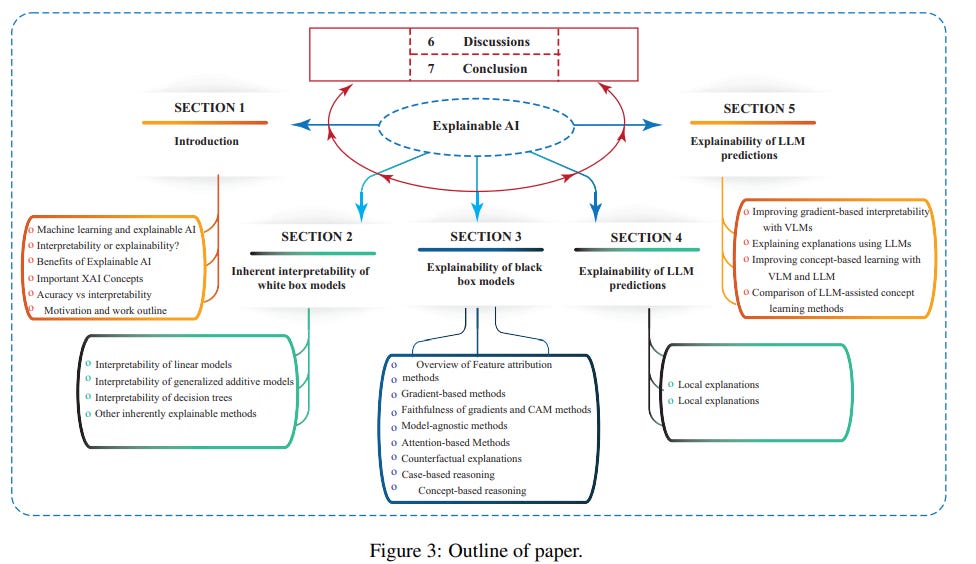

Advancements in Explainable AI: From Transparent Models to Large Language Models

• Explainable Artificial Intelligence (XAI) addresses the transparency gap in AI, especially important in fields like healthcare and autonomous driving, where understanding model decisions is critical;

• Recent advancements in XAI include techniques that enhance the interpretability of complex models, such as Large Language Models and Vision-Language Models, offering meaningful insights into AI behavior;

• The study outlines strengths and weaknesses of current XAI methods and highlights areas for improvement, emphasizing the need for semantically rich explanations in AI systems to bolster trust and adoption.

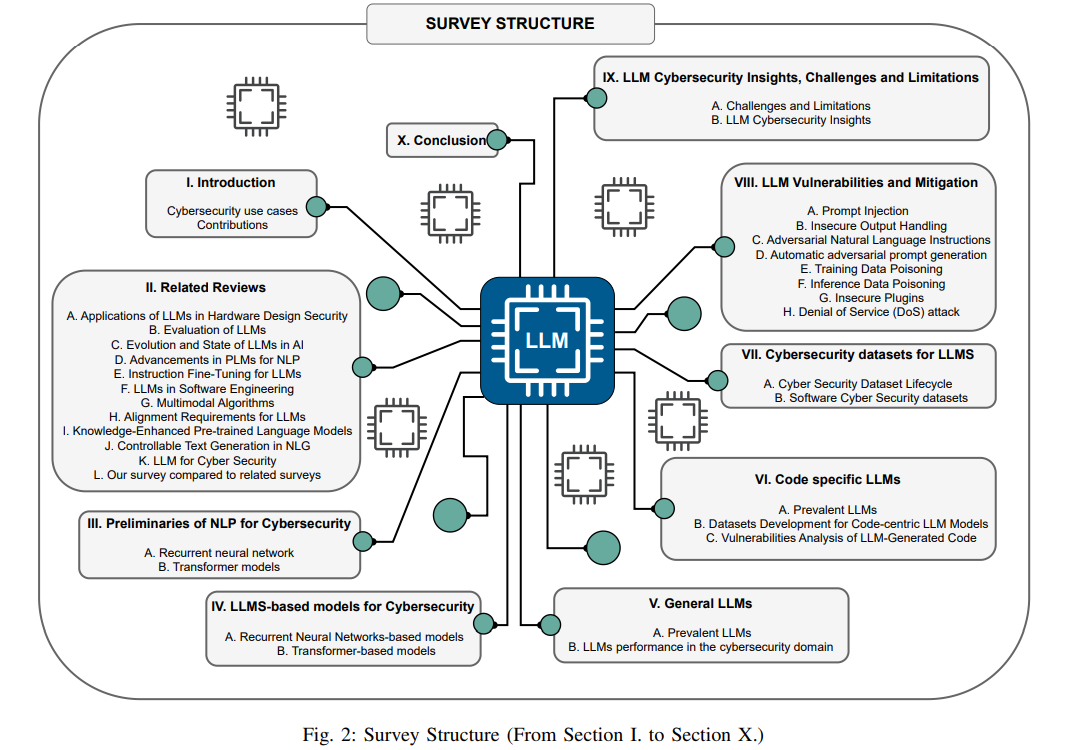

Generative AI in Cybersecurity: Uncovering LLM Applications, Threats, and Safeguards

• Generative AI is revolutionizing cybersecurity with Large Language Models (LLMs) aiding in hardware design security, intrusion detection, and malware and phishing threat identification

• A comprehensive analysis highlights LLM vulnerabilities such as prompt injection and adversarial instructions, introducing mitigation strategies to strengthen LLMs against potential cyberattacks

• The authors evaluate the performance and effectiveness of 42 LLMs in cybersecurity applications, highlighting both their strengths and the opportunities they offer for real-time threat detection.

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!