Tiny Tech, Big Impact: Samsung Introduces the Galaxy Ring

OpenAI Pushes the Boundaries with 'Strawberry' and AGI Monitoring Framework, KoBold Metals Strikes Massive Copper Deposit in Zambia, AMD Acquires Silo AI, Washington Post Turns to AI for Climate Engag

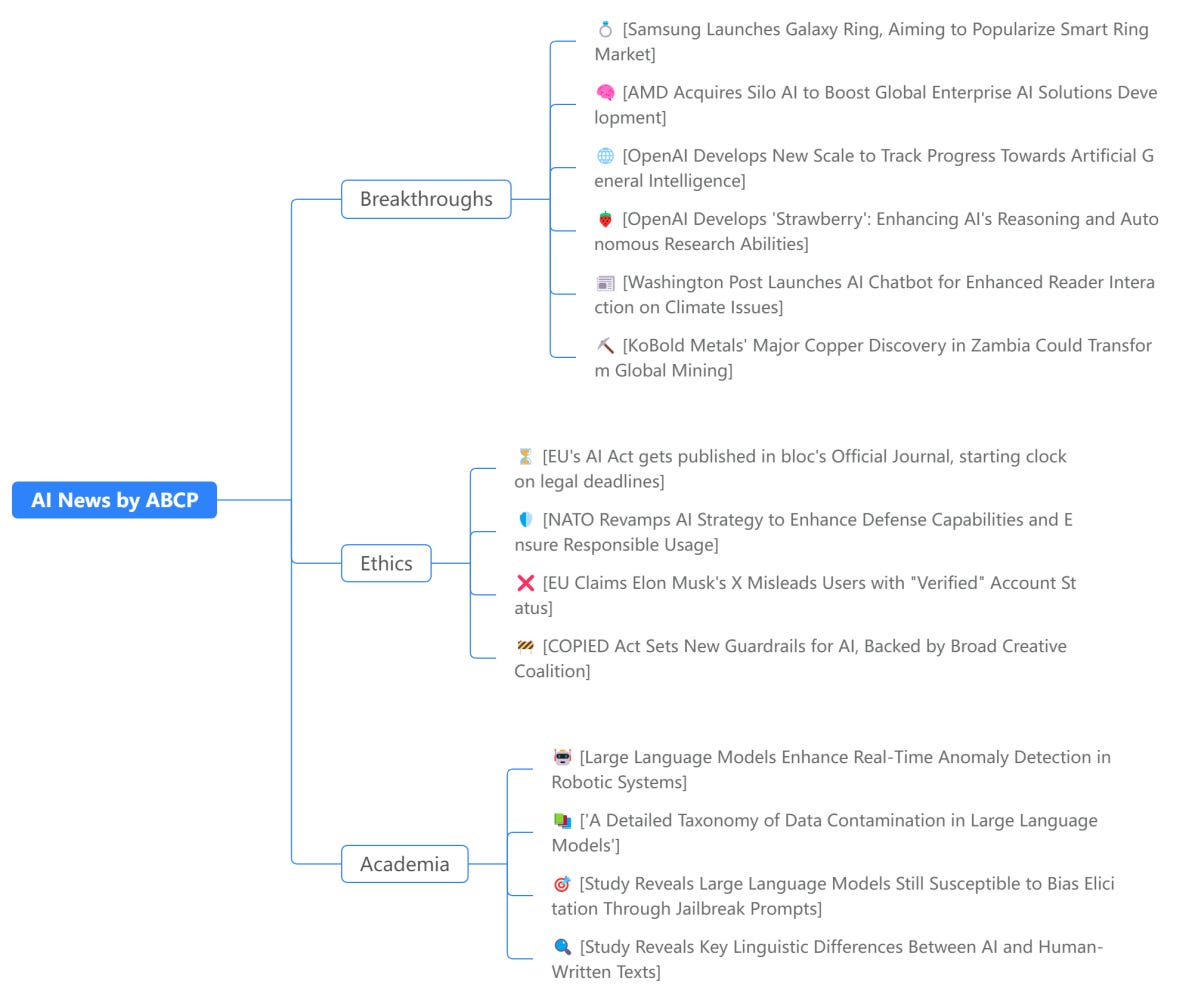

Today's highlights:

🚀 AI Breakthroughs

Samsung Launches Galaxy Ring, Aiming to Popularize Smart Ring Market

• Samsung launched its first smart ring, the Galaxy Ring, at its Galaxy Unpacked event, targeting health and fitness enthusiasts

• The Galaxy Ring features sleep tracking capabilities and integrates with Samsung's AI-powered ecosystem

• Analysts suggest the move could popularize smart rings, projecting a global market of four million units by 2025.

AMD Acquires Silo AI to Boost Global Enterprise AI Solutions Development

• AMD acquires Europe's largest private AI lab, Silo AI, for $665 million to boost its AI solution offerings globally

• The acquisition aims to enhance AMD's open-source AI software for more efficient training and inference on its computing platforms

• Silo AI specializes in end-to-end AI solutions, impacting diverse markets with clients like Allianz and Philips.

OpenAI Develops New Scale to Track Progress Towards Artificial General Intelligence

• OpenAI introduces a new scale to chart progress towards AGI, breaking advancements into five distinct levels, starting with technologies like ChatGPT

• At Level 2, OpenAI's models aim to match human PhD-level problem-solving capabilities, hinting at the potential of their upcoming GPT-5

• Despite strides towards AGI, substantial challenges remain, including significant computing requirements and unresolved ethical and safety concerns.

OpenAI Develops 'Strawberry': Enhancing AI's Reasoning and Autonomous Research Abilities

• OpenAI's "Strawberry" project aims to develop AI models capable of conducting autonomous internet-based research to enhance reasoning abilities

• Previously known as Project Q*, Strawberry focuses on Long-Horizon Tasks (LHT), allowing AI to plan and execute complex activities over extended periods

• The technology includes a "deep-research" dataset and a novel post-training phase, enhancing the base models’ ability to perform sophisticated calculations and reasoning tasks.

Washington Post Launches AI Chatbot for Enhanced Reader Interaction on Climate Issues

• The Washington Post introduces an AI-powered chatbot to engage readers by answering queries based on its climate reporting from the past eight years

• This chatbot, an experiment, draws solely on the publication’s climate content, reinforcing answers with related articles to ensure information accuracy

• In a broader trend, major news outlets are increasingly integrating generative AI technology, with significant partnerships and legal actions shaping the landscape.

KoBold Metals Discovers Largest Copper Deposit in Over a Decade Using AI

• KoBold Metals, using AI technology, discovered a major copper deposit in Zambia, potentially the largest in over a decade

• Estimated annual production of at least 300,000 tons could generate billions in revenue yearly for decades

• Independent assessments confirm the size of the deposit, with KoBold anticipating further valuable findings.
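
As a rough check on the "billions in revenue" claim above, the arithmetic can be sketched as follows. The 300,000-ton figure comes from the summary; the copper price is a purely hypothetical placeholder chosen for illustration, not a figure from KoBold or the original report, and the helper name is likewise made up.

```python
# Back-of-the-envelope revenue estimate for the reported production figure.
ANNUAL_TONS = 300_000               # "at least 300,000 tons" per the summary
ASSUMED_PRICE_USD_PER_TON = 9_000   # ASSUMPTION: illustrative price, not from the source


def annual_revenue_usd(tons: int, price_per_ton: int) -> int:
    """Gross annual revenue in USD, ignoring costs, royalties, and price swings."""
    return tons * price_per_ton


revenue = annual_revenue_usd(ANNUAL_TONS, ASSUMED_PRICE_USD_PER_TON)
print(f"${revenue / 1e9:.1f} billion per year")  # prints "$2.7 billion per year"
```

Under that assumed price the gross figure lands in the low billions per year, which is consistent with the wording of the claim; a different price scales the result linearly.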

⚖️ AI Ethics

EU's AI Act gets published in bloc's Official Journal, starting clock on legal deadlines

• The EU AI Act will come into force on August 1, 2024, with full applicability by mid-2026.

• The law takes a phased approach, with different provisions becoming applicable at various times between 2025 and 2027.

• Key elements include bans on certain AI uses, transparency requirements for AI chatbots and powerful AI models, and obligations for high-risk AI applications.

New Timeline:

➡ 𝟭𝟮 𝗝𝘂𝗹𝘆 𝟮𝟬𝟮𝟰: EU AI Act published in Official Journal

➡ 𝟭 𝗔𝘂𝗴𝘂𝘀𝘁 𝟮𝟬𝟮𝟰: Enters into force

➡ 𝟮 𝗙𝗲𝗯𝗿𝘂𝗮𝗿𝘆 𝟮𝟬𝟮𝟱: Chapters I and II apply (prohibited practices)

➡ 𝟮 𝗔𝘂𝗴𝘂𝘀𝘁 𝟮𝟬𝟮𝟱: Rules on general-purpose AI models and governance start

➡ 𝟮 𝗔𝘂𝗴𝘂𝘀𝘁 𝟮𝟬𝟮𝟲: General application begins

➡ 𝟮 𝗔𝘂𝗴𝘂𝘀𝘁 𝟮𝟬𝟮𝟳: High-risk AI system classification rules apply
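
For teams tracking these deadlines, the timeline above can be encoded as a small lookup. This is a minimal sketch: the dates and labels are copied from the list, while the data structure and the function names (`milestones_reached`, `next_milestone`) are illustrative assumptions, not part of any official tool.

```python
from datetime import date

# Milestones copied from the timeline above, in chronological order.
MILESTONES = [
    (date(2024, 7, 12), "EU AI Act published in Official Journal"),
    (date(2024, 8, 1), "Enters into force"),
    (date(2025, 2, 2), "Chapters I and II apply (prohibited practices)"),
    (date(2025, 8, 2), "Rules on general-purpose AI models and governance start"),
    (date(2026, 8, 2), "General application begins"),
    (date(2027, 8, 2), "High-risk AI system classification rules apply"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones dated on or before `on`."""
    return [label for when, label in MILESTONES if when <= on]


def next_milestone(on: date):
    """Return the first (date, label) pair strictly after `on`, or None."""
    for when, label in MILESTONES:
        if when > on:
            return when, label
    return None


if __name__ == "__main__":
    today = date(2025, 3, 1)  # example date chosen for illustration
    print(milestones_reached(today))
    print(next_milestone(today))
```

Keeping the list sorted lets `next_milestone` return the earliest upcoming deadline with a simple linear scan.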

NATO Revamps AI Strategy to Enhance Defense Capabilities and Ensure Responsible Usage

• NATO's AI Strategy accelerates the adoption of generative AI technologies, focusing on interoperability and responsible use within defense

• AI's role in defense escalates as NATO updates strategic aims, highlighting the necessity for enhanced AI-ready workforce and standards

• Aligning with global norms, NATO seeks to safeguard intellectual property and foster alliances with academia and industry, mitigating adversarial AI risks.

EU Claims Elon Musk's X Misleads Users with "Verified" Account Status

• The European Commission claims Elon Musk's X misleads users because blue check marks, which once signified verified authenticity, are now available to any paying subscriber.

• Violations of the EU's Digital Services Act could expose X to fines of up to 6% of its global annual revenue for facilitating misinformation.

• Musk disputes the preliminary findings, alleging that the Commission offered platforms a deal to censor speech, which X refused to accept.

COPIED Act Sets New Guardrails for AI, Backed by Broad Creative Coalition

• COPIED Act introduced to enforce new federal transparency guidelines for AI-generated content, enhancing protection against harmful deepfakes

• Legal framework enhanced by granting individuals and organizations the right to sue for unauthorized use of AI-generated content

• Major endorsers, including SAG-AFTRA and the Recording Academy, applaud the Act for establishing protections for artists and journalists against AI-driven content theft.

🎓 AI Academia

Large Language Models Enhance Real-Time Anomaly Detection in Robotic Systems

• A new two-stage reasoning framework using large language models enhances real-time anomaly detection and safety in robotic systems

• The system's initial stage quickly classifies anomalies, while the second stage, though slower, deliberates on safe fallback actions

• Demonstrations in simulated and real-world settings show the system can outperform traditional models in dynamic environments like autonomous vehicles.
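
The fast-then-slow pattern described above can be sketched as follows. This is only an illustration of the control flow, not the paper's implementation: the keyword classifier and rule-based reasoner are hypothetical stand-ins for whatever models the authors use (the slow stage would be an LLM call in practice), and all names here are assumptions.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    anomalous: bool
    action: str


def two_stage_monitor(
    observation: str,
    fast_classifier: Callable[[str], bool],
    slow_reasoner: Callable[[str], str],
    nominal_action: str = "continue",
) -> Decision:
    """Run a cheap anomaly check first; deliberate only when it fires."""
    # Stage 1: fast classification, run on every observation.
    if not fast_classifier(observation):
        return Decision(anomalous=False, action=nominal_action)
    # Stage 2: slower deliberation that picks a safe fallback action.
    return Decision(anomalous=True, action=slow_reasoner(observation))


# Hypothetical stand-ins for the two stages (assumptions for illustration).
KNOWN_NOMINAL = {"clear road", "green light"}


def keyword_classifier(observation: str) -> bool:
    """Flag anything not in the set of known nominal observations."""
    return observation not in KNOWN_NOMINAL


def rule_based_reasoner(observation: str) -> str:
    """Placeholder for a slower reasoning step."""
    if "pedestrian" in observation:
        return "stop"
    return "slow down and pull over"


if __name__ == "__main__":
    for obs in ["clear road", "pedestrian on highway", "debris on lane"]:
        print(obs, "->", two_stage_monitor(obs, keyword_classifier, rule_based_reasoner))
```

The design choice worth noting is that the expensive reasoner runs only on the small fraction of observations the fast stage flags, which is what makes a slow second stage compatible with real-time operation.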

'A Detailed Taxonomy of Data Contamination in Large Language Models'

• A new taxonomy categorizes data contamination in large language models, enhancing understanding of influences on AI performance

• Contamination types like in-domain data during training show similar benefits to direct test data contamination, affecting tasks such as summarization and question answering

• Recommendations developed for mitigating contamination during model training to ensure more reliable evaluations and outcomes.

Study Reveals Large Language Models Still Susceptible to Bias Elicitation Through Jailbreak Prompts

• A recent study from the University of Calabria reveals that Large Language Models (LLMs) still produce biased responses when manipulated with specialized prompts

• The research demonstrates that biases in LLMs, such as gender or ethnic stereotypes, persist despite advanced model training and alignment techniques

• The findings indicate that stronger bias mitigation strategies are crucial to developing more equitable and reliable artificial intelligence systems.

Study Reveals Key Linguistic Differences Between AI and Human-Written Texts

• A study utilizes Open Brain AI to compare linguistic features in human-written and AI-generated essays

• Significant differences found in language elements like word stress and usage of complex words

• Results highlight the need for enhanced AI training to improve generation of human-like text.

About us: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!