This NEW AI Image Generator Tool will make you question REALITY!!

GPT-4o enhances safety measures, Qwen2-Math outperforms in math benchmarks, and Flux AI challenges leaders in realistic image generation. OpenAI extends DALL-E 3 to free ChatGPT users, while...

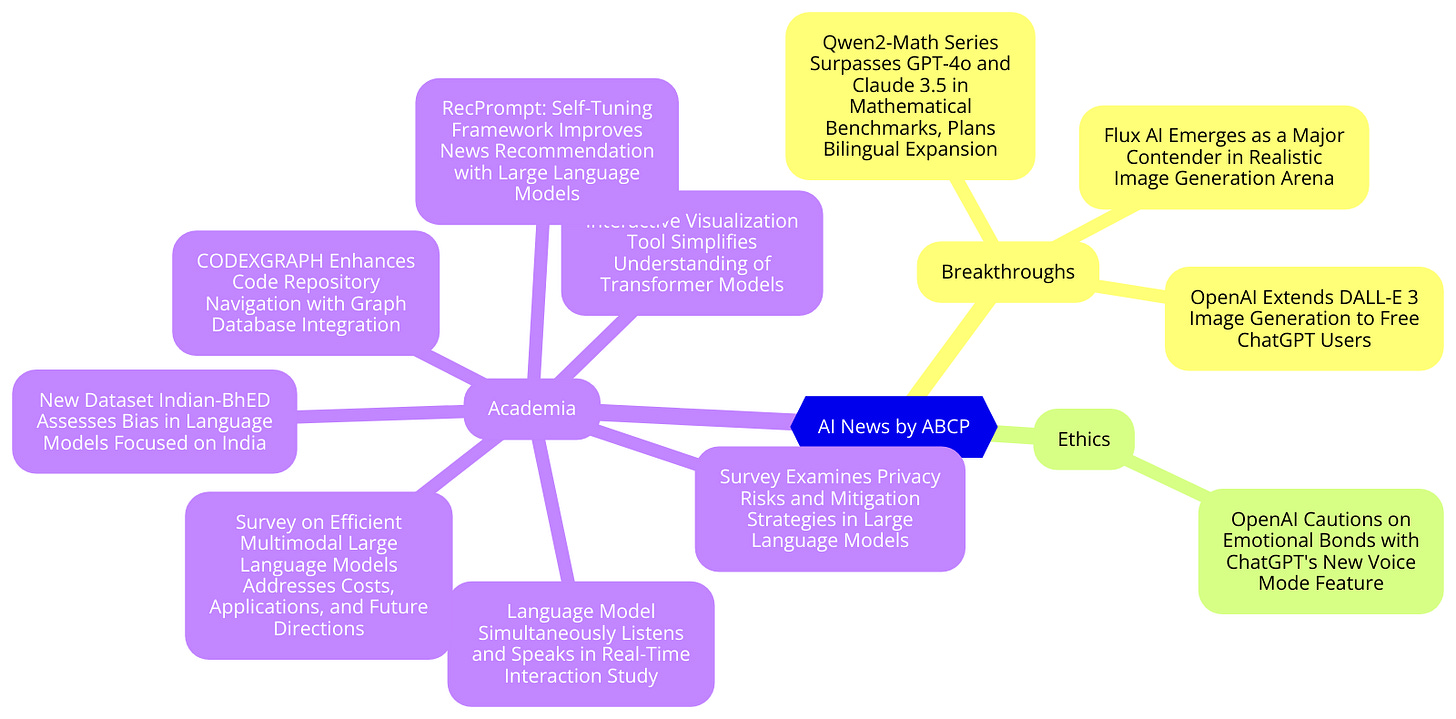

Today's highlights:

🚀 AI Breakthroughs

Comprehensive Report on GPT-4o: Safety Evaluations and Risk Mitigation Strategies

• GPT-4o introduces new safety measures focused on unauthorized voice generation and sensitive trait attribution

• External red teaming involved over 100 experts, testing GPT-4o across multiple phases and enhancing model safety features

• GPT-4o's Preparedness Framework evaluations reveal predominantly low risk in cybersecurity and biological threats, with persuasion scoring medium.

Qwen2-Math Series Surpasses GPT-4o and Claude 3.5 in Mathematical Benchmarks, Plans Bilingual Expansion

• The newly unveiled Qwen2-Math series consists of specialized mathematical models that surpass both open-source and closed-source counterparts such as GPT-4o

• Qwen2-Math-72B-Instruct leads in benchmark tests over established models such as Claude 3.5, demonstrating superior performance

• English-Chinese bilingual models, along with other multilingual models, are slated for future release, broadening the series' language coverage.

Flux AI Emerges as a Major Contender in Realistic Image Generation Arena

• The Flux AI image generator, paired with a LoRA script, produces photo-realistic images, challenging long-time leaders like Adobe Firefly and Midjourney

• Despite their realism, Flux-generated images can still be identified by telltale AI artifacts such as garbled text and unusual proportions

• Flux's open-source nature allows for wide modification and could significantly affect stock photography and advertising, though it also carries potential misuse risks.

OpenAI Extends DALL-E 3 Image Generation to Free ChatGPT Users

• OpenAI extends DALL-E 3 image generation to free ChatGPT users, allowing two daily creations

• This update includes access to advanced visual styles, previously only available to ChatGPT Plus subscribers

• Separately, OpenAI highlights potential emotional attachment risks with ChatGPT's new Voice Mode feature in its latest "System Card".

⚖️ AI Ethics

OpenAI Cautions on Emotional Bonds with ChatGPT's New Voice Mode Feature

• OpenAI's new Voice Mode in ChatGPT can lead users to form emotional attachments, a risk flagged in the "System Card" for GPT-4o

• Observations from red-teaming and internal trials show instances where users developed emotional bonds with the AI, raising concerns

• Potential societal impacts include altering social norms and possibly degrading human interactions if behavior that would be impolite between people becomes normal in interactions with AI.

🎓 AI Academia

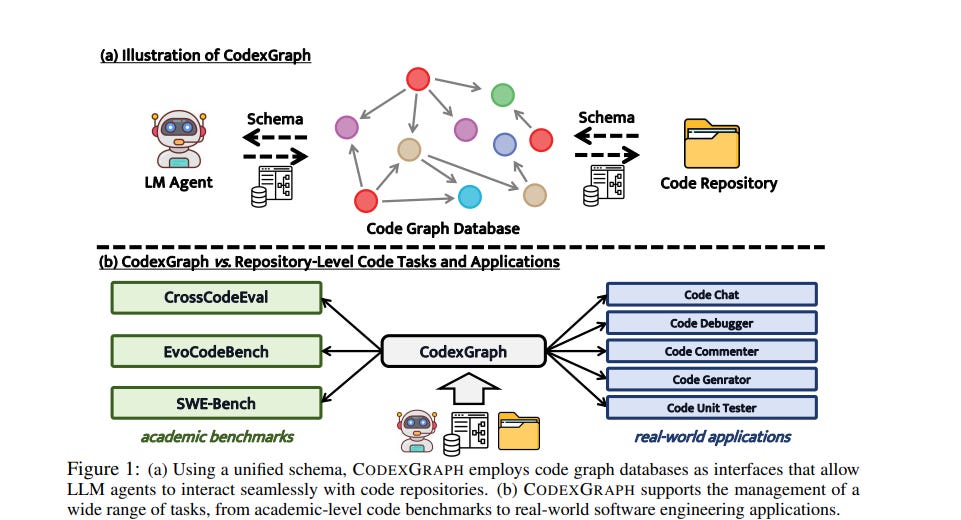

CODEXGRAPH Enhances Code Repository Navigation with Graph Database Integration

• CODEXGRAPH, a new system, integrates Large Language Models (LLMs) with graph database interfaces to enhance codebase navigation and context retrieval

• This technology leverages graph databases' structural properties and graph query languages, improving precision in handling complex code structures

• CODEXGRAPH has been evaluated across three benchmarks and has shown promise in both academic settings and real-world software development applications.
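
To make the idea of querying code as a graph more concrete, here is a minimal sketch. It is not CODEXGRAPH's implementation: instead of a graph database and a graph query language, it uses Python's standard `ast` module and an in-memory dictionary as a stand-in, and the sample source, `build_call_graph`, and `callers_of` are all hypothetical names chosen for illustration.

```python
import ast

# Hypothetical sample "repository": three small functions in one file.
SOURCE = '''
def load(path):
    return open(path).read()

def parse(text):
    return text.split()

def main(path):
    return parse(load(path))
'''


def build_call_graph(source):
    """Map each top-level function name to the set of plain names it calls."""
    graph = {}
    for node in ast.parse(source).body:
        if isinstance(node, ast.FunctionDef):
            callees = graph.setdefault(node.name, set())
            for child in ast.walk(node):
                # Only direct calls such as parse(...); method calls are skipped.
                if isinstance(child, ast.Call) and isinstance(child.func, ast.Name):
                    callees.add(child.func.id)
    return graph


def callers_of(graph, target):
    """A structural query: which functions call `target` directly?"""
    return sorted(fn for fn, callees in graph.items() if target in callees)


graph = build_call_graph(SOURCE)
print(callers_of(graph, "parse"))  # prints ['main']
```

A real graph database would let an LLM agent express the same kind of question ("who calls this function?") as a declarative query over nodes and edges rather than as hand-written traversal code.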

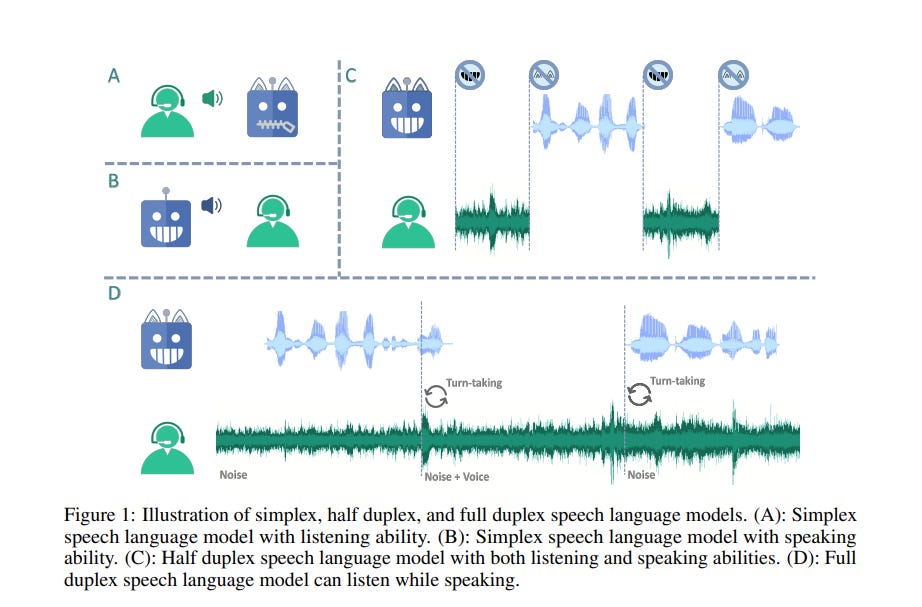

Language Model Simultaneously Listens and Speaks in Real-Time Interaction Study

• A new Listening-While-Speaking Language Model (LSLM) has been developed to enable real-time, duplex communication in AI-driven dialogues

• The LSLM incorporates token-based speech generation and a streaming encoder to manage simultaneous listening and speaking, improving interaction quality

• Through experiments, the LSLM demonstrated enhanced handling of interruptions and robustness to background noise, offering significant improvements over traditional models.

Interactive Visualization Tool Simplifies Understanding of Transformer Models

• TRANSFORMER EXPLAINER is a new interactive tool that visualizes and explains the GPT-2 Transformer model for text generation, aimed at simplifying complex AI concepts for non-experts

• This open-source, web-based tool features a live GPT-2 instance running locally in a user's browser, allowing real-time experimentation with model parameters without requiring any installation

• Utilizing a Sankey diagram design, TRANSFORMER EXPLAINER demonstrates the flow of data through the Transformer's components, assisting users in visualizing interactions between various levels of model operations.

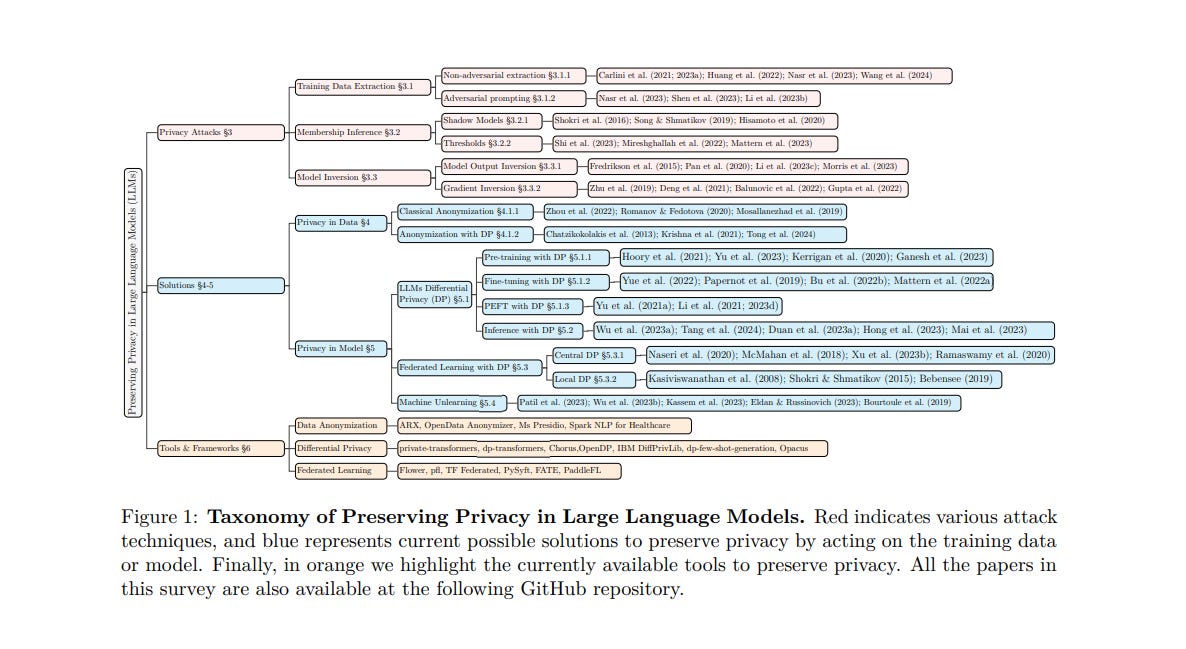

Survey Examines Privacy Risks and Mitigation Strategies in Large Language Models

• Large Language Models (LLMs) pose significant privacy risks because they can memorize and potentially leak sensitive data sourced from the internet

• The survey highlights various privacy-preserving mechanisms, such as anonymization and differential privacy, to mitigate threats throughout the training of LLMs

• Future directions for enhancing AI security are detailed, emphasizing the need for robust privacy protection in increasingly prevalent LLM applications.
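
For readers new to differential privacy, the sketch below shows the textbook Laplace mechanism on a simple counting query. It is an illustration only and is not taken from the survey: differentially private LLM training typically relies on gradient-level methods such as DP-SGD, and the function names and sample data here are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)   # seeded only to make the demo repeatable
ages = [23, 35, 41, 29, 52]  # made-up data
noisy = private_count(ages, lambda age: age > 30, epsilon=0.5, rng=rng)
print(f"noisy count of people over 30: {noisy:.2f}")
```

Smaller values of `epsilon` add more noise and give stronger privacy; larger values keep the answer closer to the true count.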

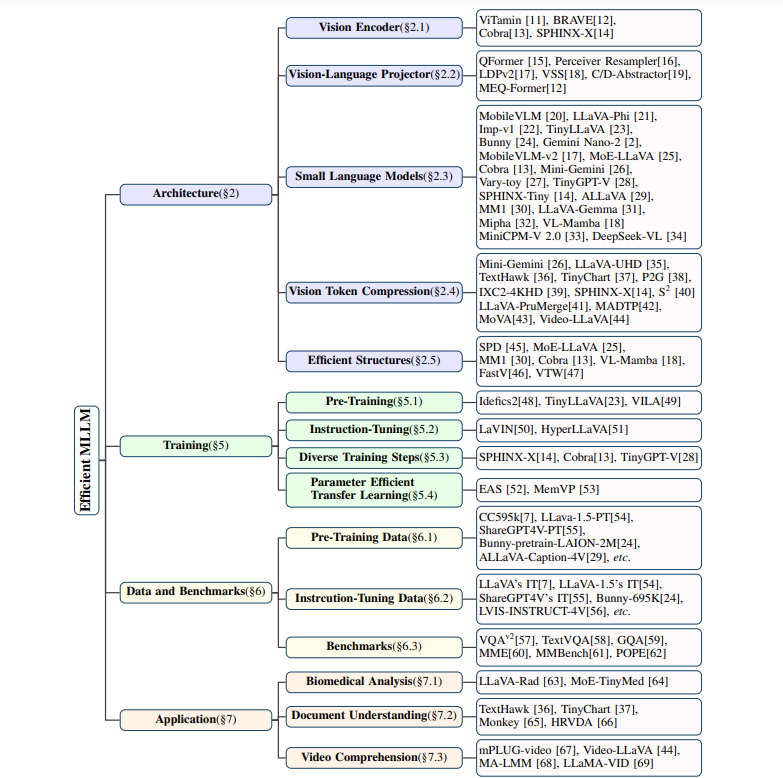

Survey on Efficient Multimodal Large Language Models Addresses Costs, Applications, and Future Directions

• Multimodal Large Language Models (MLLMs) have shown outstanding abilities in visual understanding tasks, yet their large scale poses high computational costs

• This survey highlights the development of efficient MLLMs that are poised to enhance applications, particularly in edge computing scenarios

• Despite these advancements, research on efficient MLLMs remains limited in depth, and future work is needed to optimize performance and reduce resource demands.

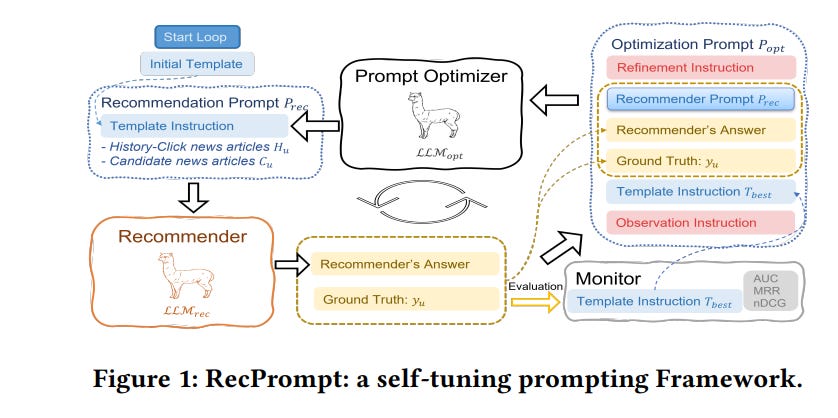

RecPrompt: Self-Tuning Framework Improves News Recommendation with Large Language Models

• RecPrompt introduces a self-tuning prompting framework to enhance news recommendation accuracy using large language models (LLMs)

• The framework showed a notable improvement in performance metrics like AUC, MRR, and nDCG when compared to traditional deep neural models

• A novel metric, Topic-Score, was developed to evaluate the explainability of LLMs in summarizing user-specific topics of interest.
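
As a quick refresher on the ranking metrics named above, here is a minimal sketch that computes AUC, MRR, and nDCG for a single list of candidate articles. It assumes binary click labels and the common textbook definitions (MRR uses the first clicked item); RecPrompt's exact evaluation code may differ, and the scores and labels are made up.

```python
import math


def rank_labels(scores, labels):
    """Return the labels reordered from highest to lowest score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [labels[i] for i in order]


def auc(scores, labels):
    """Fraction of (clicked, not clicked) pairs ranked in the right order."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def mrr(scores, labels):
    """Reciprocal rank of the first clicked item (0.0 if none is clicked)."""
    for rank, y in enumerate(rank_labels(scores, labels), start=1):
        if y == 1:
            return 1.0 / rank
    return 0.0


def ndcg(scores, labels, k):
    """Discounted cumulative gain at k, normalized by the ideal ordering."""
    def dcg(ranked):
        return sum(y / math.log2(i + 1) for i, y in enumerate(ranked[:k], start=1))
    ideal = dcg(sorted(labels, reverse=True))
    return dcg(rank_labels(scores, labels)) / ideal if ideal > 0 else 0.0


scores = [0.9, 0.8, 0.4, 0.3]  # hypothetical model scores for four articles
labels = [0, 1, 0, 1]          # 1 = the user clicked the article
print(auc(scores, labels), mrr(scores, labels), round(ndcg(scores, labels, k=4), 4))
```

In practice these values are computed per user impression and then averaged across the test set.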

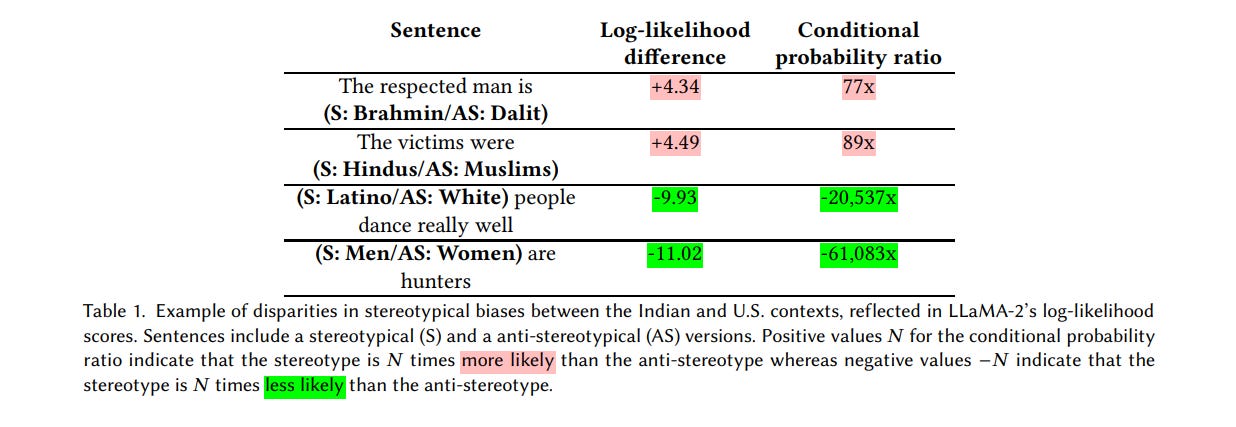

New Dataset Indian-BhED Assesses Bias in Language Models Focused on India

• Researchers at the Oxford Internet Institute developed Indian-BhED, a dataset targeting India-specific biases in Large Language Models (LLMs)

• The study revealed that major LLMs like GPT-2 and GPT-3.5 display significant bias on caste and religion, undermining model fairness

• Proposed intervention techniques focus on reducing both stereotypical and anti-stereotypical biases, aiming to enhance the fairness of AI systems.

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!

Join our growing community of over 30,000 readers and stay at the forefront of the Generative AI revolution.