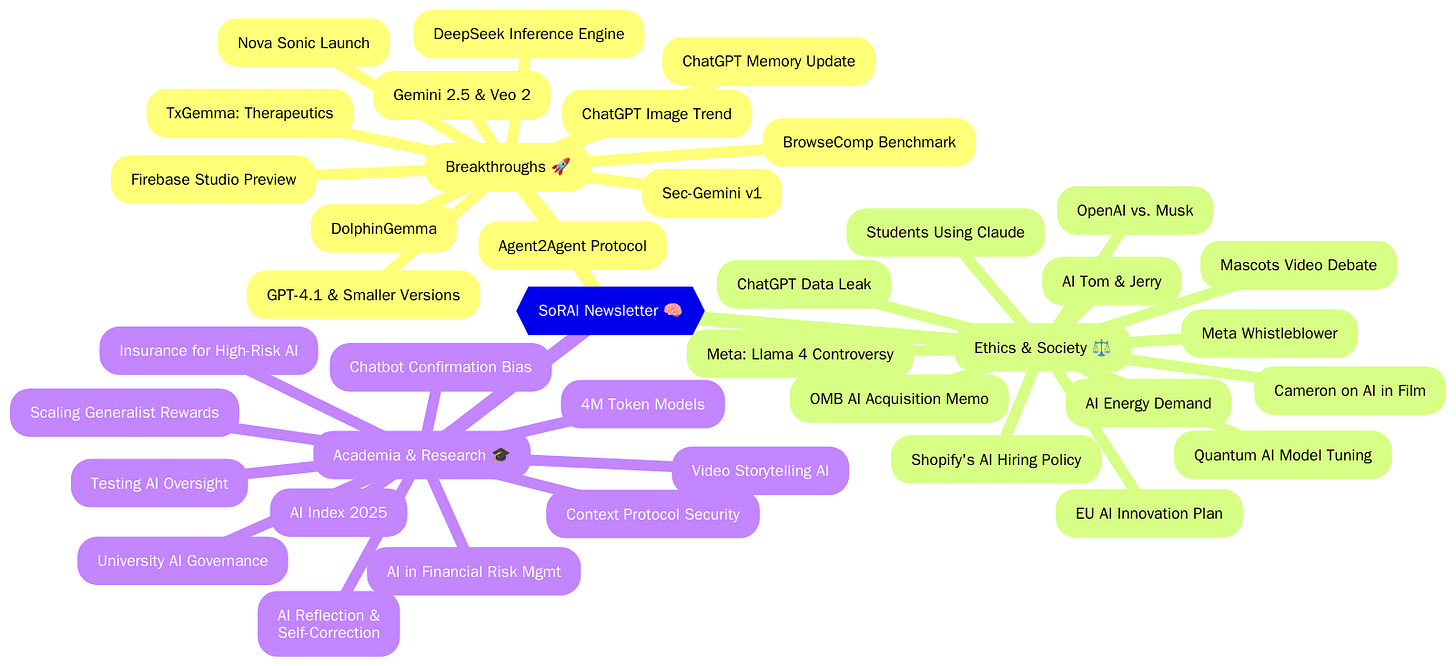

Today's highlights:

You are reading the 86th edition of The Responsible AI Digest by SoRAI (School of Responsible AI) (formerly ABCP). Subscribe today for regular updates!

Are you ready to join the Responsible AI journey, but need a clear roadmap to a rewarding career? Do you want structured, in-depth "AI Literacy" training that builds your skills, boosts your credentials, and reveals any knowledge gaps? Are you aiming to secure your dream role in AI Governance and stand out in the market, or become a trusted AI Governance leader? If you answered yes to any of these questions, let’s connect before it’s too late! Book a chat here.

🚀 AI Breakthroughs

OpenAI Enhances ChatGPT with Memory Update for Improved Contextual Interactions Globally

• OpenAI has updated ChatGPT to reference all past conversations, aiming to enhance AI's context-aware responses for Plus and Pro users worldwide, excluding several European nations;

• OpenAI says users retain full control over memory features and can opt out of chat history referencing or memory at any time, maintaining privacy and boundaries as needed;

• The feature follows a broader market trend: Google previously released similar memory integration for its Gemini AI to improve responses.

OpenAI Releases GPT-4.1 with Expanded Context Window and Affordable Smaller Versions

• OpenAI launches GPT-4.1, which surpasses last year's GPT-4o with a larger context window, better coding and instruction following, and lower cost;

• Alongside GPT-4.1, OpenAI introduces GPT-4.1 Mini and Nano, both offering lightweight, cost-efficient alternatives for developers, with capabilities extending to a million tokens of context;

• GPT-4.1 debuts in the API with a 26% cost reduction and superior performance, as OpenAI prepares to phase out older versions and delays GPT-5's launch.

OpenAI Releases BrowseComp Benchmark to Test AI Browsing Challenges and Capabilities

• OpenAI releases BrowseComp, a benchmark designed to assess AI agents' capability to locate elusive information online, featuring 1,266 challenging tasks to overcome typical search limitations

• OpenAI's Deep Research model excels on BrowseComp tasks, significantly outperforming other models, highlighting its ability to autonomously search, synthesize, and adapt to complex web queries

• BrowseComp's focus on fact-based, short-answer questions offers a novel evaluation criterion for browsing agents, despite existing challenges of verifying AI reasoning in open internet environments.

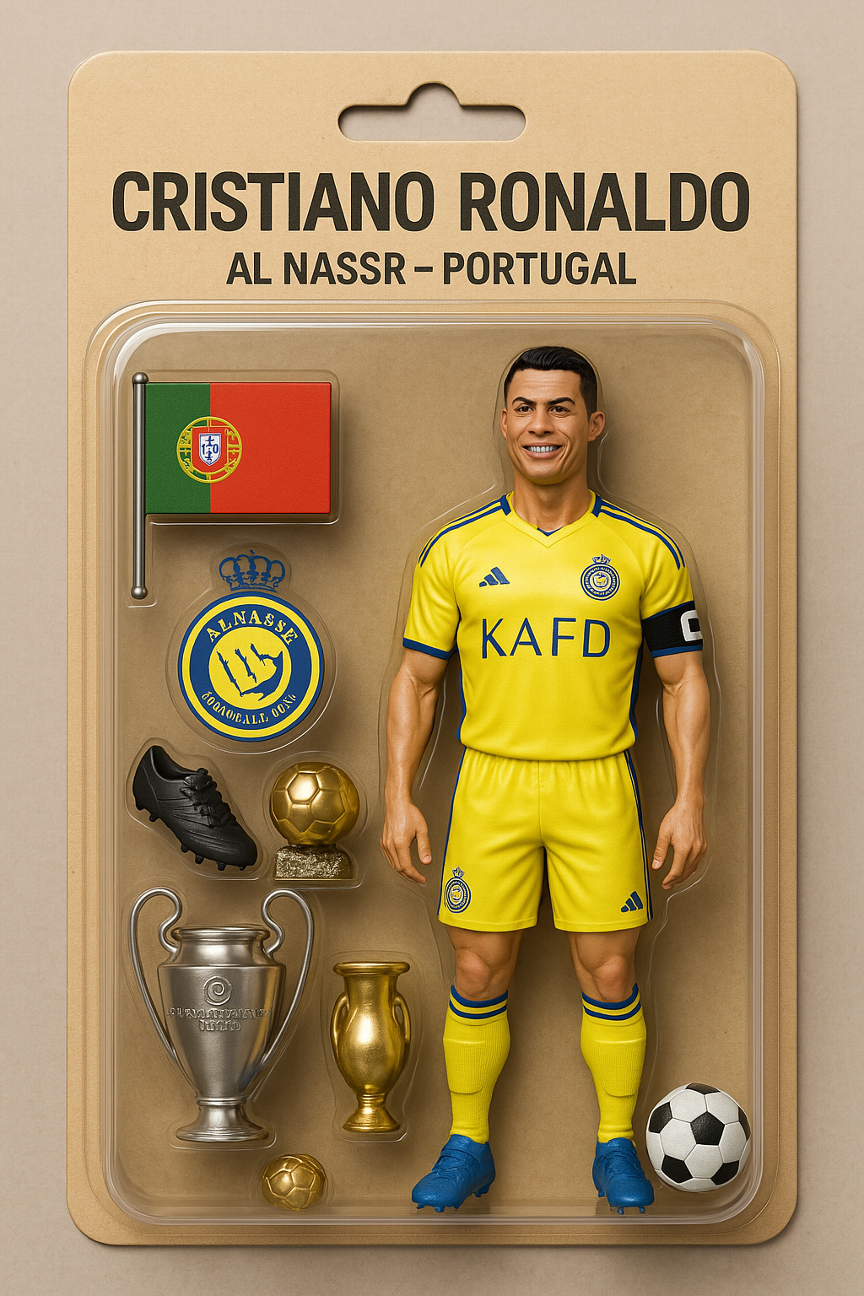

ChatGPT's Image Tool Trends on LinkedIn as Users Become Action Figures Online

• ChatGPT’s image generation tool has gained traction as professionals turn themselves into toy-like figures for LinkedIn branding, creating a playful twist on digital self-representation

• The trend sees users transforming into boxed dolls with job-themed accessories, driven by ChatGPT's capacity for personalized, AI-generated creations reminiscent of toy store displays

• As LinkedIn leads the movement, hashtags like #AIBarbie gain traction, with brands and a few public figures joining in, highlighting AI’s expanding role in online identity expression.

Google AI Enhances Developer Tools with Gemini 2.5 and Veo 2 Innovations

• Google revealed Gemini 2.5 Pro at Cloud Next, highlighting its advanced reasoning for building visually compelling web apps and agentic programming applications using the Gemini API in Google AI Studio;

• The forthcoming Gemini 2.5 Flash model will introduce low latency and cost efficiency with thinking capabilities, targeting enhanced code assistance and multi-agent systems in a million-token input context;

• Veo 2, now production-ready in the Gemini API, allows developers to create high-quality videos from text and image prompts, reducing production time by over 60% for enterprises like Wolf Games.

DolphinGemma: Google AI Breakthrough Helps Decode Dolphin Language and Communication Patterns

• DolphinGemma, a Google AI model, assists researchers in decoding dolphin communication by analyzing vocal patterns and potentially generating responses to facilitate interspecies interaction.

• The Wild Dolphin Project provides a unique dataset, collecting decades of dolphin vocalization data to help DolphinGemma understand and predict dolphin sound sequences effectively.

• Google plans to share DolphinGemma as an open model, enhancing global research capabilities for studying diverse cetacean species and accelerating discoveries in marine mammal communication.

TxGemma Released: Open Models Aim to Revolutionize Therapeutic Development Efficiency

• TxGemma, a collection of open models trained on 7 million examples, aims to revolutionize therapeutic development, offering predictions throughout the drug discovery process.

• Available in three sizes, TxGemma models outperform previous benchmarks on 64 of 66 tasks while excelling on single-task evaluations, offering enhanced prediction accuracy.

• The release of Agentic-Tx, integrating TxGemma into sophisticated agentic systems, facilitates advanced multi-step research with state-of-the-art results on chemistry and biology tasks.

Agent2Agent Protocol Launch Marks New Era in AI Interoperability Across Enterprises

• A groundbreaking open protocol, Agent2Agent (A2A), is launched, supported by over 50 tech partners, enabling AI agents to communicate and coordinate seamlessly across diverse enterprise platforms

• By fostering interoperability among AI agents from different providers, the A2A protocol aims to enhance productivity, reduce costs, and enable complex workflow automation across enterprise application estates;

• A2A complements protocols like Anthropic's Model Context Protocol (MCP), empowering developers to build interoperable agents and helping businesses manage them effectively across various platforms and environments.

Google Debuts Firebase Studio Preview to Simplify Full-Stack AI App Development

• Firebase Studio is launching as a comprehensive, cloud-based development environment that streamlines the creation, testing, and deployment of AI applications, offering tools like Project IDX, Genkit, and Gemini

• Developers can use natural language prompts and images for rapid prototyping in Firebase Studio, which quickly generates functional web app prototypes using technologies like Next.js

• Firebase Studio supports real-time collaboration through shared workspaces, and lets users call on Gemini Code Assist agents for tasks like AI model testing and code documentation.

Sec-Gemini v1 AI Model Enhances Cybersecurity by Shifting Balance to Defenders

• Sec-Gemini v1, an AI model, aims to bolster cybersecurity by addressing a basic asymmetry: defenders must secure against all threats, while attackers need to exploit only one vulnerability;

• The model excels in cybersecurity tasks like incident analysis and threat understanding, integrating Gemini’s capabilities with real-time Google Threat Intelligence and extensive cybersecurity data;

• Sec-Gemini v1 is offered to select organizations and outpaces other models in key benchmarks, demonstrating an 11% improvement on the CTI-MCQ threat intelligence benchmark.

Amazon Launches Nova Sonic for Unified Speech Understanding and Generation in AI Voice Applications

• Amazon introduced the Nova Sonic model, combining speech understanding and generation in a single framework to enhance human-like voice interaction across various AI applications in diverse sectors

• Nova Sonic excels in real-time conversational accuracy, with benchmarks showing superiority over leading models like OpenAI's GPT-4o and Google Gemini Flash 2.0 in various voice quality tests

• Nova Sonic is also cost-efficient, with up to 80% lower cost than OpenAI's GPT-4o Realtime model while maintaining high-speed response in voice applications.

DeepSeek Inference Engine Takes Steps Towards Open-Source, Strengthening Community Ties

• DeepSeek's decision to open-source its internal inference engine stems from the positive community response to previously released libraries and aims to foster collaboration and innovation

• Challenges such as codebase divergence, infrastructure dependencies, and limited maintenance bandwidth have been identified as obstacles to fully open-sourcing the DeepSeek inference engine

• To address these challenges, DeepSeek plans to collaborate with existing open-source projects, focusing on modularizing reusable components and sharing optimizations to enhance the community-driven ecosystem.

⚖️ AI Ethics

Chinese Quantum Computer Achieves Breakthrough in Fine-Tuning Billion-Parameter AI Model

• Chinese scientists used China's third-generation superconducting quantum computer, Origin Wukong, to fine-tune a billion-parameter AI model, achieving a 15-percent reduction in training loss on a psychological counseling dialogue dataset

• Origin Wukong's fine-tuning task, powered by a 72-qubit quantum chip, increased mathematical reasoning accuracy from 68 percent to 82 percent, validating quantum computing's role in large language models

• The experiment demonstrated an 8.4 percent improvement in training effectiveness despite a 76-percent reduction in parameters, paving a new path for addressing computing power challenges.

EU Advances AI Innovation with New Plan Amidst Regulatory Challenges

• The European Union is leading globally with its comprehensive AI Act, setting a framework for artificial intelligence regulation unmatched by any other jurisdiction

• The European Commission unveiled the "AI Continent Action Plan" to transform Europe's industries and talent pool into drivers of AI innovation, aiming to compete with the U.S. and China

• Initiatives include building AI factories and gigafactories, improving startup access to training data, and creating an AI Act Service Desk for compliance assistance and guidance.

OMB's AI Acquisition Memo Highlights New Guidelines and Strategies for Federal Agencies

• The Trump administration's OMB memo emphasizes post-award oversight for federal AI contracts, recognizing it as crucial for maintaining contract value and preventing financial losses.

• Agencies face deadlines to develop processes for AI acquisition, focusing on IP rights and data use, reflecting ongoing challenges in adapting AI technology while ensuring government ownership.

• Industry experts highlight the memo's move toward agile, performance-based contracting approaches, recommending iterative learning and flexibility in AI tool procurement to accommodate rapid technological changes.

Tobias Lütke Explains Shopify's AI-Driven Hiring Policy at Toronto Conference

• Shopify CEO Tobias Lütke emphasizes AI-driven productivity, requiring employees to justify requests for more resources by proving AI solutions are insufficient to achieve their goals

• Lütke's memo encourages staff to envision autonomous AI integration and highlights AI as a productivity multiplier, with AI proficiency now impacting Shopify's employee evaluations

• Despite past workforce reductions, Shopify plans to maintain flat headcount, while potentially increasing costs due to hiring high-compensation AI engineers, as discussed by company executives.

AI's Rising Energy Needs: Global Impacts on Consumption, Innovation, and Security

• The International Energy Agency's recent report delves into the pivotal role artificial intelligence plays in transforming the energy sector by optimizing operations and bolstering innovation.

• Comprehensive global and regional datasets have been employed to project AI's potential electricity consumption and its implications for energy security and emissions through 2035.

• The report is set to facilitate data-driven decision-making by policymakers and industry leaders regarding the adoption of AI technologies within the expanding energy landscape.

OpenAI Launches Legal Battle Against Elon Musk Over Alleged Malicious Campaign

• OpenAI has filed a legal response against Elon Musk, accusing him of waging a "relentless" campaign to damage OpenAI's reputation after leaving the organization

• OpenAI alleges that Musk founded xAI and supported AI development constraints to handicap OpenAI, while demanding confidential records and disparaging the company online

• OpenAI seeks an injunction and damages, claiming Musk's "sham bid" and public disparagement have caused "irreparable harm" to its operations and relationships with investors and employees.

James Cameron Supports AI in Filmmaking to Reduce Costs and Preserve Jobs

• James Cameron, initially critical of artificial intelligence, now endorses its use in filmmaking to cut production costs without eliminating jobs, signaling a strategic pivot in his views

• Cameron emphasizes AI’s role in enhancing efficiency in visual effects, aiming to shorten production cycles, allowing artists to tackle more projects without compromising employment opportunities

• Cameron's inclusion on the Board of Directors at Stability AI underscores his commitment to aligning with AI innovators, focusing on practical applications for the film industry’s evolving landscape.

Meta Denies Training Llama 4 on Benchmarks Amidst Community Transparency Concerns

• Meta disputes allegations that its Llama 4 models, including Scout and Maverick, were trained on benchmark test sets, emphasizing commitment to ethical AI training practices

• Maverick quickly ranked second on LMArena with an Elo score of 1417, yet Meta’s publicly available version differs from the experimental one tested

• Concerns over Meta's leaderboard policies and model discrepancies prompt policy updates by Chatbot Arena for transparent, reproducible future AI evaluations.

Meta Whistleblower Set to Expose Secretive Links with China to Congress

• Former Facebook executive Sarah Wynn-Williams claims Meta partnered with the Chinese Communist Party, allegedly providing them access to user data and aiding in China's AI advancements;

• Wynn-Williams will testify before a Senate subcommittee, accusing Meta of betraying US national security to build an $18 billion enterprise in China, using secrecy and deception;

• Despite Meta's attempts to enforce a gag order, Wynn-Williams' book critiques Facebook's actions, suggesting officials manipulated narratives to hide Meta's efforts to influence Chinese markets.

ChatGPT Error: AI Leaks Personal Data Instead of Providing Plant Care Advice

• ChatGPT, known for generating Ghibli-style images, made headlines again for delivering erroneous output, revealing personal data instead of plant care advice in a recent user interaction

• Chartered Accountant Pruthvi Mehta shared a LinkedIn post detailing how ChatGPT supplied someone else's professional information instead of addressing a simple query about plant health

• The incident sparked significant social media backlash, raising concerns over AI data handling, with users questioning the reliability of AI in non-intended datasets and applications.

AI-Generated Video of Beloved Indian Brand Mascots Sparks Debate on LinkedIn

• An AI-generated video featuring hyper-realistic renditions of popular Indian brand mascots has gone viral on LinkedIn, sparking widespread admiration alongside ethical concerns from viewers regarding personal origins

• The project creatively reimagines mascots like the Amul Girl and Air India's Maharaja in striking realism, showcasing them in personalized, vibrant scenes that captivated audiences

• Mixed reactions highlighted ethical and copyright issues, with some viewers wary of using mascots from personal stories and potential intellectual property rights challenges from generative AI creations.

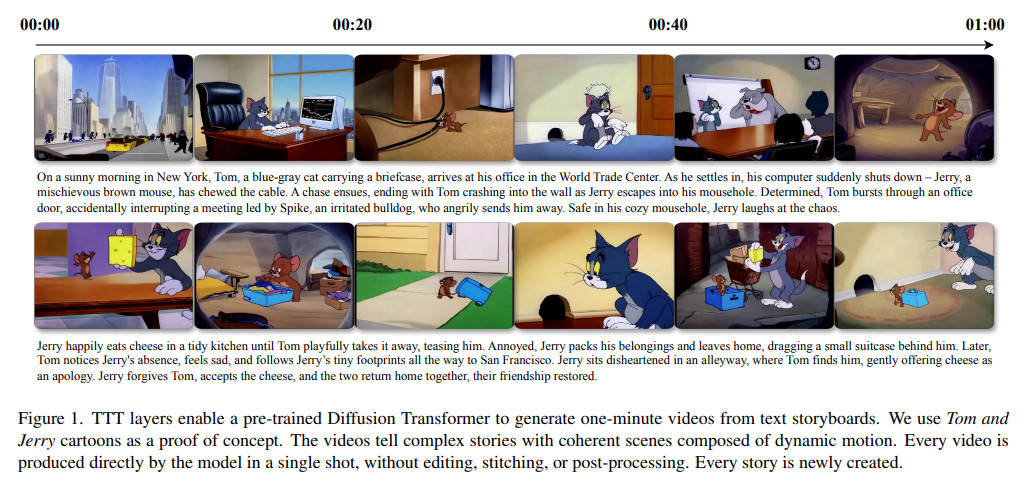

AI-Generated Tom and Jerry Episode Sparks Debate on Artistry and Animation Future

• An AI-generated Tom and Jerry episode, crafted using Stanford and NVIDIA's TTT-MLP tool, places the duo's antics in a modern New York City office setting

• While some viewers praise its tech-savvy creation, critics call the AI animation "soulless" and worry about the impact on traditional animators

• Fans can still enjoy original hand-drawn Tom and Jerry episodes on platforms like HBO Max, Disney+, and Amazon Prime Video, preserving the classic charm.

Study Reveals How University Students Use AI Like Claude in Education

• A recent study finds that Computer Science students are heavy users of AI: they account for 38.6% of student conversations on Claude.ai, although Computer Science represents only 5.4% of U.S. degrees.

• Students use AI primarily for creating and analyzing educational content, particularly in coding and law concepts, with concerns about offloading crucial cognitive tasks.

• The study identifies four student-AI interaction patterns: Direct Problem Solving, Direct Output Creation, Collaborative Problem Solving, and Collaborative Output Creation, each comprising roughly a quarter of all interactions.

🎓 AI Academia

Inference-Time Scaling Advances Generalist Reward Modeling in AI With RL Techniques

• Recent research on inference-time scaling in reinforcement learning for large language models explores embedding reward modeling to improve accuracy in varied domains without reliance on artificial rules;

• A method, Self-Principled Critique Tuning (SPCT), is proposed for enhancing reward generation in generative reward models through online reinforcement learning, enabling dynamic principle generation and critique adaptation;

• The models leverage parallel sampling and meta reward modeling to enhance computational efficiency during inference, demonstrating superior scalability and quality over traditional training-time scaling across various benchmarks.

Generative AI Pushes Video Boundaries with Innovative One-Minute Storytelling Technique

• Researchers have enhanced video Transformers with Test-Time Training (TTT) layers, enabling the generation of one-minute videos from complex multi-scene text storyboards.

• The approach uses Tom and Jerry cartoons as a proof of concept, creating coherent narratives directly from pre-trained models without requiring editing or post-processing.

• TTT layers outperformed traditional methods in a human evaluation, but the approach still faces challenges with artifacts due to the limited capabilities of existing pre-trained models.

New Training Recipe Enables Efficient Long-Context Language Models up to 4M Tokens

• A groundbreaking efficient training method dramatically extends large language models' context window from 128K to up to 4 million tokens, enhancing their capability to handle ultra-long sequences;

• The UltraLong-8B model, using the innovative training framework, achieves state-of-the-art performance in long-context benchmarks while maintaining strong results on standard tasks;

• Comprehensive analysis of scaling strategies and dataset composition provides a robust foundation for scaling context lengths without degrading general model capabilities; all model weights are available at ultralong.github.io.

Rethinking Reflection in AI: Early Self-Correction in Language Model Pre-Training

• Essential AI's recent study reveals that a language model's self-correcting ability begins during its pre-training phase, highlighting an overlooked aspect of early-stage AI development;

• Researchers at Essential AI use deliberate errors in thought chains to test models, showing a model's potential to recognize and correct mistakes rapidly during pre-training;

• The OLMo-2-7B model, trained on 4 trillion tokens, effectively demonstrates early self-reflection capabilities, solving complex adversarial tasks with increased pre-training compute power.

Enterprise-Grade Solutions for Mitigating Security Risks in Model Context Protocol Implementation

• The Model Context Protocol (MCP), developed by Anthropic, standardizes AI systems' interaction with real-time external data, posing new security challenges for enterprise-level deployments;

• MCP architecture's key components—Host, Client, and Server—redefine AI integration with external services, demanding robust security practices beyond traditional API measures;

• Security in MCP implementation is crucial to prevent tool poisoning attacks and ensure trustworthy AI interaction, intertwining technological safeguards with broader AI governance concerns.

AI Index Report 2025 Reveals Key Trends and Developments in Artificial Intelligence

• The Artificial Intelligence Index Report 2025 indicates a significant increase in investments in AI for health diagnostics, showcasing a potential shift toward advanced medical solutions by 2030;

• According to the report, AI-driven energy management systems demonstrate a 40% boost in efficiency for smart cities, hinting at substantial environmental and economic benefits over the coming years;

• A notable rise in the global adoption of generative AI tools in creative industries is highlighted, with experts forecasting transformative impacts on design, media, and entertainment sectors by 2025.

Challenges in Testing AI Compliance with Human Oversight in Governance Regulations

• A new paper explores challenges in testing AI systems for compliance with human oversight requirements set by the European AI Act, an emerging focus in AI regulation;

• The study highlights the difficulty of balancing checklist-based methods and empirical testing to ensure effective human oversight in high-risk AI applications like education and medicine;

• Researchers identify the need for developing operational standards to assess human oversight effectiveness, inspired by existing frameworks such as the General Data Protection Regulation (GDPR).

A Study Proposes Framework for University Policies on Generative AI Governance

• A recent preprint study examines university policies on generative AI across the United States, Japan, and China, proposing a comprehensive policy framework for higher education institutions

• The study analyzes 124 policy documents from 110 universities, identifying 20 key themes and 9 sub-themes to inform the development of a generative AI governance framework

• Key differences highlight U.S. policies focusing on faculty autonomy and adaptability, while Japan emphasizes ethics under government regulation, and China follows its own distinct approach.

Proposed Insurance Framework Aims to Govern High-Risk AI with Historical Precedents

• A working draft addressing governance of frontier AI through a three-tiered insurance model is under review by the Southern Illinois University Law Journal

• The proposed framework utilizes historical precedents from nuclear energy, terrorism risk, and agriculture to establish a stable insurance market for frontier AI risks

• By integrating risk mechanisms, the model aims to embed AI safety within capital markets, minimizing the need for rigid regulation of high-risk AI models.

Understanding and Combating Confirmation Bias in Generative AI Chatbots: Risks and Solutions

• The research explores confirmation bias in generative AI chatbots, a nuanced aspect of AI-human interaction drawing from cognitive psychology and computational linguistics

• It evaluates how these chatbots can replicate and amplify confirmation bias, assessing associated ethical and practical risks

• Proposed mitigation strategies include technical interventions, interface redesign, and policy measures, with a call for interdisciplinary research to better address this issue.

Generative AI Powers Enhanced Financial Risk Management with Improved Information Retrieval

• TD Bank unveils RiskEmbed, a finetuned embedding model that significantly boosts retrieval accuracy in financial question-answering systems focused on regulatory compliance

• The RiskData1 dataset, open-sourced for public use, comprises 94 regulatory documents from OSFI, aiding in the development of robust financial risk management AI solutions

• Finetuned using state-of-the-art Sentence-BERT models, RiskEmbed enhances domain-specific retrieval, supporting Retrieval-Augmented Generation systems with superior ranking metrics in risk analysis tasks.

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.