OpenAI Announces New Model GPT-4o and Desktop App in Spring Update

OpenAI’s newest model is GPT-4o

OpenAI has unveiled its newest flagship model, GPT-4o, which marks a significant leap forward in human-computer interaction. GPT-4o, with the “o” standing for “omni,” accepts any combination of text, audio, and image inputs and can generate any combination of text, audio, and image outputs. Because it processes inputs and returns outputs in real time, it promises noticeably more natural interactions.

Key Features and Capabilities

1. Real-Time Processing: GPT-4o responds to audio input in as little as 232 milliseconds, with an average of about 320 milliseconds. That is similar to human response times in conversation, which makes spoken exchanges feel smoother and more natural.

2. Enhanced Multimodal Abilities: GPT-4o matches GPT-4 Turbo's performance on English text and coding tasks, with significant improvements on text in non-English languages. It is also notably better at vision and audio understanding than existing models.

3. Cost and Speed Efficiency: In the API, GPT-4o is both faster than its predecessor, GPT-4 Turbo, and 50% cheaper, making advanced AI capabilities more accessible.
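Because API usage is billed per token, the “50% cheaper” claim translates directly into per-request arithmetic. The sketch below is only an illustration: the GPT-4 Turbo prices are placeholders rather than official figures, GPT-4o is modeled as exactly half of them, and `request_cost` and the price dictionaries are hypothetical names.

```python
# Placeholder per-million-token prices in USD. These are NOT quoted from
# OpenAI; substitute the current figures from the official pricing page.
GPT4_TURBO_PRICE = {"input": 10.00, "output": 30.00}

# Model the announced "50% cheaper" claim by halving every price.
GPT4O_PRICE = {kind: price * 0.5 for kind, price in GPT4_TURBO_PRICE.items()}


def request_cost(input_tokens: int, output_tokens: int, price: dict) -> float:
    """Return the USD cost of one request given per-million-token prices."""
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # A hypothetical workload: 2,000 prompt tokens and a 500-token reply.
    old = request_cost(2_000, 500, GPT4_TURBO_PRICE)
    new = request_cost(2_000, 500, GPT4O_PRICE)
    print(f"GPT-4 Turbo: ${old:.4f}  GPT-4o: ${new:.4f}  savings: {1 - new / old:.0%}")
```

Since both the input and output prices are halved in this model, the savings stay at 50% whatever the mix of prompt and reply tokens.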

Model Interactions

GPT-4o supports a wide range of applications, including:

Interactive Conversations: The model can engage in meaningful dialogues, even incorporating elements like sarcasm and humor.

Interview Preparation: It can simulate interview scenarios to help users practice and improve.

Real-Time Translation: GPT-4o provides instant translation services, bridging language barriers effectively.

Creative Tasks: From generating lullabies to composing songs, GPT-4o showcases its creative prowess.

Customer Service: OpenAI demonstrated it as a proof of concept for advanced customer support systems.

Technological Advancements

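1. Unified Model Architecture: ChatGPT's earlier Voice Mode chained three separate models: one transcribed audio to text, GPT-3.5 or GPT-4 processed the text, and a third converted the reply back to audio. GPT-4o is instead trained end to end as a single model across text, vision, and audio, so it can pick up on cues such as tone or multiple speakers that are lost when speech is first reduced to text. This integration allows for richer, more contextual interactions.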

2. Improved Voice Mode: Earlier versions of Voice Mode averaged latencies of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4), which disrupted the conversational flow. GPT-4o cuts this latency dramatically, making voice interactions smoother and more lifelike.
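The difference between a cascaded voice pipeline (separate speech-to-text, text model, and text-to-speech stages) and a single end-to-end model can be illustrated with a toy. This is not how GPT-4o is built: `AudioClip`, its single `tone` field, and every stage function are hypothetical stand-ins, chosen only to show where non-verbal information drops out.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Toy stand-in for audio: the spoken words plus one non-verbal cue."""
    words: str
    tone: str  # e.g. "sarcastic"; real audio carries far more than this


def transcribe(clip: AudioClip) -> str:
    # Speech-to-text keeps only the words; the tone is discarded here.
    return clip.words


def text_model(text: str) -> str:
    # Stand-in for a text-only model: it can only react to what it reads.
    return f"reply to: {text}"


def synthesize(text: str) -> AudioClip:
    # Text-to-speech has no tone information left, so it falls back to a default.
    return AudioClip(words=text, tone="neutral")


def cascaded_voice_mode(clip: AudioClip) -> AudioClip:
    """Three separate stages chained together, like the earlier Voice Mode."""
    return synthesize(text_model(transcribe(clip)))


def end_to_end_model(clip: AudioClip) -> AudioClip:
    """Toy single model: it receives the whole clip, so it can use the tone."""
    return AudioClip(words=f"reply to: {clip.words}", tone=f"responding to {clip.tone}")


if __name__ == "__main__":
    clip = AudioClip(words="great, another meeting", tone="sarcastic")
    print(cascaded_voice_mode(clip))
    print(end_to_end_model(clip))
```

Passing a sarcastic clip through `cascaded_voice_mode` yields a `neutral` reply because the transcription stage threw the tone away, while the end-to-end toy can still react to it. A real cascade also pays the latency of every stage in sequence.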

Model Evaluations

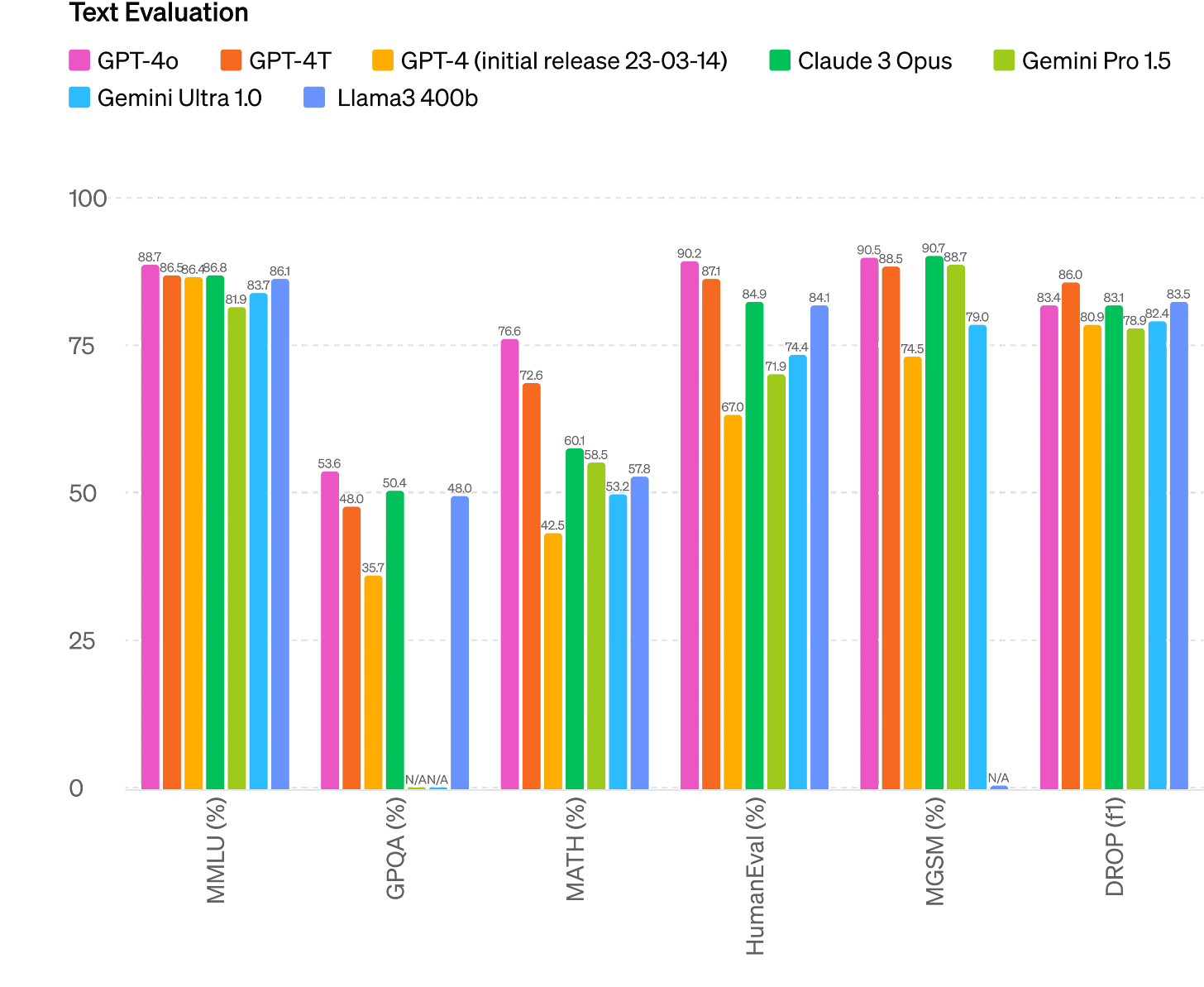

GPT-4o sets new high scores on several benchmarks:

Text Performance: It achieves a new high score of 87.2% on traditional 5-shot MMLU (general knowledge questions), and 88.7% on 0-shot chain-of-thought MMLU.

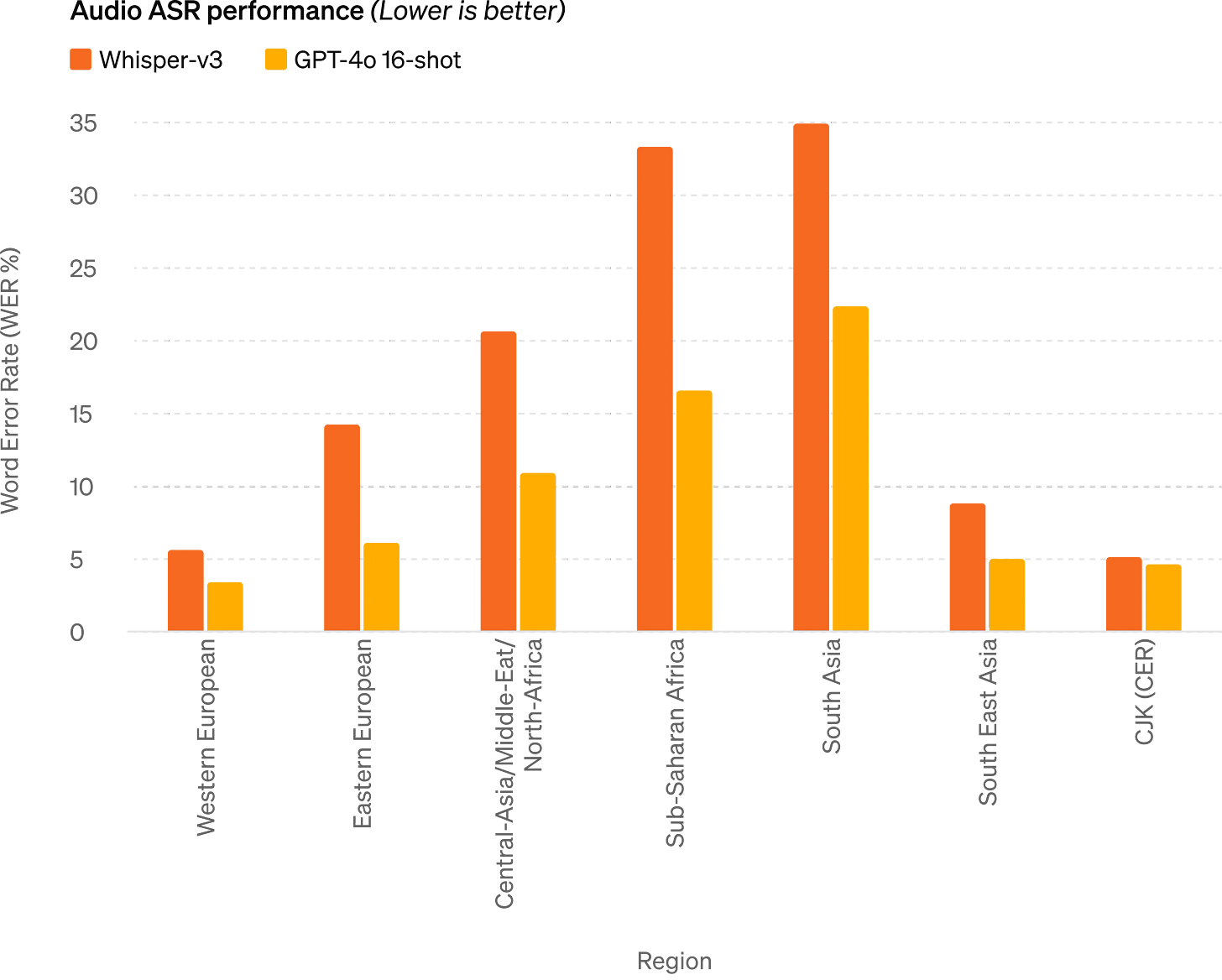

Audio and Vision: GPT-4o outperforms Whisper-v3 in speech recognition (especially for lower-resourced languages) and in speech translation, and it achieves state-of-the-art results on visual perception benchmarks.
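“5-shot” means the model is shown five solved example questions before the question it must answer; the traditional setup asks for the answer letter directly, without chain-of-thought reasoning. A minimal sketch of assembling such a prompt, assuming the header wording used by the original MMLU evaluation code; OpenAI's own evaluation prompts may differ, and `format_item` and `k_shot_prompt` are hypothetical helpers.

```python
from typing import List, Optional, Tuple

# One solved item: (question, four answer options, index of the correct option).
Item = Tuple[str, List[str], int]
LETTERS = "ABCD"


def format_item(question: str, options: List[str], answer: Optional[int] = None) -> str:
    """Render one question; include the answer letter only for solved examples."""
    lines = [question]
    lines += [f"{LETTERS[i]}. {opt}" for i, opt in enumerate(options)]
    lines.append("Answer:" if answer is None else f"Answer: {LETTERS[answer]}")
    return "\n".join(lines)


def k_shot_prompt(subject: str, examples: List[Item],
                  query: Tuple[str, List[str]], k: int = 5) -> str:
    """Prepend k solved examples to the unanswered query question."""
    if len(examples) < k:
        raise ValueError(f"need at least {k} solved examples, got {len(examples)}")
    header = f"The following are multiple choice questions (with answers) about {subject}."
    shots = [format_item(q, opts, ans) for q, opts, ans in examples[:k]]
    # The model is expected to continue the final "Answer:" with a single letter.
    return "\n\n".join([header, *shots, format_item(*query)])
```

Scoring then reduces to comparing the letter the model emits after the last `Answer:` with the correct one.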

Language Tokenization Improvements

GPT-4o features a new tokenizer that compresses text more efficiently across many languages, so the same text needs significantly fewer tokens, with the largest reductions in non-English languages. This makes the model more efficient at understanding and generating multilingual content.
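Fewer tokens compound with a lower per-token price, since the cost of a given text is its token count times the price per token. The sketch uses hypothetical token counts (90 under an older tokenizer, 30 under a newer one) rather than measured figures, and `compression_ratio` and `relative_cost` are illustrative names.

```python
def compression_ratio(old_tokens: int, new_tokens: int) -> float:
    """How many times fewer tokens the new tokenizer needs for the same text."""
    if old_tokens <= 0 or new_tokens <= 0:
        raise ValueError("token counts must be positive")
    return old_tokens / new_tokens


def relative_cost(old_tokens: int, new_tokens: int, price_ratio: float = 0.5) -> float:
    """Cost of the same text on the new model as a fraction of the old cost.

    price_ratio is the new per-token price divided by the old one; the
    default 0.5 models the "50% cheaper" claim. The two factors multiply.
    """
    return price_ratio / compression_ratio(old_tokens, new_tokens)


if __name__ == "__main__":
    # Hypothetical counts for one sentence; not measured with any real tokenizer.
    old_count, new_count = 90, 30
    print(f"{compression_ratio(old_count, new_count):.1f}x fewer tokens")
    print(f"relative cost: {relative_cost(old_count, new_count):.3f}")
```

With those made-up counts the same sentence would cost roughly one sixth as much; real savings depend on the language and on actual prices.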

Safety and Limitations

OpenAI has built safety into GPT-4o across modalities, using techniques such as filtering training data and refining the model's behavior after training. The model has undergone extensive testing, including external red teaming, to identify risks introduced or amplified by the new modalities. The initial release includes text and image capabilities, and audio outputs will be limited to a selection of preset voices in line with existing safety policies.

Availability and Future Rollout

GPT-4o’s text and image capabilities are rolling out today in ChatGPT, in the free tier and to Plus users with up to 5x higher message limits. Developers can access GPT-4o in the API as a text and vision model, benefiting from its faster performance and lower costs. Support for the new audio and video capabilities in the API will follow, initially for a small group of trusted partners.
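For developers, a text-plus-image request to GPT-4o uses the Chat Completions format, where a user message's `content` can be a list of typed parts. A minimal sketch that only builds the request body and makes no network call; the prompt and image URL are placeholders, and `build_chat_request` is a hypothetical helper.

```python
import json


def build_chat_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions request body that mixes text and an image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                # Content given as a list of typed parts: one text, one image.
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    body = build_chat_request(
        "What is shown in this picture?",
        "https://example.com/photo.jpg",  # placeholder URL
    )
    # This JSON would be POSTed to https://api.openai.com/v1/chat/completions
    # with an "Authorization: Bearer <API key>" header.
    print(json.dumps(body, indent=2))
```

With the official `openai` Python package (v1 or later), the same dictionary can be sent as `client.chat.completions.create(**body)`.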

OpenAI continues to push the boundaries of what AI can achieve, and GPT-4o represents a significant step towards more practical and usable AI technologies. As the model rolls out, users and developers are encouraged to provide feedback to help further refine and improve its capabilities.

P.S.: We curate this AI newsletter daily for free. Your support keeps us motivated. If you find it valuable, please share it with your friends!