NVIDIA JUST DROPPED MAJOR AI & ROBOTICS ANNOUNCEMENTS AT GTC 2025 YOU CANNOT AFFORD TO MISS!

Today's highlights:

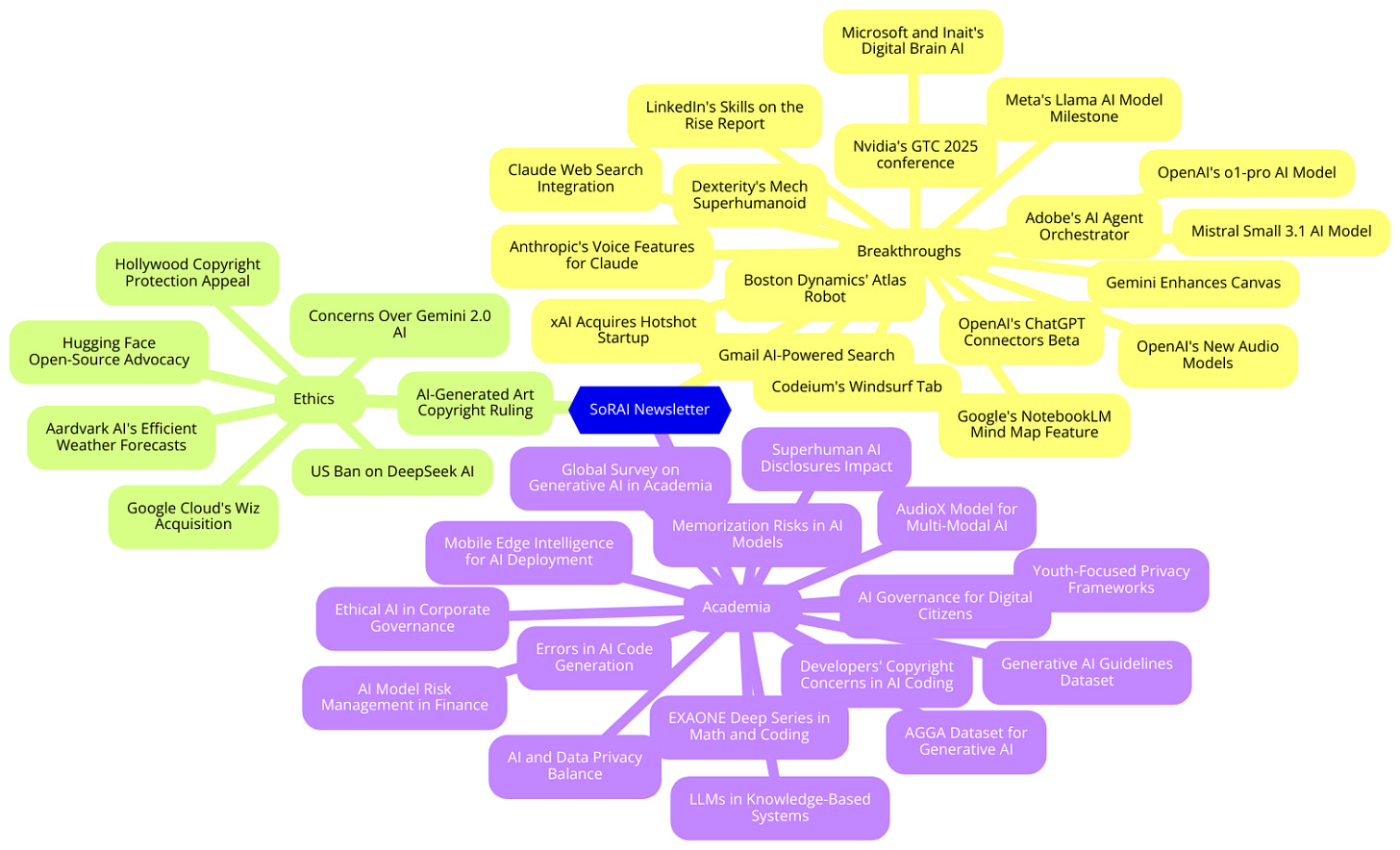

You are reading the 80th edition of The Responsible AI Digest by SoRAI (School of Responsible AI) (formerly ABCP). Subscribe today for regular updates!

Are you ready to join the Responsible AI journey, but need a clear roadmap to a rewarding career? Do you want structured, in-depth "AI Literacy" training that builds your skills, boosts your credentials, and reveals any knowledge gaps? Are you aiming to secure your dream role in AI Governance and stand out in the market, or become a trusted AI Governance leader? If you answered yes to any of these questions, let’s connect before it’s too late! Book a chat here.

🚀 AI Breakthroughs

Nvidia's GTC 2025 conference featured several significant announcements:

Next-gen AI Chips & Computing Platforms:

Nvidia introduced the Blackwell Ultra processor, scheduled for release in late 2025.

The company also unveiled the DGX Spark and DGX Station personal desktop AI supercomputers.

Advancements in Robotics & Physical AI:

Nvidia presented the Isaac GR00T N1, an open-source model for developing humanoid robots.

In collaboration with Disney Research and Google DeepMind, Nvidia introduced "Blue," a robot powered by the new Newton physics engine.

AI in Healthcare, Self-Driving, and Automation:

Nvidia is collaborating with General Motors to integrate AI systems for self-driving cars and introduced the Halos system for autonomous driving safety.

Quantum Computing Research Center & Hybrid AI-Quantum Systems:

Nvidia announced plans to open a quantum research lab in Boston, partnering with Harvard and MIT.

The company also showcased a prototype for a digital quantum-classical interface designed to connect quantum processors with classical GPUs.

Data Center & AI Infrastructure Upgrades:

Nvidia introduced new energy-efficient networking technologies, Spectrum-X and Quantum-X switches, aiming to enhance data center performance.

Boston Dynamics' Atlas Robot Achieves New Agility with Motion Capture and AI Learning

• Atlas, Boston Dynamics' humanoid robot, showcased enhanced agility and adaptability on March 19, 2025, utilizing reinforcement learning and a motion capture suit for more human-like movements

• This advance arose from a collaboration between Boston Dynamics and the Robotics and AI Institute, merging hardware expertise with advanced AI to enable real-time adaptability

• Motion capture integration allowed Atlas to learn from human movements, reducing reliance on rigid programming and expanding its capabilities across complex, real-world environments.

LinkedIn's 'Skills on the Rise' Report Highlights Top 15 Job Skills for 2025

• LinkedIn's 'Skills on the Rise' report emphasizes core skills like creativity and strategic thinking, critical for staying competitive in India's evolving job market in 2025

• AI literacy, including skills in large language models and prompt engineering, is increasingly essential, with 95% of Indian leaders prioritizing AI proficiency over traditional experience

• Professionals are urged to enhance profiles by listing multiple skills, as those with five or more skills receive significantly higher views and recruiter engagement on LinkedIn.

Google Enhances NotebookLM with Mind Map Feature for Visual Summaries and Interaction

• Google rolled out a new mind map feature for NotebookLM, offering users a visual summary through branching diagrams to easily grasp intricate information from source documents;

• Users can interact with the mind maps by zooming, scrolling, and clicking nodes for more details in NotebookLM chat, with options to download and share as images;

• Alongside NotebookLM updates, Google introduced Canvas in Gemini for real-time document and code refinement, complete with collaboration options through document exports to Google Docs.

Gmail Implements AI-Powered Search to Enhance Email Result Relevance Globally

• Gmail's enhanced AI-powered search feature is now live, promising faster access to relevant emails by prioritizing recency, user interactions, and frequent contacts over mere keyword matches;

• Instead of the previous chronological display, Gmail users will find important emails at the top of search results, significantly cutting down the time spent sifting through messages;

• The "most relevant" search feature is currently available globally for personal Google account holders, accessible via web and mobile apps, with plans to include business users in the future;

Anthropic Developing Voice Features for Claude AI, Explores Partnerships with Amazon

• Anthropic is reportedly developing voice capabilities for Claude, its AI-powered chatbot, aiming to offer a more natural interface by allowing users to converse with the AI;

• The company has created prototypes for voice capabilities and is focused on enriching user interaction, potentially transforming how Claude will operate across desktop environments;

• Discussions with key partners like Amazon and ElevenLabs are underway to accelerate voice feature development, though no formal agreements have been made as of the recent report.

Claude's Web Search Integration Enhances AI Responses with Up-to-Date Information

• Claude's new web search feature allows for real-time access to recent events, enhancing the accuracy of responses with up-to-date information for more informed decision-making

• Fact-checking is simplified as Claude provides direct citations from the web, offering a seamless way to verify the sources of its conversational insights

• Web search is now available in feature preview for paid users in the U.S., with an upcoming rollout for free users and international access on the horizon.

OpenAI Releases New State-of-the-Art Audio Models for Developers Worldwide

• OpenAI's new audio API now includes advanced speech-to-text models, which excel in transcription accuracy even under challenging conditions like diverse accents and noisy environments;

• Developers can leverage the text-to-speech model's customizable voice expressions, enhancing applications from empathetic customer service interactions to expressive storytelling personalization;

• Reinforcement learning and extensive midtraining on authentic audio datasets have propelled these models to surpass existing benchmarks, showcasing robust multilingual capabilities across over 100 languages.

OpenAI to Beta Test ChatGPT Connectors for Business Tools Like Slack and Google Drive

• OpenAI is set to beta test ChatGPT Connectors, enabling business users to integrate apps like Slack and Google Drive with ChatGPT for enhanced query responses

• The beta will utilize a customized GPT-4o model to access encrypted company files and communications, refining responses with internal knowledge and adhering to strict data permissions

• Future expansions will include platforms like Microsoft SharePoint; alongside its new functionality, the feature competes directly with enterprise search solutions already on the market.

OpenAI's New o1-pro AI Model Debuts in API with High Costs

• OpenAI has introduced o1-pro, an advanced AI reasoning model, enhancing its developer API with improved response quality by utilizing more computational resources;

• Pricing for o1-pro is notably steep, at $150 per million input tokens and $600 per million generated tokens, significantly surpassing GPT-4.5 and regular o1 costs;

• While designed for complex problem-solving, early tests indicate o1-pro provides only modest improvements over o1, showing particular difficulties in puzzles and certain optical illusion challenges;
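
To put the o1-pro pricing above in concrete terms, here is a minimal sketch of the arithmetic, using only the per-million-token rates quoted in this digest. The function name and the example token counts are hypothetical, chosen purely for illustration; actual billing may differ.

```python
# Rates as quoted in this digest (USD per million tokens).
INPUT_RATE_PER_MILLION = 150.0
OUTPUT_RATE_PER_MILLION = 600.0


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request at the quoted o1-pro rates."""
    input_cost = input_tokens / 1_000_000 * INPUT_RATE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_RATE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: 10,000 input tokens and 2,000 generated tokens.
    print(f"${estimate_cost_usd(10_000, 2_000):.2f}")  # prints $2.70
```

At these rates, generated tokens cost four times as much as input tokens, so long outputs tend to dominate the bill.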

xAI Acquires Hotshot Startup to Enhance AI Video Tools Competing with Industry Leaders

• xAI, Elon Musk's AI company, has acquired Hotshot, a San Francisco-based startup specialized in AI video generation, to possibly compete with platforms like OpenAI's Sora and Google's Veo 2

• Aakash Sastry, Hotshot's CEO, revealed that the startup built three foundational video models and will continue scaling its efforts using xAI's extensive computing resources, namely Colossus

• Before the acquisition, Hotshot attracted investments from notable VCs including Lachy Groom and Alexis Ohanian, though funding round sizes were never publicly disclosed;

Meta's Llama AI Model Reaches 1 Billion Downloads Despite Legal Challenges

• Meta's Llama AI model family reached 1 billion downloads, marking a 53% increase from December 2024, reflecting strong adoption across Meta's platforms like Facebook and Instagram

• Despite commercial restrictions and legal challenges, Llama models see extensive use by companies like Spotify and DoorDash, contributing to Meta's expansive AI ecosystem ambitions

• Upcoming Llama releases, including reasoning and multimodal models, aim to compete with industry leaders, while Meta plans an $80 billion investment to bolster its AI initiatives;

Dexterity Launches Mech: First Industrial Superhumanoid for Transforming Enterprise Logistics

• Dexterity has launched Mech, the first industrial superhumanoid robot, designed to transform industrial workflows with its dual-arm rover that autonomously navigates workspaces and performs demanding, repetitive tasks

• Engineered with Dexterity's advanced Physical AI, Mech seamlessly integrates into brownfield sites, effortlessly lifting up to 130 lbs and interfacing with legacy automation systems for high-altitude tasks

• Equipped with onboard AI and 16 cameras, Mech can be managed by a single associate, intelligently handling complex scenarios while reducing workplace injuries and optimizing palletizing strategies.

Mistral Small 3.1 Sets New Standard for Lightweight, Multimodal AI Models

• Mistral Small 3.1, an open-source AI model, boasts improved text performance and multimodal understanding, marking significant advancements in the small model space

• The model provides expanded context processing up to 128k tokens and outperforms competitors like Gemma 3 in speed, processing at 150 tokens per second

• Available for download and integration via platforms like Google Cloud Vertex AI, Mistral Small 3.1 supports both enterprise and consumer-grade AI applications with its versatile capabilities;

Codeium's Windsurf Tab in Wave 5 Enhances AI Coding Experience for Developers

• The Windsurf Wave 5 update introduces the Windsurf Tab, focusing on latency, quality, and reliability improvements, enhancing the passive predictive AI experience for developers across various platforms;

• Unlimited Windsurf Tab completions are accessible to all users, with premium subscribers enjoying faster performance, seamlessly integrating with the existing Cascade system for an optimized coding experience;

• Windsurf Editor aims to equally serve professional and novice developers, aligning the passive predictive Windsurf Tab and agentic Cascade AI systems to create an unparalleled platform for digital creation.

Microsoft and Inait Collaborate on Digital Brain AI for Finance and Robotics

• Microsoft partners with Swiss startup Inait to develop AI models inspired by mammalian brains, focusing on finance and robotics industries;

• Inait's AI models aim for more energy-efficient operations and continuous learning from real experiences, transcending traditional data-based systems;

• Inait's founder, a pioneer in brain research, contributed to the Human Brain Project, emphasizing brain simulations over conventional AI methods.

Adobe Experience Platform Agent Orchestrator Boosts AI Agents for Personalized Consumer Interactions

• Adobe introduced the Adobe Experience Platform Agent Orchestrator at its annual summit, enabling businesses to manage AI agents from Adobe and various third-party ecosystems to enhance customer experience and marketing workflows;

• A new suite of ten AI agents was revealed, offering specialized functionalities such as content production, audience building, and journey optimization to help marketing and creative teams achieve personalization at scale;

• Partnerships with major companies like Amazon Web Services, IBM, and Microsoft ensure seamless integration across platforms, enhancing functionality of AI agents for customer service, data management, and enterprise resource planning.

⚖️ AI Ethics

US Commerce Bureaus Ban Chinese AI DeepSeek from Government Devices, Sources Say

• The U.S. Commerce Department has prohibited the use of China's DeepSeek AI on government devices, citing security concerns, according to internal communications seen by Reuters

• Officials expressed fears over DeepSeek’s potential threat to data privacy, with some lawmakers spearheading legislative efforts to ban the AI on all government-issued equipment

• DeepSeek’s low-cost AI models have significantly disrupted global markets, raising apprehensions regarding the United States' competitive position in artificial intelligence technologies.

AI-Generated Art Denied Copyright Protection By U.S. Appeals Court Ruling

• A federal appeals court in Washington, D.C., ruled that works generated solely by AI systems, lacking human input, cannot be copyrighted under U.S. law

• The U.S. Court of Appeals upheld the Copyright Office's stance that only human-authored creations can receive copyright protection, rejecting Stephen Thaler's application for his AI-generated artwork

• Legal experts highlight this decision as illustrating ongoing challenges in balancing copyright law with the rapid growth of generative AI technologies in creative industries;

Hollywood Icons Urge White House to Protect Copyrights from AI Exploitation

• Over 400 Hollywood creative figures signed an open letter urging the Trump administration to maintain copyright protections amidst AI companies' requests for relaxed regulations;

• Signatories condemned proposals from OpenAI and Google suggesting AI training on copyrighted materials without permission, emphasizing concerns over potential harm to the creative industry;

• The letter highlighted that America's AI leadership should not undermine its creative and knowledge industries, advocating for preserving existing copyright protections essential for economic and cultural strength.

Hugging Face Advocates Open-Source as Key to U.S. AI Dominance in New Submission

• Hugging Face urges the Trump administration to embrace open-source AI models as a competitive advantage, contrasting calls for light regulation from leading commercial AI firms like OpenAI

• The company highlights recent open-source success stories, claiming efficient models offer broader access and economic benefits, potentially democratizing AI technology amid rising resource constraints

• The AI policy debate intensifies as Hugging Face champions transparency and collaboration over proprietary approaches, emphasizing security and innovation for wider adoption across industries.

Aardvark AI Delivers Faster, Accurate Global Weather Forecasts with Less Computing Power

• A new AI-driven weather prediction model, Aardvark Weather, offers highly accurate forecasts with significantly reduced computing power, heralding a paradigm shift from traditional supercomputer-dependent systems;

• The system's efficiency allows individual researchers to generate bespoke weather forecasts, facilitating hyper-localized predictions for industries like agriculture and renewable energy with unprecedented ease and speed;

• By training AI with diverse global data, Aardvark enhances disaster forecasting capabilities, promising improved predictions for hurricanes, wildfires, and other climate-related events, thus democratizing access to advanced weather technology.

Google Cloud Acquires Wiz to Enhance Multicloud and AI-Driven Cybersecurity Solutions

• Google Cloud announces the acquisition of Wiz to enhance security solutions for businesses and governments, aiming to provide a comprehensive platform that protects multicloud, hybrid, and on-premises environments.

• The collaboration seeks to address accelerating cybersecurity risks and enable seamless security integration, leveraging Wiz's cloud platform and Google Cloud’s AI and infrastructure expertise to offer advanced threat defense.

• With the acquisition, Wiz’s security offerings will remain available across major cloud platforms, promising multicloud customers enhanced protection and flexibility while maintaining industry standards and open-source commitments.

Google's Gemini 2.0 Flash AI Sparks Concerns Over Watermark Removal Abilities

• Google is expanding access to its experimental Gemini 2.0 Flash AI model, which has raised concerns due to its ability to remove watermarks from images with precision;

• Developers discovered that Gemini 2.0 Flash can generate images from text prompts and perform conversational editing, including inserting recognizable figures like Elon Musk into photos;

• Current restrictions limit these capabilities to developers using AI Studio, with Google's position on safeguarding against misuse like watermark removal yet to be clarified.

🎓 AI Academia

EXAONE Deep Series Exhibits Superior Reasoning in Math and Coding Benchmarks

• The EXAONE Deep series by LG AI Research excels in reasoning tasks, significantly outperforming similarly sized models in math and coding benchmarks.

• Openly available on Hugging Face, EXAONE Deep models include variations such as 2.4B, 7.8B, and 32B, offering superior reasoning capabilities for research purposes.

• The EXAONE Deep 32B model shows competitive performance against top-tier open-weight reasoning models like QwQ-32B and DeepSeek-R1-Distill-Llama-70B in various benchmark tests.

AudioX Model Enhances Multi-Modal Anything-to-Audio and Music Generation Versatility

• AudioX offers a unified Diffusion Transformer model for generating high-quality audio and music across multiple modalities, such as text, video, and images;

• The innovative use of multi-modal masked training in AudioX ensures robust cross-modal representations, enabling seamless integration of diverse input types into audio outputs;

• By curating large datasets like vggsound-caps and V2M-caps, AudioX addresses data scarcity and excels in versatile audio generation tasks, outperforming specialized models in benchmarks.

Legal Frameworks Crucial for Ethical AI Use in Corporate Governance, Study Finds

• Recent research underscores the pivotal role of legal frameworks in ensuring ethical AI deployment within corporate governance, emphasizing the need for adaptable, principle-based regulations

• Early analysis highlights contrasting approaches by the EU and US regarding AI-specific legislation, impacting corporate practices and innovation across different jurisdictions

• Industry-led initiatives and self-regulatory measures are being scrutinized for their effectiveness in setting AI governance standards, addressing accountability, and mitigating unintended biases.

Balancing Innovation and Privacy: Ethical Implications of AI in Data Collection

• An academic article explores ethical implications of AI-driven data collection, evaluating legal frameworks in the EU, US, and China for privacy and regulatory challenges

• Case studies from healthcare, finance, and smart cities illustrate AI's practical implementation challenges, highlighting issues of consent, algorithmic bias, and privacy protection

• The research proposes solutions such as legal recommendations, technical safeguards, and adaptive governance to balance AI innovation with individual rights and societal values.

Stakeholder-Driven Privacy Framework Emerges to Address Ethical AI Challenges for Youth

• The Privacy Ethics Alignment in AI (PEA-AI) framework, funded by Canada's Privacy Commissioner, focuses on ethical AI by aligning privacy with stakeholder needs and expectations;

• Researchers at Vancouver Island University highlight significant privacy concerns among youth, parents, educators, and AI professionals, emphasizing trust and transparency in AI-driven environments;

• Employing grounded theory, the study identifies gaps in AI literacy, showcasing the necessity for a dynamic, stakeholder-driven approach to address diverse privacy challenges in AI systems.

Urgent Call for Ethical AI Governance to Protect Young Digital Citizens’ Privacy

• A recent paper highlights the need for ethical AI governance to protect young digital users against privacy exploitation and algorithmic biases

• The paper calls for structured frameworks focusing on algorithmic transparency, privacy education, and accountability measures aimed at empowering youth over their digital identities

• The lack of targeted AI literacy initiatives is identified as a significant gap, requiring collaboration between educators, policymakers, and AI developers to improve privacy education;

Superhuman AI Disclosures Affect Fairness and Trust Differently Based on Expertise Levels

• Disclosure of AI's "superhuman" abilities impacts trust and fairness differently across domains, with notable distinctions in competitive gaming versus cooperative personal assistant settings;

• In StarCraft II, identifying AI as "superhuman" reduced novice players’ feelings of unfairness and altered perceptions of toxicity, while experts found the disclosure bothersome but less deceitful;

• In personal assistant domains, transparency about AI capabilities increased trustworthiness, yet revealed a risk of users overrelying on AI, especially among specific user personas.

Financial Sector Confronts AI Challenges with Robust Model Risk Management Strategies

• Financial institutions are exploring generative AI to enhance business operations, aiming for cost reduction and increased revenue, inspired by successful models like OpenAI's ChatGPT in 2023;

• These AI applications, while offering potential efficiency gains, present risks such as hallucinations and toxicity, necessitating enhanced model risk frameworks and controls for secure implementation;

• Integrating generative AI into financial risk management aids regulatory compliance and customer service optimization, but it also poses challenges such as ethical implications, biases, and risks of malicious exploitation.

Survey Highlights Integration of Large Language Models with Knowledge-Based Systems

• A comprehensive survey highlights efforts to integrate Large Language Models (LLMs) with knowledge-based systems, enhancing data contextualization and model accuracy through structured knowledge representation

• The study discusses the practical application and impact of merging LLMs with knowledge bases while outlining technical, operational, and ethical challenges associated with this integration

• Insights from the survey propose future research directions and address existing gaps, supporting the advancement and deployment of AI technologies across various sectors worldwide;

New Dataset AGGA Synthesizes Academic Guidelines for Generative AI Use in Education

• AGGA is a comprehensive dataset of 80 academic guidelines for integrating Generative AIs and Large Language Models, compiled from diverse global university websites

• With 188,674 words, AGGA aids in natural language processing tasks like model synthesis and abstraction identification in requirements engineering

• Covering a range of academic disciplines, AGGA provides insights into the ethical and innovative use of AI across humanities and technology sectors;

New Dataset Offers 160 Guidelines for Generative AI Use Across Industries

• IGGA, a dataset of 160 industry guidelines for generative AIs, offers valuable insights from trustworthy sources, catering to various industrial and workplace settings

• Spanning 104,565 words, IGGA aids natural language processing tasks like model synthesis and document structure assessment, essential for requirements engineering

• Covering 14 industry sectors globally, IGGA addresses the need for rigorous frameworks to responsibly integrate generative AI technologies amidst their widespread adoption.

Developers Express Concerns Over Licensing, Copyright in Generative AI for Code Development

• A comprehensive survey of 574 developers reveals a diverse range of opinions on licensing and copyright issues related to generative AI in software development;

• Developers express concerns about data leakage and ownership, comparing AI-generated code use to employing existing code, while emphasizing the necessity for clear regulatory guidelines;

• The study aims to provide policymakers with key insights into the evolving developer perceptions of generative AI technologies and the associated legal implications.

Comprehensive Analysis Reveals Errors in Large Language Model Code Generation Practices

• Recent research identified 17 types of non-syntactic mistakes in code generated by advanced Large Language Models using a large dataset, uncovering issues overlooked by earlier studies

• GPT-4 demonstrated impressive performance in identifying mistakes and reasons in LLM-generated code, utilizing the ReAct prompting technique to achieve an F1 score of 0.78

• Identifying errors in LLM-generated code across various programming languages, not limited to Python, is crucial to improve the reliability and quality of software development.
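
For readers unfamiliar with the F1 score cited above: it is the harmonic mean of precision and recall. A minimal sketch of the calculation follows. The function name and the counts are hypothetical and are not taken from the study; they are chosen only so that the result lands on the same value.

```python
def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """Harmonic mean of precision and recall, computed from raw counts."""
    if true_positives == 0:
        # No correct detections: precision and recall are both zero (or undefined).
        return 0.0
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical counts: 78 mistakes correctly flagged, 22 false alarms, 22 missed.
    print(round(f1_score(78, 22, 22), 2))  # prints 0.78
```

Because the harmonic mean is pulled toward the lower of its two inputs, a high F1 requires both few false alarms and few missed mistakes.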

Survey Highlights Memorization Risks in Large Language Models Affecting Privacy and Security

• A recent survey highlights the privacy and security vulnerabilities posed by memorization in large language models (LLMs), emphasizing ethical and legal challenges.

• The survey categorizes LLM memorization along three dimensions (granularity, retrievability, and desirability) and discusses methods to quantify and mitigate its adverse effects.

• Future research topics identified include balancing privacy with performance and analyzing memorization within specific LLM contexts like conversational agents and retrieval-augmented generation.

Survey Analyzes Mobile Edge Intelligence's Role in Deploying Large Language Models

• A recent survey highlights the benefits of running large language models (LLMs) at the edge, emphasizing lower latency, cost-effectiveness, and enhanced privacy over cloud-based models;

• Researchers are exploring mobile edge intelligence (MEI) as a middle ground for deploying LLMs, addressing resource limitations on edge devices while maintaining efficiency in applications;

• The study reveals potential advancements in edge LLM caching, training, and inference, significantly impacting privacy- and delay-sensitive sectors like healthcare and autonomous driving.

Global Survey Analyzes Academic Approaches to Generative AI and Language Models Integration

• A study on the global academic adoption of Generative AI and Large Language Models addresses their pedagogical benefits and ethical challenges

• Positive reactions focus on collaborative creativity and increased educational access, whereas concerns highlight ethical complexities, academic integrity, and misinformation risks

• Recommendations emphasize the need for balanced innovation that ensures equitable access while fostering responsible integration of AI technologies in education;

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.