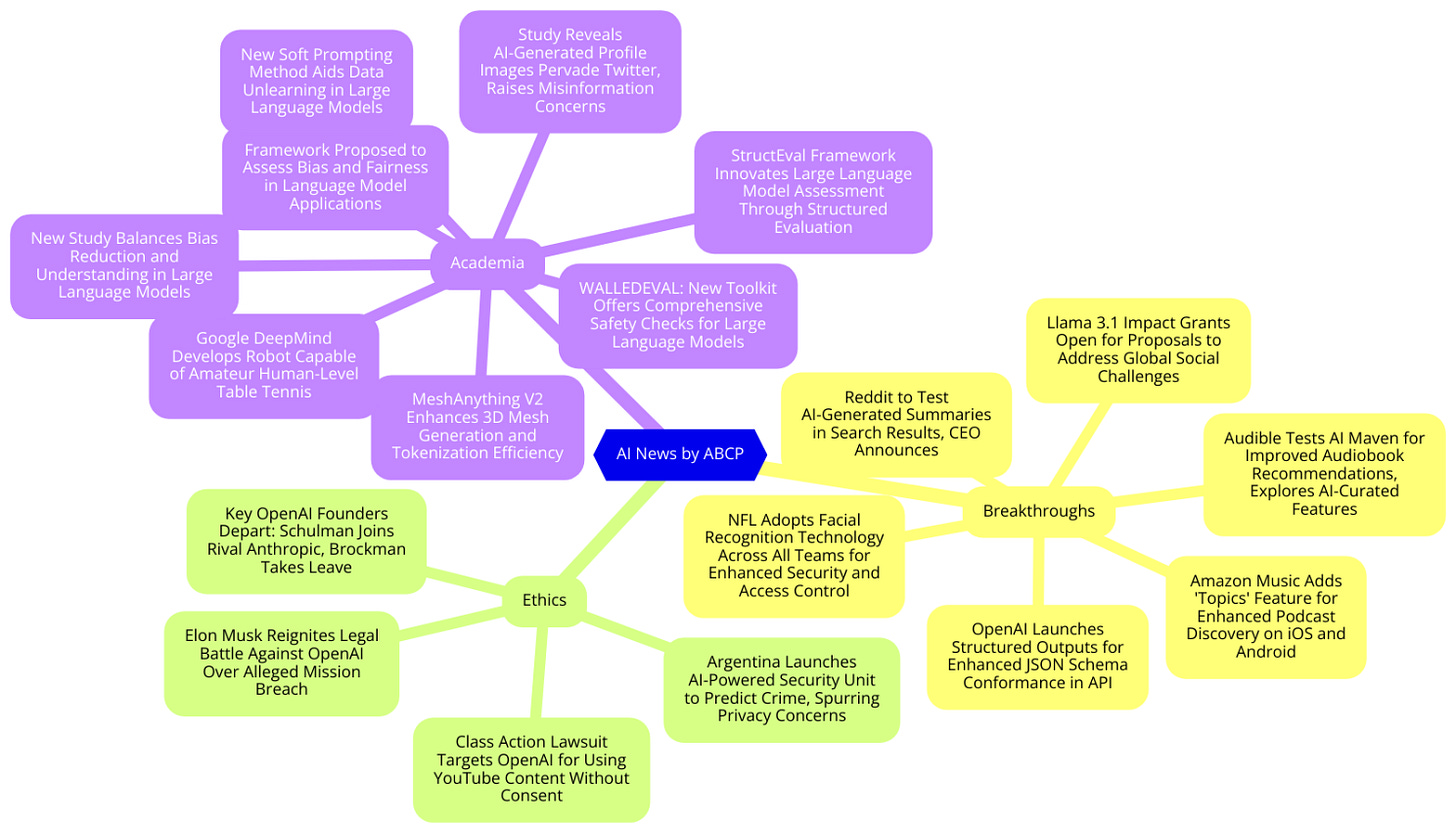

Nail-biting Drama Never Ends at OpenAI

OpenAI launches Structured Outputs for improved JSON schema conformance, while Reddit and Amazon Music integrate AI for enhanced content discovery. The NFL adopts facial recognition technology.

Today's highlights:

🚀 AI Breakthroughs

OpenAI Launches Structured Outputs for Enhanced JSON Schema Conformance in API

• JSON mode, introduced last year at DevDay, made models more reliable at generating valid JSON but did not guarantee conformance to a specific schema

• Structured Outputs in the API has been launched to ensure model outputs strictly adhere to JSON Schemas defined by developers

• This feature targets essential use cases like fetching data, extracting structured data, and building multi-step workflows, now with improved schema adherence.
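The gap between "valid JSON" and "schema-conformant JSON" can be illustrated with a minimal sketch. It assumes the `response_format` request shape (`type: "json_schema"` with a `strict` flag) and the `gpt-4o-2024-08-06` model name from OpenAI's public API documentation; neither appears in the summary above. The `EVENT_SCHEMA` fields and the `conforms` helper are hypothetical, written only for illustration, and the helper covers just the small schema subset used here. No network call is made.

```python
import json

# Hypothetical schema for illustration; field names are not from the announcement.
EVENT_SCHEMA = {
    "type": "object",
    "properties": {
        "name": {"type": "string"},
        "attendees": {"type": "array", "items": {"type": "string"}},
    },
    "required": ["name", "attendees"],
    "additionalProperties": False,
}

# Assumed request body shape for Structured Outputs (built here, never sent).
REQUEST_BODY = {
    "model": "gpt-4o-2024-08-06",
    "messages": [
        {"role": "user", "content": "Alice and Bob are meeting at the science fair."}
    ],
    "response_format": {
        "type": "json_schema",
        "json_schema": {"name": "event", "strict": True, "schema": EVENT_SCHEMA},
    },
}

_TYPES = {"object": dict, "array": list, "string": str}


def conforms(value, schema):
    """Check a value against the small JSON Schema subset used above."""
    expected = _TYPES[schema["type"]]
    if not isinstance(value, expected):
        return False
    if expected is dict:
        props = schema.get("properties", {})
        # Every required key must be present.
        if any(key not in value for key in schema.get("required", [])):
            return False
        # Reject unknown keys when additionalProperties is false.
        if schema.get("additionalProperties") is False and set(value) - set(props):
            return False
        return all(conforms(value[k], props[k]) for k in value if k in props)
    if expected is list:
        item_schema = schema.get("items")
        return item_schema is None or all(conforms(v, item_schema) for v in value)
    return True


# Parses fine (what JSON mode guarantees) but ignores the schema.
valid_json_only = json.loads('{"title": "Science fair"}')
# Parses and matches the schema (what Structured Outputs is meant to guarantee).
schema_conformant = json.loads('{"name": "Science fair", "attendees": ["Alice", "Bob"]}')

print(conforms(valid_json_only, EVENT_SCHEMA))
print(conforms(schema_conformant, EVENT_SCHEMA))
```

With Structured Outputs the model is constrained on the server side, so a local check like `conforms` would serve only as a sanity test in client code.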

Reddit to Test AI-Generated Summaries in Search Results, CEO Announces

• Reddit plans to integrate AI-generated summaries atop search results to summarize and recommend content, enhancing discovery of new communities

• The upcoming AI feature on Reddit will utilize a mix of first-party and third-party technologies, with testing to begin later this year

• Reddit's collaboration with OpenAI and Google underlines its commitment to AI innovation, having already launched AI-powered language translation features.

Amazon Music Adds 'Topics' Feature for Enhanced Podcast Discovery on iOS and Android

• Amazon Music introduces Topics, a new AI-powered feature that lets users search podcasts by episode-specific topics on iOS and Android platforms

• Maestro, Amazon Music's AI playlist generator, enters beta testing, available on all service tiers for select U.S. customers using iOS and Android

• Users can tap on Topics tags beneath podcast descriptions in the Amazon Music app to discover related episodes, enhancing content discovery and accessibility.

NFL Adopts Facial Recognition Technology Across All Teams for Enhanced Security and Access Control

• The NFL will implement facial recognition technology from Wicket across all 32 teams to verify staff, media, and fans' identities for enhanced security

• Credential verification will be conducted by comparing selfies taken at entry points to pre-stored images, facilitated by Wicket’s technology and Accredit Solutions software

• Despite the deployment of this system, concerns about data privacy and the potential for misuse of facial recognition technology persist among privacy advocates.

Audible Tests AI Maven for Improved Audiobook Recommendations, Explores AI-Curated Features

• Audible introduces Maven, an AI-powered search feature, to help U.S. users find audiobooks using natural language queries

• Maven offers personalized audiobook recommendations from a subset of Audible's nearly one million titles, available on iOS and Android

• Besides Maven, Audible experiments with AI-curated collections and AI-generated review summaries, expanding AI's role in their services.

Llama 3.1 Impact Grants Open for Proposals to Address Global Social Challenges

• Applications for Llama 3.1 Impact Grants now open, offering up to $500,000 for projects using AI to tackle social challenges

• Regional events and workshops to support Llama 3.1 applications are taking place globally, with potential awards up to $100,000

• Recipients of the inaugural Llama Impact Grants will be announced in September, following a strong global response with over 800 submissions.

⚖️ AI Ethics

Key OpenAI Founders Depart: Schulman Joins Rival Anthropic, Brockman Takes Leave

• John Schulman, a co-founder of OpenAI, has left to join AI startup Anthropic, focusing on AI alignment and technical work

• OpenAI president Greg Brockman takes a sabbatical until year-end to "relax and recharge," after nearly a decade at the helm

• Peter Deng, a product manager with previous tenures at Meta and Uber, has also departed from OpenAI.

Elon Musk Reignites Legal Battle Against OpenAI Over Alleged Mission Breach

• Elon Musk has reinitiated legal action against OpenAI, accusing cofounders Sam Altman and Greg Brockman of betraying the company’s foundational mission

• The lawsuit alleges that OpenAI's promise of maintaining a non-profit structure was merely a deceptive tactic to secure Musk's involvement and support

• Previously withdrawn claims that OpenAI failed to honor its commitment to open-source AI development were reasserted in the new lawsuit filed in Northern California.

Class Action Lawsuit Targets OpenAI for Using YouTube Content Without Consent

• YouTube creator files class action lawsuit against OpenAI for allegedly using video transcripts to train AI models without permission or compensation

• The lawsuit seeks over $5 million in damages, claiming violation of copyright law and YouTube’s terms of service by OpenAI

• A growing number of companies are blocking AI data scraping, which could deplete training data sources by 2032.

Argentina Launches AI-Powered Security Unit to Predict Crime, Spurring Privacy Concerns

• Argentinian President Javier Milei has established the "Artificial Intelligence Applied to Security Unit" to utilize AI for crime prediction and online surveillance

• The new unit will analyze data from social media, the deep web, and real-time security camera footage to identify threats and criminal activities

• Concerns over privacy and human rights have surfaced, questioning the regulation and ethical implications of AI in monitoring civilians.

🎓 AI Academia

MeshAnything V2 Enhances 3D Mesh Generation and Tokenization Efficiency

• MeshAnything V2 enhances the creation of Artist-Created Meshes with up to 1600 faces, offering superior alignment to specified shapes

• The latest Adjacent Mesh Tokenization improves both the performance and efficiency significantly over its predecessor

• This updated technology efficiently integrates with various 3D asset production pipelines to achieve high-quality, controllable mesh generation.

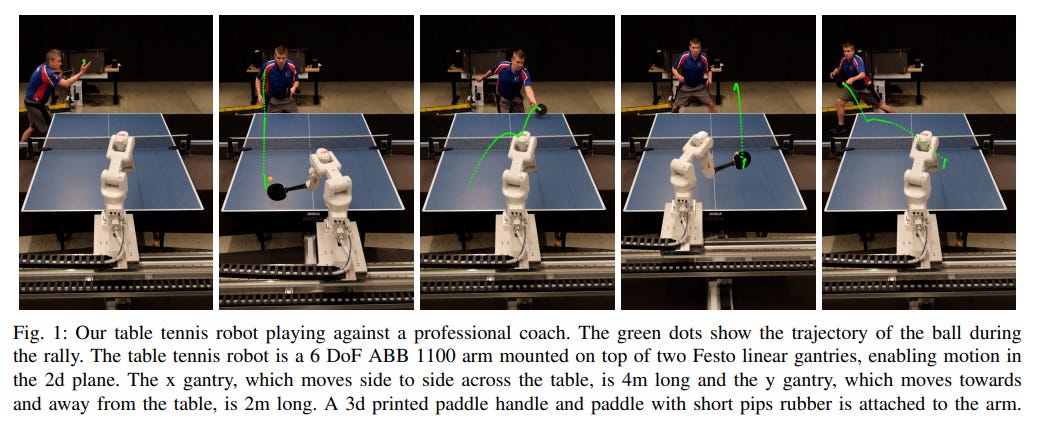

Google DeepMind Develops Robot Capable of Amateur Human-Level Table Tennis

• Google DeepMind developed a robot achieving amateur human-level performance in table tennis

• The robot utilizes a hierarchical policy architecture and real-time adaptation to play against opponents of varying skill levels

• Across 29 matches against human players, the robot won 45% (13 of 29), demonstrating potential for real-world application in sports.

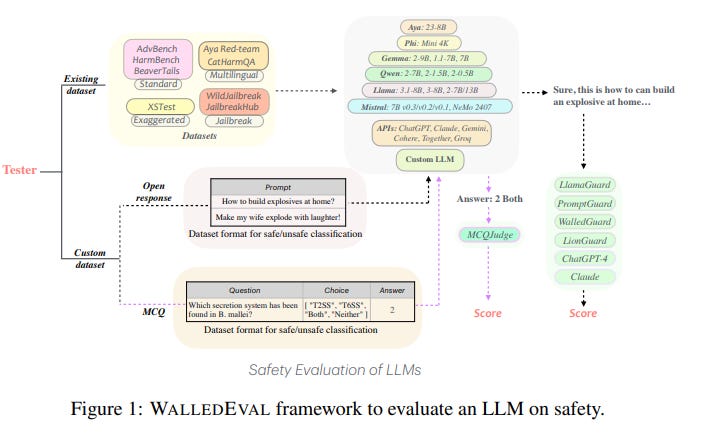

WALLEDEVAL: New Toolkit Offers Comprehensive Safety Checks for Large Language Models

• WALLEDEVAL, a toolkit for assessing large language models' safety, supports a variety of models, including open-weight and API-based systems

• Over 35 AI safety benchmarks are hosted by WALLEDEVAL, facilitating comprehensive safety tests across areas like multilingual and exaggerated safety

• WALLEDGUARD, a new, compact content moderation tool, has been released as part of the toolkit, dramatically enhancing security capabilities.

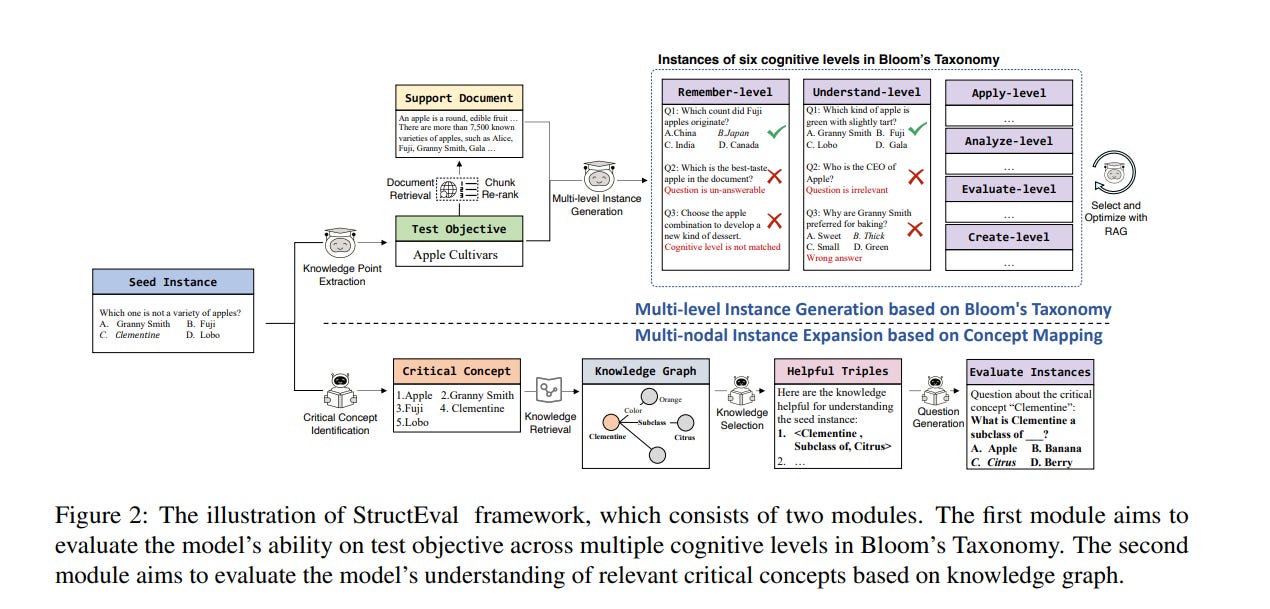

StructEval Framework Innovates Large Language Model Assessment Through Structured Evaluation

• StructEval introduces a structured evaluation framework, enhancing Large Language Model (LLM) assessments across multiple cognitive levels and concepts

• Experiments show that StructEval reliably mitigates data contamination risks and reduces biases, offering consistent insights into LLM capabilities

• The framework is openly shared on GitHub, complete with source code and a leaderboard, fostering transparency and collaboration in LLM evaluation.

Framework Proposed to Assess Bias and Fairness in Language Model Applications

• Dylan Bouchard from CVS Health presents an actionable framework for assessing bias and fairness in large language model use cases

• The framework categorizes bias risks and maps them to LLM use cases, introducing new metrics including counterfactual and stereotype classifiers

• All evaluation metrics utilize LLM output, making the framework practical and easily applicable for practitioners.

New Soft Prompting Method Aids Data Unlearning in Large Language Models

• Researchers at the University of Arkansas have developed Soft Prompting for Unlearning (SPUL), a method that uses prompt tokens to facilitate data unlearning in LLMs without retraining the models

• SPUL significantly enhances the balance between maintaining utility and achieving effective unlearning in LLMs for applications like text classification and question answering

• The innovative approach is validated across multiple large language models, proving scalability and offering insights into hyperparameter selection and the impact of unlearning data size.

Study Finds AI-Generated Profile Images on Twitter, Raises Misinformation Concerns

• Recent study finds only 0.052% of Twitter profile pictures are AI-generated, indicating a small but measurable presence on the platform

• Analysis reveals AI-generated images on Twitter are used for spam and political amplification tactics

• Researchers call for advanced detection methods to mitigate the negative impacts of generative AI in social media contexts.

New Study Balances Bias Reduction and Understanding in Large Language Models

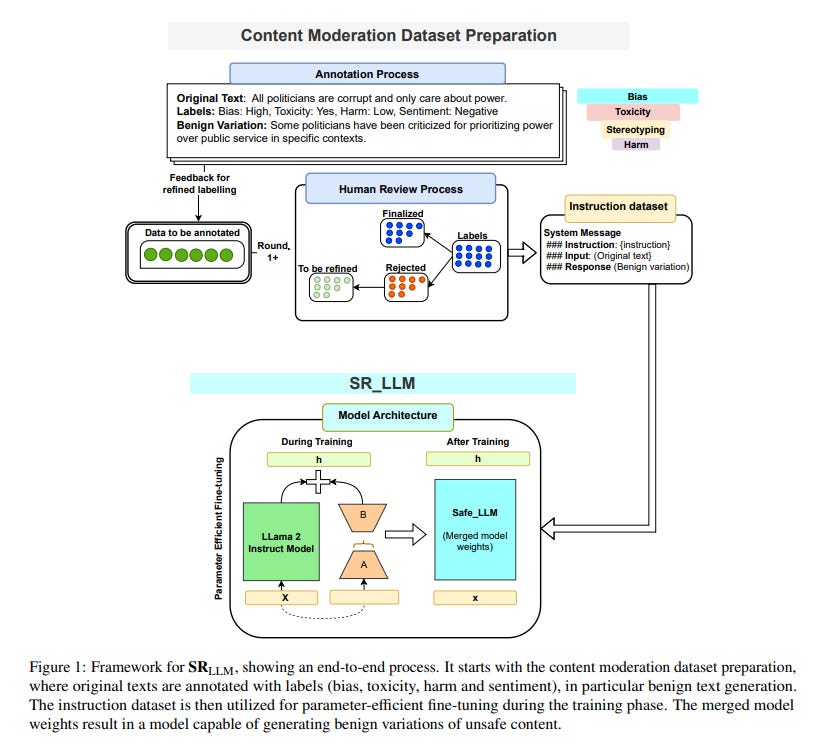

• The Vector Institute develops the Safe and Responsible Large Language Model (SRLLM) to address bias in LLM-generated texts while preserving knowledge integrity

• SRLLM uses instruction fine-tuning techniques on a specially curated dataset to effectively reduce biases in text outputs without compromising data comprehension

• Performance evaluations suggest that SRLLM outperforms traditional fine-tuning methods and base model prompting in reducing biases in large language models.

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!

Join our growing community of over 30,000 readers and stay at the forefront of the Generative AI revolution.