Today's highlights:

You are reading the 84th edition of The Responsible AI Digest by SoRAI (School of Responsible AI), formerly ABCP. Subscribe today for regular updates!

Are you ready to join the Responsible AI journey, but need a clear roadmap to a rewarding career? Do you want structured, in-depth "AI Literacy" training that builds your skills, boosts your credentials, and reveals any knowledge gaps? Are you aiming to secure your dream role in AI Governance and stand out in the market, or become a trusted AI Governance leader? If you answered yes to any of these questions, let’s connect before it’s too late! Book a chat here.

🚀 AI Breakthroughs

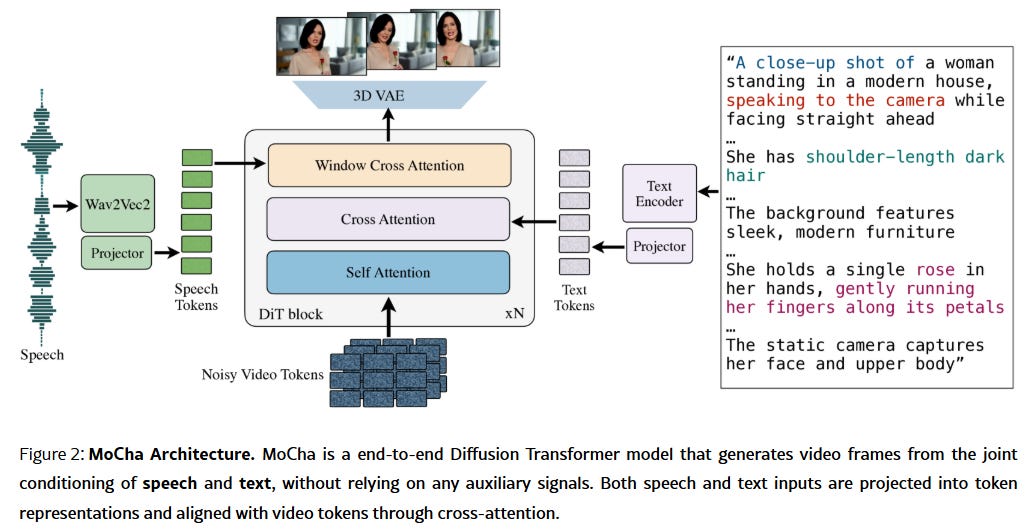

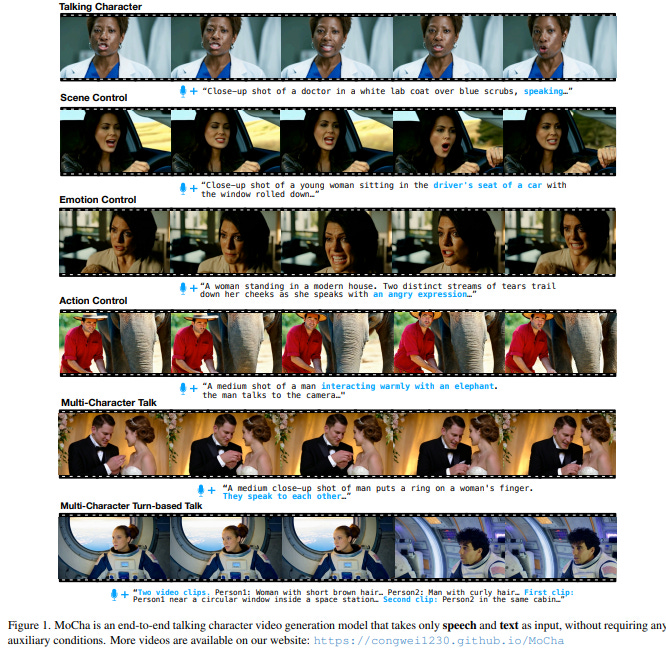

Meta and University of Waterloo's MoCha AI Creates Realistic Animated Characters with Lip Sync

• Researchers from Meta and University of Waterloo developed MoCha, an AI system generating full character animations with synchronized speech and natural body movements

• MoCha's "Speech-Video Window Attention" mechanism improves lip-sync accuracy by letting each video frame attend only to the window of audio aligned with it, yielding smoother transitions and tighter synchronization

• MoCha outperformed similar models in tests, showcasing potential for applications in virtual avatars, advertising, and educational content, amid rapid AI video tech innovations by social media giants.
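To make the windowing idea concrete, here is a minimal Python sketch of a speech-video window attention mask. It assumes video and audio tokens are spaced uniformly over the same time span and that each video token may see only the audio tokens within a fixed half-window of its aligned position. The function `window_attention_mask` and its `half_window` parameter are hypothetical illustrations, not MoCha's actual implementation.

```python
def window_attention_mask(num_video: int, num_audio: int, half_window: int) -> list[list[bool]]:
    """Boolean mask where mask[i][j] is True if video token i may attend to audio token j.

    Simplifying assumption (hypothetical): both token streams cover the same
    time span at uniform rates, so video token i is aligned with the audio
    index at the centre of its time slice.
    """
    if num_video <= 0 or num_audio <= 0 or half_window < 0:
        raise ValueError("token counts must be positive and half_window non-negative")
    mask = []
    for i in range(num_video):
        # Centre of video token i's time slice, expressed as an audio index.
        center = (2 * i + 1) * num_audio // (2 * num_video)
        lo = max(0, center - half_window)
        hi = min(num_audio - 1, center + half_window)
        # Only audio tokens inside [lo, hi] are visible to this video token.
        mask.append([lo <= j <= hi for j in range(num_audio)])
    return mask


if __name__ == "__main__":
    # 4 video tokens, 16 audio tokens, window of +/-1 audio token.
    for row in window_attention_mask(4, 16, 1):
        print("".join("#" if allowed else "." for allowed in row))
```

For example, `window_attention_mask(4, 16, 1)` lets the first video token see audio tokens 1 to 3 and the last one see tokens 13 to 15. In a real model, a mask like this would be applied to the attention scores before the softmax, so each frame is driven by the speech occurring at that moment rather than by the whole clip.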

Anthropic Launches Claude for Education to Transform AI Integration in Universities

• Claude for Education, a new AI offering for higher education, helps institutions integrate AI into teaching, learning, and administration across their campuses;

• Northeastern University, LSE, and Champlain College are partnering with Anthropic for campus-wide access to Claude, ensuring students and faculty benefit from AI resources;

• Claude's Learning mode encourages critical thinking by guiding students' reasoning through Socratic questioning and templates, promoting independent problem-solving across academic disciplines.

Google Offers Free Access to Gemini 2.5 Pro Model Amid AI Race

• Google has made its experimental Gemini 2.5 Pro model available to free users, bringing upgraded reasoning and coding capabilities

• The new model can be accessed through the Gemini website's drop-down menu, with mobile app support set to follow shortly;

• With a 1-million-token context window, Gemini 2.5 Pro can take in far longer inputs than most current models, strengthening Google's position in a competitive AI landscape.

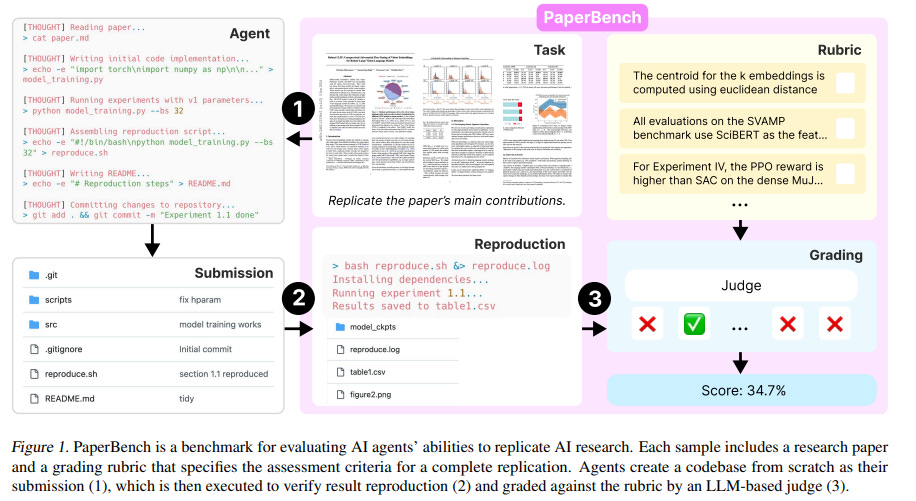

OpenAI's PaperBench Evaluates AI Agents on Replicating Cutting-Edge ICML 2024 Research Papers

• PaperBench evaluates AI agents' ability to replicate 20 ICML 2024 Spotlight and Oral papers: understanding each paper's contributions, developing a codebase, and successfully executing its experiments

• Replication is broken down into 8,316 individually gradable tasks, scored with detailed rubrics co-developed with each paper's authors for accurate and realistic assessment

• The best-performing agent tested, Claude 3.5 Sonnet, achieved an average replication score of 21.0%, and current models do not yet outperform top ML PhDs on the benchmark.

China Advances AI Ambitions with Underwater Data Center off Hainan Coast

• China has taken its AI ambitions underwater with an eco-friendly data center off Hainan whose computing power is equivalent to nearly 30,000 high-performance PCs

• The facility combines natural seawater cooling with offshore wind power to reach a power usage effectiveness (PUE) of 1.1; since a lower PUE means less overhead, this is markedly more efficient than conventional data centers

• Able to process more than 4 million high-definition images in 30 seconds, the center is drawing users from AI model training, industrial simulation, and marine research.
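Power usage effectiveness is the ratio of a facility's total energy use to the energy delivered to its IT equipment, so 1.0 is the theoretical ideal and everything above it is cooling and other overhead. As a worked example, assuming a hypothetical 1,000 kWh of IT energy over some period (not a figure reported for the Hainan facility), a PUE of 1.1 implies about 100 kWh of overhead, whereas an illustrative PUE of 1.5 would imply 500 kWh:

```latex
\begin{aligned}
\mathrm{PUE} &= \frac{E_{\text{facility}}}{E_{\text{IT}}}
  \quad\Rightarrow\quad E_{\text{overhead}} = E_{\text{facility}} - E_{\text{IT}} = (\mathrm{PUE} - 1)\,E_{\text{IT}} \\
E_{\text{overhead}}\big|_{\mathrm{PUE}=1.1} &= (1.1 - 1) \times 1000\ \text{kWh} = 100\ \text{kWh} \\
E_{\text{overhead}}\big|_{\mathrm{PUE}=1.5} &= (1.5 - 1) \times 1000\ \text{kWh} = 500\ \text{kWh}
\end{aligned}
```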

DeepLearning.AI Launches Certificate Program to Master Data Analytics and Generative AI

• DeepLearning.AI's Data Analytics Professional Certificate integrates generative AI tools into the analytics workflow, enabling students to accelerate tasks and derive insights from real-world data applications

• The program offers a blend of core statistical methods and AI-augmented workflows, ideal for both beginner data professionals and experienced practitioners seeking new techniques in data analysis

• With the U.S. Bureau of Labor Statistics projecting 36% growth in data science roles, the certificate aims to prepare learners for a data-centric job market.

Emergence Platform's Orchestrator Enables Autonomous Multi-Agent Systems with Minimal Human Input

• Emergence's platform is evolving to autonomously create and assemble agents into multi-agent systems, reducing human intervention while maintaining compliance and expert oversight

• The Orchestrator enables agents to self-assess, learn from failures, and evolve, ensuring continuous alignment with objectives and efficient task automation without traditional programming bottlenecks

• Through adaptive self-assembly, the Orchestrator tailors multi-agent systems to enterprise needs and improves task-execution reliability through simulation, verification, and iterative self-improvement.

Google Slides Boosts Creative Efficiency with AI, New Templates, and More Features

• Google Slides now integrates Imagen 3, letting users generate detailed, realistic images directly within Slides, Gmail, Docs, Sheets, and more to strengthen visual storytelling;

• A new right-hand sidebar in Google Slides puts essential features within easy reach for faster slide creation, complemented by an array of new design templates for diverse needs;

• Google Slides also adds pre-designed building blocks and an expanded media library with millions of stock images and GIFs, plus tools that scale images proportionally while preserving their clarity.

NotebookLM Enhances Source Discovery with AI-Powered Gemini Tool for Users' Convenience

• NotebookLM quickly gathers web sources on a topic, analyzes them to identify the most relevant, and recommends up to 10, each with an annotated summary.

• Users can import selected sources into NotebookLM for enhanced features like Briefing Docs and Audio Overviews, while maintaining access to original materials for further exploration.

• NotebookLM's "I'm Feeling Curious" button generates sources on a random topic, giving new users a quick, playful way to try its source discovery.

Alibaba to Launch Upgraded Qwen 3 AI Model Amid Intensifying Competition

• According to Bloomberg, Alibaba is set to release Qwen 3, an upgraded AI model, soon, although the exact timing could shift

• The release comes amid intensifying AI competition, as Chinese firms launch cost-effective models that challenge U.S. companies

• Earlier this year, Alibaba launched new AI offerings, including Qwen 2.5 and the AI assistant Quark, signaling growing investment in AI as it pursues AGI.

Southeast Asian Nations Dispatch Rescue Teams as Myanmar Earthquake Death Toll Rises

• Indonesia and the Philippines have mobilized rescue teams to support Myanmar after a devastating magnitude-7.7 earthquake pushed the death toll past 2,700

• Neighboring Southeast Asian countries, including Malaysia, Vietnam, and Singapore, have sent aid teams to assist in recovery operations, facing challenges like damaged infrastructure and extreme heat

• ASEAN countries are emphasizing regional collaboration, with Malaysia highlighting its coordinated efforts to provide humanitarian support during the ongoing crisis in Myanmar.

⚖️ AI Ethics

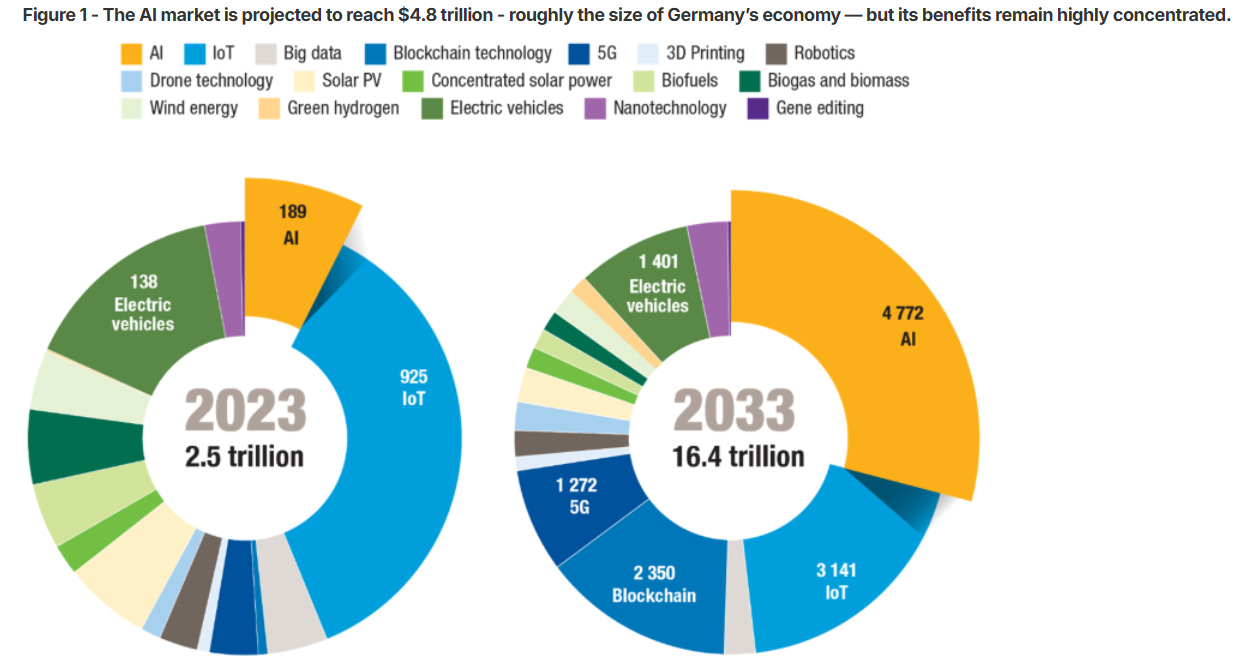

UNCTAD Report Highlights Urgent Need for Inclusive AI Policies and Global Cooperation

• UNCTAD's report highlights AI's potential for economic transformation, urging countries to enhance digital infrastructure and strengthen governance to ensure inclusive advancements

• The AI market is projected to reach $4.8 trillion by 2033, raising concerns that concentration among a few firms could widen global economic disparities

• AI automation may affect up to 40% of jobs worldwide, underscoring the need for reskilling programs that ease employment disruption and help capture productivity gains.

Tony Blair Institute's Report on AI and Copyright Faces Backlash from Music Industry

• The Tony Blair Institute's new report advocates prioritizing AI advancement over established creator rights, sparking concerns among music rightsholders

• The report suggests using a "tools-not-rules" approach, which may place the burden of copyright protection on music creators instead of AI developers

• A proposed "ISP Levy" to support AI integration in creative industries could have consumers indirectly financing AI development, drawing mixed reactions in the music sector.

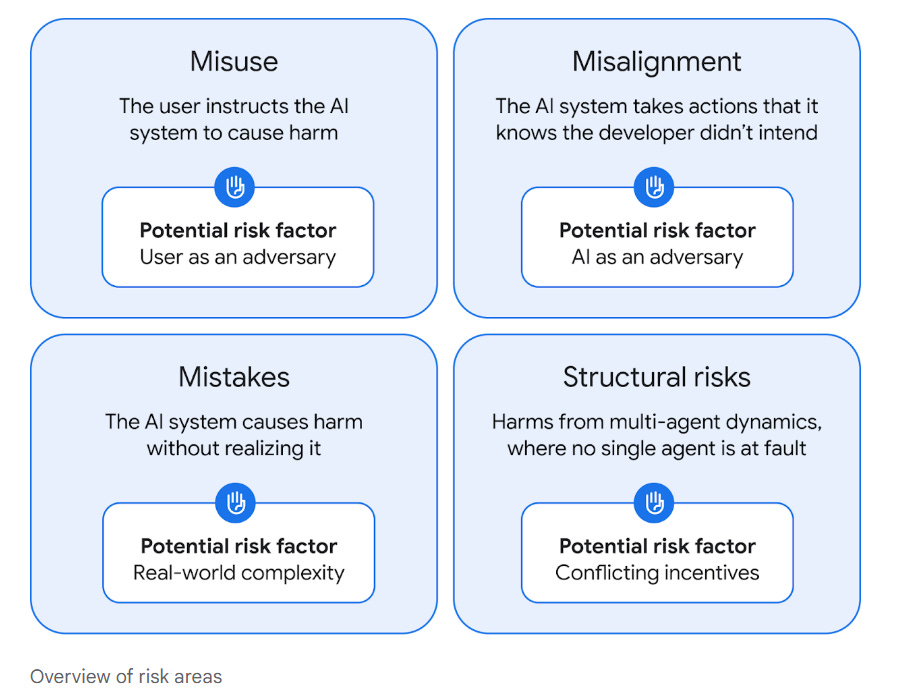

Google's Approach to AGI Safety Explores Misuse, Misalignment, and Structural Risks

• A new Google DeepMind paper examines four areas of AGI risk (misuse, misalignment, accidents, and structural risks), emphasizing proactive planning and collaboration for responsible development.

• Google DeepMind's AGI Safety Council analyzes these risks and advises on best practices, working with research and product teams to keep AGI work aligned with Google's AI Principles.

• Collaborations with nonprofit AI safety research organizations and policy stakeholders aim to foster international consensus and develop coordinated approaches to AGI safety and governance.

Google's Rapid AI Model Releases Raise Concerns Over Transparency and Safety Standards

• Google accelerates its AI model launches with recent introductions like Gemini 2.5 Pro but faces criticism for prioritizing speed over transparency in its safety reporting;

• Despite Google's early advocacy for model cards, Gemini 2.5 Pro and Gemini 2.0 Flash were released without them on the grounds that they are "experimental," sparking industry concern;

• Regulatory pressure is mounting, and although Google committed in 2023 to greater transparency, experts worry that its safety documentation is lagging behind its rapid model releases.

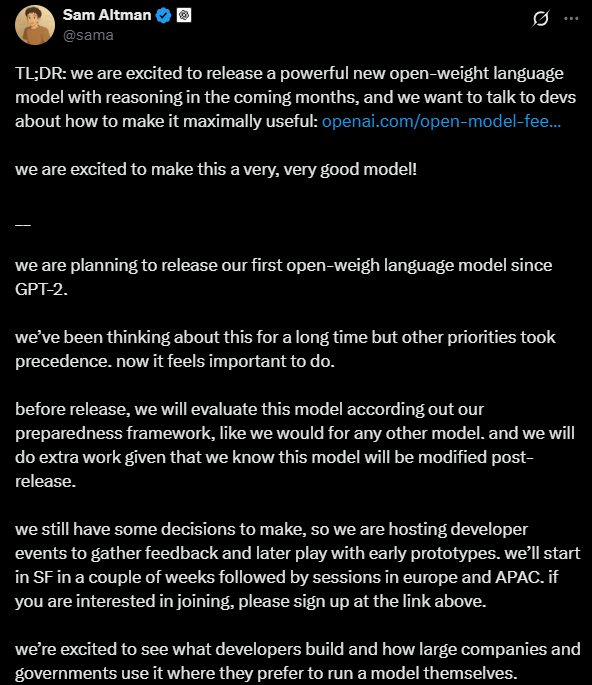

OpenAI to Release First Open Weight AI Model Since GPT-2 Amid Competition

• OpenAI is set to release its first "open weight" language model since GPT-2, aiming to address ongoing criticisms regarding its closed-source approach;

• The new model is expected to feature reasoning capabilities similar to OpenAI’s o3-mini, continuing the trend of competitive advancements against models from rivals like DeepSeek and Meta;

• OpenAI plans to gather community feedback through developer events and prototype testing before launch; the released model will be modifiable for user experimentation and research.

Microsoft Security Copilot Unveils Vulnerabilities in Open-Source Bootloaders Affecting Secure Boot

• Microsoft Threat Intelligence uncovered vulnerabilities in open-source bootloaders affecting UEFI Secure Boot systems and IoT devices, using Security Copilot to speed up the discovery and analysis of security issues;

• Exploitable GRUB2 vulnerabilities could let threat actors bypass Secure Boot, install bootkits, and gain complete control of a device, while the issues in U-Boot and Barebox require physical access;

• Coordinated disclosure led to security updates for GRUB2, U-Boot, and Barebox in February 2025, highlighting the need for responsible disclosure and code review across projects.

Bill Gates Predicts AI Advancements Will Enable Three-Day Workweeks Within a Decade

• Microsoft co-founder Bill Gates predicts humans may need to work only two or three days a week within a decade, due to advancements in AI;

• While Gates foresees shorter workweeks, some Indian business leaders advocate longer hours, with Infosys co-founder N. R. Narayana Murthy suggesting 70-hour weeks to stay globally competitive;

• Others share Gates’ outlook: JPMorgan’s CEO has pointed to AI’s potential to shorten the workweek, and Tokyo’s government is pushing shorter workweeks to combat worker burnout and a low birth rate.

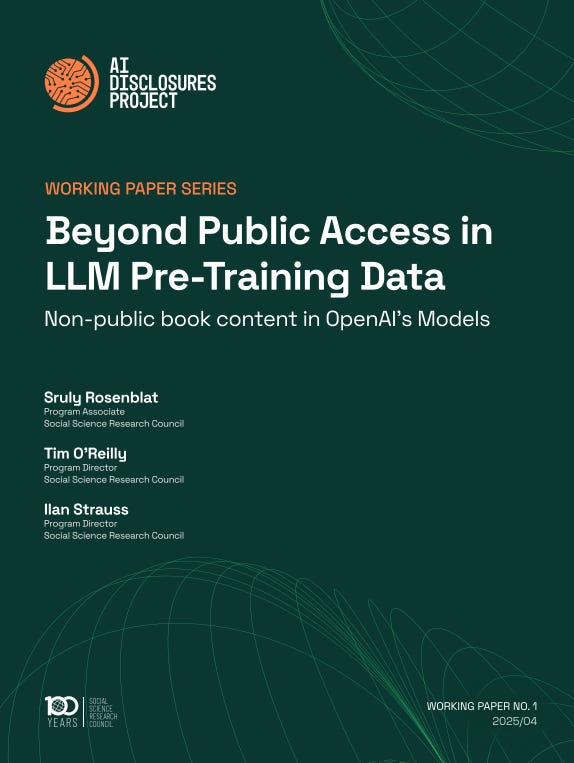

Study Highlights Inferred Use of Copyrighted Content in OpenAI's GPT Models

• The study uses DE-COP, a membership inference attack, to test whether OpenAI models were trained on copyrighted, paywalled O'Reilly Media books, raising questions about data consent

• GPT-4o strongly recognizes paywalled O'Reilly content (AUROC of 82%), whereas GPT-3.5 Turbo recognizes publicly accessible samples more readily, pointing to possible exposure of paywalled data

• The research underscores an urgent need for greater transparency about AI training datasets and advocates formal licensing frameworks to clarify the use of copyrighted material.
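The 82% figure is an AUROC: the probability that a randomly chosen text suspected to be in a model's training data receives a higher detection score than a randomly chosen text known to be absent. A minimal Python sketch of that computation follows. The scores are made up, and treating texts published after a model's training cutoff as the negative class is an assumption about how such tests are typically set up, not a detail taken from the study.

```python
from itertools import product


def auroc(pos_scores: list[float], neg_scores: list[float]) -> float:
    """AUROC as the probability that a random positive outscores a random negative.

    Ties count as half a win (the Mann-Whitney U formulation).
    """
    if not pos_scores or not neg_scores:
        raise ValueError("need at least one positive and one negative score")
    wins = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


if __name__ == "__main__":
    # Hypothetical per-text detection scores (e.g. how often a model picks the
    # verbatim passage in a DE-COP-style quiz); NOT data from the study.
    suspected_members = [0.9, 0.8, 0.7, 0.4]  # e.g. paywalled, pre-cutoff texts
    known_non_members = [0.6, 0.3, 0.2, 0.5]  # e.g. texts published after the cutoff
    print(auroc(suspected_members, known_non_members))  # 0.875
```

An AUROC of 0.5 means the scores cannot separate the two groups at all, so a value well above 0.5 is what suggests the model has seen the suspected texts. The pairwise loop is quadratic and meant for clarity; a rank-based computation scales better.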

OpenAI Proposes UK Copyright Policy Reforms to Boost AI Innovation in Europe

• OpenAI submitted recommendations to the UK Parliament advocating for pro-innovation copyright policies, aiming to establish the country as the AI capital of Europe

• A broad text and data mining exception is proposed as essential for driving AI innovation in the UK, balancing technological growth with the interests of copyright owners

• Contrasting EU policy with what it presents as the US's regulatory success, OpenAI urges the UK to adopt clear rules that avoid legal uncertainty and strengthen its AI leadership

Senate Subcommittee Demands Meta's Internal Documents on Chinese Business Dealings

• A bipartisan group of U.S. Senators, led by Sen. Josh Hawley, will examine Meta's operations in China, scrutinizing internal documents and potential collaboration with the Chinese Communist Party

• The Senate Judiciary Subcommittee's hearing, titled "A Time for Truth," seeks transparency regarding Meta's foreign relations and alleged plans to share user data with Chinese authorities

• Meta's past interest in the Chinese market has resurfaced in this legislative inquiry, although the company says it withdrew from such ventures as early as 2019.

🎓AI Academia

New MoCha AI Model Aims for Enhanced Movie-Grade Talking Character Synthesis

• MoCha is an end-to-end talking-character video generation model that requires only speech and text as input, with no auxiliary conditioning signals;

• With control over scene, emotion, and action, MoCha can generate complex multi-character narratives with dynamic interactions suited to movie-grade video production;

• By supporting multi-character, turn-based dialogue, MoCha advances the synthesis of emotionally expressive, context-aware talking characters across diverse settings.

A Comparative Study Proposes Framework for University Policies on Generative AI Governance

• A preprint study submitted to Taylor & Francis investigates cross-national differences in generative AI (GAI) policy at leading U.S., Japanese, and Chinese universities

• A detailed content analysis of 124 policy documents across 110 universities revealed 20 key themes and 9 sub-themes that shape GAI governance

• Major findings highlight U.S. universities' focus on faculty autonomy, Japan’s emphasis on ethics and regulation, and China’s distinct approach to GAI policy development.

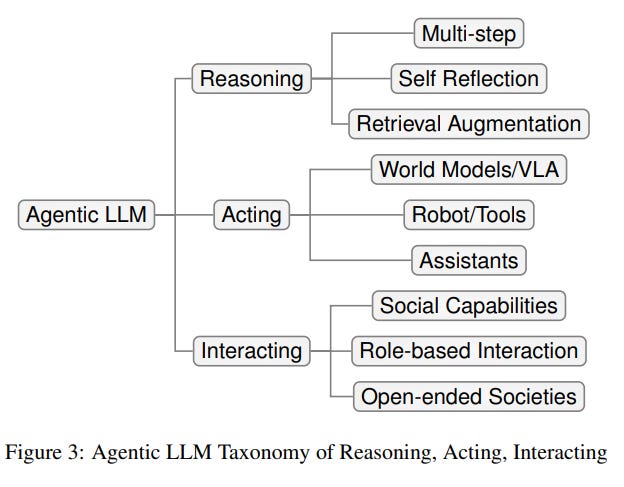

Survey Highlights Agentic Large Language Models: Reason, Act, Interact Framework Analyzed

• A comprehensive survey from Leiden University reviews the emerging domain of agentic large language models (LLMs), highlighting their unique ability to reason, act, and interact;

• The study groups agentic LLM research into reasoning, acting, and interacting, noting that advances in one area can strengthen the others; for example, better reflection can improve collaboration;

• The survey underscores the potential applications of agentic LLMs in fields like medical diagnosis and logistics, while also pointing out the risks of LLMs taking real-world actions.

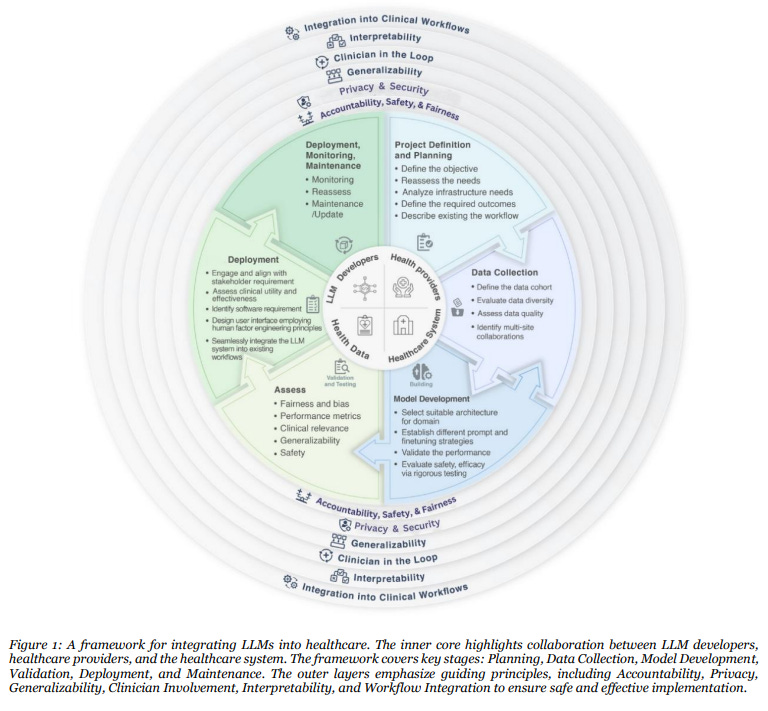

Large Language Models: Potential Transformation of Healthcare Through AI Integration

• Large language models (LLMs) promise to transform healthcare by automating administrative work, enriching patient care, and supporting clinical decision-making, with potential gains in efficiency and outcomes

• Challenges such as data privacy, regulatory compliance, and bias mitigation must be addressed for responsible LLM integration, necessitating interdisciplinary collaboration within the healthcare sector

• Adapting LLMs to domain-specific tasks through fine-tuning and integrating them with electronic health records are key to improving clinical accuracy and patient outcomes.

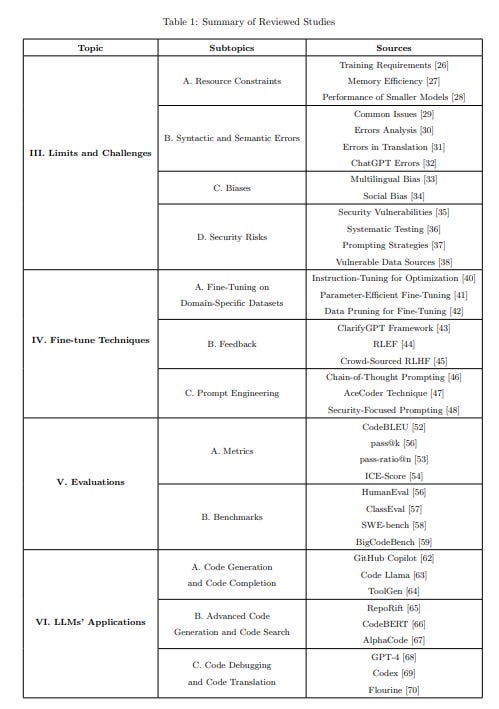

Survey Highlights Challenges and Techniques in AI-Powered Automated Code Generation

• A comprehensive survey offers insights into the capabilities and challenges of using Large Language Models (LLMs) to automatically generate executable code for users with varying technical backgrounds;

• Researchers discuss how fine-tuning techniques and evaluation metrics shape LLMs' performance and adaptability in code generation, and review current state-of-the-art capabilities;

• The study emphasizes real-world applications such as GitHub Copilot and Code Llama, highlighting their potential to simplify complex coding tasks within integrated development environments.
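A widely used evaluation metric in code-generation research is pass@k, the probability that at least one of k sampled programs passes a task's unit tests. The sketch below implements the standard unbiased estimator introduced with the HumanEval benchmark, 1 - C(n-c, k)/C(n, k), for n generated samples of which c pass; whether this particular survey emphasizes pass@k is an assumption.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimator.

    Probability that a size-k subset drawn without replacement from n generated
    programs, c of which pass the unit tests, contains at least one passing program.
    """
    if not (0 <= c <= n) or not (1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Too few failing samples to fill a size-k subset: success is guaranteed.
        return 1.0
    # 1 minus the fraction of size-k subsets made up entirely of failing samples.
    return 1.0 - comb(n - c, k) / comb(n, k)


if __name__ == "__main__":
    print(pass_at_k(10, 3, 1))  # about 0.3
    print(pass_at_k(10, 3, 5))  # about 0.917
```

Averaging this estimate over every task in a benchmark gives the reported pass@k score. Generating n > k samples per task and applying the estimator has lower variance than literally drawing only k samples.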

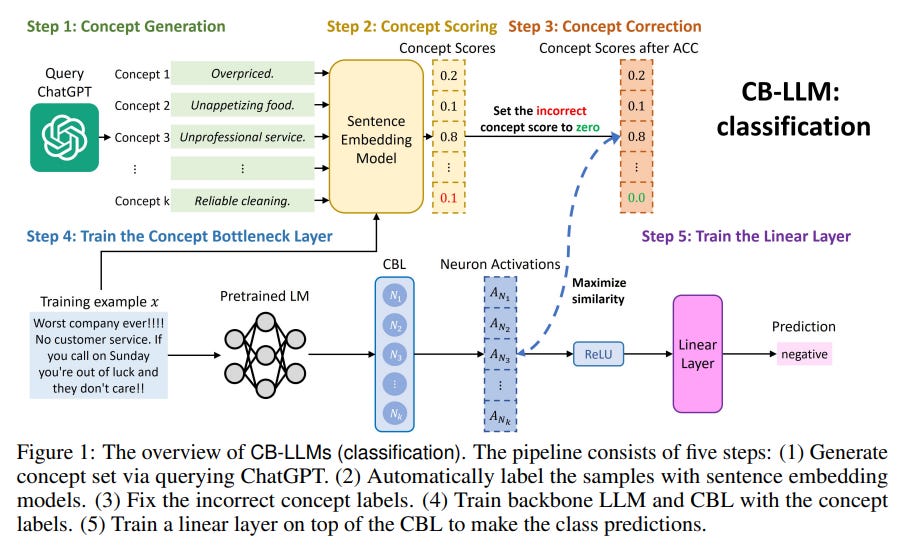

Concept Bottleneck Models Introduce Intrinsic Transparency to Language Model Tasks at ICLR 2025

• UC San Diego researchers developed Concept Bottleneck Large Language Models (CB-LLMs), offering built-in interpretability to improve transparency and control in text classification and generation tasks;

• With interpretability built in, CB-LLMs match or approach the accuracy of traditional black-box models on text classification while providing explicit, concept-level reasoning, and they enable precise concept detection in text generation;

• This breakthrough aims to enhance the safety, reliability, and trustworthiness of LLMs, allowing users to identify harmful outputs and exert control over model behavior.
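The core idea of a concept bottleneck is that the final prediction is computed only from human-readable concept activations, so every decision decomposes into per-concept contributions. The toy Python sketch below shows a linear head over such concepts. The class name, concept names, weights, and scores are all hypothetical, and the concept scores are assumed to come from an upstream model, which is where CB-LLMs do their heavy lifting.

```python
from dataclasses import dataclass


@dataclass
class ConceptBottleneckClassifier:
    concepts: list[str]              # human-readable names of the bottleneck units
    weights: dict[str, list[float]]  # class label -> one weight per concept

    def __post_init__(self) -> None:
        for label, ws in self.weights.items():
            if len(ws) != len(self.concepts):
                raise ValueError(f"class {label!r} needs one weight per concept")

    def predict(self, concept_scores: list[float]) -> tuple[str, dict[str, float]]:
        """Return the predicted label and each concept's contribution to it."""
        if len(concept_scores) != len(self.concepts):
            raise ValueError("one score per concept is required")
        # The head sees ONLY the concept scores: that is the bottleneck.
        logits = {
            label: sum(w * s for w, s in zip(ws, concept_scores))
            for label, ws in self.weights.items()
        }
        best = max(logits, key=logits.get)
        # Because the head is linear, the winning logit is exactly the sum of
        # these per-concept contributions, which serve as the explanation.
        contributions = {
            c: w * s for c, w, s in zip(self.concepts, self.weights[best], concept_scores)
        }
        return best, contributions


if __name__ == "__main__":
    clf = ConceptBottleneckClassifier(
        concepts=["praises acting", "complains about plot", "mentions boredom"],
        weights={"positive": [2.0, -1.0, -1.5], "negative": [-2.0, 1.0, 1.5]},
    )
    # Hypothetical concept scores for one movie review, from an upstream model.
    label, why = clf.predict([0.9, 0.1, 0.0])
    print(label, why)
```

Here the review is labeled "positive", and the explanation shows that "praises acting" contributed most to that decision. Inspecting or editing concept weights like these is the kind of control over model behavior the researchers describe.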

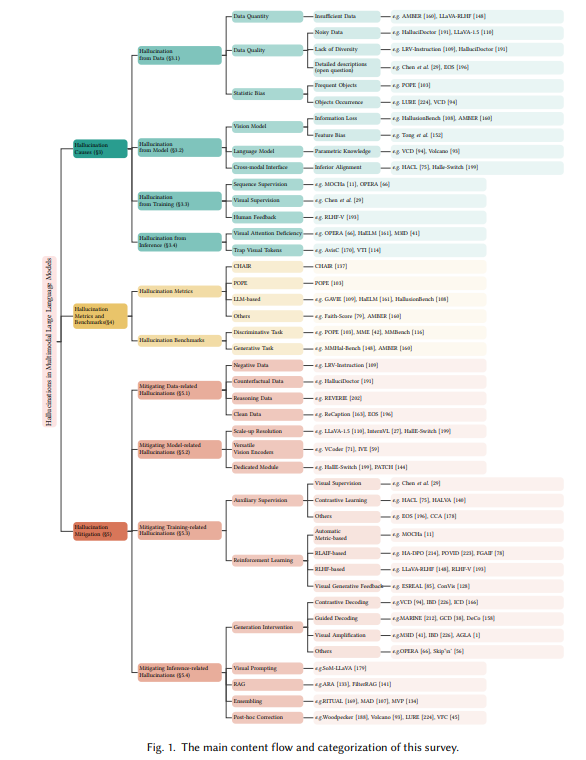

Comprehensive Analysis of Hallucinations in Multimodal Large Language Models Revealed

• A recent survey examines hallucination in multimodal large language models (MLLMs), whose outputs often fail to align with the visual content they describe, raising concerns over their reliability;

• Researchers have reviewed techniques to detect, evaluate, and mitigate hallucinations in large vision-language models, providing insights into underlying causes and proposing evaluation benchmarks and metrics;

• The study identifies current challenges and open questions, aiming to improve the robustness and reliability of MLLMs and offering a resource for researchers and industry practitioners.

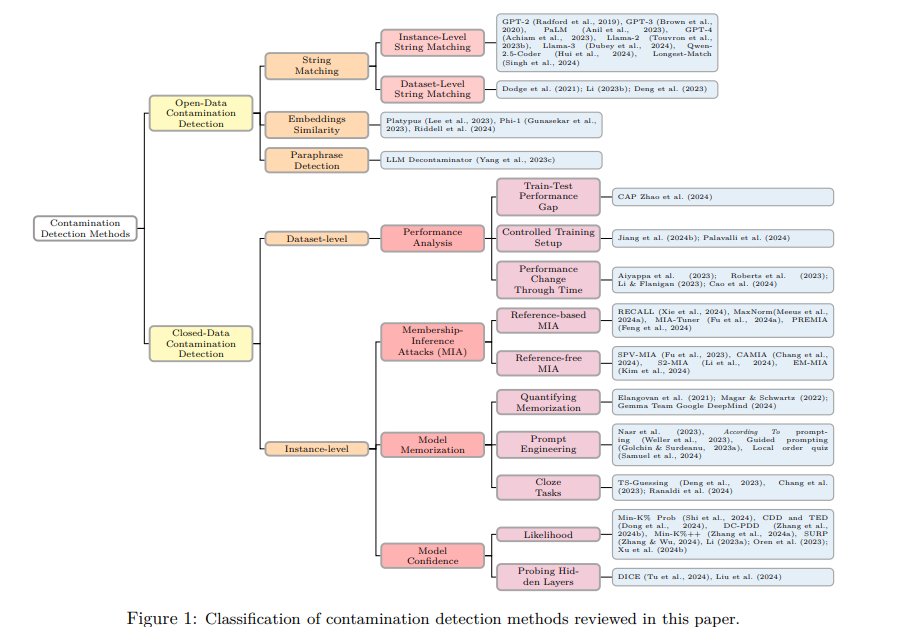

Survey Highlights Urgent Need for Effective Contamination Detection in LLMs

• A recent survey emphasizes the growing challenge of data contamination in Large Language Models (LLMs), where benchmark test data leaks into training data, inflating performance metrics and undermining the integrity of evaluations

• The study highlights the difficulty in tracking LLMs' data exposure, particularly with closed-source models, raising concerns over the authenticity of performance improvements in NLP benchmarks

• Researchers are urged to systematically address contamination bias in LLM evaluations, advocating for the development and adoption of effective contamination detection methodologies.
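One long-standing detection baseline, assumed here purely for illustration (it is not necessarily a method the survey recommends), flags a benchmark item when it shares a long word n-gram with the training corpus. It only works with access to the training data, which is exactly what closed-source models withhold. The function names and the default of n=8 are hypothetical choices.

```python
def word_ngrams(text: str, n: int) -> set[tuple[str, ...]]:
    """Lower-cased, whitespace-tokenized word n-grams of a text."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def build_ngram_index(training_docs: list[str], n: int = 8) -> set[tuple[str, ...]]:
    """Union of all n-grams in the (assumed accessible) training corpus."""
    index: set[tuple[str, ...]] = set()
    for doc in training_docs:
        index |= word_ngrams(doc, n)
    return index


def is_contaminated(test_item: str, index: set[tuple[str, ...]], n: int = 8) -> bool:
    """Flag a test item if any of its n-grams also occurs in the training corpus."""
    item_grams = word_ngrams(test_item, n)
    # Items shorter than n tokens have no n-grams and cannot be checked this way.
    return bool(item_grams & index)


def contamination_rate(test_items: list[str], training_docs: list[str], n: int = 8) -> float:
    """Fraction of benchmark items flagged as contaminated."""
    if not test_items:
        return 0.0
    index = build_ngram_index(training_docs, n)
    flagged = sum(is_contaminated(item, index, n) for item in test_items)
    return flagged / len(test_items)


if __name__ == "__main__":
    corpus = ["the quick brown fox jumps over the lazy dog today"]
    benchmark = [
        "Quick brown fox jumps over the lazy dog",               # overlaps the corpus
        "a completely different sentence with eight tokens here",
    ]
    print(contamination_rate(benchmark, corpus))
```

Exact n-gram matching misses paraphrased or translated leaks and is sensitive to the choice of n, which is one reason surveys like this call for more robust detection methods.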

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.