Benchmarking Benchmark Leakage in Large Language Models

Background:

The study addresses the growing problem of benchmark data leaking into the training corpora of large language models (LLMs). When a model has already seen test items during training, its benchmark scores overstate its true capability. This undermines fair comparison between models and hinders progress in the field.

Objective:

To develop a detection pipeline that identifies whether an LLM has been trained on benchmark data, thereby safeguarding the integrity of model evaluations.

Methodology:

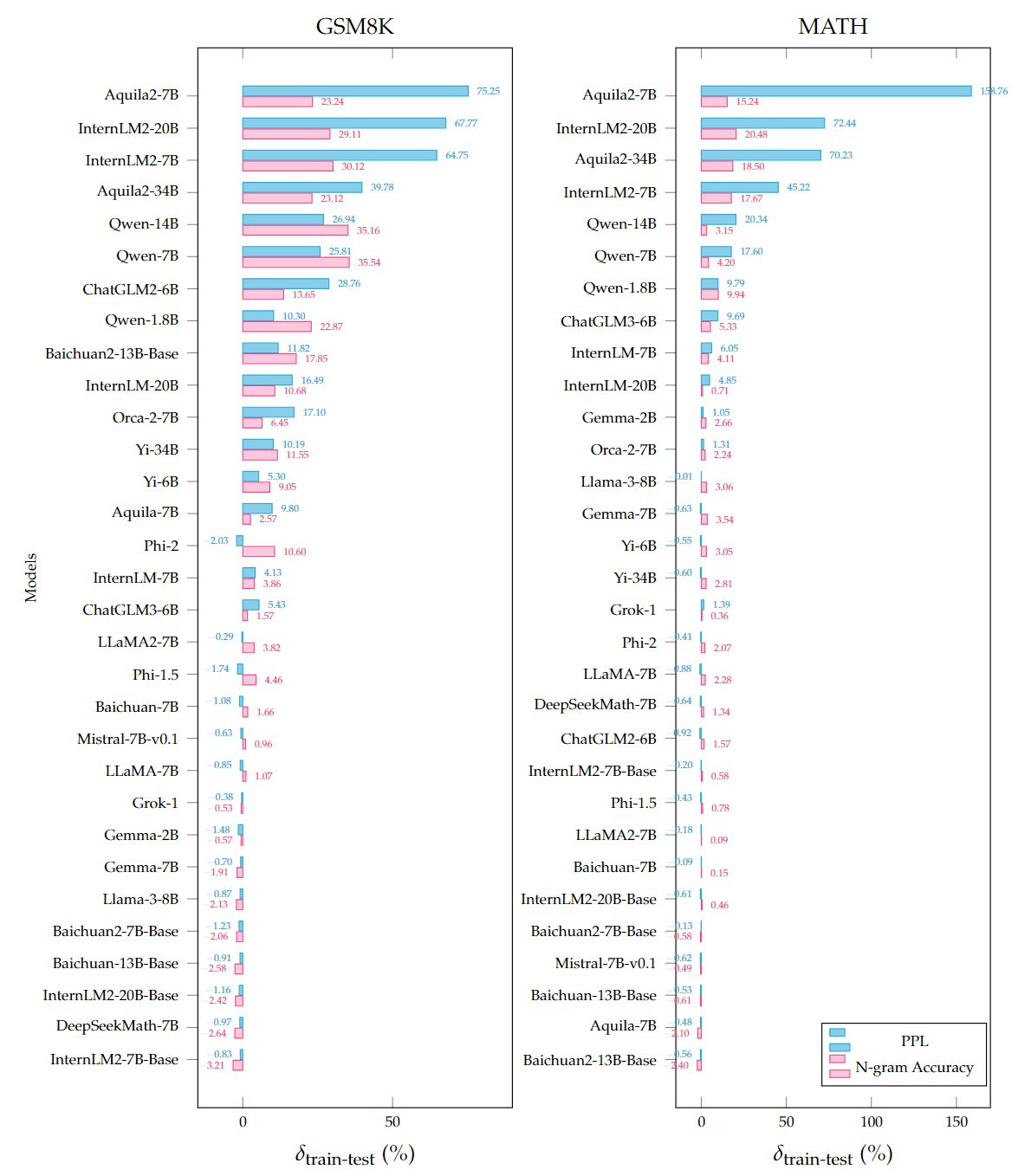

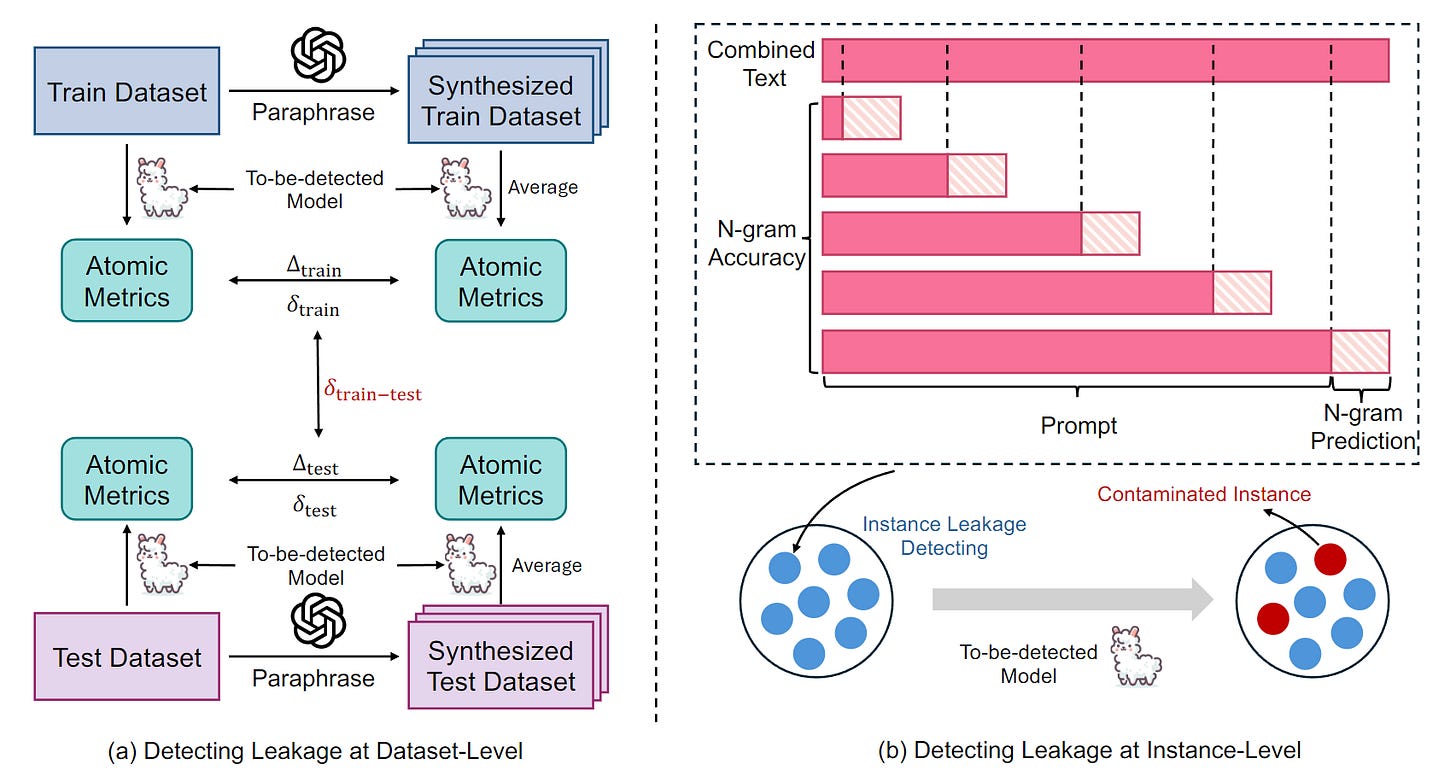

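The researchers introduce two metrics, perplexity and n-gram accuracy, to measure how precisely a model predicts benchmark text and thereby detect potential data leakage. Unusually low perplexity or unusually high n-gram accuracy on benchmark samples suggests the model may have encountered them during training. They apply these metrics to 31 LLMs, evaluating them on mathematical reasoning benchmarks.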
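A minimal sketch of the two metrics follows, assuming access to a model's per-token log-probabilities (for perplexity) and a greedy next-token predictor (for n-gram accuracy). The function names, the defaults of n = 5 tokens and k = 5 evenly spaced starting points, and the toy "models" are illustrative assumptions, not the study's code.

```python
import math
from typing import Callable, List, Sequence

# Hypothetical interface: a "model" maps a token prefix to its greedy next-token guess.
NextToken = Callable[[Sequence[str]], str]


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood per token).

    `token_logprobs` holds the natural-log probability the model assigned
    to each ground-truth token of one benchmark sample.
    """
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def ngram_accuracy(predict: NextToken, tokens: Sequence[str],
                   n: int = 5, k: int = 5) -> float:
    """Fraction of k evenly spaced starting points at which the model
    reproduces the next n ground-truth tokens exactly."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # A start needs at least one token of context and room for n tokens after it.
    last_start = len(tokens) - n
    if last_start < 1:
        raise ValueError("sequence too short for the requested n")
    if k == 1:
        starts: List[int] = [last_start]
    else:
        # Evenly spaced positions in [1, last_start]; duplicates are merged.
        starts = sorted({1 + round(i * (last_start - 1) / (k - 1)) for i in range(k)})
    hits = 0
    for s in starts:
        context = list(tokens[:s])
        generated = []
        for _ in range(n):
            nxt = predict(context)
            generated.append(nxt)
            context.append(nxt)  # greedy decoding: feed each prediction back in
        if generated == list(tokens[s:s + n]):
            hits += 1
    return hits / len(starts)


# Usage with toy models on a made-up sample.
sample = "a baker sells 12 loaves each day for 5 days".split()


def memorizer(prefix: Sequence[str]) -> str:
    # Toy model that has "seen" the sample and recalls the next token verbatim.
    return sample[len(prefix)] if len(prefix) < len(sample) else "<eos>"


def guesser(prefix: Sequence[str]) -> str:
    # Toy model with no exposure: always predicts the same token.
    return "the"


print(ngram_accuracy(memorizer, sample, n=3, k=4))  # 1.0
print(ngram_accuracy(guesser, sample, n=3, k=4))    # 0.0
print(perplexity([math.log(0.5)] * 4))              # approximately 2.0
```

Exact-match n-gram accuracy is deliberately strict: a model that merely understands the problem rarely reproduces several consecutive tokens verbatim, whereas a model that memorized the text does so at many starting points.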

Key Findings:

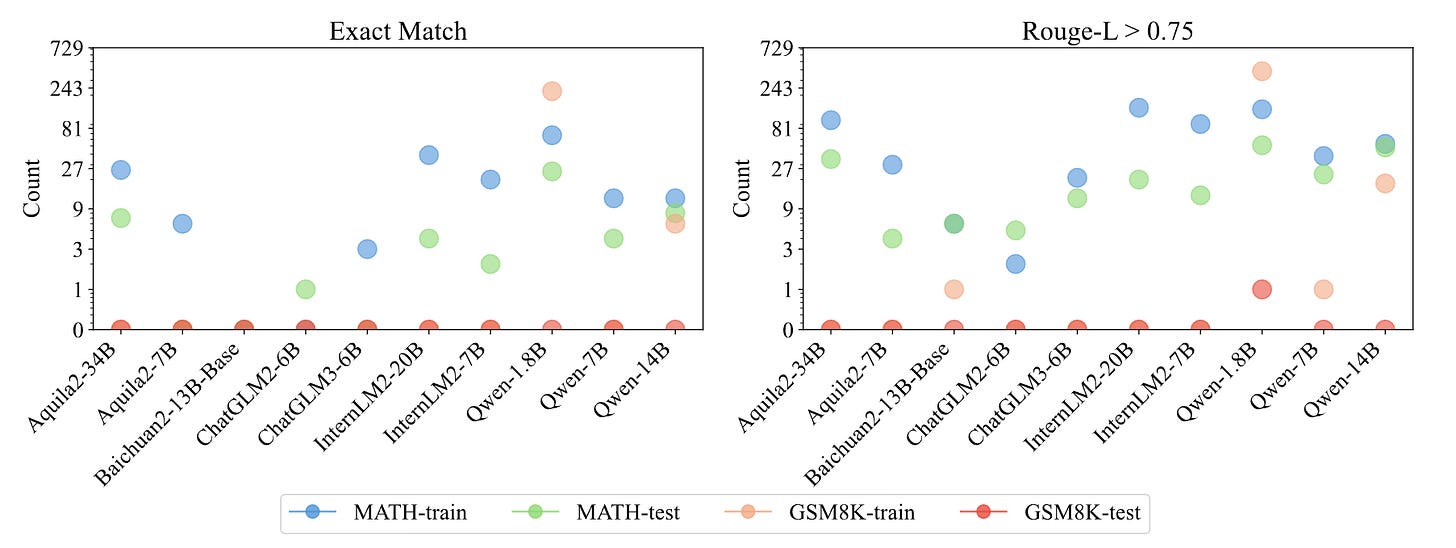

- Evidence of potential data leakage was found in several models.

- Models such as Qwen-1.8B, Aquila2, and InternLM2 showed high prediction accuracy on benchmark test sets, suggesting prior exposure to that data during training.

- The study proposes a "Benchmark Transparency Card" for documenting how benchmark data is used in model training and evaluation, promoting transparency and ethical development.
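Turning raw metric values into a leakage signal requires a baseline. One way to sketch this is to compare a model's n-gram accuracy on the original benchmark items with its accuracy on paraphrased versions of the same items, which it cannot have memorized verbatim; a large positive gap is suspicious. The comparison scheme, the `LeakageReport` structure, the model names, and the 0.1 threshold below are assumptions for illustration, not the study's exact procedure.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Sequence


@dataclass
class LeakageReport:
    model: str
    original_acc: float   # mean n-gram accuracy on the original benchmark items
    reference_acc: float  # mean n-gram accuracy on paraphrased versions of those items
    gap: float            # original_acc - reference_acc
    suspicious: bool      # True if the gap exceeds the chosen threshold


def leakage_report(model: str,
                   original_scores: Sequence[float],
                   reference_scores: Sequence[float],
                   threshold: float = 0.1) -> LeakageReport:
    """Flag a model whose accuracy on the original text clearly exceeds its
    accuracy on paraphrases of the same content.

    `threshold` is a hypothetical cut-off chosen for illustration only.
    """
    orig = mean(original_scores)
    ref = mean(reference_scores)
    gap = orig - ref
    return LeakageReport(model, orig, ref, gap, gap > threshold)


# Hypothetical per-sample scores for two hypothetical models.
a = leakage_report("model-a", [0.8, 0.6, 1.0], [0.2, 0.0, 0.2])
b = leakage_report("model-b", [0.2, 0.0, 0.2], [0.2, 0.2, 0.0])
print(a.model, a.suspicious)  # model-a True
print(b.model, b.suspicious)  # model-b False
```

Comparing against a paraphrased reference, rather than using an absolute cut-off, separates "the model is good at this task" from "the model has seen this exact text", since genuine skill should carry over to reworded items.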

Implications:

This research underscores the need for clear documentation and ethical guidelines in AI development to prevent data leakage and ensure fair and accurate model evaluations.