It’s been less than 48 hours since OpenAI dropped 4o Image Generation in ChatGPT, and it's wild!

Today's highlights:

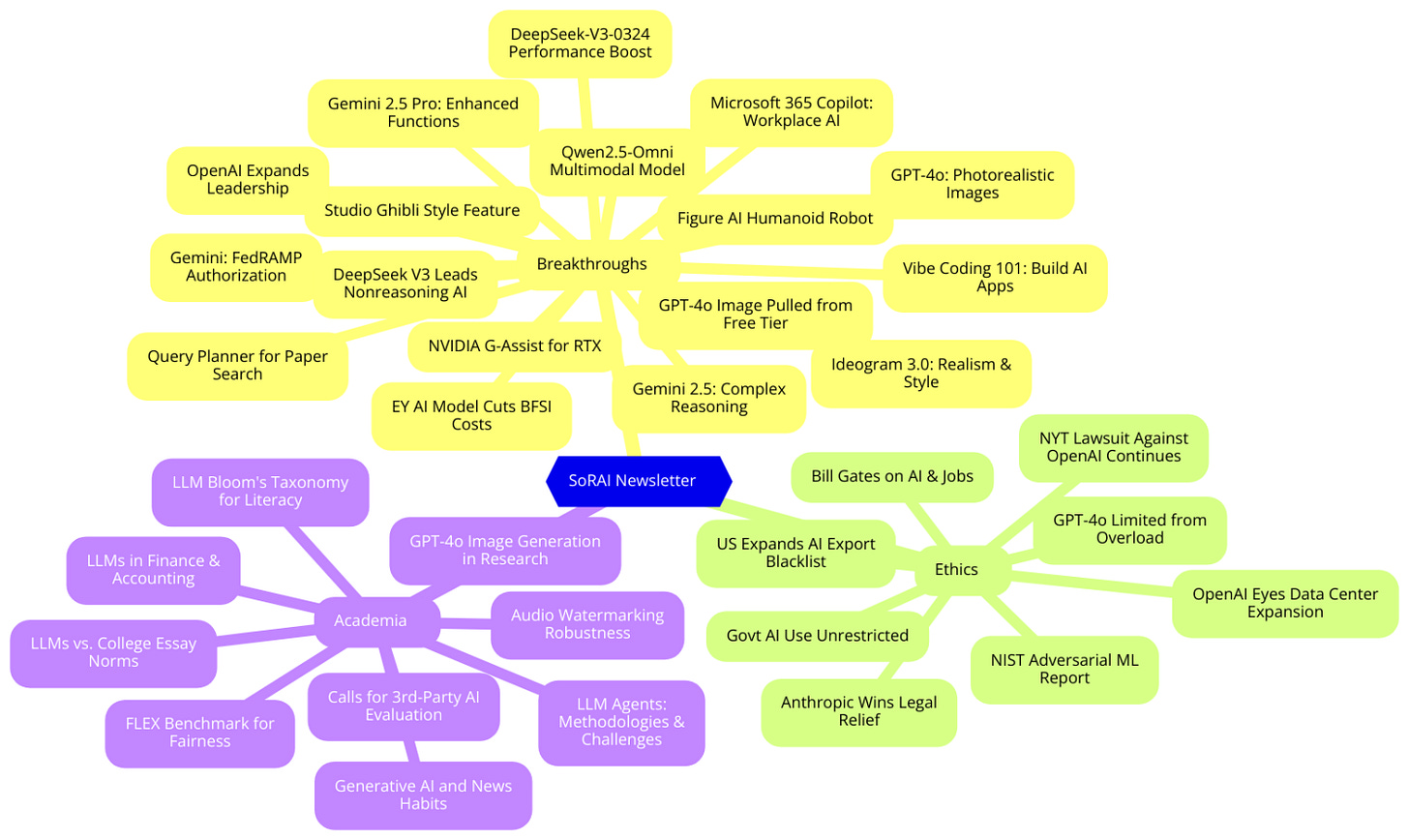

You are reading the 82nd edition of The Responsible AI Digest by SoRAI (School of Responsible AI) (formerly ABCP). Subscribe today for regular updates!

Are you ready to join the Responsible AI journey, but need a clear roadmap to a rewarding career? Do you want structured, in-depth "AI Literacy" training that builds your skills, boosts your credentials, and reveals any knowledge gaps? Are you aiming to secure your dream role in AI Governance and stand out in the market, or become a trusted AI Governance leader? If you answered yes to any of these questions, let’s connect before it’s too late! Book a chat here.

🚀 AI Breakthroughs

OpenAI Launches GPT-4o Image Generation in ChatGPT with Precise, Photorealistic Output

• OpenAI has launched 4o image generation, an image generator built natively into its GPT-4o multimodal model, enabling precise, photorealistic outputs that complement text prompts with visual elements;

• The model excels at rendering text accurately within images and at photorealism, letting users create precise, context-aware visuals for applications ranging from game development to scientific exploration;

• The model handles diverse image-generation scenarios with improved precision and relevance, though limitations remain, such as overly tight cropping and inaccurate rendering of multilingual text.

• OpenAI's new image feature captivated users with its ability to mimic art styles, resulting in numerous Studio Ghibli-style transformations on social media

• OpenAI temporarily paused the rollout of its upgraded image generation feature to free ChatGPT users a day after launch, citing a surge in demand driven by Studio Ghibli-style creations;

• The feature, powered by GPT-4o, uses an autoregressive approach to generate images and text, promising greater realism and sharper text within images;

• While OpenAI has addressed ethical considerations in its approach to AI image generation, the virality of Ghibli-inspired art prompted it to reevaluate its rollout strategy for the free tier.

OpenAI Expands Leadership Roles to Strengthen Global AI Innovation and Strategy

• OpenAI is expanding its leadership team, with leaders taking on additional responsibilities to better integrate research and product development, bridging the gap between scientific innovation and practical application;

• Mark Chen is promoted to Chief Research Officer, focusing on advancing AI capabilities and safety, while ensuring research translates swiftly into user-friendly products;

• Julia Villagra steps into the role of Chief People Officer, emphasizing global scalability and maintaining OpenAI as a prime destination for AGI-focused professionals.

Gemini 2.5 Debuts as Top AI Model, Excels in Complex Reasoning Tasks

• Google's Gemini 2.5 ranks #1 on LMArena, setting a new bar for AI models with its advanced reasoning capabilities;

• Gemini 2.5 excels in complex reasoning, leveraging enhanced post-training to analyze information and make informed, context-aware decisions

• Building on previous models, Gemini 2.5 integrates reinforcement learning and chain-of-thought techniques, enhancing its ability to solve complex problems and support sophisticated agents;

Gemini 2.5 Pro Experimental: Google's Latest Model Leveraging Enhanced Function Calling Capabilities

• Google describes Gemini 2.5 Pro Experimental as its most intelligent AI model to date, showcasing new capabilities in generative AI;

• Function calling in Gemini 2.5 Pro enables AI to connect with external tools and APIs, executing real-world actions through predefined functions;

• Key applications of function calling include augmenting knowledge, extending capabilities, and interacting with external systems for a seamless, integrated AI experience;
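The function-calling pattern described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Google's Gemini SDK: the `get_weather` function, the declaration format, and the hard-coded model response are all assumptions. The model is assumed to return a function name plus arguments; the application checks that request against its declared functions, runs the matching one, and would then pass the result back to the model.

```python
import json
from typing import Any, Callable


# Hypothetical predefined function the application exposes to the model.
def get_weather(city: str, unit: str = "celsius") -> dict[str, Any]:
    # A real tool would call an external weather API; this returns fixed data.
    return {"city": city, "temperature": 21, "unit": unit}


# Declarations describe each tool to the model (name, description, parameters).
FUNCTION_DECLARATIONS = [
    {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    }
]

# Registry mapping declared names to the callables that implement them.
REGISTRY: dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(function_call: dict[str, Any]) -> dict[str, Any]:
    """Execute a model-proposed call after checking it against the declarations."""
    name = function_call["name"]
    args = function_call.get("args", {})
    decl = next((d for d in FUNCTION_DECLARATIONS if d["name"] == name), None)
    if decl is None or name not in REGISTRY:
        return {"error": f"unknown function: {name}"}
    missing = [p for p in decl["parameters"]["required"] if p not in args]
    if missing:
        return {"error": f"missing arguments: {missing}"}
    return {"name": name, "response": REGISTRY[name](**args)}


# Simulated model output: a structured request to call a predefined function.
model_output = json.loads('{"name": "get_weather", "args": {"city": "Paris"}}')
result = dispatch(model_output)
print(result)
# {'name': 'get_weather', 'response': {'city': 'Paris', 'temperature': 21, 'unit': 'celsius'}}
# In a real loop, `result` would be sent back to the model to compose its final answer.
```

Keeping a registry of declared functions, and validating required arguments before executing anything, means the application rather than the model decides which real-world actions can actually run.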

Google's Gemini Achieves FedRAMP High Authorization, Enhancing AI Security in Public Sector

• Google’s Gemini in Workspace apps and the Gemini app have achieved FedRAMP High authorization, marking them as the first generative AI assistants for productivity suites to reach this milestone;

• FedRAMP High authorization ensures government agencies can deploy Gemini’s AI capabilities securely without costly add-ons or configurations, aligning with stringent federal security requirements;

• With AI embedded in Google Docs, Drive, and more, Gemini streamlines collaboration, automates tasks, and supports data-driven decision-making, enhancing both efficiency and citizen service delivery across government sectors;

Microsoft 365 Copilot Launches Researcher and Analyst Agents for Enhanced Workplace Reasoning

• Microsoft 365 Copilot launches Researcher and Analyst reasoning agents, capable of securely analyzing user work data and web information for on-demand expertise.

• Researcher integrates OpenAI’s deep research model and efficiently manages data from multiple platforms, providing detailed insights for strategies, trends, and market analyses.

• Analyst employs OpenAI’s o3-mini model, using chain-of-thought reasoning and Python to swiftly convert raw data into insights like demand forecasts and customer behavior analysis.

DeepSeek V3 Becomes Leading Nonreasoning AI Model, Outpacing OpenAI and Google

• DeepSeek V3 has emerged as the top nonreasoning AI model, outperforming nonreasoning models from OpenAI, xAI (Grok), and Google, according to AI benchmarking firm Artificial Analysis.

• This marks the first time an open-weights model, one whose trained weights are publicly released, has taken the top spot in Artificial Analysis's nonreasoning model rankings.

• DeepSeek V3's speed and cost-efficiency make it a practical choice for many applications, intensifying competition with American firms like OpenAI that rely on closed-source models.

Qwen2.5-Omni Model Launched for Multimodal Interactions Across Text, Images, Audio, Video

• Qwen2.5-Omni, the new flagship multimodal model, offers seamless processing of text, images, audio, and video, available on platforms like Hugging Face, ModelScope, DashScope, and GitHub

• Built on a Thinker-Talker architecture, Qwen2.5-Omni handles real-time interactions and uses a new position embedding, TMRoPE (Time-aligned Multimodal RoPE), to synchronize the timestamps of video and audio inputs

• Achieving top performance across modalities, Qwen2.5-Omni surpasses single-modality models and rivals closed-source contenders in benchmarks like OmniBench and Common Voice;

Ideogram 3.0 Enhances Generative Media with Realism and Style Consistency Features

• Ideogram 3.0 brings advanced realism, creative designs, and consistent styles in its latest release, available to users on ideogram.ai and its iOS app

• The Style References feature in Ideogram 3.0 enables creators to use reference images, offering greater control over aesthetics and enabling seamless creative workflows

• Ideogram 3.0 targets design use cases, helping small businesses generate professional-quality graphics at low cost, with tools like Batch Generation for producing designs at scale;

NVIDIA Launches Experimental G-Assist AI to Simplify GeForce RTX User Experience

• At Computex 2024, NVIDIA showcased Project G-Assist, offering a tech demo revealing AI's potential to enhance PC experiences for gamers and creators

• NVIDIA released an experimental version of Project G-Assist System Assistant for GeForce RTX desktop users through its app, with laptop support planned for a future update

• The locally-run AI assistant simplifies complex PC operations, allowing users to optimize settings, monitor key performance statistics, and control peripherals using voice or text commands;

Query Planner Enhances Efficient Paper Search With Advanced Semantic Sub-Flows

• The query planner devises execution strategies, selecting from several predefined sub-flows based on goals, such as semantic search or specific paper retrieval, ensuring efficiency and relevance

• In the specific-paper sub-flow, several strategies, including Semantic Scholar API lookups and LLM assistance, are combined, and their results are merged into a unified set of paper IDs

• In the semantic-search sub-flow, results are re-ranked by semantic relevance and by metadata criteria set through query modifiers, for example favoring recent or frequently cited papers;
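A toy version of this planner-plus-sub-flows design might look like the Python sketch below. It is only an illustration under stated assumptions: the keyword-based `plan` routing, the `Paper` fields, and the boost weights in `rerank` are hypothetical, and a real system would call an LLM and the Semantic Scholar API rather than use stubbed data.

```python
import math
from dataclasses import dataclass


@dataclass
class Paper:
    paper_id: str
    relevance: float  # semantic relevance in [0, 1], assumed precomputed upstream
    year: int
    citations: int


def plan(query: str) -> str:
    """Pick a sub-flow for the query. Stand-in keyword rule; a real planner would ask an LLM."""
    if query.lower().startswith(("find the paper", "the paper")):
        return "specific_paper"
    return "semantic_search"


def specific_paper_flow(strategy_results: list[set[str]]) -> set[str]:
    """Merge the paper-id sets returned by several lookup strategies into one unified set."""
    merged: set[str] = set()
    for ids in strategy_results:
        merged |= ids
    return merged


def rerank(papers: list[Paper], prefer_recent: bool = False,
           prefer_cited: bool = False, current_year: int = 2025) -> list[Paper]:
    """Re-rank by semantic relevance plus optional metadata boosts set by query modifiers."""
    def score(p: Paper) -> float:
        s = p.relevance
        if prefer_recent:
            # Linear boost for papers published within the last five years.
            s += 0.1 * max(0, 5 - (current_year - p.year)) / 5
        if prefer_cited:
            # Logarithmic boost so citation counts have diminishing returns.
            s += 0.05 * math.log10(1 + p.citations)
        return s
    return sorted(papers, key=score, reverse=True)


# Stubbed results standing in for real search output.
papers = [
    Paper("A", 0.80, 2015, 5000),
    Paper("B", 0.78, 2024, 40),
    Paper("C", 0.60, 2023, 10),
]
print(plan("Find the paper that introduced the Transformer"))  # specific_paper
print(specific_paper_flow([{"p1", "p2"}, {"p2", "p3"}]) == {"p1", "p2", "p3"})  # True
print([p.paper_id for p in rerank(papers, prefer_recent=True)])  # ['B', 'A', 'C']
print([p.paper_id for p in rerank(papers, prefer_cited=True)])  # ['A', 'B', 'C']
```

With `prefer_recent=True`, the slightly less relevant 2024 paper overtakes the older one; with `prefer_cited=True`, the heavily cited paper stays on top. Additive boosts are one simple way to let query modifiers shift the ranking without discarding semantic relevance.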

Figure AI Reveals Humanoid Robot with Human-Like Walking for Industrial and Home Use

• Figure AI introduces a humanoid robot with human-like walking, aiming to enhance industrial and domestic adaptability

• The walking policy was trained with reinforcement learning in simulation and deployed to real robots through sim-to-real transfer, with no additional real-world fine-tuning needed

• Figure AI says a single neural network can run across multiple robots, a scalable approach it presents as support for ramping up industrial production;

Vibe Coding 101 Course Teaches How to Build AI-Driven Apps Using Replit

• Vibe Coding 101 with Replit offers a course on building and hosting applications using an AI coding agent, focusing on efficient workflows and application architecture design

• Participants will leverage Replit's cloud environment, integrated tools, and a structured approach to develop two web applications, enhancing their skills in agent collaboration

• The course emphasizes mastering a “five-skill framework” for effective AI collaboration, including precise tasking, structured workflows, and a comprehensive understanding of coding agents.

AI-Driven Model by EY Promises 50% Cost Savings for India's BFSI Sector

• EY India has developed a customized fine-tuned LLM for the BFSI sector, promising up to 50% cost savings and improved operational efficiency

• The model offers multilingual support and enhances customer service in English and Hindi, leveraging domain-specific vocabulary and regulatory knowledge

• This BFSI-focused LLM can be deployed on-premises or in cloud environments with limited GPU needs, reducing costs and ensuring data privacy and security;

⚖️ AI Ethics

Judge's Decision Favors Anthropic in Temporary Relief Dispute With Music Publishers

• A federal judge denied music publishers' request for a preliminary injunction against Anthropic, allowing the company to keep using copyrighted lyrics in AI training while the lawsuit continues

• The ruling highlights ongoing legal debates over AI training and copyright, with implications for other companies like OpenAI and Microsoft facing similar litigation

• The case could influence how courts interpret copyright law, potentially treating AI training as transformative fair use and reshaping views on intellectual property in the tech industry.

OpenAI Temporarily Limits New ChatGPT Image Feature Due to Server Overload

• OpenAI CEO Sam Altman said demand for ChatGPT's new image-generation feature is overwhelming the company's servers, prompting temporary usage limits while OpenAI works to make the system more efficient;

• The native image-generation tool, launched this week, enables ChatGPT users to create diverse visuals, including diagrams, infographics, and custom art, directly within the platform;

• Viral anime-style images generated by ChatGPT's new feature have dominated social media, with ChatGPT's free tier soon offering users three daily image creations;

OpenAI Considering Massive Data Center Build to Boost AI Infrastructure Capacity

• OpenAI is exploring options to buy hardware and software for handling five exabytes of data, potentially establishing a massive dedicated storage data center as a solution

• The company aims to triple data center capacity to 2GW, incorporating cloud servers from partners like Microsoft, while developing infrastructure with Stargate in Abilene, Texas

• OpenAI's push to build its own capacity or negotiate better deals follows its $6.6 billion fundraise last year and comes ahead of an anticipated $40 billion funding round targeting a $340 billion valuation.

Judge Allows Copyright Lawsuit Against OpenAI by New York Times to Proceed

• A federal judge has rejected OpenAI's motion to dismiss The New York Times' copyright lawsuit, allowing the case over alleged unauthorized use of the newspaper's material to move forward

• The lawsuit accuses OpenAI of utilizing the Times' content for AI training without proper permission or compensation, raising pivotal copyright issues

• Judge Sidney Stein narrowed the scope of the case but allowed the core copyright infringement claims to proceed in the Southern District of New York;

Indian Government Departments Face No Specific Restrictions on AI Use, Minister Confirms

• The Ministry of Personnel does not track AI usage by government officials, disclosed the Minister of State for Personnel in a Rajya Sabha written reply

• Although AI presents great potential for citizen-focused applications, government departments face no specific prohibitions on employing AI-based tools for official tasks

• Cybersecurity and departmental security protocols guide the use of AI tools by government officials, as informed by relevant ministries like MeitY and the Ministry of Home Affairs.

NIST Report Establishes Taxonomy and Terms for Adversarial Machine Learning Security

• The NIST Trustworthy and Responsible AI report offers a comprehensive taxonomy and terminology for adversarial machine learning (AML), arranging key concepts in a conceptual hierarchy

• It classifies attacks by the stage of the AI system life cycle they target and by the attacker's goals, objectives, capabilities, and knowledge

• The report highlights existing challenges in AI system life cycles and suggests methods to mitigate and manage the impact of adversarial attacks.

U.S. Expands Export Blacklist Targeting Dozens of Chinese AI Tech Firms

• The U.S. Department of Commerce added 80 organizations, primarily Chinese, to its export blacklist, limiting their access to U.S. technology without government permits;

• The blacklist targets companies allegedly acting against U.S. national security interests, focusing on restricting China's exascale computing and quantum technology advancements;

• China's foreign ministry condemned the U.S. export restrictions, urging an end to broad national security claims, following the inclusion of firms tied to Huawei and Inspur Group.

Bill Gates Discusses Job Security in a Future Dominated by AI Chatbots

• Since its debut in 2022, ChatGPT has significantly influenced the integration of AI in daily work, with tools like Gemini and Copilot becoming essential in various professional settings;

• Concerns persist among professionals regarding AI's potential to replace jobs, as the technology becomes increasingly integrated across multiple sectors around the globe;

• Bill Gates recently emphasized that while AI might replace many human tasks, identifying future-proof skills and sectors remains vital as AI adoption continues to rise worldwide.

🎓AI Academia

OpenAI Expands GPT-4o Capabilities with Advanced Native Image Generation Features

• OpenAI's 4o image generation is built natively into GPT-4o, which enables advanced photorealism and precise rendering of text within images.

• Unlike diffusion models such as DALL·E, 4o image generation uses an autoregressive approach, letting it edit and transform images in fine detail, which OpenAI pairs with enhanced safety protocols.

• The system incorporates enhanced instruction-following for image creation and transformation, elevating its functionality but also introducing unique safety challenges and risks.

Survey Analyzes Methodologies, Applications, and Challenges of Large Language Model Agents

• A comprehensive survey examines large language model agents, framing their dynamic adaptation and goal-driven behavior as a significant step toward artificial general intelligence;

• The study categorizes LLM agents via a methodology-centered taxonomy, emphasizing their construction, collaboration, and evolution to unify fragmented research in this rapidly evolving AI field;

• Identifying key developments like unprecedented reasoning capabilities and sophisticated memory architectures, the paper offers insights into future trends and applications of LLM agents across various domains.

Generative AI's Impact on News Consumption Analyzed Through Design Fictions

• A cross-disciplinary team in Finland is critically analyzing the impact of generative AI on news consumption, using design fictions as a novel research method for exploring digital media dynamics;

• Researchers from multiple Finnish universities are collaborating to investigate the societal implications of AI-driven news, focusing on how these technologies influence information consumption and public discourse;

• The study utilizes a unique blend of technological and social science approaches to assess the role of generative AI in shaping the future landscape of news media and consumer behavior.

Study Exposes Challenges in Aligning Large Language Models with College Essay Norms

• A recent study finds that large language models align poorly with human writing in college admission essays, with significant linguistic differences between LLM-generated and human-authored texts;

• Attempts to steer language models using demographic prompts proved ineffective, failing to mimic the linguistic patterns of different sociodemographic groups in essay writing;

• The homogenization of LLM-generated texts, regardless of prompting, raises concerns about their suitability in high-stakes contexts like college admissions.

FLEX Benchmark Tests Large Language Models for Fairness Under Adversarial Conditions

• The FLEX benchmark critically evaluates fairness robustness in Large Language Models by employing adversarial prompts to reveal and test intrinsic biases under extreme scenarios;

• Comparative studies with existing benchmarks show that FLEX identifies potential fairness risks in LLMs that traditional evaluations may underestimate, emphasizing the need for stringent safety measures;

• FLEX benchmark data and tools are publicly available on GitHub for further research and development, supporting transparency and collaborative progress.

Study Proposes LLM-Driven Bloom's Taxonomy to Enhance Student Information Literacy Skills

• A novel framework enhancing Bloom's Educational Taxonomy has been developed to improve information literacy, leveraging Large Language Models to support and assess students in problem-solving activities;

• The proposed framework divides cognitive abilities for using LLMs into Exploration & Action and Creation & Metacognition stages, further broken into seven phases promoting structured learning;

• The framework addresses a key gap in evaluating LLM usage, offering theoretical and practical support for students navigating the complexities of modern information retrieval systems.

Comprehensive Study Examines Robustness of Audio Watermarking Against Removal Attacks

• None of the evaluated audio watermarking schemes withstood all tested distortions, exposing significant vulnerabilities in current efforts to protect AI-generated content;

• A comprehensive study tested 22 audio watermarking schemes against 109 configurations of watermark removal attacks, uncovering major weaknesses in their claimed robustness;

• Audio watermarking remains a critical tool for combating copyright issues and deepfake threats, yet its practical deployment is hindered by the need for increased resilience to real-world attacks.

Study Evaluates Consistency in Large Language Models for Finance and Accounting Tasks

• A study analyzed the consistency and reproducibility of large language model (LLM) outputs in finance and accounting tasks, generating 3.4 million outputs across diverse source texts

• Key findings include near-perfect reproducibility in binary classification and sentiment analysis tasks, with more complex tasks displaying greater variability

• Despite some inconsistencies, LLM outputs maintain high agreement, outperform human experts, and support robust downstream statistical inferences, easing concerns about "G-hacking" (selectively reporting favorable outcomes from multiple generative AI runs) in published research.
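One simple way to quantify the kind of run-to-run consistency the study measures is sketched below in Python. The metrics are assumptions for illustration, not the paper's exact methodology: `fully_reproducible` is the share of items where every repeated run returned the same label, and `mean_majority_agreement` is the average share of runs matching each item's most common label. The sample labels are made up.

```python
from collections import Counter


def consistency_report(runs_per_item: dict[str, list[str]]) -> dict[str, float]:
    """Summarize consistency of repeated LLM outputs.

    `runs_per_item` maps an item id to the labels returned by N repeated runs
    of the same prompt on that item (hypothetical data format).
    """
    n_items = len(runs_per_item)
    fully_identical = 0
    agreement_sum = 0.0
    for labels in runs_per_item.values():
        top_count = Counter(labels).most_common(1)[0][1]
        if top_count == len(labels):
            fully_identical += 1  # every run produced the same label
        agreement_sum += top_count / len(labels)  # share of runs matching the majority label
    return {
        "fully_reproducible": fully_identical / n_items,
        "mean_majority_agreement": agreement_sum / n_items,
    }


def majority_vote(runs_per_item: dict[str, list[str]]) -> dict[str, str]:
    """Aggregate repeated runs into one label per item by taking the most common label."""
    return {item: Counter(labels).most_common(1)[0][0] for item, labels in runs_per_item.items()}


# Made-up sentiment labels from five repeated runs per document.
runs = {
    "doc1": ["positive"] * 5,
    "doc2": ["negative"] * 5,
    "doc3": ["positive", "positive", "neutral", "positive", "neutral"],
    "doc4": ["neutral"] * 5,
}
report = consistency_report(runs)
print(report)  # fully_reproducible is 0.75; mean_majority_agreement is about 0.9
print(majority_vote(runs)["doc3"])  # positive
```

Reporting every run, or a majority vote over them, rather than a single hand-picked generation is one straightforward guard against the selective reporting that "G-hacking" refers to.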

Calls Mount for Robust Third-Party Evaluation of AI Systems to Enhance Safety

• The widespread deployment of general-purpose AI systems presents unique risks, but current frameworks for flaw reporting are insufficient compared to those in software security fields;

• Experts from various domains recommend standardized AI flaw reports and researcher guidelines to streamline flaw submission and management in general-purpose AI systems;

• Proposals include broad flaw disclosure programs for AI providers and improved infrastructure to ensure effective, coordinated flaw reporting across the AI sector.

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.