Is Llama 3.1 the Most Powerful Open AI Model Ever? (Beats GPT-4)

Stability AI launches Stable Video 4D; paraplegic athlete Kevin Piette carries the Olympic torch using an exoskeleton; Colin Kaepernick enters the AI space with Lumi, an AI storytelling platform for creators.

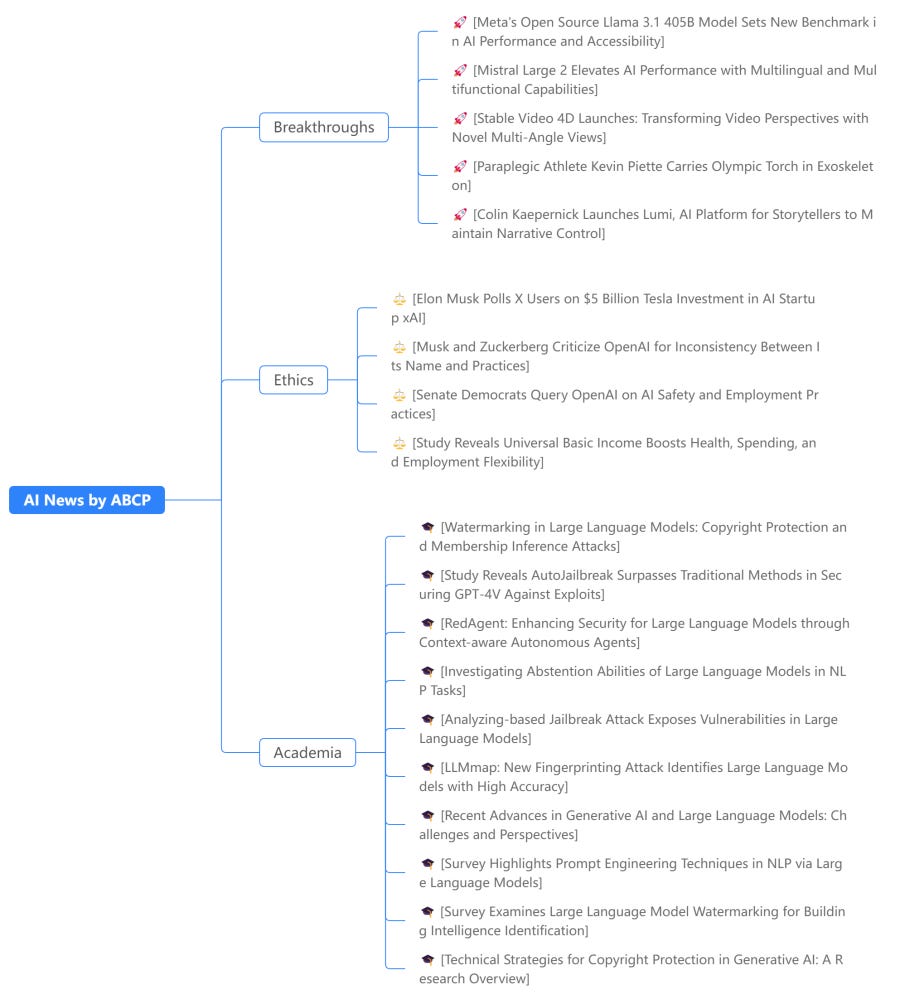

Today's highlights:

🚀 AI Breakthroughs

Meta's Open Source Llama 3.1 405B Model Sets New Benchmark in AI Performance and Accessibility

• Meta releases Meta Llama 3.1 405B, the largest openly available foundation model with advanced multilingual and reasoning capabilities

• Upgraded 8B and 70B Llama models now support use cases such as long-form text summarization and coding assistance; the models are available for download on llama.meta.com and Hugging Face

• Meta evaluated Llama 3.1 on over 150 benchmarks, where it showed performance competitive with leading models such as GPT-4 and Claude 3.5 Sonnet.

Mistral Large 2 Elevates AI Performance with Multilingual and Multifunctional Capabilities

• Mistral Large 2, a new 123-billion-parameter model, excels at multilingual processing and at code generation across 80+ programming languages

• The model is released under the Mistral Research License for academic and non-commercial use; business applications require a separate commercial license

• Mistral Large 2 is available through major cloud platforms, including Google Cloud Platform, Azure AI Studio, and Amazon Bedrock, for broader accessibility.

Stable Video 4D Launches: Transforming Video Perspectives with Novel Multi-Angle Views

• Stable Video 4D, developed by Stability AI, lets users upload a single video and generate eight dynamic novel-view videos from it

• By producing consistent, detailed views across multiple angles, the model can enhance both video analysis and video creation

• Stability AI positions Stable Video 4D as a pioneering tool for fields such as gaming, VR, and video production.

Paraplegic Athlete Kevin Piette Carries Olympic Torch in Exoskeleton

• French para-athlete Kevin Piette, once a tennis star, became an exoskeleton 'pilot' after a life-altering accident left him paraplegic

• Video of Piette carrying the Olympic torch in the exoskeleton has gone viral, amassing over 2 million views and showcasing his journey and resilience

• Through his public appearances with the exoskeleton, Piette inspires global audiences and promotes both sports participation and technological advances for people with disabilities.

Colin Kaepernick Launches Lumi, AI Platform for Storytellers to Maintain Narrative Control

• Colin Kaepernick launches AI storytelling platform Lumi, focusing on empowering creators to maintain ownership over their narratives

• Lumi provides tools for story creation and distribution, starting with features tailored for manga illustrators, including AI-generated character imagery

• With $4 million in seed funding, Lumi emerges from stealth, planning to expand into video and offer comprehensive publishing services.

⚖️ AI Ethics

Elon Musk Polls X Users on $5 Billion Tesla Investment in AI Startup xAI

• Elon Musk polled X users on whether Tesla should invest $5 billion in his AI firm, xAI, and 70.2% of respondents voted in favor

• The poll comes as Tesla faces its lowest profit margins in over five years, following price cuts and increased AI spending

• xAI, positioned as a rival to OpenAI and Google, recently raised $6 billion at a $24 billion valuation.

Musk and Zuckerberg Criticize OpenAI for Inconsistency Between Its Name and Practices

• Elon Musk and Mark Zuckerberg criticize OpenAI for being a "closed" AI firm despite its name suggesting openness

• Zuckerberg suggests that OpenAI's $13 billion partnership with Microsoft conflicts with the firm's original "open" ethos

• Despite criticisms, Zuckerberg praises Sam Altman's leadership at OpenAI, acknowledging his adept handling of public scrutiny.

Senate Democrats Query OpenAI on AI Safety and Employment Practices

• Five Senate Democrats question OpenAI CEO Sam Altman on AI safety and employment practices in a detailed letter

• The senators ask OpenAI to commit to securing its AI technology, citing national security concerns

• The inquiry, which follows concerns over internal disputes and cybersecurity incidents at OpenAI, seeks details of the company's safety protocols.

Study Reveals Universal Basic Income Boosts Health, Spending, and Employment Flexibility

• OpenResearch study shows UBI recipients experience increased healthcare usage, especially in dental and specialist care

• The findings indicate that UBI changed spending patterns, increasing spending on basic needs and financial support for others

• UBI also affected employment choices, giving recipients more freedom to be selective about jobs and to pursue entrepreneurial activities.

🎓 AI Academia

Watermarking in Large Language Models: Copyright Protection and Membership Inference Attacks

• Researchers at the University of Maryland investigate watermarking LLMs to prevent the generation of copyrighted content, finding that it significantly reduces such output

• Watermarking, however, lowers the success rate of Membership Inference Attacks (MIAs), making it harder to detect copyrighted material in the training data

• The researchers propose an adaptive technique to improve MIA effectiveness under watermarking, underscoring the need for continued innovation in managing the legal risks of LLMs.

Study Reveals AutoJailbreak Outperforms Traditional Methods at Exposing GPT-4V Vulnerabilities

• AutoJailbreak, developed at Huazhong University of Science and Technology and Lehigh University, achieves a 95.3% Attack Success Rate on GPT-4V

• The technique uses prompt optimization and in-context learning to red-team GPT-4V's multimodal capabilities, including its facial recognition functions

• The study also introduces an optimization method with early stopping that reduces the time and computational cost of testing AI vulnerabilities.

RedAgent: Enhancing Security for Large Language Models through Context-aware Autonomous Agents

• RedAgent enhances red teaming of LLMs by autonomously generating context-specific jailbreak prompts that adapt effectively to varied scenarios

• The method can identify security vulnerabilities in LLM applications, reportedly uncovering up to 60 severe issues with only a small number of queries

• Experiments demonstrate that RedAgent can breach most black-box LLMs within just five queries, doubling the efficiency of previous red teaming efforts.

Investigating Abstention Abilities of Large Language Models in NLP Tasks

• Large Language Models (LLMs) need effective Abstention Ability (AA), the capacity to decline to answer when uncertain, to improve reliability, especially in high-stakes domains such as law and medicine

• The paper introduces a new evaluation dataset, Abstain-QA, which quantifies AA in LLMs through diverse sets of multiple-choice questions

• Three different techniques are investigated for improving LLMs’ AA: Strict Prompting, Verbal Confidence Thresholding, and Chain-of-Thought.

Analyzing-based Jailbreak Attack Exposes Vulnerabilities in Large Language Models

• A research team analyzed jailbreak attacks on Large Language Models (LLMs), uncovering prevalent security vulnerabilities

• They introduced an Analyzing-based Jailbreak (ABJ) method, which exploits LLMs' analytical capabilities and achieves a 94.8% Attack Success Rate

• The study emphasized the importance of enhancing LLM security mechanisms to mitigate risk and prevent misuse.

LLMmap: New Fingerprinting Attack Identifies Large Language Models with High Accuracy

• LLMmap introduces a pioneering fingerprinting attack specifically targeting applications that integrate Large Language Models (LLMs)

• The tool identifies the specific LLM in use with over 95% accuracy in as few as 8 interactions and remains robust across various configurations

• Once the underlying LLM is identified, attackers can exploit model-specific vulnerabilities, potentially compromising the application.

Recent Advances in Generative AI and Large Language Models: Challenges and Perspectives

• Recent advancements in Generative AI and Large Language Models are transforming Natural Language Processing, enabling unprecedented capabilities across various domains

• The study identifies critical research gaps in Generative AI, such as issues of bias and fairness, interpretability, and data privacy, guiding future research efforts

• Enhanced language understanding, machine translation, and other NLP applications are highlighted, setting the stage for ethical and impactful technology integration.

Survey Highlights Prompt Engineering Techniques in NLP via Large Language Models

• A survey by NYU explores 39 different prompt engineering methods in LLMs for various NLP tasks

• Prompt engineering avoids the need for extensive parameter retraining by leveraging the knowledge already embedded in LLMs

• The study analyzes the impact of these techniques on 29 NLP tasks using data from 44 recent research papers.

Survey Examines Large Language Model Watermarking for Building Intelligence Identification Systems

• As Large Language Models face growing security risks, watermarking is proving essential for intellectual property and data protection

• The study proposes a mathematical framework to enhance LLM identity recognition systems using watermarking technology

• The survey identifies gaps in current watermarking applications and emphasizes the need for a comprehensive approach that goes beyond traditional algorithms.

Technical Strategies for Copyright Protection in Generative AI: A Research Overview

• The study provides a comprehensive overview of copyright challenges in Generative AI, focusing on both data and model copyrights

• Techniques for safeguarding data copyrights and methods to prevent model theft are critically analyzed in the research

• Future directions for enhancing copyright protection in the AI sector are discussed, highlighting the importance of ethical advancement.

About us: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!