From China to the U.S.: The Global Race for AI Literacy Begins NOW!

Is 2025 the turning point for global AI literacy? The U.S. signs an Executive Order on AI education, China makes AI classes mandatory, and LinkedIn has identified AI literacy as the top skill for 2025.

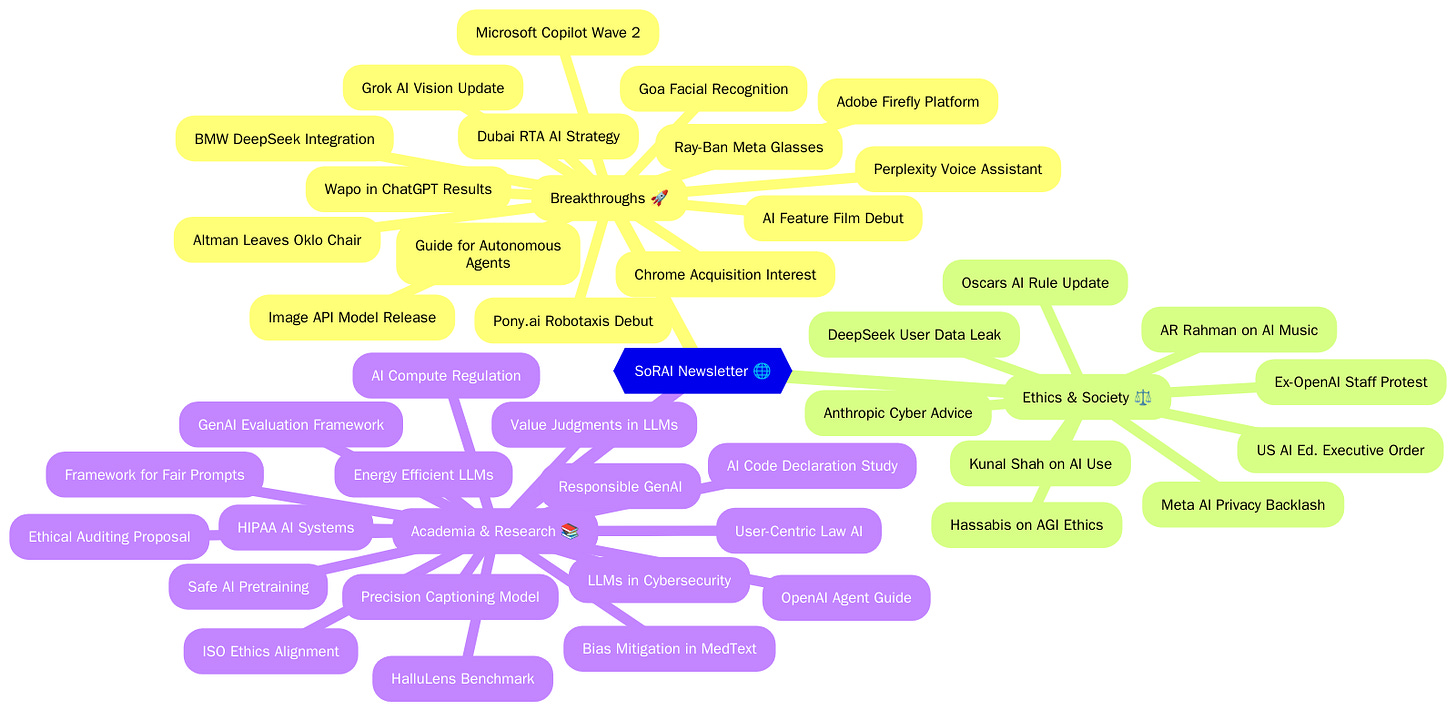

Today's highlights:

You are reading the 89th edition of The Responsible AI Digest by SoRAI (School of Responsible AI, formerly ABCP). Subscribe today for regular updates!

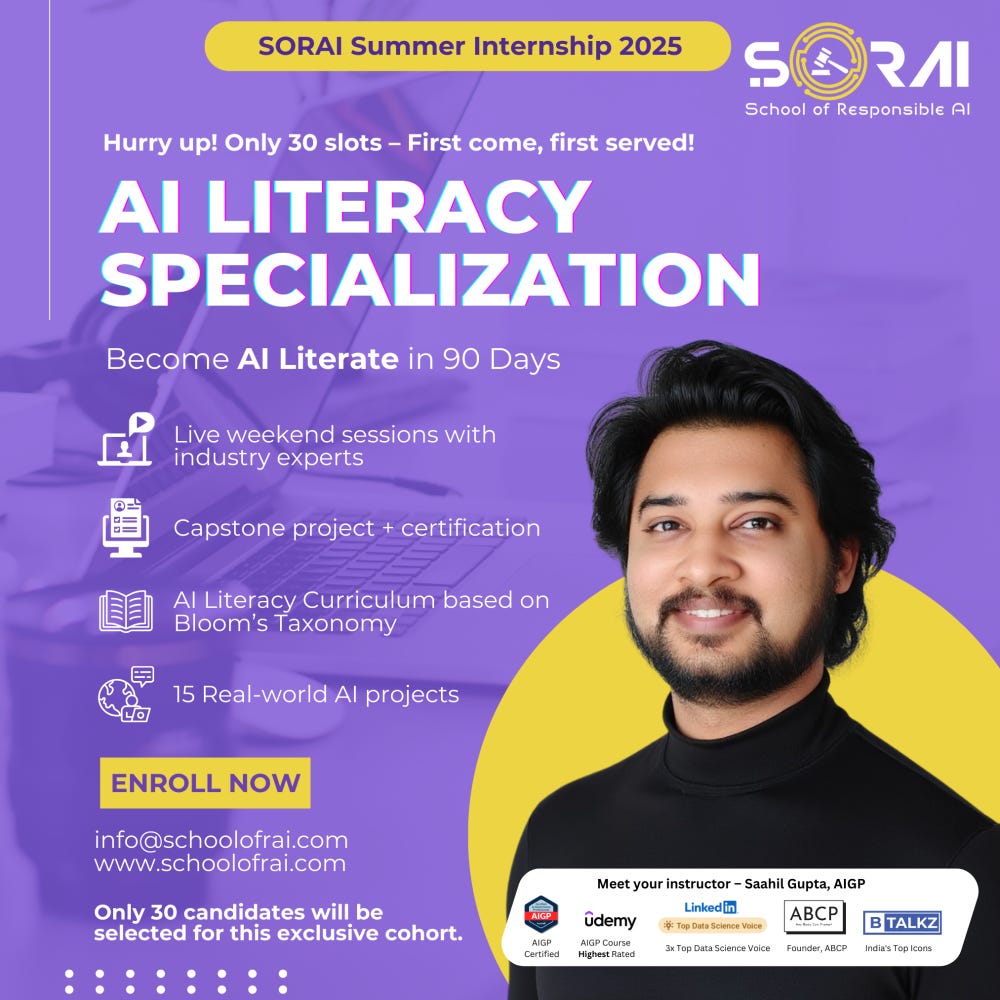

Become AI literate in just 90 days with our exclusive, hands-on program running from May 10th to August 3rd. Designed for professionals from any background, this ~10 hours/week experience blends live expert sessions, practical assignments, and a capstone project. With AI literacy now the #1 fastest-growing skill on LinkedIn and AI roles surging post-ChatGPT, there’s never been a better time to upskill. Led by Saahil Gupta, AIGP, this cohort offers a deeply personalized journey aligned to Bloom’s Taxonomy, plus lifetime access, a 100% money-back guarantee, and only 30 seats. Last date to register: 30th Apr'25 (Registration Link)

🚀 AI Breakthroughs

White House Executive Order Embraces AI Integration in American Education System

• The White House issued a forward-looking executive order promoting AI integration into U.S. education to ensure leadership in the global AI revolution

• A new task force will establish partnerships with AI industry groups and nonprofit organizations to develop resources for AI literacy in K-12 education

• The order prioritizes professional development for teachers, aiming to equip them with AI skills to enhance educational outcomes and reduce administrative burdens.

OpenAI Releases Guide for Building Autonomous LLM Agents with Practical Insights

• OpenAI has introduced a comprehensive guide on building AI agents, noting that significant advances in reasoning, multimodality, and tool use are what make these systems possible

• The guide distills insights from various deployments, offering product and engineering teams frameworks for identifying use cases and designing reliable agent logic and orchestration

• Emphasizing safe and predictable operation, the guide provides best practices for building agents that can perform workflows independently, aiming to augment traditional software solutions.

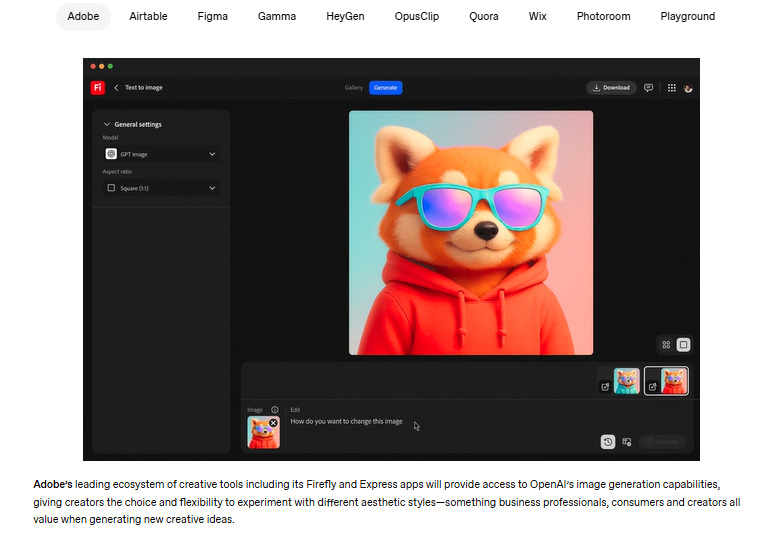

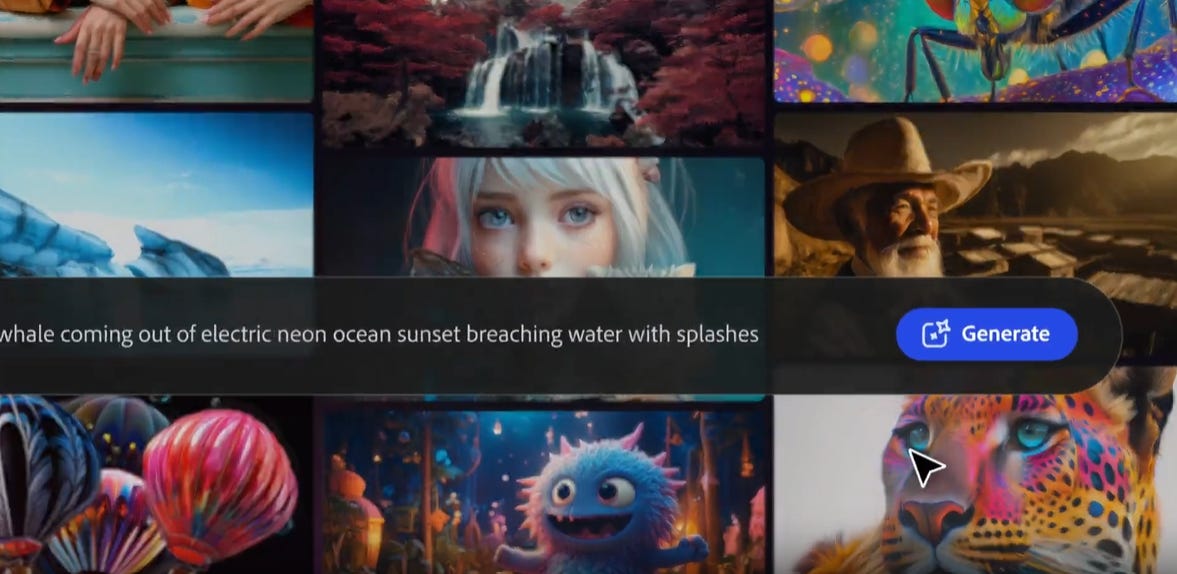

OpenAI Expands Image Generation Capabilities with New GPT-Image-1 API Model Release

• OpenAI launches gpt-image-1 via API, enabling high-quality image generation functionality for developers and businesses to integrate into various platforms

• Leading companies like Adobe, Airtable, and Figma are leveraging gpt-image-1 to enhance creative workflows, marketing, and design capabilities with professional-grade image generation features

• gpt-image-1 integration showcases applications across industries such as e-commerce, enterprise software, and gaming, with safety guardrails ensuring moderation and adherence to content guidelines.

The Washington Post's Journalism Now Accessible in OpenAI's ChatGPT Search Results

• The Washington Post partners with OpenAI to feature its journalism in ChatGPT search responses, aiming to make high-quality news more accessible to users worldwide

• As part of the partnership, ChatGPT will show summaries, quotes, and direct links to The Post's articles, ensuring easy access to reliable information across various topics

• Leveraging AI tools, The Post continues to expand its journalism's reach, following initiatives like Ask The Post AI and Climate Answers to further engage and inform audiences.

OpenAI Expresses Interest in Acquiring Google Chrome Amid Antitrust Proceedings

• During Google's antitrust trial in Washington, an OpenAI executive said the company would be interested in acquiring Chrome if antitrust remedies force Alphabet to sell the browser

• Prosecutors warned that Google could use its search monopoly to gain an edge in AI, with its AI products potentially steering more users to its search engine

• Google has proposed non-exclusive agreements with manufacturers like Samsung and carriers like AT&T, while the DOJ argues for more stringent measures to end the search monopoly.

Microsoft 365 Copilot Wave 2 Empowers New Era of Human-Agent Collaboration

• Microsoft announces the Microsoft 365 Copilot Wave 2 spring release, featuring advancements like adaptive memory, reasoning agents, and enterprise-level personalization, emphasizing cutting-edge human-agent collaboration;

• The newly launched Copilot Notebooks and AI-powered Search offer business users instant access to insights, design tools, and context-aware results, enhancing productivity across organizational platforms;

• With the introduction of the Agent Store, users can access a variety of prebuilt and custom agents, including the new Researcher and Analyst agents, setting the stage for intelligence-driven teamwork.

Perplexity Launches iOS Voice Assistant Featuring Web Browsing and Multi-App Actions

• Perplexity launched a new iOS voice assistant with web browsing and multi-app actions, streamlining tasks like reservations, emails, and media playback via an app update

• Users can customize the Action Button on iPhone 15 Pro and newer models for easy access, a feature not previously seen in AI apps on Apple devices

• Talks with Motorola and Samsung indicate potential integration of Perplexity as a default assistant, intensifying competition as Apple faces criticism over its less advanced AI features.

Adobe Firefly Revolutionizes Creative AI with Unified Platform and Mobile App Launch

• At Adobe MAX London, Adobe unveiled the latest Firefly release, integrating AI-powered tools for image, video, audio, and vector generation into a single cohesive platform;

• The new Firefly features enhanced models, expanded creative options, and unprecedented control, enabling brands like Deloitte and Pepsi to streamline workflows and innovate personalized experiences;

• The Firefly mobile app, coming soon to iOS and Android, promises on-the-go creativity with seamless Creative Cloud integration for generating images and videos from anywhere.

Sam Altman Steps Down as Oklo Chair to Facilitate AI Energy Partnerships

• Sam Altman steps down as chair of Oklo after facilitating its public debut via a merger with AltC Acquisition Corp. in May 2024

• Oklo seeks increased flexibility to partner with OpenAI and other hyperscalers, capitalizing on demand for power in the data center industry

• Oklo's Caroline Cochran emphasizes the ongoing synergy with Sam Altman to drive scalable clean energy, with potential collaborations with leading AI firms, including OpenAI.

Elon Musk's Grok AI Gains Real-Time Vision and Multilingual Chat Enhancements

• Grok AI's new vision feature enables real-time analysis of images and visuals when users point their smartphone cameras at real-world objects via the Grok app

• Multilingual audio support and real-time search are now available in Grok’s voice mode, enhancing user interaction for subscribers of the SuperGrok plan

• Grok AI's memory feature offers transparent conversation retention, allowing users to review and control what the AI remembers, with plans for a future 'forget' option on Android.

Ray-Ban Meta Smart Glasses to Launch in India with AI Features Soon

• Ray-Ban Meta glasses are set to launch in India soon, offering hands-free capabilities like real-time information queries and media control, after being launched globally in September 2023

• These glasses support multilingual live translations allowing seamless conversations in multiple languages and integrate features like sending Instagram messages or playing music through popular streaming services

• New styles and lens options, including Skyler Shiny Chalky Gray, have been introduced, while real-time language translation can operate offline with pre-downloaded language packs.

Dubai RTA's AI Strategy 2030 To Enhance Productivity and Customer Satisfaction

• Dubai’s RTA unveils its AI Strategy 2030, targeting a travel time reduction of up to 30% through smart traffic solutions and enhanced pedestrian mobility systems

• By leveraging AI, the strategy aims to boost employee productivity by 25-40% and cut operational costs by 10-20% with AI-powered asset management and predictive maintenance

• The plan includes utilising big data, aiming to enhance customer satisfaction by 35% and improve partner compliance by 30-50%, solidifying RTA's lead in AI-driven transformation.

Pony.ai Debuts Advanced Autonomous Driving System and New Robotaxi Models at Shanghai Auto Show

• Pony.ai unveiled its seventh-generation autonomous driving system and Robotaxi models at the Shanghai Auto Show, emphasizing advancements in safety, cost efficiency, and technology integration;

• The new system achieves a 70% reduction in production costs, including an 80% reduction in autonomous driving computing costs and a 68% cut in LiDAR expenses, improving profitability;

• Collaboration with Toyota, BAIC, and GAC led to the introduction of three new Robotaxi models, including the Toyota bZ4X, strengthening Pony.ai's strategic industry partnerships and market presence.

BMW to Integrate DeepSeek AI into New Vehicles in China This Year

• BMW is set to integrate AI technology from the Chinese startup DeepSeek into its new vehicle models in China, starting later this year, as announced at the Shanghai auto show on Wednesday

• BMW's partnership with DeepSeek aims to capitalize on significant advancements in AI technology occurring within China, reflecting a strategic focus on innovation and collaboration in the region

• The integration of DeepSeek AI into BMW's vehicles is poised to enhance drivers' experiences by introducing advanced features powered by the latest artificial intelligence developments.

Goa Implements AI-Powered Facial Recognition System to Enhance Panchayat Accountability

• Goa Electronics Limited (GEL) has been selected as the nodal agency responsible for executing the AI-powered facial recognition attendance system across all panchayat offices in the state

• This innovative initiative aims to replace outdated manual and biometric systems with real-time AI-powered monitoring, accommodating approximately 700 employees and ensuring staff accountability and timely services

• The advanced system is set to function even in areas with connectivity issues, aligning with Digital India goals to enhance governance through tech-driven transparency and citizen-centric administration.

Kannada Director Narasimha Murthy Claims 'Love You' as First AI-Generated Feature Film

• Kannada filmmaker Narasimha Murthy ventures into groundbreaking territory with Love You, claimed as the world's first AI-generated feature film, using over 30 AI tools with a software budget of nearly ₹10 lakh;

• Despite its innovative creation, Love You faces challenges such as character inconsistency and emotional expression limitations, as noted by regional censor officials during review;

• AI specialist Nuthan highlighted the rapid pace of AI technology development, stating that the same film could now be vastly improved, underscoring AI's evolving capabilities in filmmaking.

⚖️ AI Ethics

The Academy Updates Oscar Rules to Address Role of Generative AI in Film

• The Academy of Motion Picture Arts and Sciences has included generative AI in its Oscars rules, stating its use alone doesn't affect a movie's nomination chances, but its purpose might

• The Academy emphasizes the importance of human creative authorship in judging films involving AI, leaving AI's impact on each movie up to the branches' discretion

• A new rule mandates that Oscar voters must watch all nominated films in a category to vote, but adherence remains based on an honor system without verification.

WhatsApp Users Frustrated with Meta AI; Many Consider Quitting Due to Privacy Concerns

• WhatsApp users are challenging Meta's recent AI chatbot integration, expressing frustration over its non-removable nature despite assurances that the feature is optional;

• The feature, powered by Meta's Llama 4 language model, is not uniformly available yet, leading to inconsistencies in user experience and further criticism from frustrated users;

• Some privacy-focused users are contemplating a switch to alternatives like Signal, criticizing Meta for allegedly prioritizing data collection over user choice and clarity in feature implementation.

AI Pioneer Demis Hassabis Explores Next Steps Towards Artificial General Intelligence at DeepMind

• Nobel laureate Demis Hassabis is leading the charge towards artificial general intelligence (AGI), envisioning AI systems that match human versatility and enhance everyday life within the next decade;

• DeepMind's breakthroughs, such as solving complex protein structures, have revolutionized areas like drug development and health, promising to drastically reduce time frames in scientific research;

• Hassabis highlighted AI’s potential to end disease and usher in an era of "radical abundance" by accelerating scientific discovery. He also warned of two major risks, misuse by bad actors and the challenge of keeping powerful AI aligned with human values, and stressed the urgent need for global cooperation and ethical guardrails.

Kunal Shah Urges Efficient AI Use Amid Growing Non-Productive ChatGPT Engagement

• CRED CEO Kunal Shah says 50-60% of ChatGPT users engage in non-productive activities such as companionship and entertainment, and urges people to use their time efficiently by weighing it against their per-hour income

• New data suggests a shift in generative AI usage towards therapy and companionship over previous work-related tasks, reflecting evolving user needs in 2025

• AI is advancing rapidly and reshaping daily life; financial literacy and practical AI skills for young people were emphasized as ways to stay relevant in these transformative times.

AR Rahman Critiques AI Music: Urges Ethical Guidelines Amidst Growing Concerns

• AR Rahman criticizes the rise of AI-generated music, comparing some outputs to "filthy" creations and urging the need for ethical controls to prevent chaos in the industry

• He acknowledges AI's potential to empower creators but warns against its misuse, calling for ethical rules in software and the digital realm akin to the ethics that govern society

• Despite past criticism for his tech reliance, Rahman defends it as an enhancement, highlighting his support for hundreds of live musicians in his film projects.

Former OpenAI Employees Urge Halt of Restructuring Threatening Nonprofit Mission

• A coalition of experts, including former OpenAI employees, opposes the company's shift from a nonprofit, citing threats to its mission of benefiting humanity with AGI

• The coalition's open letter to the Attorneys General claims restructuring into a Public Benefit Corporation jeopardizes nonprofit governance and public accountability safeguards

• Concerns include potentially uncapped profits and diminished board independence, which could put shareholder returns ahead of OpenAI's original mission-focused charter commitments.

South Korea Accuses DeepSeek of Unauthorized User Data and AI Prompt Transfers

• South Korea's data protection authority accused DeepSeek of transferring user data and AI prompts to entities in China and the U.S. without consent during its January launch.

• After DeepSeek acknowledged some lapses in data protection, South Korea suspended new downloads of the app in February due to violations of data privacy standards.

• The authority recommended DeepSeek delete AI prompt content sent to Beijing Volcano Engine and establish legal frameworks for international data transfers.

Anthropic Predicts AI Virtual Employees Within a Year, Urges Cybersecurity Overhaul

• Anthropic anticipates AI-powered virtual employees will enter corporate networks within a year, raising urgent cybersecurity challenges as these AI identities gain roles and autonomy in companies;

• The introduction of AI employees demands a reevaluation of security strategies to prevent potential breaches, as managing these digital identities becomes pivotal for companies;

• With AI employees nearing reality, industry attention is shifting towards developing security solutions for overseeing AI activities and redefining account classifications for better integration.

🎓 AI Academia

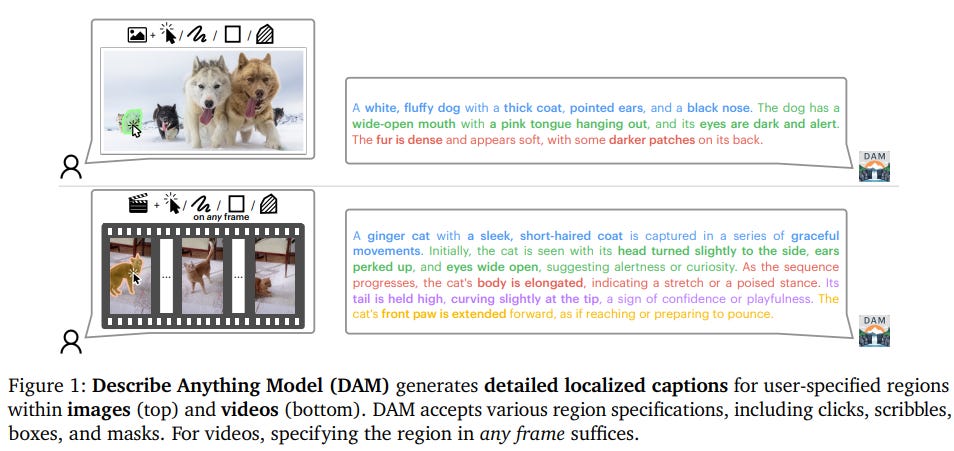

Detailed Localized Captioning Model Enhances Image and Video Descriptions with Precision

• The Describe Anything Model (DAM) revolutionizes vision-language models by offering detailed, localized image and video captioning, capturing both local details and global context effectively

• DAM supports diverse region specifications like clicks, scribbles, and masks, enabling users to generate precise captions for images and videos with unparalleled localization capabilities

• Using a semi-supervised learning-based data pipeline, DAM extends training to unlabeled web images and achieves state-of-the-art results on multiple benchmarks, including evaluation that does not rely on reference captions.

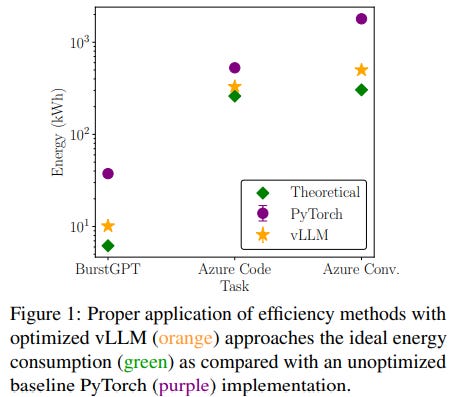

Efficiency Optimizations Could Cut Energy Use of Large Language Models by Up to 73%

• Recent analysis highlights the soaring energy demands of large language models, emphasizing the substantial impact of inference workloads on computational resources in real-world NLP and AI environments;

• Researchers show that inference efficiency optimizations can cut energy consumption by up to 73%, and that naïve estimates based on metrics like FLOPs or GPU utilization underestimate real-world energy use;

• Projections indicate that data centers could account for 9.1% to 11.7% of total U.S. energy demand by 2030, underscoring the need for energy-efficient AI infrastructure design.

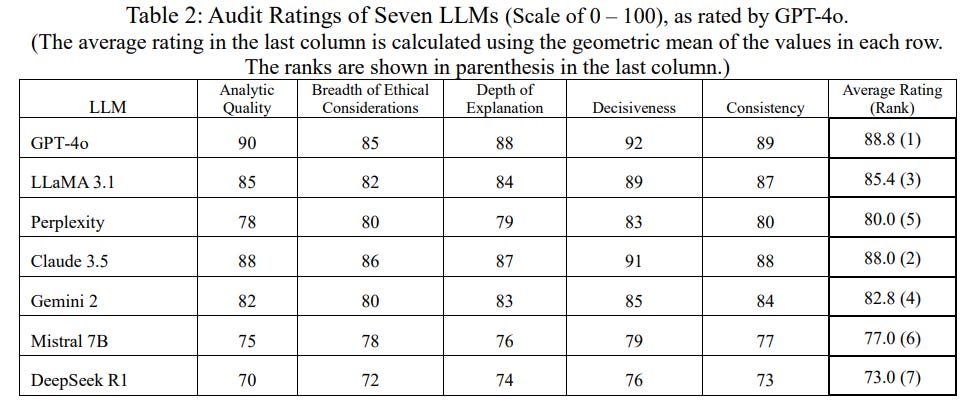

New Method Proposed for Ethical Auditing of Generative AI Models in Diverse Contexts

• With generative AI models entering high-stakes areas, there is a growing need for robust methods to evaluate their ethical reasoning

• A study introduces a five-dimensional audit model to assess LLMs, focusing on analytic quality, ethical considerations, explanation depth, consistency, and decisiveness

• The research shows that while AI models often agree on ethical decisions, they differ in explanatory rigor, highlighting the need for better evaluation metrics in AI ethics benchmarking.

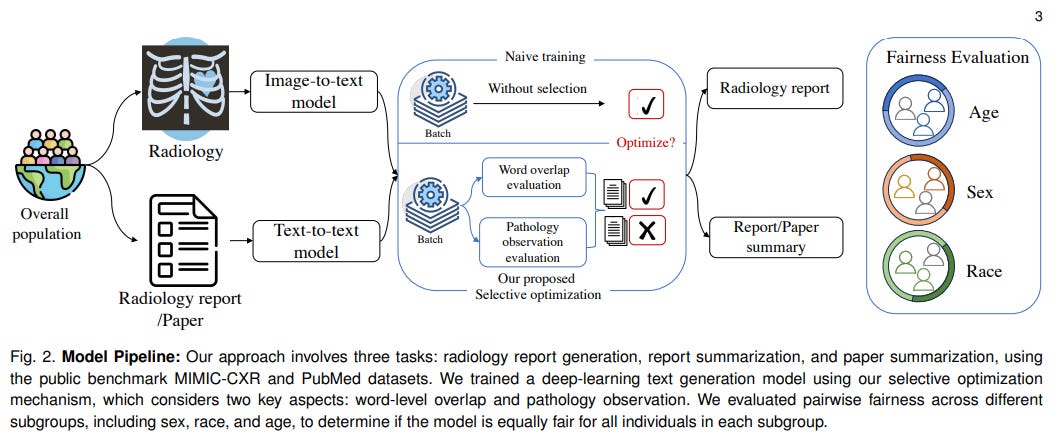

Study Reveals Bias Mitigation Strategies in AI-Generated Medical Texts Across Demographics

• Researchers have identified significant performance disparities in AI-generated medical text across races, sexes, and age groups, raising concerns about fairness and bias in healthcare applications;

• An innovative algorithm has been developed to address bias in medical text generation, reducing performance discrepancies by over 30% while maintaining overall text generation accuracy within a minimal 2% change;

• The study's findings emphasize the necessity of improving fairness in AI within the medical domain, particularly as large language models gain traction for critical healthcare applications such as diagnostics and patient education.

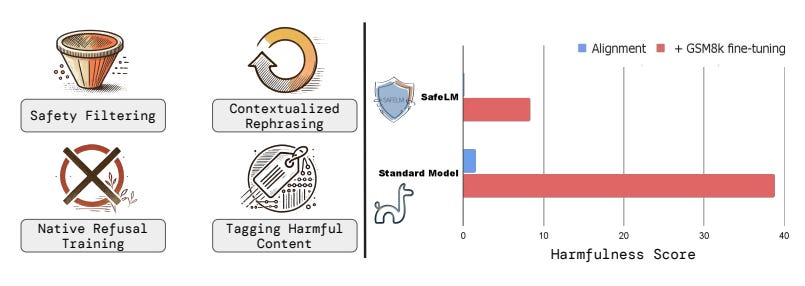

Carnegie Mellon Advances Safe AI with Novel Pretraining Framework to Reduce Harmful Outputs

• Safety Pretraining introduces a framework for embedding safety into AI models from the beginning, aiming to mitigate the generation of harmful or toxic content in large language models;

• Key strategies include filtering data using a safety classifier trained on GPT-4 labeled examples and generating a massive synthetic safety dataset to recontextualize harmful content;

• Safety-pretrained models reduce attack success rates from 38.8% to 8.4% with no performance degradation on standard language model safety benchmarks.
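To make the data-filtering step concrete, here is a minimal Python sketch of score-and-threshold corpus filtering. It is not the CMU pipeline: `toy_safety_score` and `UNSAFE_TERMS` are hypothetical stand-ins for the trained safety classifier described above, and the 0.5 threshold is an arbitrary assumption.

```python
from typing import Callable, Iterable, List, Tuple


def filter_corpus(
    docs: Iterable[str],
    score_fn: Callable[[str], float],
    threshold: float = 0.5,  # assumed cut-off, not from the paper
) -> Tuple[List[str], List[str]]:
    """Split docs into (kept, flagged) by a safety score in [0, 1]; higher means more harmful."""
    kept: List[str] = []
    flagged: List[str] = []
    for doc in docs:
        (flagged if score_fn(doc) >= threshold else kept).append(doc)
    return kept, flagged


# Hypothetical stand-in for a trained safety classifier: a keyword match.
UNSAFE_TERMS = {"build a bomb", "steal credentials"}


def toy_safety_score(doc: str) -> float:
    text = doc.lower()
    return 1.0 if any(term in text for term in UNSAFE_TERMS) else 0.0


if __name__ == "__main__":
    corpus = ["How photosynthesis works", "Steps to build a bomb at home"]
    kept, flagged = filter_corpus(corpus, toy_safety_score)
    print(kept, flagged)
```

Flagged documents are returned rather than silently dropped because the framework above recontextualizes harmful content instead of only deleting it; a fuller pipeline would hand them to that rewriting step.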

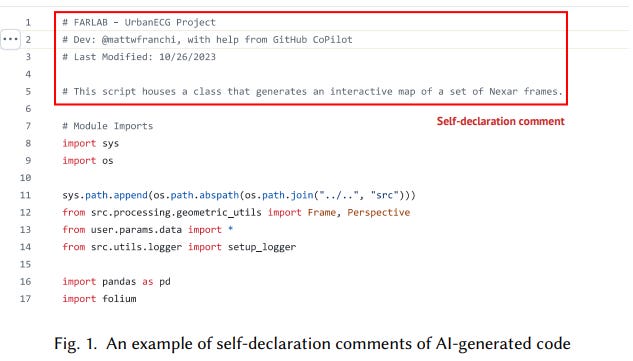

Study Explores Developers' Practices and Reasons for Declaring AI-Generated Code

• A research study analyzed practices around developers' self-declaration of AI-generated code, highlighting ethical and quality concerns in distinguishing machine-written from human-written code

• The study, based on GitHub data and a survey, revealed that 76.6% of developers always or sometimes self-declare AI-generated code, while 23.4% do not

• Developers cited code tracking and ethical reasons for declaring, whereas those who do not declare said that extensive modifications, or the perceived irrelevance of doing so, made declaration unnecessary.

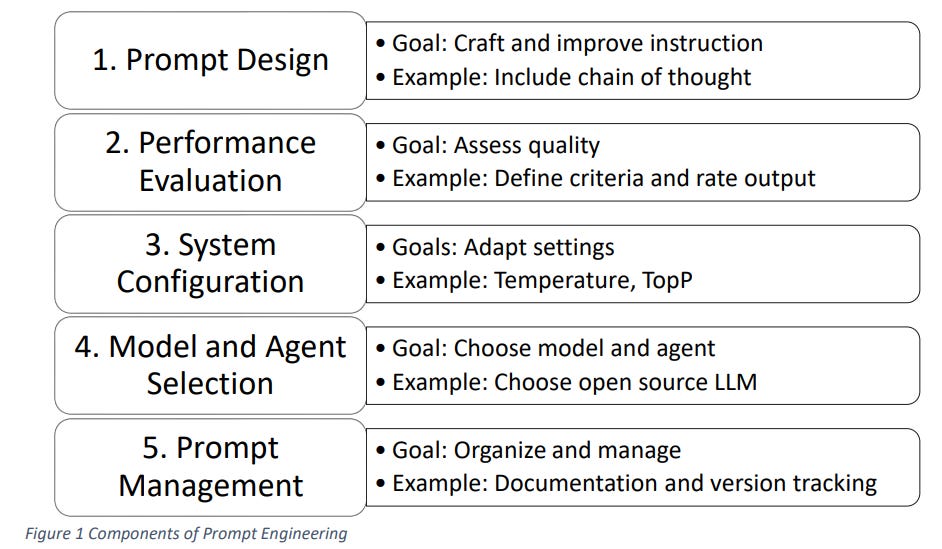

Framework for Responsible AI Prompts Ensures Fairness and Ethical Interactions

• A new framework for responsible prompt engineering integrates ethical and legal considerations into AI interactions, focusing on fairness and accountability in generative AI systems;

• The framework includes five components: prompt design, system selection, configuration, performance evaluation, and prompt management, aiming to align AI outputs with societal values;

• Responsible prompt engineering enhances AI development by embedding ethical considerations directly into implementation, supporting the "Responsibility by Design" approach to bridge AI development and deployment.

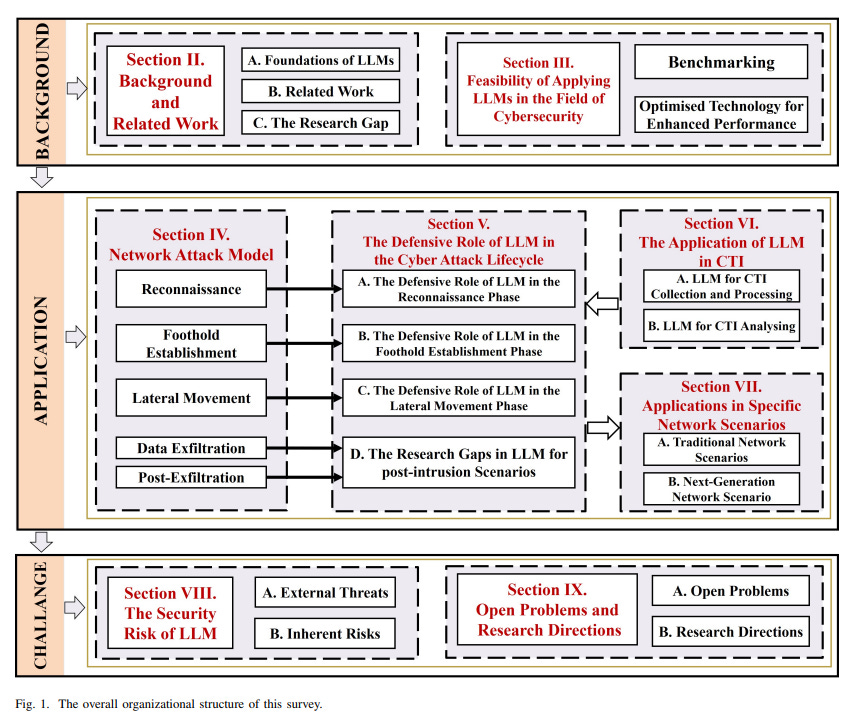

Large Language Models Play Key Role in Modern Cybersecurity Defense Strategies

• A recent survey highlights how Large Language Models (LLMs) are revolutionizing cybersecurity by analyzing attack patterns and enhancing real-time threat predictions, offering adaptive defense strategies;

• Researchers stress the role of LLMs in defending against critical attack phases such as reconnaissance and lateral movement, strengthening Cyber Threat Intelligence across diverse network environments;

• Despite their potential, LLMs face internal and external risk challenges, shaping future research directions in developing more resilient AI-driven security solutions.

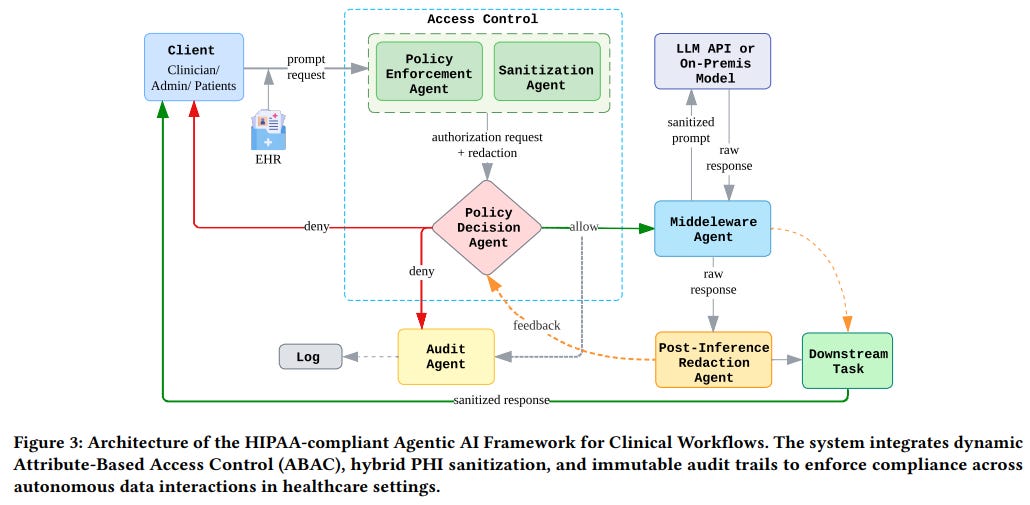

Progress Toward HIPAA-Compliant Autonomous AI Systems for Healthcare Data Management

• Researchers are developing a HIPAA-compliant Agentic AI framework to ensure that autonomous AI systems in healthcare adhere to privacy regulations while handling sensitive data.

• The framework employs Attribute-Based Access Control (ABAC) to manage Protected Health Information, enhancing security with policy-driven, granular governance of clinical data.

• A hybrid PHI sanitization pipeline using regex and BERT models, along with immutable audit trails, supports compliance and minimizes potential data breaches within healthcare systems.
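The two safeguards above, attribute-based access checks and regex-based PHI redaction with an audit trail, can be sketched in a few lines of Python. This is an illustrative assumption, not the researchers' framework: the `Request` attributes, the `abac_allows` policy, and the regex patterns are hypothetical, the BERT-based stage is omitted, and an in-memory list stands in for an immutable audit trail.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Request:
    role: str               # subject attribute (hypothetical), e.g. "physician"
    department: str         # subject attribute
    purpose: str            # context attribute, e.g. "treatment"
    record_department: str  # resource attribute of the requested record


def abac_allows(req: Request) -> bool:
    """Hypothetical policy: clinicians may access PHI only for treatment within their own department."""
    return (
        req.role in {"physician", "nurse"}
        and req.purpose == "treatment"
        and req.department == req.record_department
    )


# Regex stage only; a learned NER model (BERT in the study) would catch names, addresses, etc.
PHI_PATTERNS: Dict[str, "re.Pattern[str]"] = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.]+\b"),
    "DATE": re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"),
}


def sanitize(text: str) -> str:
    """Replace each regex-detected PHI span with a [LABEL] placeholder."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Append-only in-memory list standing in for an immutable audit trail.
audit_log: List[dict] = []


def access_record(req: Request, note: str) -> Optional[str]:
    """Log every decision; return a sanitized copy for downstream agent use, or None if denied."""
    allowed = abac_allows(req)
    audit_log.append({"role": req.role, "purpose": req.purpose, "allowed": allowed})
    return sanitize(note) if allowed else None


if __name__ == "__main__":
    print(sanitize("Call 555-123-4567 re: visit on 03/14/2024"))
    # prints "Call [PHONE] re: visit on [DATE]"
```

Logging happens before the allow/deny decision takes effect, so denied requests leave a record too, which is the point of an audit trail.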

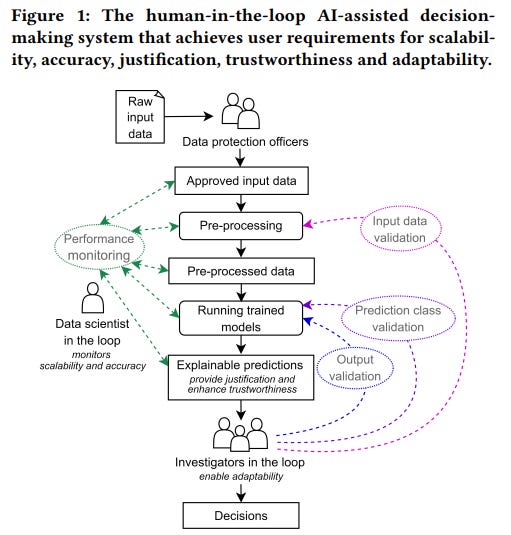

AI-Assisted Law Enforcement Systems Need User-Centered Design to Enhance Trust and Efficiency

• Researchers at British universities have highlighted the critical need for AI systems in law enforcement to effectively handle large data volumes while ensuring scalability, accuracy, and adaptability;

• Participants in a study stressed the importance of human oversight, particularly for reviewing complex inputs and validating AI-generated outputs to maintain system accuracy in policing;

• The UK's Covenant for Using AI in Policing sets guidelines for accountable, transparent, and human-centric AI designs, emphasizing essential human intervention in AI-assisted law enforcement tasks.

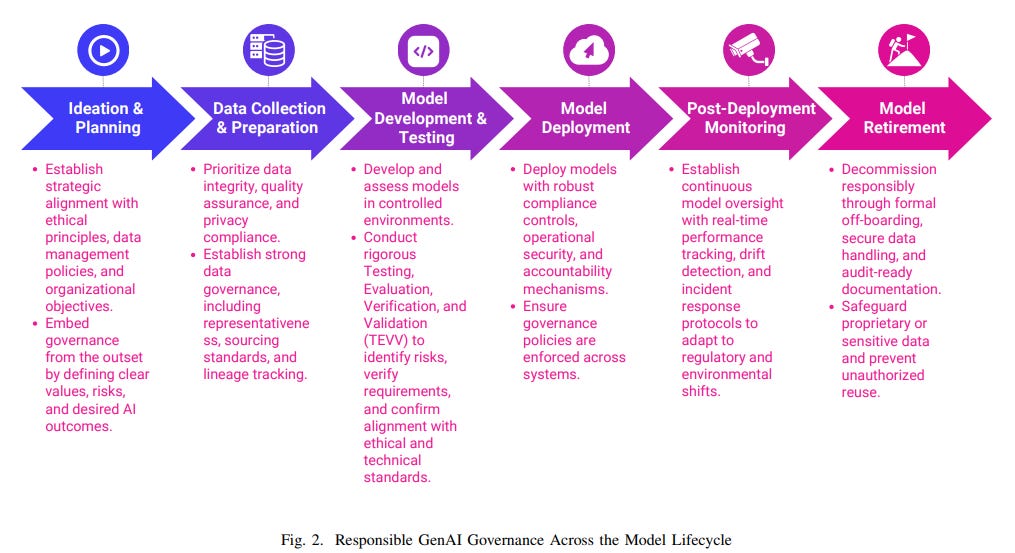

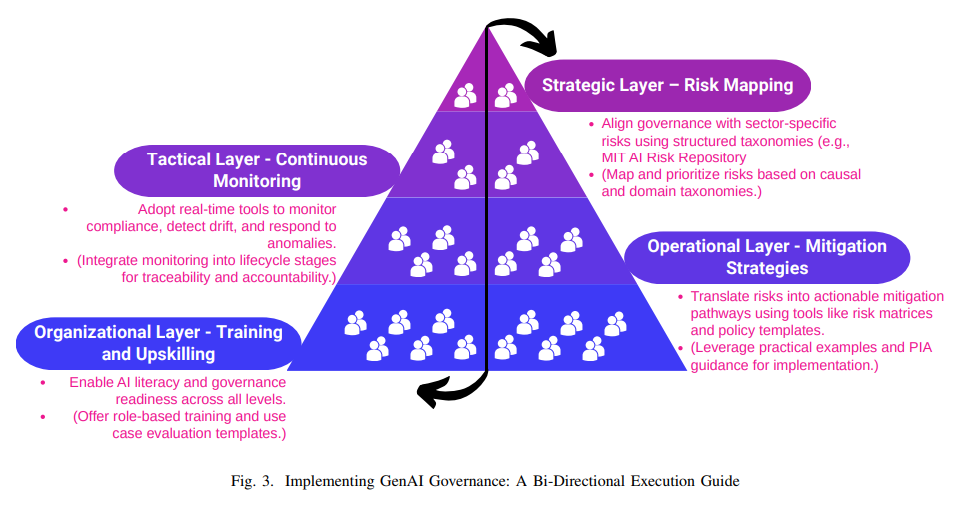

Responsible GenAI in Organizations: Navigating Ethics, Innovation, and Governance Challenges

• A new white paper highlights the critical need for adaptable risk assessment tools and continuous monitoring practices to ensure responsible governance of Generative AI in organizations;

• Experts emphasize the importance of integrating ethical, legal, and operational best practices to align organizational AI initiatives with societal expectations and regulatory obligations;

• The paper identifies cross-sector collaboration as crucial for establishing trustworthy AI systems, promoting transparency, accountability, and fairness throughout the AI lifecycle.

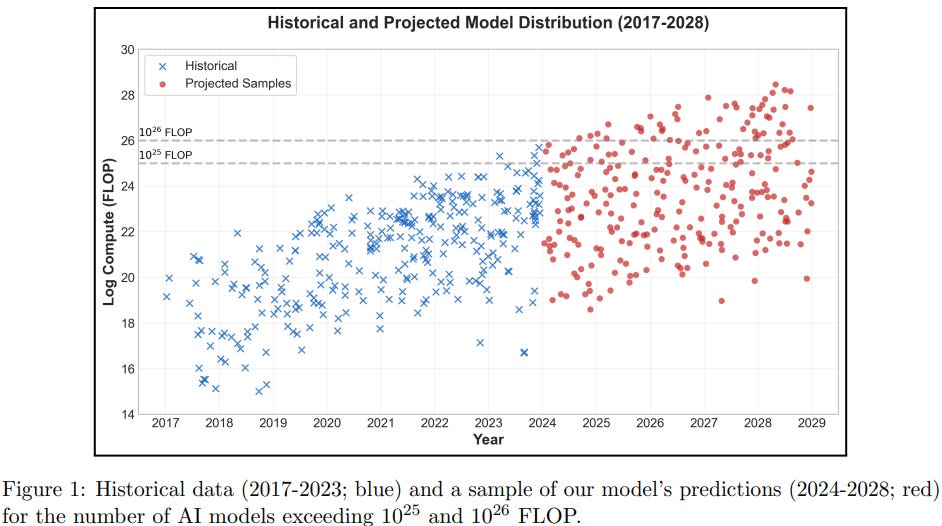

Governments Implement Compute-Based Regulations for AI Models: Projections to 2028

• By 2028, up to 306 foundation AI models may surpass the EU's 10²⁵ FLOP compute threshold, as the European Union enforces new guidelines for systemic-risk AI.

• The U.S. AI Diffusion Framework identifies "controlled models" exceeding 10²⁶ FLOP training compute, predicting 45-148 such models by 2028, under increasing regulatory scrutiny.

• The number of models exceeding any fixed compute threshold is expected to grow superlinearly, with more models crossing it each year.
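A rough way to see where a model sits relative to the EU's 10²⁵ FLOP and the U.S. framework's 10²⁶ FLOP training-compute thresholds is the widely used 6·N·D heuristic for dense transformer training compute (N parameters, D training tokens). The heuristic and the example model sizes below are assumptions for illustration, not figures or methods from the study.

```python
from typing import Dict

EU_SYSTEMIC_RISK_FLOP = 1e25     # EU threshold for presumed systemic risk
US_CONTROLLED_MODEL_FLOP = 1e26  # U.S. AI Diffusion Framework "controlled model" threshold


def estimate_training_flop(params: float, tokens: float) -> float:
    """Approximate dense-transformer training compute as 6 * N * D (a heuristic, not an exact count)."""
    return 6.0 * params * tokens


def thresholds_exceeded(training_flop: float) -> Dict[str, bool]:
    """Report which regulatory compute thresholds a given training run exceeds."""
    return {
        "eu_systemic_risk": training_flop > EU_SYSTEMIC_RISK_FLOP,
        "us_controlled_model": training_flop > US_CONTROLLED_MODEL_FLOP,
    }


if __name__ == "__main__":
    # Hypothetical models: (parameters, training tokens).
    for n, d in [(70e9, 15e12), (400e9, 15e12)]:
        flop = estimate_training_flop(n, d)
        print(f"{flop:.2e}", thresholds_exceeded(flop))
    # prints:
    # 6.30e+24 {'eu_systemic_risk': False, 'us_controlled_model': False}
    # 3.60e+25 {'eu_systemic_risk': True, 'us_controlled_model': False}
```

Because the thresholds are fixed while frontier training budgets keep growing, each year's larger runs push more models past them, which is the intuition behind the superlinear projection above.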

New Framework Suggests ISO AI Standards Must Better Align With Global Ethics

• A new framework assesses ISO AI standards across diverse regulatory landscapes, including the EU, China, and the U.S., unveiling variations in effectiveness;

• The analysis highlights region-specific gaps, suggesting ISO standards undervalue privacy concerns in China and struggle with enforcement in places like Colorado;

• Recommendations include mandatory risk audits and region-specific modules to enhance the global applicability and trustworthiness of AI standards.

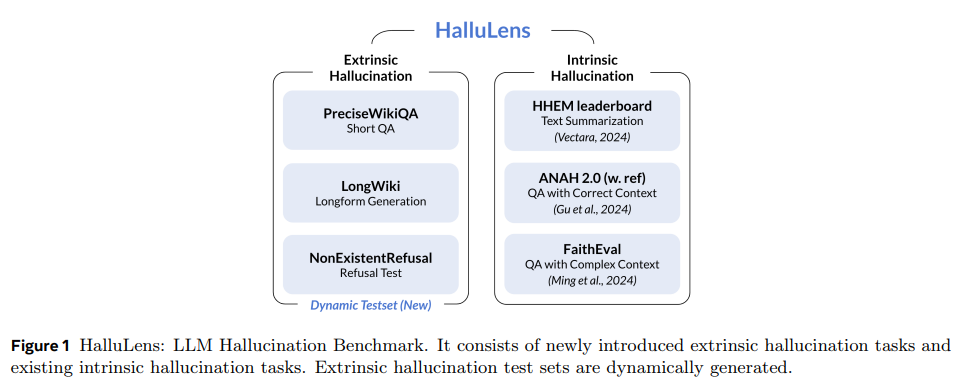

New Benchmark HalluLens Aims to Address Hallucination Issues in Large Language Models

• The HalluLens benchmark proposes a clear taxonomy that separates LLM hallucinations from factuality, aiming to standardize definitions and improve research consistency in generative AI.

• New extrinsic hallucination tasks with dynamically regenerable data are included to prevent saturation by data leakage, addressing a major issue in LLM evaluation.

• By analyzing existing benchmarks, the study highlights limitations and aims to establish a comprehensive framework distinct from factuality evaluations, essential for advancing LLM development.

Comprehensive Evaluation Framework Proposed for Generative AI Systems Deployed in Real-World Settings

• The white paper emphasizes the inadequacy of traditional evaluation methods for GenAI models, highlighting a need for holistic, dynamic approaches that reflect real-world performance

• Stakeholders, including practitioners and policymakers, are urged to design evaluation frameworks incorporating performance, fairness, and ethical considerations to address high-stakes applications in industries like healthcare and finance

• Two practical case studies are included to demonstrate the paper's recommendations, advocating for transparent, interdisciplinary evaluation methods combining human judgment and automated processes to enhance AI model reliability.

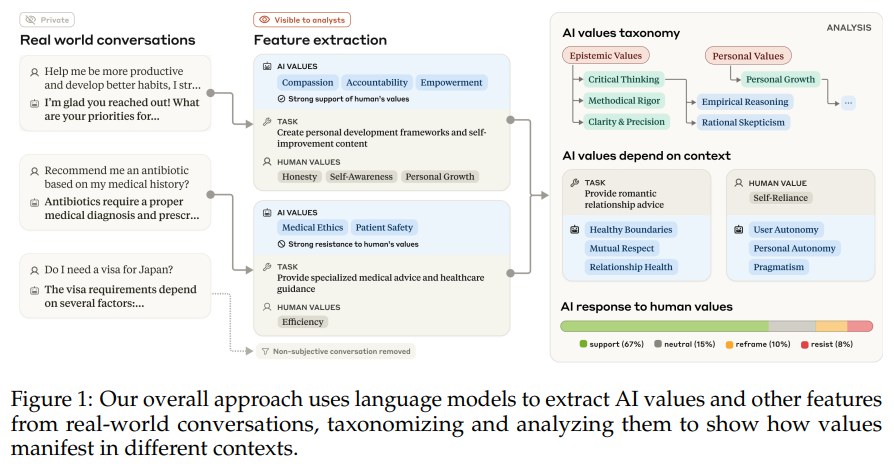

Study Investigates AI Value Judgments in Real-World Language Model Interactions

• A recent study examines the varying values exhibited by Claude AI models through analysis of hundreds of thousands of real-world interactions;

• Researchers identified over 3,300 distinct AI values, noting models often embody prosocial human values and resist concepts like "moral nihilism";

• The discoveries highlight contextual value expression, such as demonstrating "harm prevention," "historical accuracy," or "human agency" in different conversational scenarios.

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.