Free users of ChatGPT just got a massive UPGRADE

++Telegram Gets Smarter, Musk vs LeCun on the future of AI & more

Welcome Back, Generative AI Enthusiasts!

Today's highlights:

P.S. It takes just 5 minutes a day to stay ahead of the fast-evolving generative AI curve. Ditch BORING long-form newsletters and catch the news through FUN & ENGAGING 5-minute short-form TRENDING podcasts (link below) while multitasking. Join our fastest-growing community of 25,000 researchers and become Gen AI-ready TODAY...

Watch Time: <8 mins (Link Below)

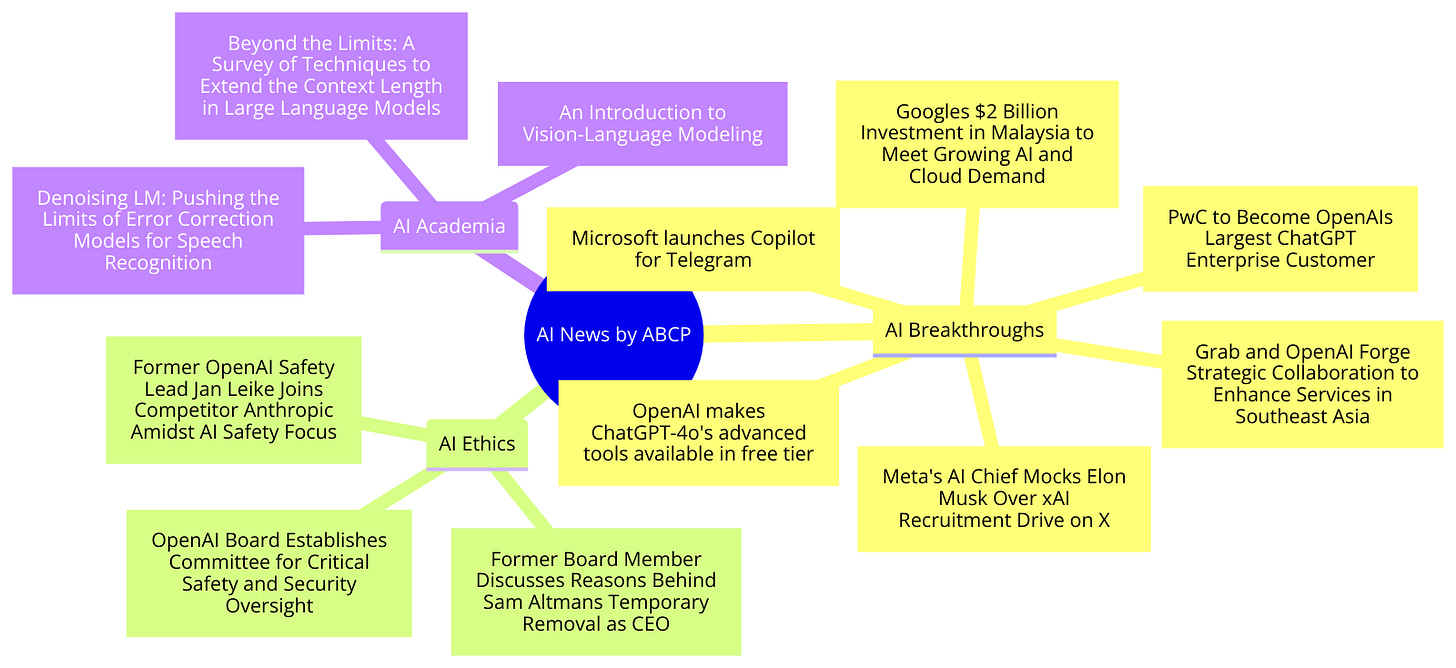

🚀 AI Breakthroughs

OpenAI Expands Free Tier Access: Users can now use browse, vision, data analysis, file uploads, and GPTs

On May 30th, OpenAI announced the release of GPT-4o for free users, providing access to advanced tools previously available only to paid subscribers.

Unlocking Advanced Tools: Free users now get GPT-4o's advanced tools in ChatGPT, including browsing, vision, data analysis, file uploads, and custom GPTs.

Memory Feature: The Memory feature allows ChatGPT to remember or forget user instructions, improving conversational continuity and assistance over time.

Custom GPTs: Free tier users can now access the GPT Store to browse and use custom bots, previously limited to paid subscribers.

Vision Capabilities: Users can interact with images in real time, using enhanced vision features for tasks like object identification and mathematical problem-solving.

File Uploads and Data Analysis: Users can upload various document types and request summaries, comparisons, and visual data representations from ChatGPT.

PwC to Become OpenAI's Largest ChatGPT Enterprise Customer, Rolling Out Over 100,000 Licenses

PwC will deploy ChatGPT Enterprise to over 100,000 employees in the U.S. and U.K., becoming OpenAI's largest customer and first reseller.

Massive Deployment: PwC will roll out ChatGPT Enterprise to 75,000 U.S. and 26,000 U.K. employees, totaling over 100,000 licenses.

AI Integration: This deployment is part of PwC's $1 billion investment in generative AI, aimed at enhancing consulting technology and operations.

Enhanced Productivity: PwC developed ChatPwC, a chatbot built on OpenAI’s GPT-4 model, reporting a 20% to 40% increase in productivity among employees using AI tools.

Enterprise Focus: OpenAI is focusing on enterprise sales, with PwC being one of its first major partners outside of Microsoft.

Grab and OpenAI Forge Strategic Collaboration to Enhance Services in Southeast Asia

Grab and OpenAI have announced a first-of-its-kind partnership in Southeast Asia to enhance services for users, partners, and employees using advanced AI capabilities.

AI-Enhanced Services: Grab will leverage AI to improve accessibility for visually impaired users, develop customer support chatbots, and enhance mapping technologies.

Pilot Deployment: An initial pilot of ChatGPT Enterprise will be rolled out among select Grab employees to boost productivity and integrate AI across operations.

Strategic Collaboration: This partnership combines OpenAI’s technical expertise with Grab’s regional knowledge to create tailored AI solutions for Southeast Asia.

Focus Areas: Key areas include enhancing accessibility, automating customer support, and improving map-making through AI-driven data extraction and automation.

Microsoft Introduces 'Copilot for Telegram'

The AI-powered Copilot, integrated with Telegram, offers enhanced chat experiences with features ranging from gaming tips to personalized travel itineraries.

Comprehensive Companion: Copilot on Telegram provides seamless conversations, access to information, and enhanced chat functionalities.

Diverse Tips and Tricks: Users can get game releases, movie recommendations, dating advice, food tips, sports stats, and music updates.

Entertainment and Productivity: Engage in brain teasers, travel planning, and fitness routines, making Telegram a versatile tool for daily interactions.

Personalized Assistance: Copilot offers personalized playlists, concert updates, and culinary suggestions, ensuring a tailored chat experience.

Google's $2 Billion Investment in Malaysia to Meet Growing AI and Cloud Demand

Google announced a $2 billion investment in Malaysia, including the construction of its first data center and cloud region in the country.

Significant Investment: Google’s $2 billion investment is aimed at constructing a data center and cloud region in Malaysia to meet the rising demand for AI and cloud services.

Economic Impact: The investment is expected to contribute $3.2 billion to Malaysia’s GDP and create 26,500 jobs by 2030.

Educational Programs: Google is launching AI literacy programs for students and educators in Malaysia to enhance local tech skills.

Global Network Expansion: This new cloud region will be part of Google’s extensive network of 40 regions and 121 zones worldwide.

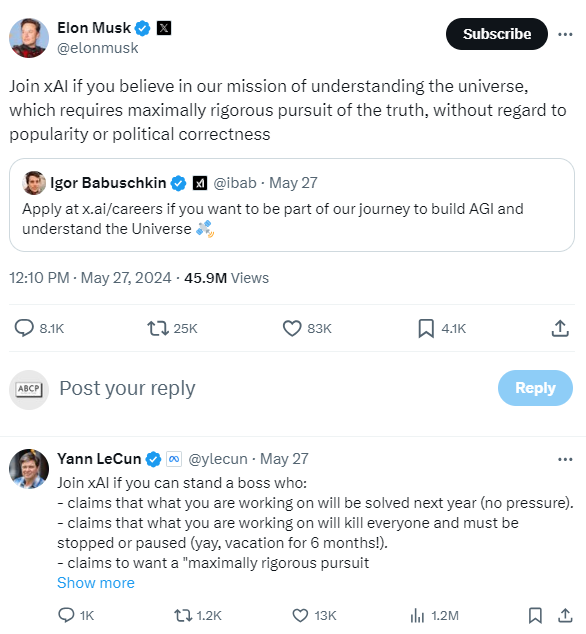

Meta's AI Chief Mocks Elon Musk Over xAI Recruitment Drive on X

Meta's AI chief, Yann LeCun, mocked Elon Musk on X over his recruitment efforts for xAI, following a $6 billion funding announcement for the company.

Public Mockery: Yann LeCun publicly criticized Elon Musk’s recruitment post for xAI, highlighting Musk’s management style and controversial statements about AI.

Fundraising Success: xAI announced a successful $6 billion Series B funding round, underscoring its significant financial backing.

Ongoing Rivalry: Musk and LeCun have a long-standing feud over AI’s potential dangers and future, with frequent public exchanges.

Recruitment Controversy: LeCun’s mockery focused on Musk’s claims about AI’s imminent impact and his management practices.

⚖️ AI Ethics:

OpenAI Board Establishes Committee for Critical Safety and Security Oversight

The OpenAI Board has formed a Safety and Security Committee to oversee and recommend critical safety and security measures for its projects.

Committee Formation: The committee is led by Bret Taylor and includes CEO Sam Altman and other key directors. It is tasked with evaluating and enhancing safety protocols across OpenAI.

90-Day Review: The committee will develop recommendations within 90 days, focusing on improving safety and security measures for OpenAI’s projects.

Public Transparency: After the Board reviews the recommendations, an update will be publicly shared to ensure transparency and accountability.

Expert Involvement: The committee will consult with technical and policy experts, including former cybersecurity officials, to support its safety and security efforts.

Former Board Member Discusses Reasons Behind Sam Altman's Temporary Removal as CEO

A former OpenAI board member said that Sam Altman’s temporary dismissal as CEO stemmed from misinformation about AI safety protocols and the unannounced launch of ChatGPT.

Allegations of Misinformation: Helen Toner, a former board member, alleged that Altman repeatedly lied to the board about AI safety and the ChatGPT launch.

Lack of Oversight: According to Toner, the board only learned about ChatGPT’s launch through social media, which hindered its ability to oversee safety processes.

Employee and Investor Support: Despite the dismissal, an overwhelming majority of employees and key investors demanded Altman’s reinstatement as CEO.

Investigation Findings: An investigation cleared Altman of wrongdoing, but the incident continues to be a contentious issue within OpenAI.

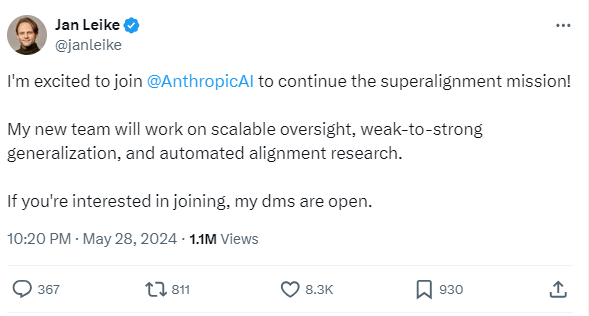

Former OpenAI Safety Lead Jan Leike Joins Competitor Anthropic Amidst AI Safety Focus

Jan Leike, a former safety leader at OpenAI, has joined the AI startup Anthropic, which is backed by Amazon with $4 billion in funding.

Career Move: Jan Leike announced his resignation from OpenAI and his new position at Anthropic, focusing on AI safety research.

Anthropic’s Focus: Anthropic, founded by former OpenAI executives, is dedicated to addressing long-term AI risks and safety.

Funding and Support: Backed by a $4 billion commitment from Amazon, Anthropic aims to advance scalable oversight and alignment research.

Safety Emphasis: Leike’s move underscores the growing importance of AI safety and the need for dedicated research in this rapidly evolving field.

🎓 AI Academia:

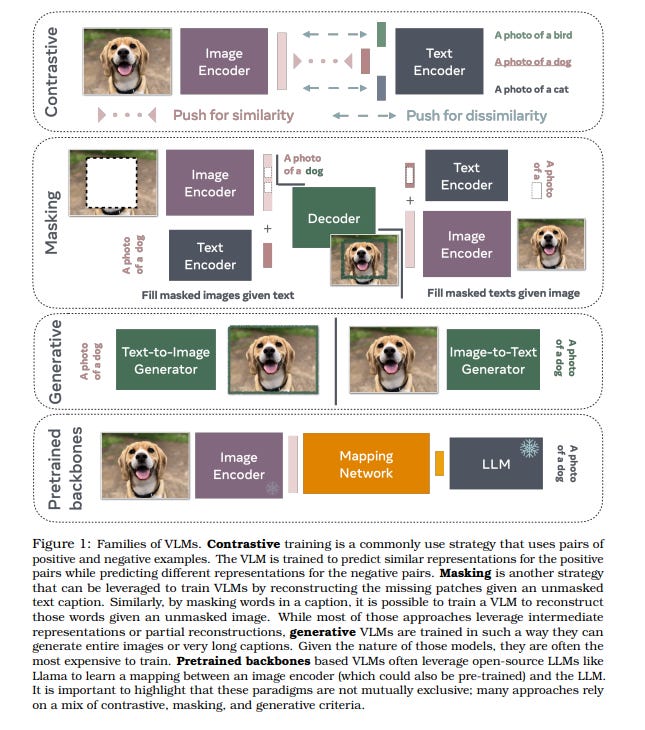

An Introduction to Vision-Language Modeling

A comprehensive introduction to vision-language models (VLMs), detailing their applications, challenges, and training methodologies.

What are VLMs?: Vision-language models extend large language models (LLMs) to the visual domain, enabling applications like visual assistants and image generation.

Challenges: VLMs face challenges due to the high-dimensional nature of visual data and the difficulty of discretizing concepts.

Training Paradigms: The introduction covers various training methods, including contrastive learning, masking strategies, and the use of pre-trained backbones.

Evaluation Approaches: Different evaluation techniques are discussed to measure the performance and reliability of VLMs, including their application to video content.
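
For readers curious what "contrastive learning" looks like in practice, here is a minimal sketch of a CLIP-style symmetric contrastive loss over a small batch of paired image and text embeddings. It is an illustration only, not the paper's implementation: the function names, the plain-Python lists, and the temperature of 0.07 are all assumptions made for this example.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors given as lists."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def cross_entropy_row(logits, target_index):
    """Cross-entropy of one row of logits against the correct index."""
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[target_index]


def contrastive_loss(image_embeddings, text_embeddings, temperature=0.07):
    """Symmetric contrastive loss: pair i of images matches pair i of texts.

    temperature=0.07 is an assumed value chosen for illustration.
    """
    n = len(image_embeddings)
    # sims[i][j] = scaled similarity between image i and text j.
    sims = [
        [cosine_similarity(img, txt) / temperature for txt in text_embeddings]
        for img in image_embeddings
    ]
    # Each image should pick out its own caption ...
    image_to_text = sum(cross_entropy_row(sims[i], i) for i in range(n)) / n
    # ... and each caption should pick out its own image.
    text_to_image = sum(
        cross_entropy_row([sims[i][j] for i in range(n)], j) for j in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
print(contrastive_loss(images, matched_texts))  # small: pairs line up
print(contrastive_loss(images, swapped_texts))  # large: pairs are mismatched
```

The loss is low when each image embedding sits closest to its own caption and high when the pairs are mixed up, which is the signal that pulls matching images and texts together during training.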

Denoising LM: Pushing the Limits of Error Correction Models for Speech Recognition

The Denoising Language Model (DLM) introduces significant improvements in error correction for speech recognition systems, achieving record-breaking accuracy.

Novel Approach: DLM employs a scaled error correction model trained extensively with synthetic data, significantly improving ASR performance.

Training Techniques: The model utilizes multi-speaker text-to-speech synthesis and various noise augmentation strategies to train on noisy hypotheses.

Unprecedented Accuracy: DLM achieves record-low word error rates of 1.5% on test-clean and 3.3% on test-other in LibriSpeech without external audio data.

Versatile Application: A single DLM can be applied to different ASR systems, surpassing the performance of conventional LM-based beam-search rescoring.
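
The word error rate (WER) figures quoted above follow a standard definition: the minimum number of word substitutions, deletions, and insertions needed to turn the system's transcript into the reference, divided by the number of reference words. Below is a minimal sketch of that calculation. The function name and example sentences are made up for illustration, and this is not the paper's evaluation code.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# One wrong word out of six reference words.
print(word_error_rate("the cat sat on the mat", "the cat sat on a mat"))
```

On this scale, a WER of 1.5% means roughly one or two word errors per hundred reference words.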

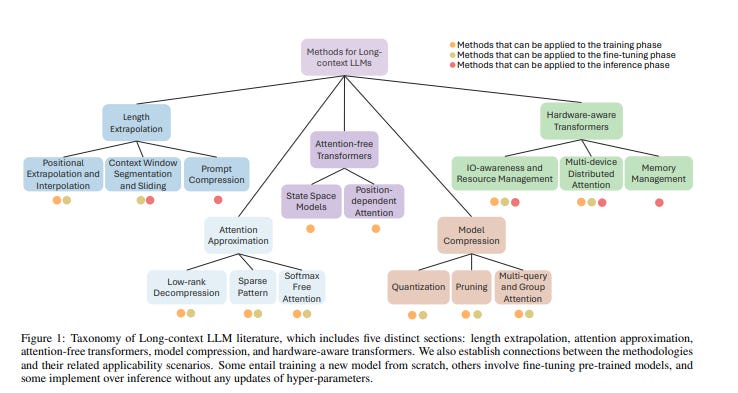

Beyond the Limits: A Survey of Techniques to Extend the Context Length in Large Language Models

A survey reviewing techniques to extend the context length in large language models (LLMs), focusing on architectural and training enhancements.

Context Length Challenges: LLMs face limitations in processing long input sequences due to high computational and memory requirements.

Architectural Modifications: Techniques such as modified positional encoding and altered attention mechanisms are reviewed to manage longer sequences efficiently.

Training Enhancements: The survey explores methods used during training, fine-tuning, and inference phases to extend LLM context length.

Future Research Directions: The limitations of current methodologies are discussed, with suggestions for future research to further enhance LLM capabilities.
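
To make "modified positional encoding" a little more concrete, here is a minimal sketch of one well-known idea of this kind: linear position interpolation for rotary position embeddings, where positions in a longer input are squeezed back into the range the model saw during training. Whether the survey covers this exact variant is not stated in the summary above, and the function names, the base of 10000, and the example lengths are assumptions for illustration.

```python
def interpolation_scale(trained_length: int, target_length: int) -> float:
    """Factor that compresses positions so target_length fits in trained_length."""
    return min(1.0, trained_length / target_length)


def rope_angles(position: int, head_dim: int, base: float = 10000.0,
                scale: float = 1.0) -> list:
    """Rotation angles for one token position in a rotary embedding.

    scale < 1 shrinks the effective position (linear interpolation);
    scale = 1 leaves the standard rotary angles unchanged.
    """
    return [
        position * scale * base ** (-2 * i / head_dim)
        for i in range(head_dim // 2)
    ]


# Assumed example: a model trained on 2,048 tokens, run on 8,192 tokens.
scale = interpolation_scale(trained_length=2048, target_length=8192)
print(scale)                                 # 0.25
print(rope_angles(8191, 8, scale=scale)[0])  # 2047.75, inside the trained range
```

The trade-off is that neighbouring tokens end up closer together in position space, which is why approaches like this are usually paired with a short round of fine-tuning.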

About me: I’m Saahil Gupta, an electrical engineer turned data scientist turned prompt engineer. I’m on a mission to democratize generative AI through ABCP, the world’s first Gen AI-only news channel.

We curate this AI newsletter daily for free. Your support keeps us motivated. If you find it valuable, please do subscribe & share it with your friends using the links below!