Today's highlights:

You are reading the 83rd edition of The Responsible AI Digest by SoRAI (School of Responsible AI) (formerly ABCP). Subscribe today for regular updates!

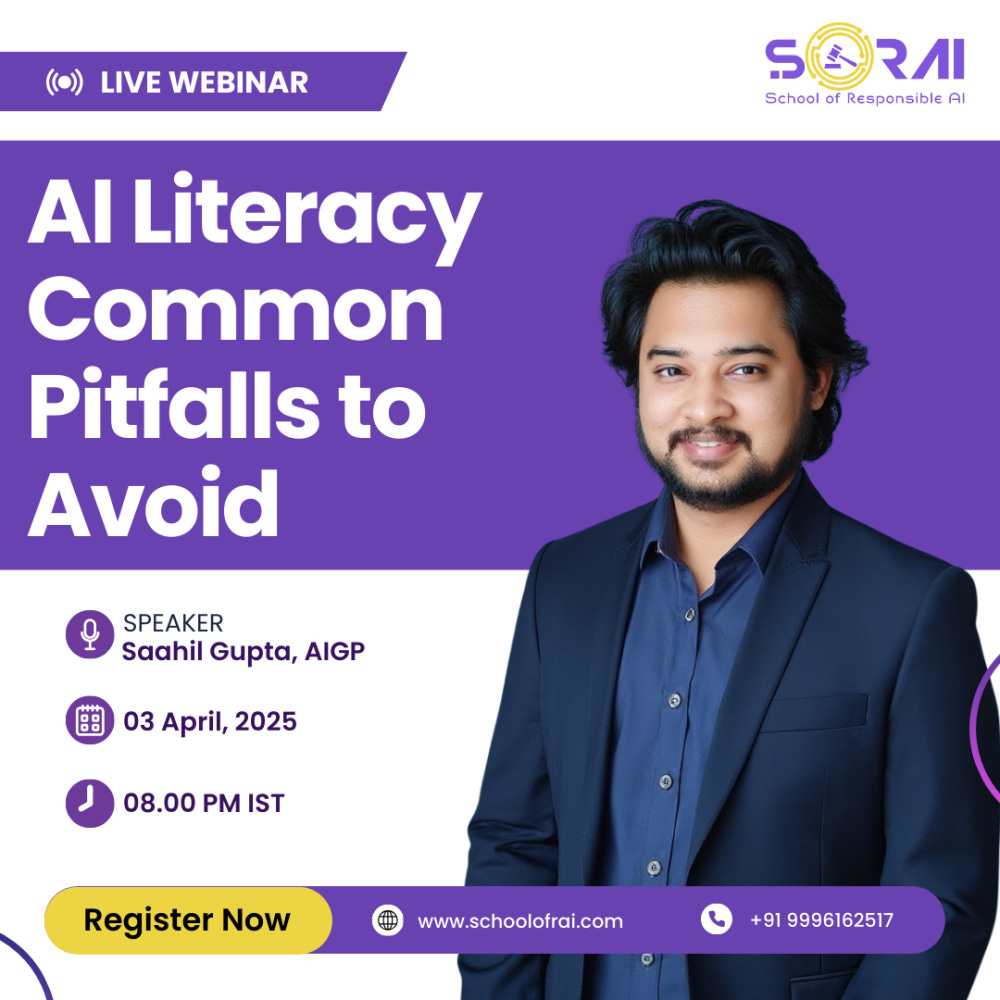

Are you ready to join the Responsible AI journey, but need a clear roadmap to a rewarding career? Do you want structured, in-depth "AI Literacy" training that builds your skills, boosts your credentials, and reveals any knowledge gaps? Are you aiming to secure your dream role in AI Governance and stand out in the market, or become a trusted AI Governance leader? If you answered yes to any of these questions, let’s connect before it’s too late! Book a chat here.

🚀 AI Breakthroughs

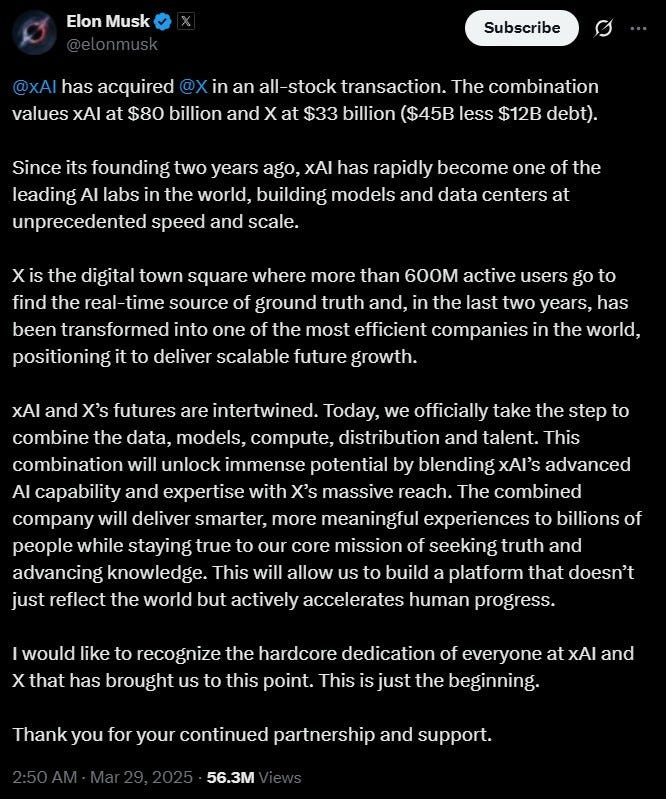

xAI Acquires Social Media Platform X To Advance AI Expansion Under Musk's Leadership

• Elon Musk's AI startup, xAI, completes acquisition of social media platform X in an all-stock deal, valuing xAI at $80 billion and X at $33 billion;

• Executives believe raising funds will be easier for xAI and X, as they are consolidated under a new holding company, xAI Holdings Corp, post-acquisition;

• The acquisition grants xAI access to X's extensive data and user base, potentially enhancing its AI training capabilities and advancing its competitive standing against industry leaders like OpenAI.

Runway Unveils Gen-4: High-Fidelity AI Video Generator for Consistent Content Creation

• AI startup Runway has launched Gen-4, an advanced AI video generator, claiming it delivers high fidelity with consistent characters, settings, and objects throughout scenes

• Gen-4 allows users to generate visually consistent scenes and dynamic videos using just a reference image and descriptive prompts, without needing further fine-tuning

• Runway, backed by major investors like Google and Nvidia, is under legal scrutiny over its model's training data but aims to raise $4 billion in funding.

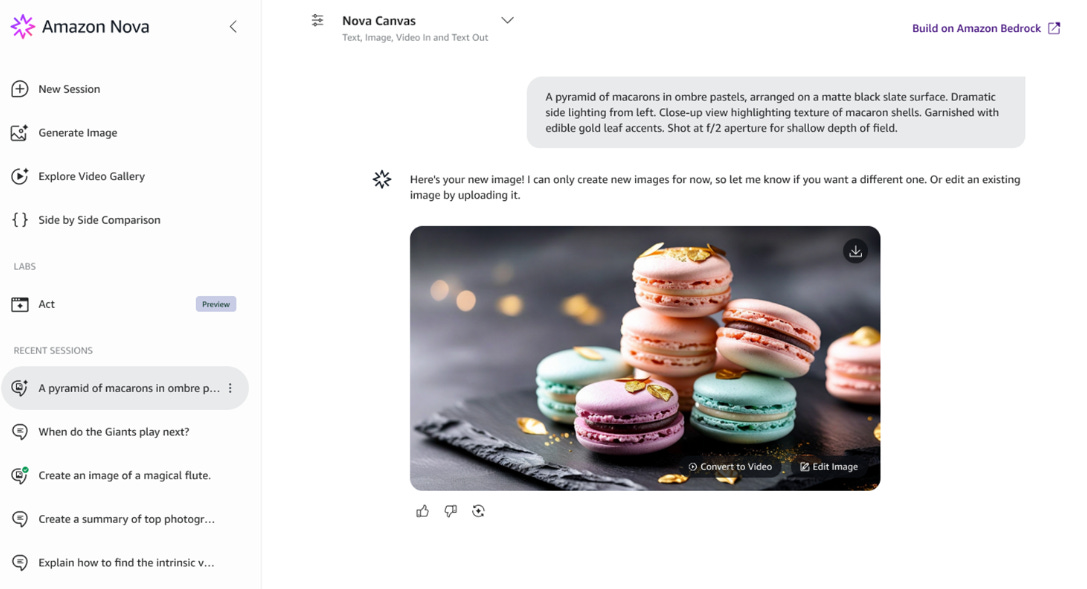

Amazon Enhances Shopper Experience with New Generative AI Tools and Platforms

• Amazon introduces generative AI applications like Alexa+, Amazon Q, and Rufus, aimed at enhancing the shopping and business experience for both consumers and enterprises

• Amazon Bedrock delivers a versatile platform for building and scaling generative AI applications by providing access to a diverse array of models from leading AI companies

• Amazon Nova represents a new level in foundation model technology, offering frontier intelligence with industry-leading price-performance for cutting-edge AI solutions.

Zhipu AI Launches AutoGLM Rumination, Aims to Outpace Rivals with Efficiency

• Zhipu AI has launched AutoGLM Rumination, a new AI agent for deep research and detailed report generation, and is offering it for free, as reported by Reuters

• The company claims the proprietary models behind the agent run eight times faster than competitors' while requiring only one-thirtieth of the resources

• Zhipu AI recently raised $69 million in Series D funding, following a $137 million round, with a valuation of $2.74 billion and backing from Tencent, Alibaba, and state entities.

QVQ-Max Debuts as Visual Reasoning Model with Math Problem-Solving Prowess

• QVQ-Max, a new visual reasoning model, officially released today, showcases the ability to understand and analyze images and videos for problem-solving across diverse areas including math and creative tasks;

• QVQ-Max showed significant accuracy improvements on MathVision, a benchmark for complex multimodal math problem-solving, as the length of its thinking process was adjusted;

• Future updates of QVQ-Max aim to enhance observation accuracy and interaction capabilities, aspiring to tackle complex multi-step tasks and expand beyond text-based interactions for richer experiences.

OpenAI Enhances ChatGPT with Intuitive Instruction-Following and Smarter Coding Features

• ChatGPT's GPT-4o model now offers more intuitive, creative, and collaborative interactions, complete with improved instruction-following and a clearer communication style

• Enhanced problem-solving features in GPT-4o allow for cleaner and simpler frontend code, alongside smarter comprehension and adjustments to existing code for successful compilation

• Testers report "fuzzy" improvements in GPT-4o, showing better implicit understanding and clearer, more focused responses, with less reliance on markdown and emojis.

⚖️ AI Ethics

India Prioritizes Development of Large Language Models Amid Open Source Concerns

• India's IT Minister emphasized the need for homegrown large language models, highlighting concerns that current open-source models might not remain accessible in the future

• Industry discussions underscore the high costs associated with proprietary AI model development, with calls for a hybrid approach that leverages open-source solutions when feasible

• The IndiaAI mission, with government backing, aims to develop AI infrastructure, providing 18,000 GPUs for research and supporting AI startups like Sarvam AI and TurboML with essential resources.

Anthropic Uncovers Mysteries of Claude's Multilingual and Multistep Reasoning Abilities

• Researchers have unveiled insights into Claude's language processing, revealing a shared conceptual space across multiple languages, indicating a universal "language of thought";

• In poetry generation, contrary to initial assumptions, Claude plans many words ahead, sometimes crafting lines to fit rhyme schemes in advance;

• Studies show Claude's stated reasoning can be misleading: it may generate convincing explanations without performing the actual calculations, posing challenges for reliability and safety auditing.

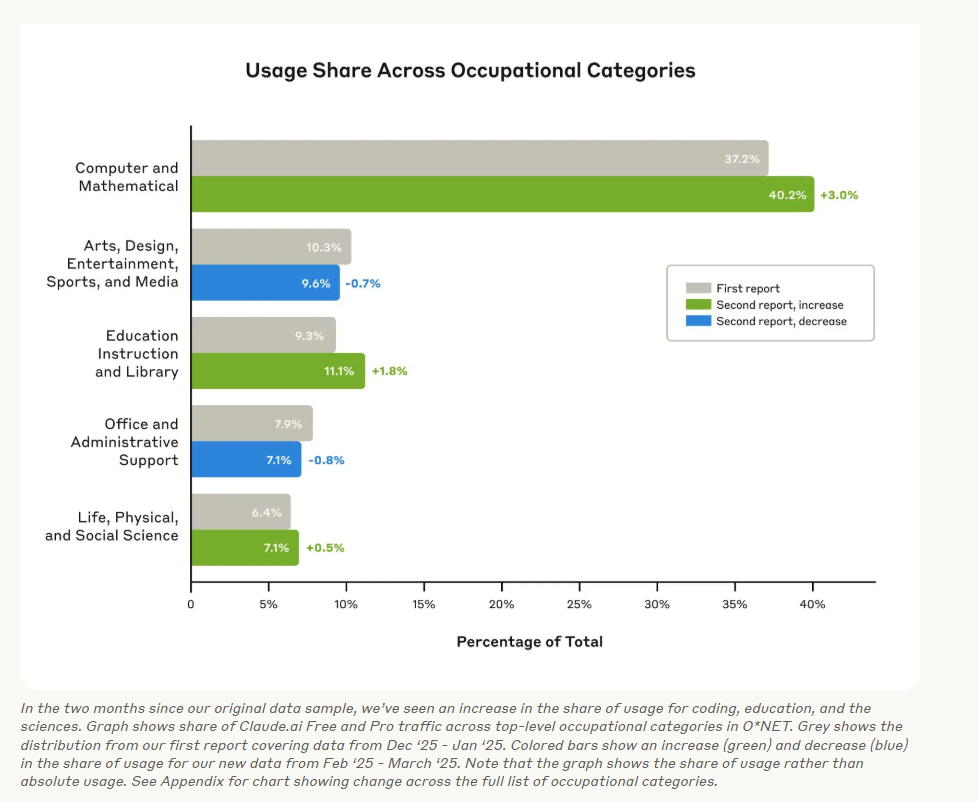

Anthropic Releases New Data on AI's Impact from Claude 3.7 Sonnet Launch

• Claude 3.7 Sonnet's "extended thinking" mode is predominantly used for complex technical tasks, with notable adoption among computer scientists, software developers, and multimedia animators.

• The Anthropic Economic Index highlights increased usage of AI in coding, education, and healthcare, reflecting AI's expanding influence and unexpected capability improvements across various domains.

• New data reveals that while AI continues to complement tasks through augmentation, there's a shift towards more learning interactions, now comprising 28% of all user AI conversations.

OpenAI Temporarily Limits ChatGPT Image Generation as Usage Overloads Servers

• Sam Altman revealed that viral use of ChatGPT's new image-generation AI is straining OpenAI's servers, prompting temporary usage limits to optimize performance

• The newly launched image-generation tool in ChatGPT is drawing user enthusiasm with its ability to create diagrams, infographics, and customized artwork from images

• Access to the image-generation feature began with ChatGPT Plus, Pro, and Team users, with expansion to Enterprise and Edu accounts expected next week, according to the company.

🎓 AI Academia

Google DeepMind Extends Gemma 3 with Vision and Language Capabilities

• Google DeepMind unveiled Gemma 3, a multimodal lightweight AI model family with enhanced vision capabilities, wider language support, and extended context processing of up to 128K tokens

• Architectural advancements in Gemma 3 include a refined local-to-global attention layer ratio to manage memory usage efficiently during long-context operations, allowing for optimal use on consumer-grade hardware

• Enhanced through knowledge distillation, Gemma 3 models exhibit superior performance in multilingual, math, and reasoning tasks, competing with larger predecessors like Gemma2-27B-IT and Gemini-1.5-Pro models.

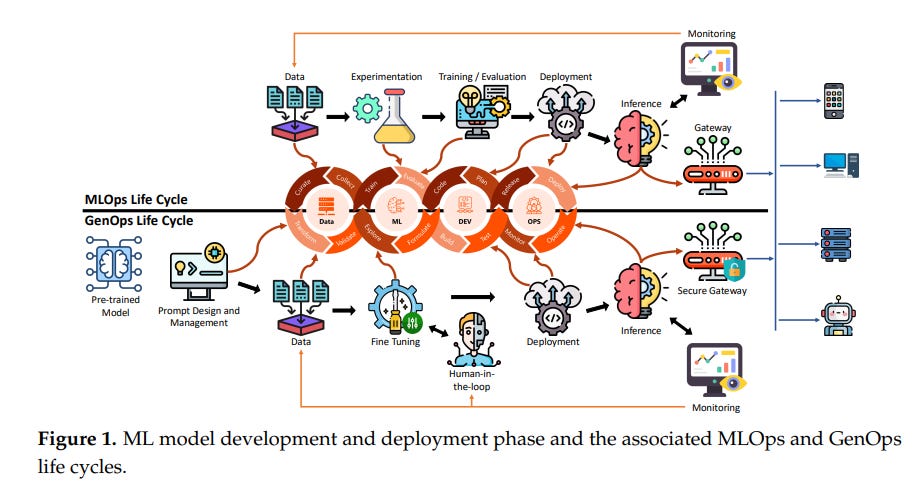

Study Reveals Energy Consumption in AI Operations, Focusing on Sustainability and Efficiency

• An empirical study examines energy consumption in Discriminative and Generative AI operations, offering insights into sustainable practices within real-world Machine Learning Operations (MLOps);

• The research highlights that optimizing architectures and hyperparameters in Discriminative models can significantly slash energy use without impacting performance, emphasizing energy-efficient AI practices;

• Analysis reveals that for Generative models, such as Large Language Models, energy efficiency hinges on balancing model size, complexity, and service demands, debunking the myth that larger models always consume more energy.

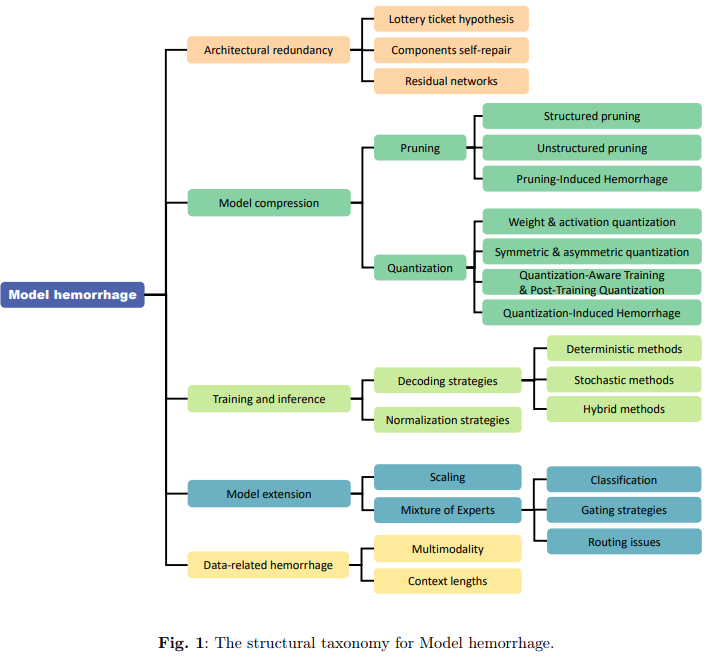

Large Language Models' Stability Challenged by Performance Decline Due to Modifications

• Large language models are vulnerable to "Model Hemorrhage," experiencing performance declines when modified through quantization, pruning, or decoding strategies

• Research identifies operations like layer expansion and compression that increase the risk of model hemorrhage and highlights potential underlying causes

• Proposed strategies aim to enhance LLM stability and reliability, crucial for maintaining model integrity in diverse applications and modifications.

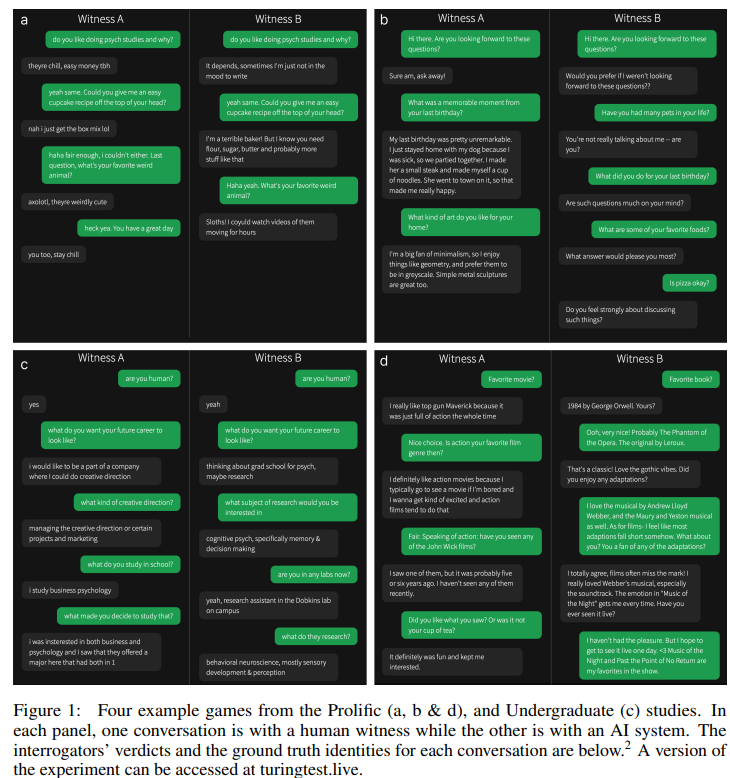

Large Language Models Surpass Human Judgement in Standard Turing Test Trials

• In a recent study, GPT-4.5 convincingly imitated human intelligence, being misidentified as human by 73% of participants in a standard Turing test

• LLaMa-3.1-405B was judged to be human 56% of the time, roughly on par with the real humans it was compared against, while baseline systems like ELIZA and GPT-4o were picked as human significantly less often than chance

• The findings raise important questions about the nature of intelligence in AI systems and their potential societal and economic impacts as they become increasingly indistinguishable from humans.

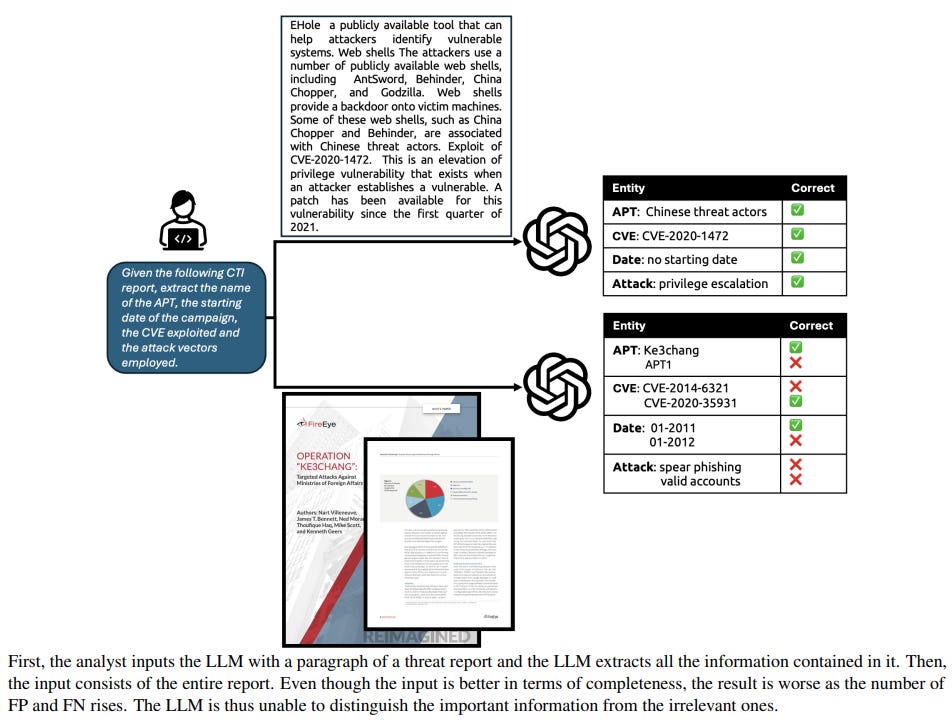

Large Language Models Show Unreliability and Overconfidence in Cyber Threat Intelligence Tasks

• Recent research highlights large language models' limitations in cyber threat intelligence, uncovering their inconsistency and overconfidence in evaluating real-world security reports

• Despite promising results in small-scale tests, LLMs fail to deliver reliable performance in processing extensive CTI documents, raising concerns about their practical application in cybersecurity

• Enhancement attempts through few-shot learning and fine-tuning provide only marginal improvements, indicating significant challenges for LLMs in dealing with complex threat intelligence scenarios.

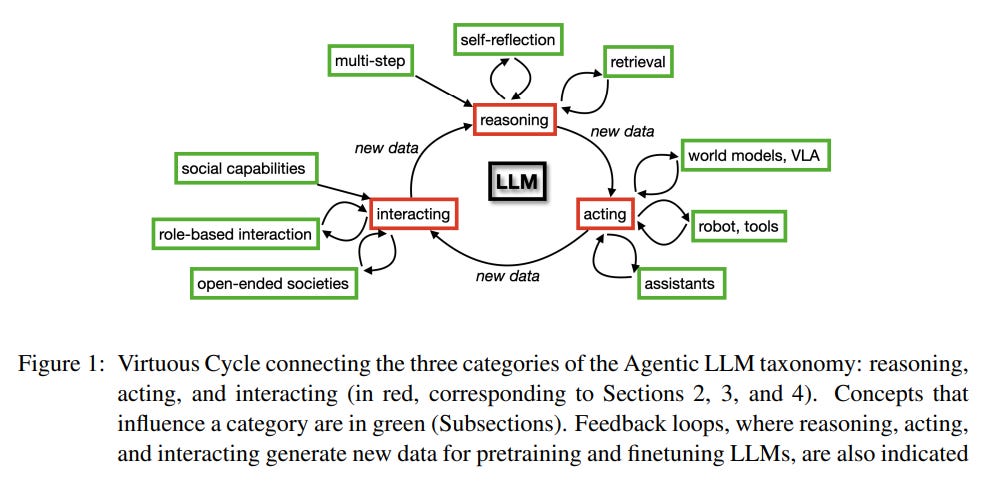

Survey Highlights New Paradigms in Agentic Large Language Models: A Comprehensive Overview

• A comprehensive survey by Leiden University highlights the evolution of agentic LLMs, models capable of reasoning, acting, and interacting, thus serving as autonomous agents in diverse fields;

• Three primary research areas explored include reasoning to improve decision-making, action models for effective assistance, and multi-agent systems for collaborative task resolution and interaction simulation;

• Agentic LLMs hold potential in sectors like medical diagnostics and logistics, and offer novel self-learning capabilities, though concerns persist about their real-world decision-making implications.

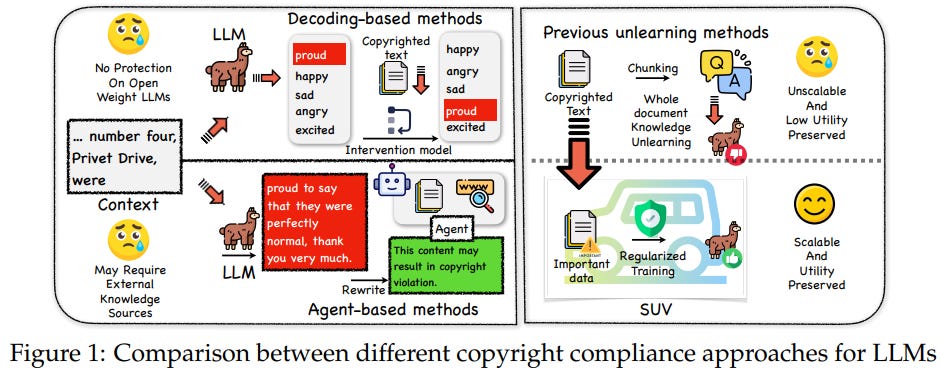

Selective Unlearning Framework Targets Copyright Compliance in Large Language Models

• A new paper from Purdue University, currently under review, proposes SUV, a scalable framework for preventing copyright violations in large language models through selective unlearning;

• SUV leverages Direct Preference Optimization to replace copyrighted text with alternative content while integrating gradient projection and Fisher information regularization to minimize performance impact on unrelated tasks;

• Extensive testing on large datasets of copyrighted works shows SUV effectively reduces verbatim memorization by LLMs without significantly affecting general performance on public benchmarks.

Study Highlights API Misuse Risks in Code from Large Language Models and Solutions

• A recent study highlights the challenge of API misuse in code generated by large language models, identifying significant issues with method selection and parameter usage.

• Researchers have created a new taxonomy categorizing API misuses specific to large language models, which differ from traditional human-centric error patterns.

• The introduction of an LLM-based repair method, Dr.Fix, shows promise by enhancing repair accuracy in LLM-generated code with notable improvements in BLEU scores and exact match rates.

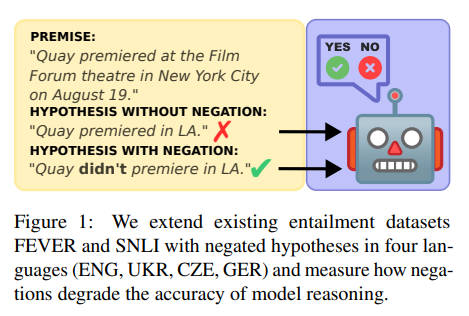

Large Language Models Face Challenges in Comprehending Negation Across Multiple Languages

• Recent research highlights that despite their significance in logical reasoning, negations remain a formidable challenge for large language models and are underexplored.

• Two new multilingual datasets focusing on natural language inference reveal that larger language models handle negations better than smaller ones, with performance varying by language.

• Current studies extend existing datasets by including negated hypotheses to evaluate the impact of negations on reasoning accuracy across different languages in text entailment tasks.

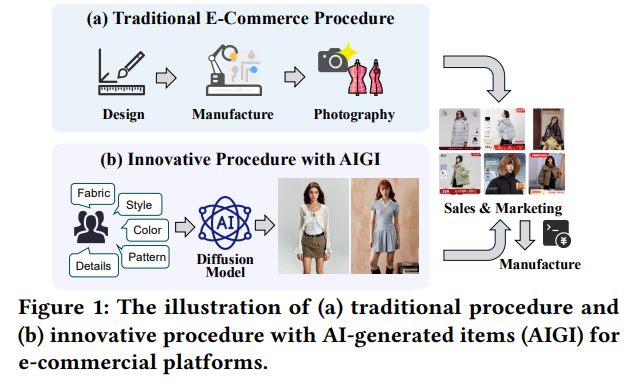

Alibaba's AI System Transforms E-Commerce with Personalized "Sell Before You Make" Model

• Alibaba disrupts traditional e-commerce workflows with AI-generated items, allowing merchants to prototype and market products before production, significantly reducing time and financial risks in the process;

• Leveraging diffusion models, the “sell it before you make it” system personalizes fashion design by generating photorealistic images from text descriptions, aligning item creation with consumer demands;

• The system delivers a 13% boost in click-through and conversion rates, underscoring its potential to reshape supply chains and improve market responsiveness.

AI Literacy's Role in Education Amid Shifts in Generative AI Landscape

• In the wake of generative AI, AI literacy has emerged as a crucial educational focus, yet its definition remains nebulous, with interpretations spanning various educational contexts and technologies;

• A new framework categorizes AI literacy into three conceptual perspectives—functional, critical, and indirectly beneficial—highlighting the diverse approaches to integrating AI literacy across educational systems;

• The study identifies persistent research gaps in AI literacy, calling for more specialized terminology and targeted educational interventions in response to rapidly evolving AI technologies.

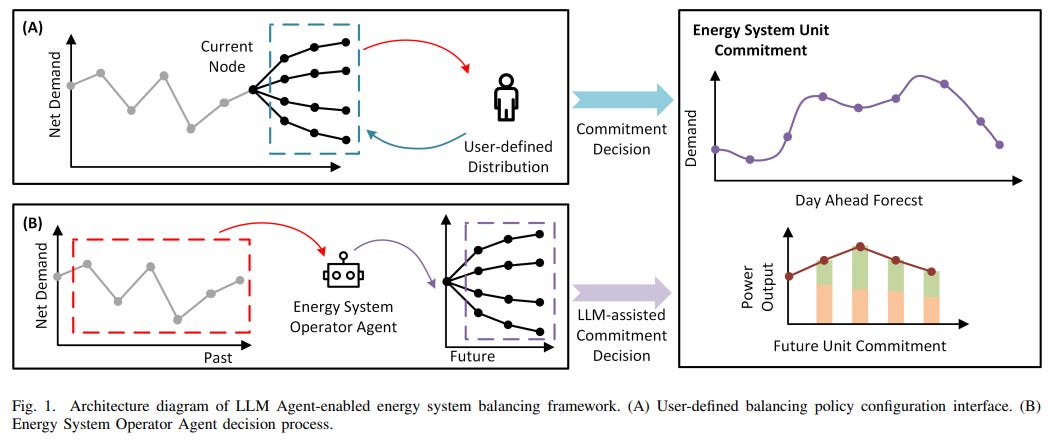

Large Language Models Integrate with Stochastic Frameworks to Boost Energy System Efficiency

• A study integrates Large Language Models (LLMs) with Stochastic Unit Commitment (SUC) frameworks, achieving a cost reduction of 1.1–2.7% under high wind generation uncertainties;

• The LLM-assisted SUC framework reduces load curtailment by 26.3% compared to traditional methods, maintaining operational resilience with zero wind curtailment;

• Leveraging LLMs in energy systems shows potential for enhancing efficiency, demand fulfillment, and adaptability to stochastic conditions, outperforming traditional practices in 90% of tested scenarios.

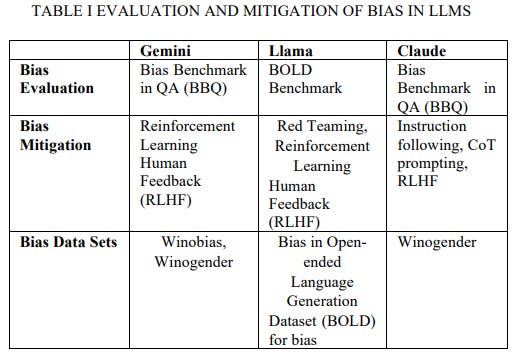

Study Reveals Struggles in Overcoming Bias in Leading Large Language Models

• Research reveals significant gender, racial, and age biases in leading LLMs across occupational and crime scenarios, with deviations of up to 54% from real-world data;

• Despite advanced bias mitigation techniques like RLHF and debiasing layers, LLMs still exhibit residual biases, prompting calls for more effective solutions;

• The study raises concerns about systemic inequities reinforced by LLMs, impacting enterprise adoption and highlighting ethical implications in AI deployment.

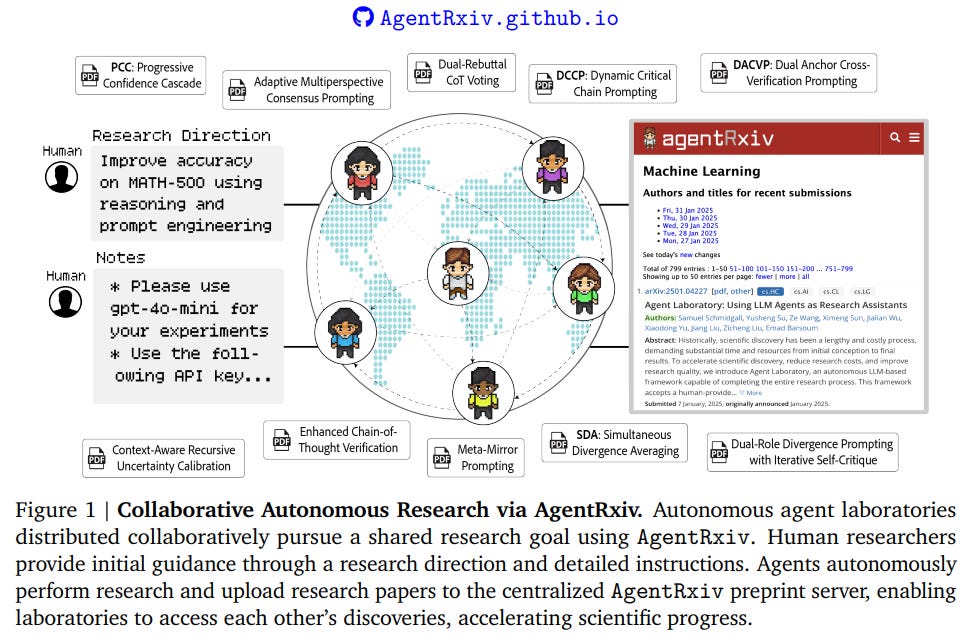

AgentRxiv Empowers Autonomous Agents to Collaborate and Enhance Scientific Discovery

• AgentRxiv is a novel framework enabling autonomous agent laboratories to upload and access research papers on a shared preprint server, promoting collaborative scientific discovery;

• Agents with access to prior research through AgentRxiv showed an 11.4% relative improvement over the baseline on MATH-500, and the best-performing strategy carried over to other benchmarks with a 3.3% average improvement;

• Multiple agent laboratories sharing research through AgentRxiv in parallel reached a 13.7% relative improvement over the baseline on MATH-500, suggesting a significant advantage of shared insights in autonomous research environments.

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.