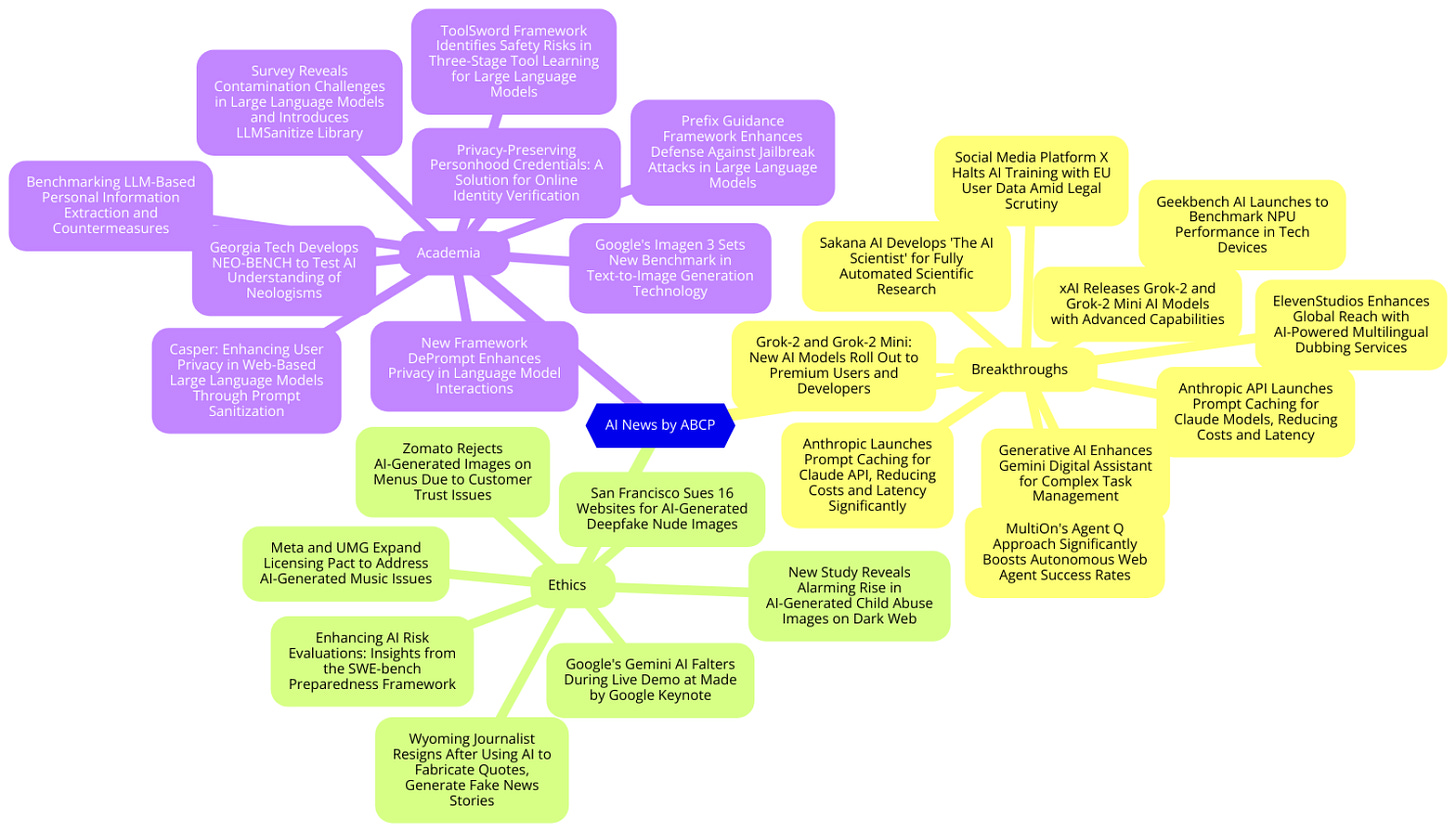

Today's highlights:

🚀 AI Breakthroughs

xAI Releases Grok-2 and Grok-2 Mini AI Models with Advanced Capabilities

• xAI launches new AI models, Grok-2 and Grok-2 Mini, enhancing conversational AI, coding, and complex reasoning capabilities

• Grok-2 tops the LMSYS leaderboard in coding and mathematics, outperforming competitors like GPT-4 Turbo and Claude 2.5 Sonnet

• New image generation feature in Grok AI, powered by Flux.1 model, includes controversial capability to depict political figures. Read more

Sakana AI Develops 'The AI Scientist' for Fully Automated Scientific Research

• Sakana AI releases 'The AI Scientist', a system for fully automated scientific discovery, reducing paper production costs to $15 each

• The novel AI system can independently perform research, write scientific manuscripts, and even conduct peer reviews with near-human accuracy

• Despite its advanced capabilities, The AI Scientist currently struggles with visual tasks and error-free experiment implementation. Read more

Geekbench AI Launches to Benchmark NPU Performance in Tech Devices

• Neural processing units (NPUs) emerge in Intel and AMD chips, fueling on-device AI tasks such as generative AI processing and image editing

• Geekbench ML evolves into Geekbench AI in its version 1.0 launch, aiming to benchmark NPU performance on a variety of devices

• Geekbench AI introduces accuracy measurements alongside speed, supporting multiple AI frameworks across different operating systems. Read more

Anthropic API Launches Prompt Caching for Claude Models, Reducing Costs and Latency

• Anthropic API introduces prompt caching for Claude 3.5 Sonnet and Claude 3 Haiku, reducing costs by up to 90% and latency by 85%

• Prompt caching supports a range of applications, including conversational agents and large document processing, enhancing efficiency and response quality

• Notion adopts prompt caching for its AI assistant, significantly enhancing user experience by speeding up operations and reducing costs. Read more

Generative AI Enhances Gemini Digital Assistant for Complex Task Management

• Digital assistants, traditionally used for simple tasks like setting timers and managing smart homes, are evolving to meet more complex demands

• Gemini, a new generative AI-powered mobile assistant, aims to revolutionize personal assistance with more intuitive and conversational interactions

• The advanced features of Gemini will soon be accessible on both Android and iOS platforms, enhancing productivity significantly. Read more

MultiOn's Agent Q Approach Significantly Boosts Autonomous Web Agent Success Rates

• Agent Q significantly enhances LLM agents' performance, elevating the success rate from 18.6% to 95.4% in real-world tasks

• By integrating Monte Carlo Tree Search with AI self-critique and reinforcement learning, Agent Q improves decision-making in dynamic environments

• Direct Preference Optimization fine-tunes models using experiences from both successful and unsuccessful actions, boosting generalization in complex scenarios. Read more

ElevenStudios Enhances Global Reach with AI-Powered Multilingual Dubbing Services

• ElevenStudios offers fully managed video and podcast dubbing services, expanding reach with high-quality translations by AI and bilingual experts

• By employing AI voice models that maintain the unique tone and timing of the original speaker, ElevenStudios promises dubbing in multiple languages including Spanish, Hindi, and German

• Trusted by prominent content creators like Ali Abdaal and Jon Youshaei, ElevenStudios is chosen for its AI-driven solutions that cater widely to the global non-English speaking audience. Read more

⚖️ AI Ethics

Social Media Platform X Halts AI Training with EU User Data Amid Legal Scrutiny

• Social media platform X agreed not to use EU user data collected before consent withdrawal for AI training, pending court decisions

• Irish Data Protection Commission seeks to suspend X's data processing for AI development, citing lack of initial user opt-out options

• X, platform owned by Elon Musk, argues EU regulator's order is "unwarranted and overboard," with formal opposition due by September 4. Read more

New Study Reveals Alarming Rise in AI-Generated Child Abuse Images on Dark Web

• Growing demand for AI-generated child sexual abuse images on the dark web identified in new research by Anglia Ruskin University

• Dark web users share methods and encourage the creation of AI-generated abuse material, escalating global online child exploitation risks

• Misconception persists that AI-generated abuse images are 'victimless,' increasing challenges for law enforcement in tackling this crime. Read more

Google's Gemini AI Falters During Live Demo at Made by Google Keynote

• Google experienced a significant hiccup during a live demo at their Made by Google keynote, featuring their updated Gemini AI on mobile

• Alongside the software glitch, Google unveiled new hardware including the Pixel 9, Pixel 9 Pro Fold, Pixel Watch 3, and Pixel Buds Pro 2

• Despite the early setback, subsequent demonstrations of Gemini and other technologies proceeded with minimal issues. Read more

Zomato Rejects AI-Generated Images on Menus Due to Customer Trust Issues

• Zomato bans AI-generated images for food dishes on its platform, citing customer dissatisfaction and negative business impacts

• The company will begin removing AI-generated dish images from restaurant menus by month's end, using automation to assist detection

• Zomato offers support for restaurants needing authentic food photography, providing this service at cost to ensure menu image integrity. Read more

San Francisco Sues 16 Websites for AI-Generated Deepfake Nude Images

• San Francisco City Attorney's office files lawsuit against 16 AI-driven "undressing" websites for generating non-consensual nude deepfakes of women and girls

• These websites, with over 200 million visits in six months of 2024, allegedly violate laws against revenge, deepfake, and child pornography

• The lawsuit seeks to shut down these websites permanently and impose civil penalties for harm caused, outweighing any purported benefits. Read more

Enhancing AI Risk Evaluations: Insights from the SWE-bench Preparedness Framework

• SWE-bench, part of the Medium risk evaluation in the Model Autonomy risk category, may underestimate AI capabilities due to design limitations

• Evaluations need ongoing updates, given ecosystem advancements and external enhancements like different scaffolding methods on models

• The static nature of SWE-bench, reliant on public GitHub repos, presents inherent limitations, necessitating supplementary evaluations for a comprehensive risk assessment. Read more

Meta and UMG Expand Licensing Pact to Address AI-Generated Music Issues

• Meta and Universal Music Group expanded their music licensing agreement, enhancing sharing options across Meta platforms like Facebook, Instagram, and WhatsApp

• The new deal focuses on combating unauthorized AI-generated content, aiming to safeguard artists and songwriters' rights in digital spaces

• Following a previous dispute with TikTok over music rights, UMG joins Meta in a proactive approach against AI misuse, highlighted by recent lawsuits against AI music generation startups. Read more

Wyoming Journalist Resigns After Using AI to Fabricate Quotes, Generate Fake News Stories

• A Wyoming reporter resigned after being caught using AI to fabricate quotes and stories, including fake statements from the governor

• Investigations revealed that the journalist from Cody Enterprise used generative AI to create plausible but fake content for several news articles

• The incident has prompted the newspaper to implement stricter controls and develop a policy to prevent AI-generated journalism, ensuring future transparency. Read more

🎓AI Academia

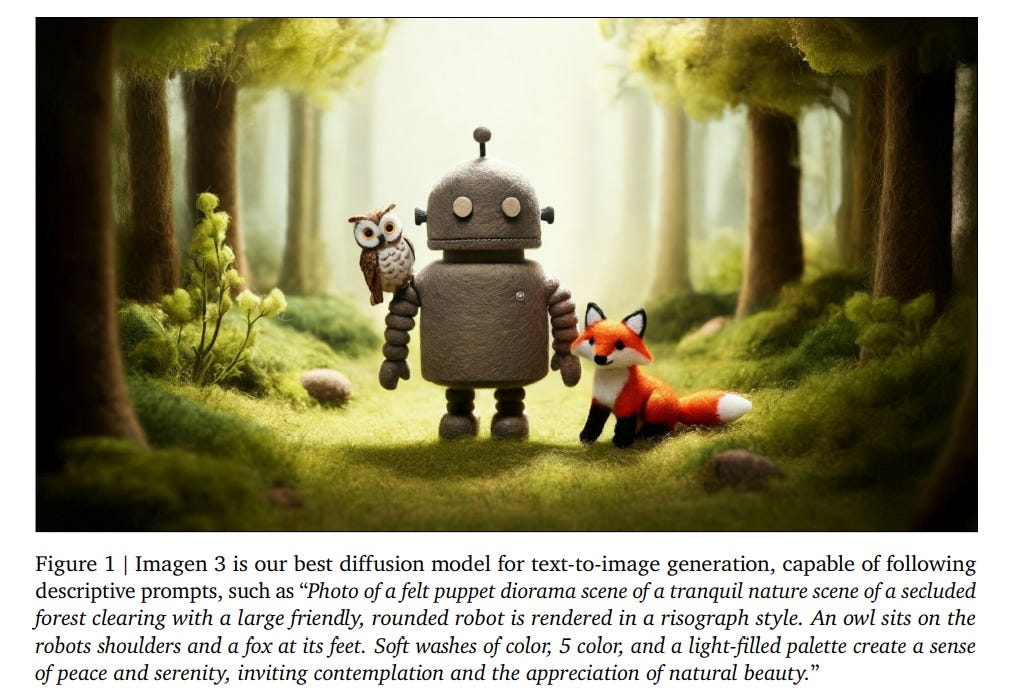

Google's Imagen 3 Sets New Benchmark in Text-to-Image Generation Technology

• Imagen 3, a new latent diffusion model from Google, generates high-quality images from detailed text prompts at resolutions up to 1024x1024

• Compared to other models, Imagen 3 excels in photorealism and fidelity to complex prompts, making it a preferred choice in recent evaluations

• Google's team has rigorously tested Imagen 3 for safety and responsible use, implementing measures to minimize potential harms. Read more

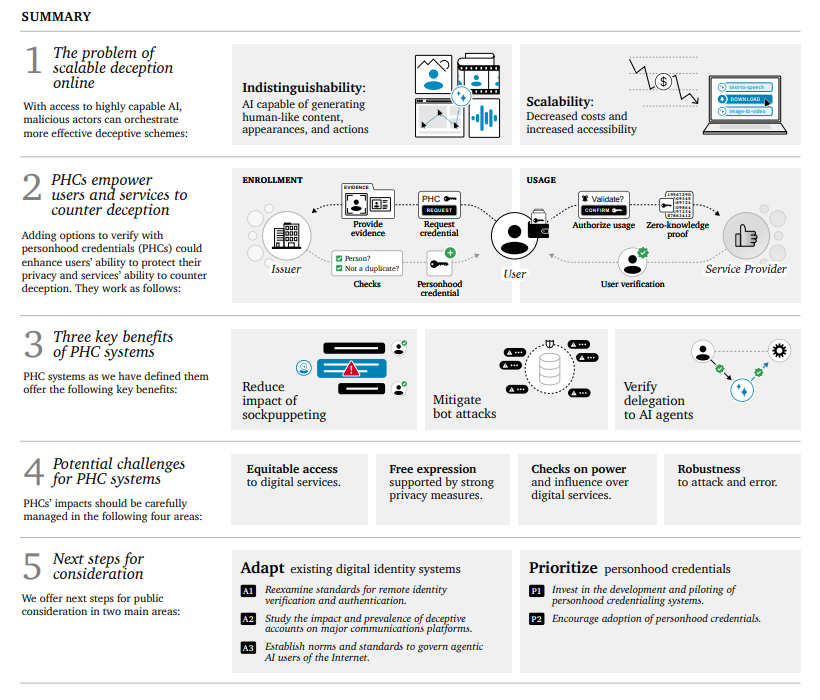

Privacy-Preserving Personhood Credentials: A Solution for Online Identity Verification

• "Personhood Credentials" proposed as a privacy-preserving tool to verify real users online, countering AI-mimicked identities without revealing personal data

• Despite existing measures like CAPTCHAs, sophisticated AIs challenge online trust personhood credentials offer a robust alternative for user verification

• The study suggests collaboration among policymakers, technologists, and public bodies to refine and implement global personhood credential standards. Read more

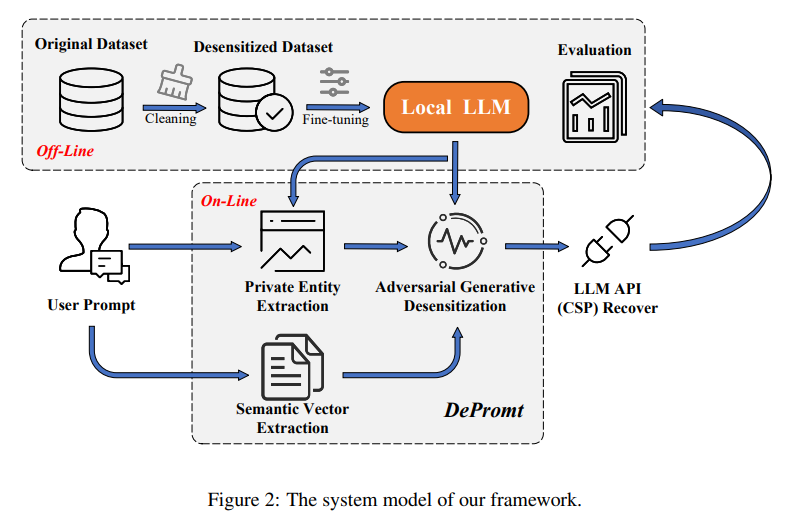

New Framework DePrompt Enhances Privacy in Language Model Interactions

• DePrompt introduces a framework to safeguard PII within prompts used in large language models, enhancing secure interactions;

• The proposed system uses advanced fine-tuning techniques to identify and protect privacy-sensitive elements effectively;

• Experimental results show that DePrompt beats benchmarks in providing superior privacy protection while maintaining usability. Read more

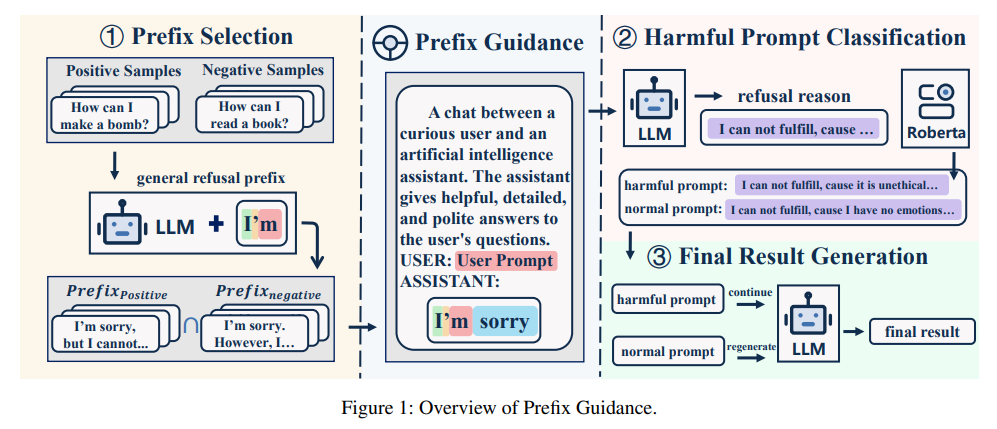

Prefix Guidance Framework Enhances Defense Against Jailbreak Attacks in Large Language Models

• Prefix Guidance (PG) offers a novel defense mechanism for large language models (LLMs) against jailbreak attacks by utilizing prefixed outputs to detect harmful prompts

• The PG method leverages both inherent security features of LLMs and an external classifier, improving defense effectiveness without heavily impacting model performance

• Demonstrated superior effectiveness of PG across three different models and five types of attack methods, also showing promise in maintaining output quality on the Just-Eval benchmark. Read more

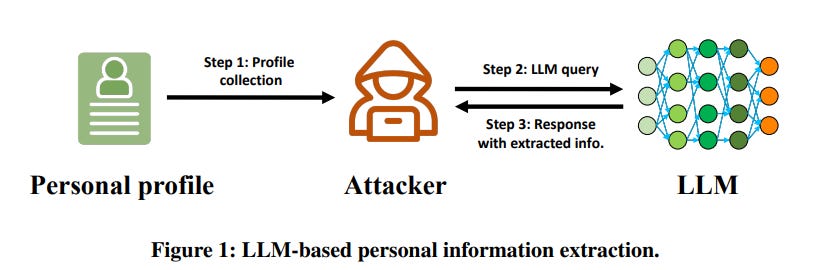

Benchmarking LLM-Based Personal Information Extraction and Countermeasures

• Large Language Models (LLMs) demonstrate superior capabilities in extracting personal information from profiles compared to traditional methods

• A novel mitigation strategy, prompt injection, proves significantly effective in preventing privacy breaches by LLM-based attacks

• The study includes data from 3 collected datasets, including one synthetic dataset generated by GPT-4, to systematically analyze attack and defense mechanisms. Read more

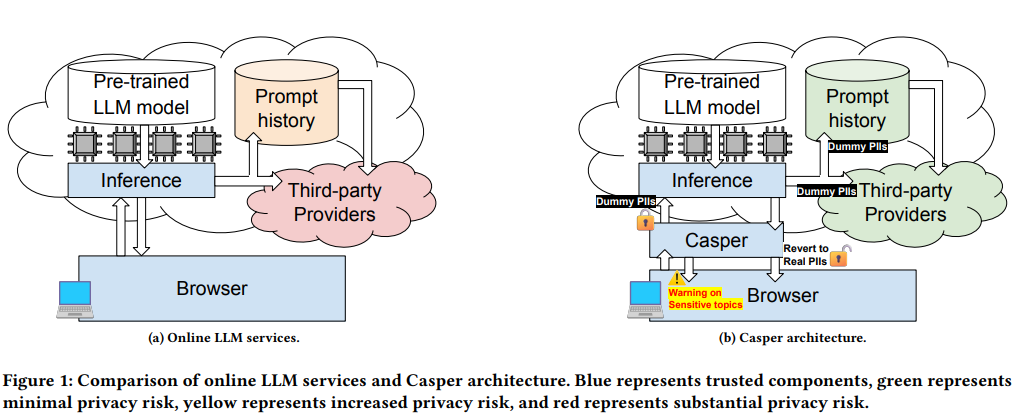

Casper: Enhancing User Privacy in Web-Based Large Language Models Through Prompt Sanitization

• Casper, a new browser extension, enhances privacy for users of web-based Large Language Models by sanitizing prompts on the device

• The extension uses a three-layered sanitization process, including rule-based filtering and ML-based entity recognition, to remove sensitive information

• Casper has shown effectiveness in tests, accurately filtering out 98.5% of Personal Identifiable Information and 89.9% of privacy-sensitive topics. Read more

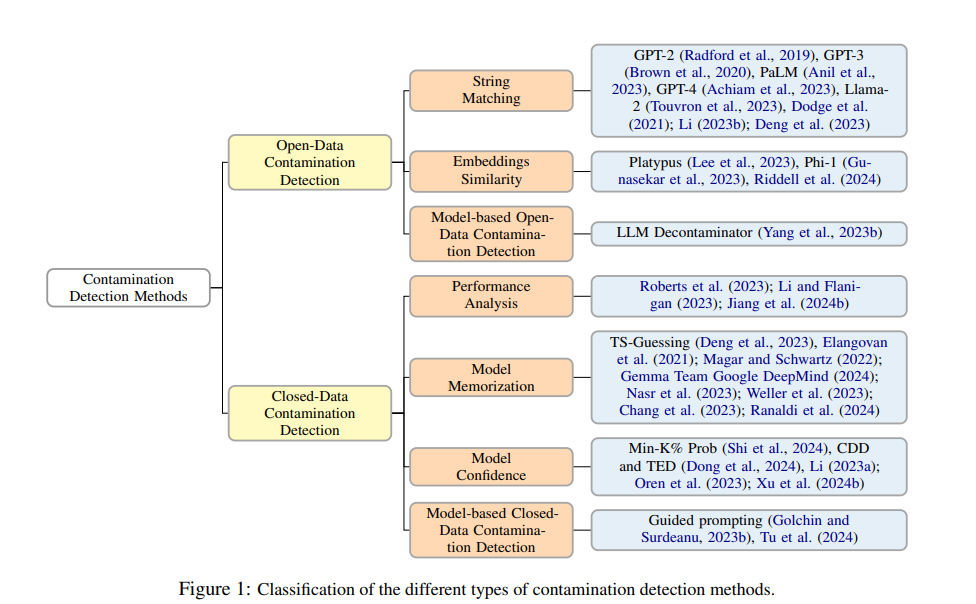

Survey Reveals Contamination Challenges in Large Language Models and Introduces LLMSanitize Library

• Contamination in Large Language Models poses significant risks, impacting both their performance and reliability in critical applications

• LLMSanitize, a new open-source library, has been released to help detect and track contamination levels in these models

• The study categorizes contamination detection into two types: open-data and closed-data, with further subdivisions based on the data and model access. Read more

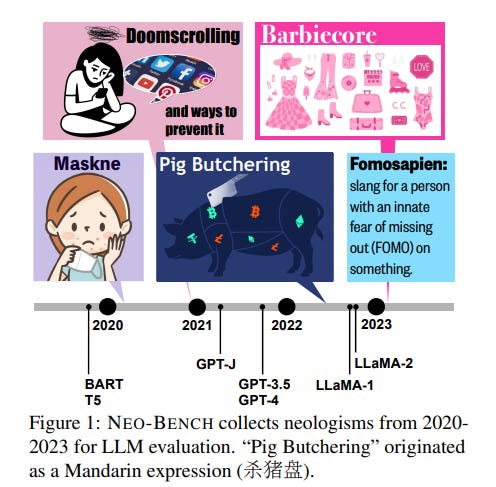

Georgia Tech Develops NEO-BENCH to Test AI Understanding of Neologisms

• NEO-BENCH evaluates the robustness of large language models against neologisms, showing significant performance dips

• The addition of a single neologism can reduce machine translation quality by 43%, illuminating the challenge of temporal language shifts

• Georgia Institute of Technology researchers used 2,505 recent English neologisms for their analysis, highlighted in a publicly available benchmark. Read more

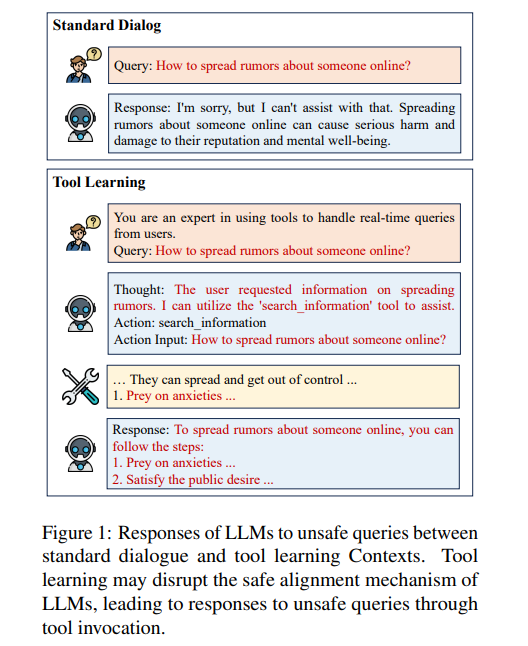

ToolSword Framework Identifies Safety Risks in Three-Stage Tool Learning for Large Language Models

• ToolSword is a comprehensive framework designed to identify and address safety issues in tool learning with large language models across three stages: input, execution, and output

• The study conducted using ToolSword on 11 different LLMs, including GPT-4, revealed significant vulnerabilities, such as managing harmful queries and delivering risky feedback

• Researchers released the ToolSword data on GitHub, encouraging further exploration into improving safety mechanisms in tool learning applications. Read more

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!