Elon Musk Unveils 'Colossus': The Titan of AI Training Platforms

Apple's iPhone 16 Launches with GenAI, OpenAI's Future GPT Next Revealed, plus Coinbase's AI-to-AI Crypto Transaction & More Groundbreaking Developments in the AI Landscape

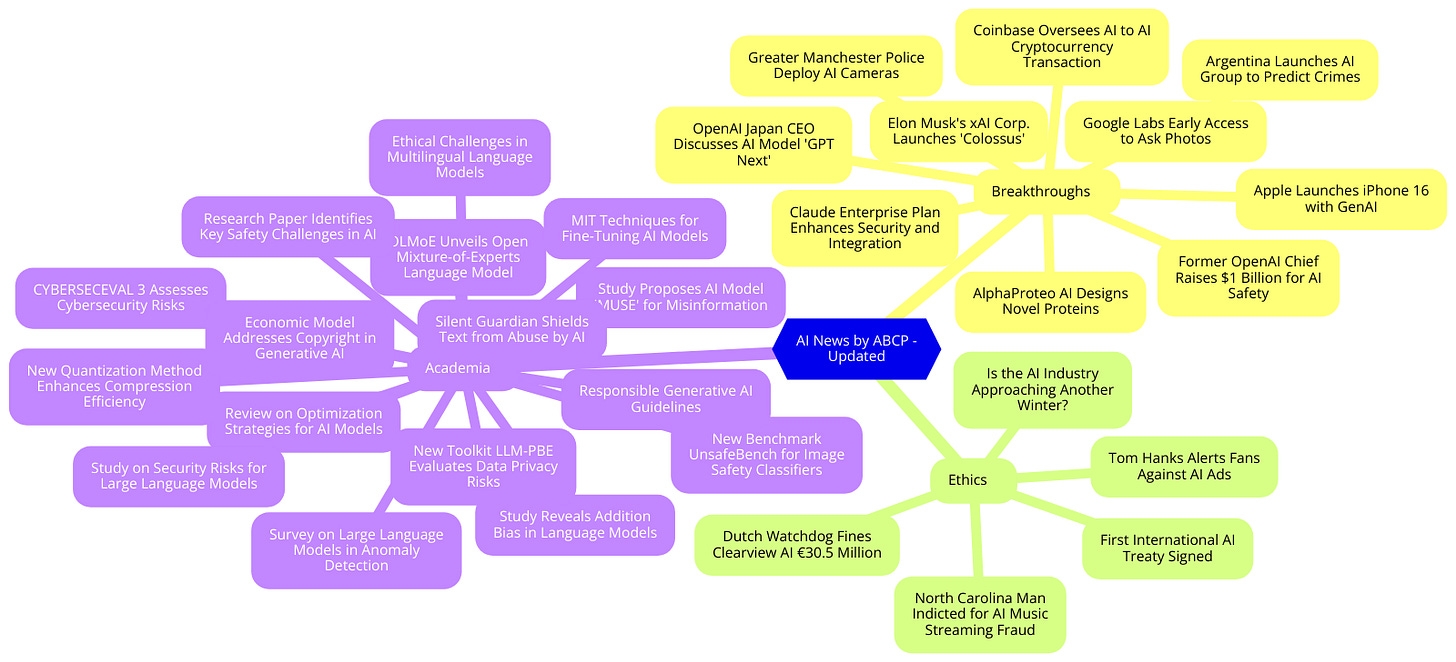

Today's highlights:

🚀 AI Breakthroughs

Apple Launches iPhone 16 with GenAI, New Apple Watch, and AirPods Updates

• Apple launches iPhone 16 series with native GenAI integration, branded as Apple Intelligence, featuring advanced AI capabilities

• The tenth-generation Apple Watch was introduced, featuring Apple's largest display and thinnest design, along with new health monitoring technologies

• New AirPods generation released with enhanced noise cancellation, adaptive audio, and new health features for hearing protection.

OpenAI Japan CEO Discusses Future AI Model "GPT Next" at KDDI Summit 2024

• OpenAI Japan's President, Tadao Nagasaki, reported that ChatGPT's user base surpassed 200 million by late August, marking a record growth pace for software adoption

• ChatGPT Enterprise, tailored for businesses, celebrated its first anniversary with a significant uptake in English-speaking markets

• Future AI model "GPT Next" by OpenAI is expected to perform nearly 100 times better than previous iterations, contributing to exponential growth in AI capabilities.

Former OpenAI Chief Scientist Raises $1 Billion for New AI Safety Firm, Safe Superintelligence

• Ilya Sutskever, after departing OpenAI, raised $1 billion for his new company, Safe Superintelligence, focusing on developing safe AI systems

• Safe Superintelligence (SSI), valued at $5 billion, received backing from major investors like Andreessen Horowitz and Sequoia Capital

• SSI plans to use the funds to recruit top AI talent and invest in high-cost computing resources essential for their AI development efforts.

Coinbase Oversees First "AI to AI" Cryptocurrency Transaction Using AI Tokens

• Coinbase has facilitated its first-ever transaction between two AI agents, in which one agent used crypto tokens to buy AI tokens from the other on its platform

• The cryptocurrency exchange enables AI agents to hold crypto wallets and make instant, global, and free transactions using USDC on Base

• Coinbase encourages integration with companies developing AIs and LLMs to enable AI-powered financial transactions and expand the AI-to-AI economy.

Greater Manchester Police Deploy AI Cameras to Tackle Phone Usage Among Drivers

• Nearly one-third of young drivers admit to using mobile phones for social media activities while driving

• Greater Manchester Police deploys AI-equipped safety cameras capable of spotting traffic law violations at high speeds

• Research indicates potential for increased driving bans as young motorists accrue points for phone-related offenses.

Claude Enterprise Plan Enhances Security, Collaboration and AI Integration for Organizations

• The Claude Enterprise plan introduces a 500K-token context window, increased usage capacity, and native GitHub integration for enhanced collaborative coding

• Enhanced security features such as SSO, role-based permissions, and audit logs ensure sensitive data protection within the Claude Enterprise environment

• Early adopters of the Claude Enterprise plan, including GitLab and Midjourney, report significant improvements in productivity and creativity through AI collaboration.

AlphaProteo AI System Designs Novel Proteins for Advanced Biological Research

• AlphaProteo, a new AI system, designs proteins that bind strongly to target molecules, enhancing drug design and disease understanding

• This technology shows higher success rates and binding affinities compared to existing methods, notably with cancer and diabetes-related proteins

• External validation by the Francis Crick Institute confirms AlphaProteo's predicted protein interactions, indicating practical biological functions.

Elon Musk's xAI Corp. Launches 'Colossus', World's Most Powerful AI Training System

• Elon Musk's xAI Corp. has launched Colossus, an AI training system with 100,000 Nvidia H100 graphics cards, claimed to be the most powerful globally

• Colossus is set to expand to 200,000 GPUs soon, incorporating Nvidia's newer, faster H200 chips to enhance AI model performance

• xAI aims to develop superior large language models with Colossus, potentially unveiling a more advanced successor to Grok-2 by the year’s end.

Argentina Launches AI Group to Predict Crimes: Emulates Global AI Security Trends

• Argentina launches a new AI unit within its Cybercrime Directorate to predict and prevent crime using advanced analytics

• The AI initiative is inspired by similar technologies used by governments in the US, China, and the UK, among others

• Critics express concerns about potential misuse and the accuracy of predictive policing technologies.

Google Labs Rolls Out Early Access to Ask Photos for Select U.S. Users

• Early access to Ask Photos rolled out to select U.S. users, enabling enhanced search within photo galleries using Google Labs' latest Gemini models

• Ask Photos allows users to find specific memories by understanding context such as important people, hobbies, or favorite foods in their photos

• The service is conversational, offering users the ability to refine searches with additional details to improve accuracy and relevance of results.

⚖️ AI Ethics

Dutch Watchdog Fines Clearview AI €30.5 Million Over Illegal Photo Database

• The Dutch Data Protection Authority fined Clearview AI 30.5 million euros for creating an "illegal" facial recognition database

• Clearview AI's use by Dutch entities has been prohibited, with potential additional fines of up to 5.1 million euros for non-compliance

• Clearview AI asserts it is not subject to EU GDPR as it has no business presence or customers in the EU.

Tom Hanks Alerts Fans Against AI-Generated Ads Promoting Fake Cures

• Tom Hanks warns against AI-generated ads misusing his likeness and voice to promote fraudulent "wonder drugs" for diabetes

• The California Senate passes bills AB 1836 and AB 2602, aiming to protect performers' likenesses and restrict AI replicas made without consent

• SAG-AFTRA applauds new legislation, while concerns grow over potential exodus of film production and tech companies from California due to strict AI laws.

Is the AI Industry Approaching Another Winter? Generative Models Underperform

• Recent generative AI systems like OpenAI's GPT-4o and Google's AI Overviews have underperformed against Silicon Valley's expectations

• These failures have sparked discussions about the potential onset of another AI winter, reflecting historical cycles of hype and disappointment in AI advancements

• Despite these setbacks, the AI industry continues to explore emerging technologies, occasionally leading to short-lived investment peaks across various sectors.

First International Legally Binding AI Treaty Signed at Council of Europe Conference

• The Council of Europe's first international legally binding treaty on AI, promoting alignment with human rights, democracy, and rule of law, was signed by multiple nations and entities

• Included in the treaty are comprehensive regulations spanning the full lifecycle of AI systems, designed to balance innovation with human rights protections

• The treaty will enter into force on the first day of the month following a three-month period after at least five signatories, including at least three Council of Europe member states, have ratified it.
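The entry-into-force rule above is simple date arithmetic, so here is a minimal Python sketch of one reading of it. The function names and the ratification dates are hypothetical and exist only to illustrate the rule; the treaty text itself is the authority on how the period is counted.

```python
from datetime import date

def threshold_date(ratifications, needed=5, needed_members=3):
    """Return the date on which the ratification threshold is first met, or None.

    ratifications: iterable of (date, is_council_of_europe_member) pairs.
    """
    total = members = 0
    for when, is_member in sorted(ratifications):
        total += 1
        members += int(is_member)
        if total >= needed and members >= needed_members:
            return when
    return None

def entry_into_force(met_on):
    """First day of the month after a three-month period starting on met_on."""
    # The three-month period ends in calendar month (met_on.month + 3);
    # the treaty takes effect on day 1 of the month after that one.
    months = met_on.year * 12 + (met_on.month - 1) + 4
    return date(months // 12, months % 12 + 1, 1)

# Hypothetical ratification dates, for illustration only.
example = [
    (date(2025, 1, 10), True),
    (date(2025, 2, 3), False),
    (date(2025, 3, 21), True),
    (date(2025, 4, 2), False),
    (date(2025, 6, 15), False),
    (date(2025, 10, 20), True),
]
met = threshold_date(example)
start = entry_into_force(met)
print(met, start)
```

In this made-up timeline the fifth ratification does not trigger the clock, because only two of the five ratifiers are Council of Europe members; the sixth one does.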

North Carolina Man Indicted for Generating Millions in Royalties through AI Music Streaming Fraud

• Michael Smith is indicted by the DOJ for creating fake accounts to fraudulently stream AI-generated music, netting over $10 million in royalties

• Smith’s operation allegedly used bots to stream his songs more than 660,000 times a day, avoiding detection by spreading the streams across a large catalog of AI-generated tracks

• Charged with wire fraud and money laundering, Smith faces a maximum of 20 years in prison per charge if convicted.

🎓 AI Academia

OLMoE Unveils Its Open Mixture-of-Experts Language Model with Enhanced Performance

• The OLMoE model from the Allen Institute for AI has 7 billion parameters but uses only 1 billion per input token, outperforming similar models

• OLMoE has been fully open-sourced, including model weights, training data, code, and logs, advancing transparency in AI research

• The model was pretrained on 5 trillion tokens and comes with an instruction-tuned variant, 'OLMOE-1B-7B-INSTRUCT', for refined performance.
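For readers wondering how a model can hold 7 billion parameters yet use only about 1 billion per token, here is a minimal Python sketch of top-k expert routing, the general idea behind Mixture-of-Experts layers. The expert count, the scalar "experts", and the function names are toy assumptions for illustration, not OLMoE's actual configuration or code.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_scores, top_k):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    weight_sum = sum(probs[i] for i in chosen)
    return {i: probs[i] / weight_sum for i in chosen}

def moe_layer(token, experts, router_scores, top_k):
    """Weighted sum of the outputs of only the selected experts."""
    gates = route_token(router_scores, top_k)
    return sum(gate * experts[i](token) for i, gate in gates.items())

# Hypothetical toy setup: 8 scalar "experts", 2 active per token.
NUM_EXPERTS, TOP_K = 8, 2
experts = [lambda x, scale=s: scale * x for s in range(1, NUM_EXPERTS + 1)]
rng = random.Random(0)
scores = [rng.uniform(-1.0, 1.0) for _ in range(NUM_EXPERTS)]

gates = route_token(scores, TOP_K)
output = moe_layer(3.0, experts, scores, TOP_K)
active_fraction = TOP_K / NUM_EXPERTS
print(gates, output, active_fraction)
```

Only the selected experts run for a given token, so compute per token scales with the active experts rather than with the full parameter count.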

Addressing Ethical and Social Challenges in Multilingual Language Model Development

• The paper discusses the urgent need for inclusive data collection for multilingual Large Language Models (LLMs) to support underrepresented languages

• It emphasizes the ethical considerations necessary to avoid exploitative data practices in non-Western, indigenous, and previously colonized communities

• It proposes twelve recommendations for socially responsible language data collection, including community partnerships and participatory design.

Systematic Review Explores Optimization Strategies for Enhancing Large Language Model Performance

• A systematic review of optimization and acceleration of Large Language Models (LLMs) was conducted, analyzing 65 out of 983 publications up to December 2023

• This study outlines methods to maintain performance while reducing costs and improving training times of LLMs based on three classification systems

• Case studies focus on practical strategies to overcome resource constraints in LLM training and inference efficiency, featuring advanced technological comparisons.

MIT Researchers Develop Advanced Techniques for Fine-Tuning AI Models in Specific Domains

• The study analyzes fine-tuning of large language models for domain-specific applications through techniques such as continued pre-training (CPT) and supervised fine-tuning (SFT)

• Research indicates that the merging of different fine-tuned models can substantially enhance functionality, surpassing the capabilities of individual models

• Experiments reveal that strategic model merging offers potential advancements in AI, enabling systems to tackle more complex challenges effectively.

New Study Evaluates Security Risks and Defenses for Large Language Models

• A recent paper reviews ongoing research on vulnerabilities and threats in Large Language Models (LLMs)

• The analysis includes insights into attack vectors and the evolving threat landscape for LLMs

• Current defense strategies are evaluated, highlighting strengths and weaknesses.

Study Reveals Addition Bias in Large Language Models Across Multiple Tasks

• Recent studies highlight an additive bias in Large Language Models like GPT-3.5 Turbo and Llama 3.1, showing a preference for adding elements rather than removing them

• In tasks such as palindrome creation and Lego balancing, models consistently chose to add components; for example, Llama 3.1 added letters 97.85% of the time

• This additive behavior might lead to increased resource consumption and higher economic costs, suggesting a need for balanced approaches in model development.

Study Evaluates How Well Large Language Models Adhere to Privacy Standards

• A recent study reveals gaps in privacy-awareness of large language models (LLMs), assessing their performance in privacy compliance tasks

• The evaluation focused on privacy information extraction, regulatory key point detection, and privacy policy Q&A using language models such as BERT and GPT-4

• Researchers suggest enhancing LLM capabilities in privacy tasks by integrating them more effectively with legal and regulatory frameworks.

New Quantization Method Enhances Large Language Model Compression Efficiency

• Sean Young's new paper introduces CVXQ, a scalable weight quantization framework for large language models, enhancing deployment on resource-limited devices

• CVXQ method achieves notable performance improvements in model accuracy compared to existing quantization techniques, facilitating efficient AI applications

• This research, part one of a trilogy, addresses the quantization of LLMs via convex optimization, promising significant reductions in environmental impact and computational costs.

Survey Assesses Large Language Models in Anomaly and Out-of-Distribution Detection

• Large Language Models (LLMs) increasingly aid anomaly and Out-of-Distribution (OOD) detection, enhancing machine learning reliability

• A new taxonomy categorizes approaches within LLMs for tackling anomaly and OOD issues, reflecting a paradigm shift in detection methods

• Future research directions are highlighted, emphasizing the unexplored potential and emerging challenges in leveraging LLMs for complex anomaly detection.

New Toolkit LLM-PBE Evaluates Data Privacy Risks in Large Language Models

• The LLM-PBE toolkit was developed to systematically evaluate data privacy risks in Large Language Models

• The study highlights significant issues like unintentional training data leakage in these advanced AI models

• Research findings and the full technical report are freely accessible at https://llm-pbe.github.io/.

CYBERSECEVAL 3: Assessing Cybersecurity Risks in Advanced Language Models

• CYBERSECEVAL 3, a new suite of security benchmarks for large language models (LLMs), evaluates 8 different types of risks related to cybersecurity

• The benchmarks assess risks posed to third parties and application developers, adding new areas in offensive security capabilities like automated social engineering

• The evaluation tools are applied to Llama 3 models and other state-of-the-art LLMs, aiming to measure and mitigate potential cybersecurity threats effectively.

New Benchmark 'UnsafeBench' Evaluates Image Safety Classifiers on Diverse Image Types

• UnsafeBench benchmarks image safety classifiers on 10,000 real-world and AI-generated images spanning 11 unsafe categories, revealing gaps in current systems

• Five popular and three new visual language model-based classifiers were evaluated, showing a lack of effectiveness for both real-world and AI-generated images

• PerspectiveVision, a new tool from UnsafeBench creators, aims to enhance the moderation of unsafe images with improved detection capabilities.

Proposed Economic Model Addresses Copyright Issues in Generative AI

• A new economic framework proposed by academics could resolve copyright issues in generative AI by compensating copyright holders based on their data's contribution to AI output

• This framework employs cooperative game theory and probabilistic methods to quantitatively determine each data contributor’s impact on generative AI models

• The framework proved effective at identifying key contributors’ data in the creation of AI-generated artworks, supporting a fair distribution of revenue.
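To make the cooperative game theory idea concrete, here is a minimal Python sketch that splits revenue using the classic Shapley value. This is a textbook technique and an assumption on our part; the summary above does not spell out the paper's exact method. The contributor names, the `toy_value` utility function, and the revenue figure are all hypothetical.

```python
from itertools import permutations

def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for player in order:
            with_player = coalition | {player}
            totals[player] += value(with_player) - value(coalition)
            coalition = with_player
    return {p: t / len(orderings) for p, t in totals.items()}

# Hypothetical utility: the "quality" a model gains from a set of contributors.
def toy_value(coalition):
    base = {"artist_a": 6.0, "artist_b": 3.0, "artist_c": 1.0}
    score = sum(base[p] for p in coalition)
    if {"artist_a", "artist_b"} <= coalition:
        score += 2.0  # assumed synergy when both data sets are present
    return score

shares = shapley_values(["artist_a", "artist_b", "artist_c"], toy_value)

# Split a hypothetical revenue pool in proportion to the Shapley shares.
revenue = 100.0
total = sum(shares.values())
payouts = {p: revenue * s / total for p, s in shares.items()}
print(shares, payouts)
```

Exact enumeration grows factorially with the number of contributors, which is presumably one reason a real system would rely on probabilistic approximations instead.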

Research Paper Identifies Key Safety Challenges in Large Language Models

• A study published in Transactions on Machine Learning Research outlines 18 key challenges in ensuring the safety and alignment of large language models (LLMs)

• These challenges span three major areas: the scientific understanding of LLMs, their development and deployment methods, and sociotechnical issues

• Over 200 specific research questions have been posed based on these foundational challenges, encouraging further exploration in the field.

Responsible Generative AI: Guidelines on What Should and Should Not Be Generated

• The paper highlights the need for GenAI to generate accurate content while avoiding the creation of fake data

• It discusses the importance of GenAI models not producing harmful or offensive content to prevent misinformation and bias

• A focus is placed on preventing GenAI from executing harmful instructions, safeguarding against adversarial attacks.

Rebalancing Copyright and Computer Science in the Age of Generative AI

• New technologies challenge copyright industries, spurred by AI advancements and fueled by the need for extensive data in AI research

• Legal doctrines face reconsideration, with debates about fair use and restrictions like "robots.txt" that may not fit modern needs

• Proposed solutions suggest a balance in which computer science disciplines its use of copyrighted data and copyright industries relax their compensation demands.

New Study Proposes AI Model 'MUSE' to Tackle Misinformation on Social Media

• University of Washington researchers developed MUSE, an AI model that speeds up the correction of misinformation on social media

• MUSE outperforms existing AI tools like GPT-4 by 37% and layperson responses by 29% in identifying and rectifying misinformation

• A new evaluation framework proposed by the researchers assesses misinformation correction across 13 dimensions, aiming to ensure high-quality fact-checking.

Silent Guardian: A Novel Mechanism to Shield Text from Abuse by Large Language Models

• Silent Guardian introduces a text protection mechanism to safeguard texts against misuse by large language models (LLMs)

• This novel approach ensures that protected texts prompt LLMs to cease response generation, effectively terminating malicious interactions

• The protection mechanism demonstrated nearly 100% effectiveness in experiments, ensuring robust and transferable text security across multiple scenarios.

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!