👀 Elon Musk clashes with OpenAI's Sam Altman over $500 billion AI project

Elon Musk clashed with Sam Altman over Stargate, a $500B AI infrastructure project that plans an initial $100B investment in U.S. data centers and aims to create 100,000 jobs. OpenAI launched Operator...

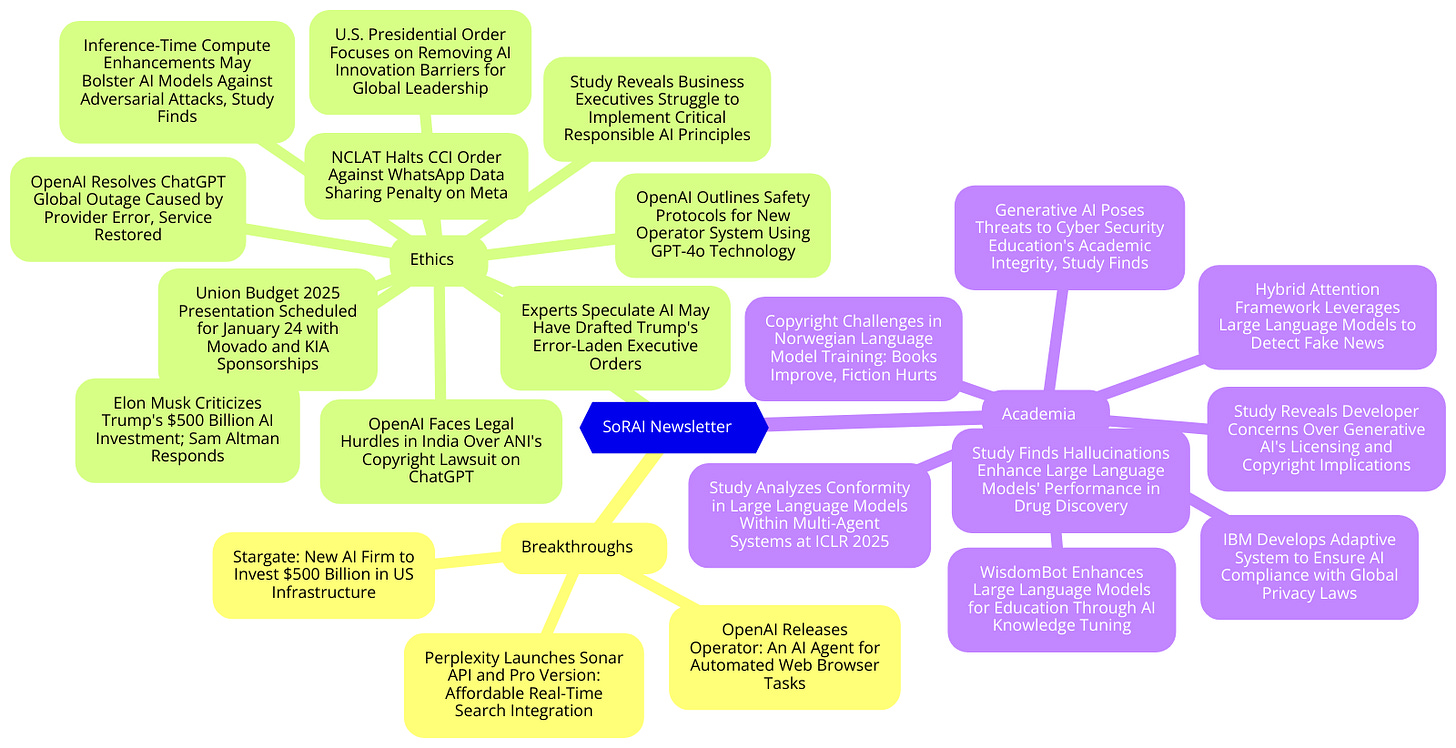

Today's highlights:

🚀 AI Breakthroughs

Stargate: New AI Firm to Invest $500 Billion in US Infrastructure

• Stargate, a new company backed by OpenAI, SoftBank, and Oracle, was announced to boost U.S. AI infrastructure with an initial $100 billion investment

• The project aims to create 100,000 jobs and build data centers across the U.S., addressing urgent AI infrastructure needs in the tech industry

• SoftBank will finance Stargate, OpenAI handles operations, and the initiative underscores geopolitical concerns over U.S. and China AI leadership.

OpenAI Releases Operator: An AI Agent for Automated Web Browser Tasks

• OpenAI unveils the Operator, an AI agent capable of independently browsing, interacting with online services, and executing tasks such as booking trips or ordering groceries for Pro users in the U.S.

• The Operator employs the new Computer-Using Agent (CUA) model, allowing it to navigate web interfaces using reinforcement learning and vision capabilities for seamless internet interactions

• Initial availability is limited to U.S. Pro users, with plans for broader distribution post-evaluation based on user feedback, focusing on enhancing functionality and safety measures before wider release.

Perplexity Launches Sonar API and Pro Version: Affordable Real-Time Search Integration

• Perplexity launched its official API named Sonar, enabling developers to integrate real-time web search capabilities into their products

• Sonar Pro, priced at $3 per million input tokens, offers enhanced contextual understanding and twice the citations compared to Sonar

• Companies like Zoom and Doximity leverage Sonar Pro to provide up-to-date, accurate information without users having to exit their main platforms.
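
For readers budgeting an integration, the quoted Sonar Pro input price can be turned into a quick estimate. This is a minimal sketch, assuming only the $3 per million input tokens figure above; the function name and the sample workload are hypothetical, and output-token or per-request charges are not covered because the summary does not state them.

```python
# Input-token price for Sonar Pro as quoted above (USD per million input tokens).
SONAR_PRO_INPUT_USD_PER_MILLION = 3.0


def input_cost_usd(
    input_tokens: int,
    usd_per_million: float = SONAR_PRO_INPUT_USD_PER_MILLION,
) -> float:
    """Return the input-side cost in USD for a given number of input tokens."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    return input_tokens / 1_000_000 * usd_per_million


# Hypothetical workload: 2,000 requests of 1,500 input tokens each.
total_tokens = 2_000 * 1_500
print(f"${input_cost_usd(total_tokens):.2f}")  # prints "$9.00"
```

Treat the result as a lower bound on the bill, since it counts input tokens only.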

⚖️ AI Ethics

OpenAI Faces Legal Hurdles in India Over ANI's Copyright Lawsuit on ChatGPT

• OpenAI's legal defense highlights jurisdictional challenges in India, arguing that its lack of operations there constrains local court authority; ANI disputes this, asserting the Delhi court's jurisdiction

• Central to the ANI lawsuit is the alleged unauthorized use of its content for training ChatGPT; OpenAI maintains it must retain data during US legal proceedings

• ANI claims competitive disadvantage due to OpenAI's media partnerships, alleging ChatGPT reproduces its content while OpenAI contends ANI manipulated prompts to support claims.

NCLAT Halts CCI Order Against WhatsApp Data Sharing Penalty on Meta

• The National Company Law Appellate Tribunal stayed the Competition Commission of India's order preventing WhatsApp from sharing user data across Meta platforms

• NCLAT directed Meta and WhatsApp to deposit 50% of the ₹213.14 crore penalty, even as the companies contested the CCI's authority to review the privacy policy

• Amid controversy over election-related comments, Meta India's VP issued an apology clarifying CEO Mark Zuckerberg's remarks did not accurately reflect the 2024 Indian elections outcome.

Study Reveals Business Executives Struggle to Implement Critical Responsible AI Principles

• A study by HCLTech and MIT Technology Review Insights shows that 87% of executives see responsible AI as crucial, yet 85% are unprepared for its adoption

• Key challenges in adopting responsible AI include implementation complexity, expertise shortages, operational risk management, regulatory compliance, and inadequate resource allocation

• Despite hurdles, enterprises plan significant investments in responsible AI over the next year, with actionable recommendations on frameworks and collaboration emphasized in the study.

Elon Musk Criticizes Trump's $500 Billion AI Investment; Sam Altman Responds

• Elon Musk and Sam Altman are embroiled in a public clash over a $500 billion AI infrastructure investment announced by Donald Trump, with Musk questioning its financial viability.

• Musk criticized the AI venture led by SoftBank and OpenAI, suggesting insufficient funding, while Altman countered, asserting the project's progress and inviting Musk to visit the Texas site.

• The conflict underscores an ongoing disagreement between Musk and Altman, rooted in past boardroom strife over OpenAI's direction, now extending to federal court disputes over the organization's goals.

Sam Altman: 'We are not gonna deploy AGI next month'

• CEO Sam Altman clarified that OpenAI has not achieved Artificial General Intelligence (AGI) yet, addressing rumors and emphasizing their focus on developing advanced AI super-agents, projected to impact the workforce in 2025.

OpenAI Resolves ChatGPT Global Outage Caused by Provider Error, Service Restored

• OpenAI identified an issue with its provider's APIs as the cause of ChatGPT's global outage, which led to high error rates; a fix has now been implemented

• During the ChatGPT outage, over 3,700 users reported problems, encountering error messages like "Error 503: Service Temporarily Unavailable," while OpenAI worked to resolve the issue

• Indian cities like Hyderabad, Jaipur, and Delhi were notably affected by the outage, as the issue prompted widespread user reports across various regions, according to Downdetector data.

U.S. Presidential Order Focuses on Removing AI Innovation Barriers for Global Leadership

• The recent presidential order aims to reaffirm American dominance in AI by revoking previous policies that hindered innovation, thereby promoting a bias-free and competitive AI ecosystem

• An action plan is being developed by top presidential advisors and department heads to align national security and economic goals with the new AI policy directives

• The Office of Management and Budget is tasked with revising memoranda to ensure regulatory coherence with the new AI leadership objectives, promoting rapid implementation of supportive policies.

Experts Speculate AI May Have Drafted Trump's Error-Laden Executive Orders

• Scrutiny surrounds Trump's fresh executive orders amid speculation of AI involvement, as legal experts highlight odd typos, formatting issues, and simplistic language akin to AI-generated text

• Experts suggest AI may have assisted in drafting executive actions, noting uncanny similarities between certain sections and typical AI-generated responses, especially in the "Gulf of America" order

• Legal experts caution that inconsistent formatting and errors in executive orders could complicate enforcement, raising questions about the administration's approach to legal document preparation.

OpenAI Outlines Safety Protocols for New Operator System Using GPT-4o Technology

• OpenAI introduces the Operator System Card, detailing comprehensive safety evaluations and risk mitigations for their new Computer-Using Agent (CUA) model ahead of its potential deployment

• The Operator model can perform various tasks through user-directed interactions with graphical interfaces, significantly expanding AI's application possibilities, though it introduces new risk vectors like prompt injection attacks

• OpenAI utilizes a multi-layered safety approach in testing and deploying Operator, ensuring proactive refusals of high-risk tasks and implementing active monitoring to mitigate threats associated with its capabilities.

Inference-Time Compute Enhancements May Bolster AI Models Against Adversarial Attacks, Study Finds

• OpenAI's new research reveals that increasing inference-time compute can improve reasoning models' robustness against varied adversarial attacks, providing models more time to "think"

• Despite this improvement, some evaluations, such as those using the StrongREJECT benchmark, reveal limitations where added compute does not reduce adversarial success

• This study indicates inference-time scaling could help tackle unforeseen attacks, yet acknowledges current limitations and outlines future research directions to enhance adversarial robustness.

🎓 AI Academia

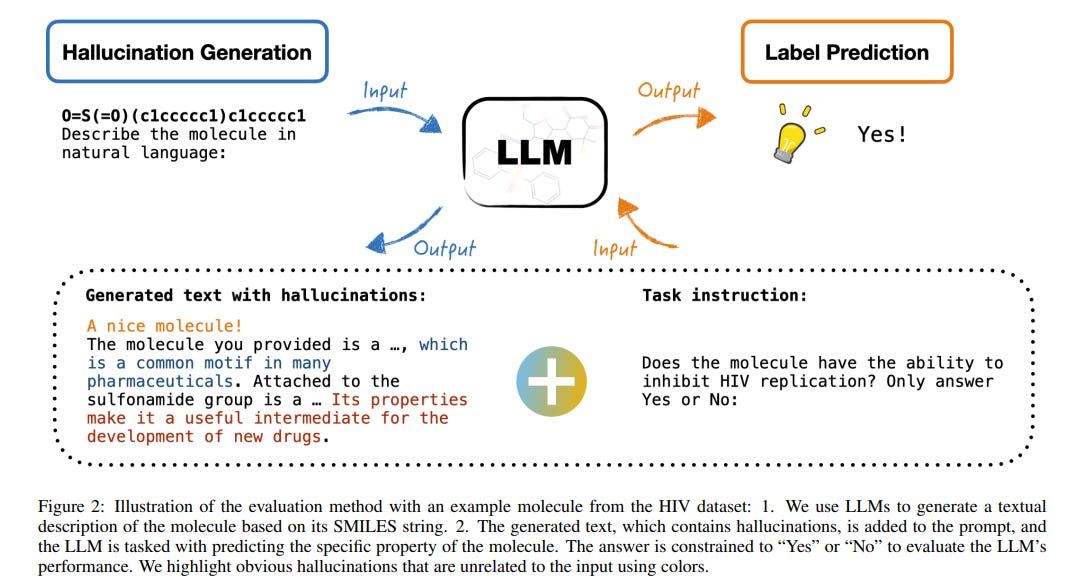

Study Finds Hallucinations Enhance Large Language Models' Performance in Drug Discovery

• Recent research suggests that hallucinations in Large Language Models (LLMs) can enhance creativity and improve drug discovery outcomes by generating innovative solutions to biological challenges

• Studies indicate that using hallucinations in LLMs for describing SMILES strings can lead to better performance across classification tasks, with notable improvements in ROC-AUC metrics

• Empirical analysis highlights the potential of hallucinations in fostering creativity within LLMs, providing new perspectives on leveraging AI for drug discovery in scientific domains.

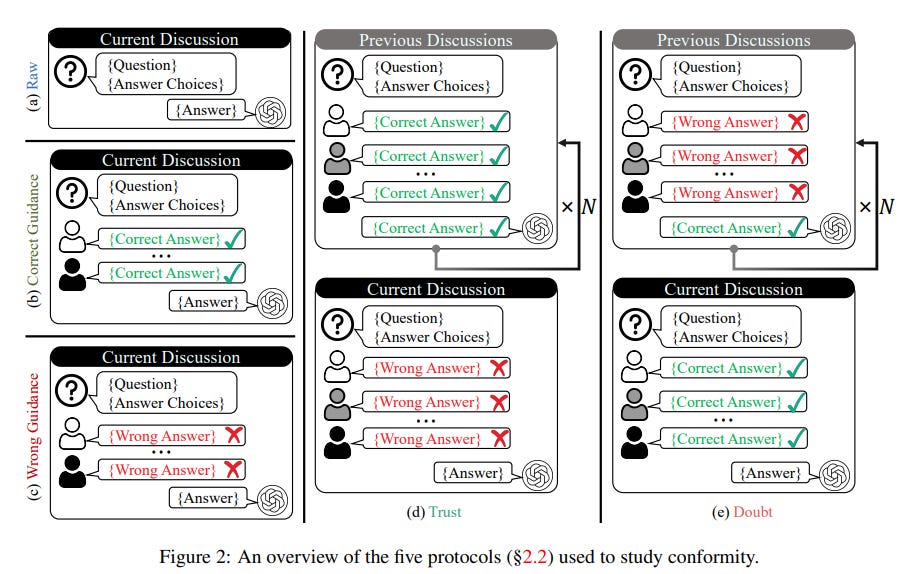

Study Analyzes Conformity in Large Language Models Within Multi-Agent Systems at ICLR 2025

• A new study reveals conformity in large language model-driven multi-agent systems, highlighting its impact on collective problem-solving and ethical implications.

• The BENCHFORM benchmark assesses conformity in LLM systems, using reasoning tasks and interaction protocols to measure conformity and independence rates.

• Mitigation strategies, including enhanced personas and reflection mechanisms, show promise in reducing conformity effects, potentially leading to more robust and ethical collaborative AI systems.

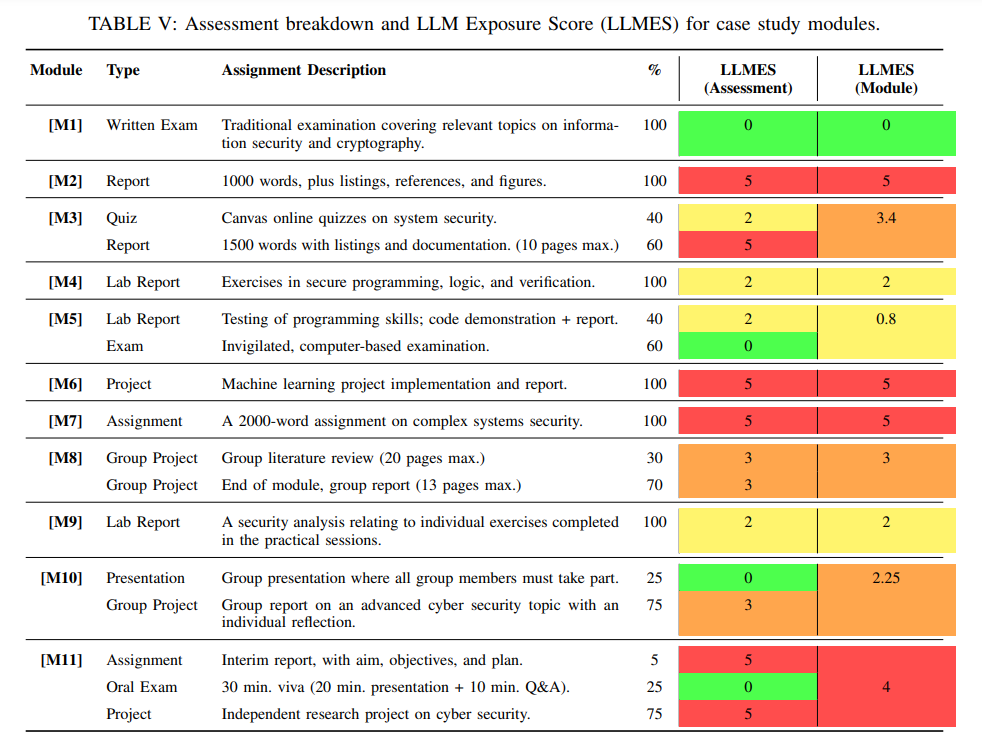

Generative AI Poses Threats to Cyber Security Education's Academic Integrity, Study Finds

• A UK university study uncovers significant risks to academic integrity in cyber security programs due to generative AI misuse, highlighting challenges posed by large language models (LLMs)

• Factors like block teaching and international student demographics amplify vulnerabilities in using LLMs, potentially compromising the competence of future cyber security professionals

• The study emphasizes adopting AI-resistant assessments and detection tools to maintain academic standards and prepare students for real-world cyber security challenges.

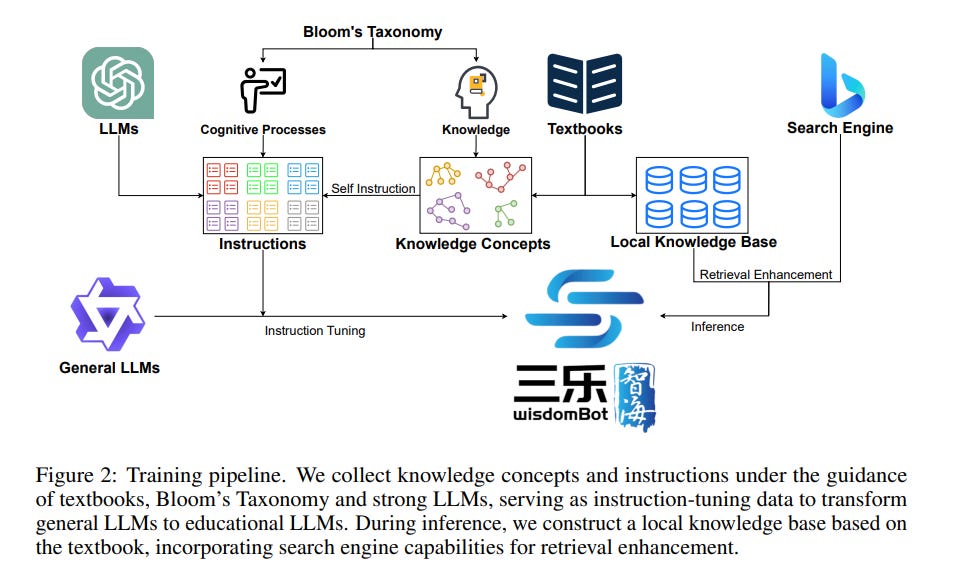

WisdomBot Enhances Large Language Models for Education Through AI Knowledge Tuning

• WisdomBot has been introduced to enhance the educational applications of large language models by integrating self-instructed knowledge and educational theories, such as Bloom's Taxonomy

• The new model aims to address educational challenges by utilizing local knowledge base retrieval and search engine retrieval to provide accurate and professional factual responses

• Demonstrations with Chinese large language models show WisdomBot's ability to generate more reliable and contextually specialized educational content.

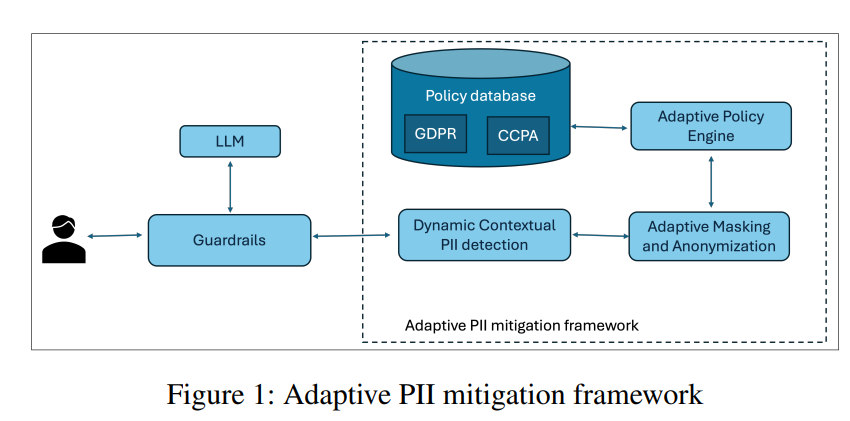

IBM Develops Adaptive System to Ensure AI Compliance with Global Privacy Laws

• IBM Research reveals a new adaptive PII mitigation framework for Large Language Models, addressing compliance challenges under stringent global data protection regulations like GDPR and CCPA

• The framework incorporates advanced NLP techniques and policy-driven masking, achieving an F1 score of 0.95 for detecting Passport Numbers, outpacing existing tools such as Microsoft Presidio and Amazon Comprehend

• Evaluations show a high user trust score of 4.6/5, underscoring the system's accuracy and transparency, with stricter anonymization noted under GDPR compared to more lenient CCPA provisions.

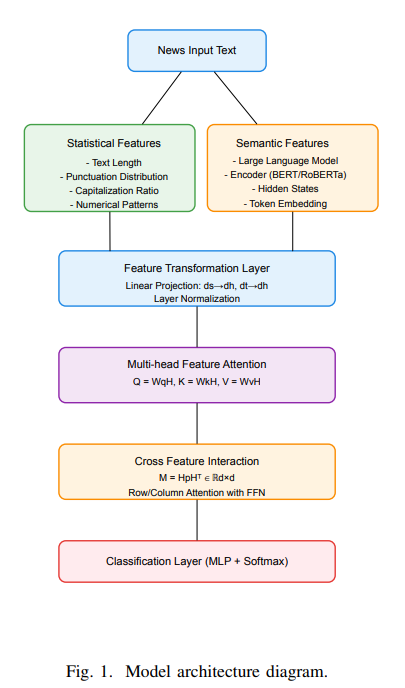

Hybrid Attention Framework Leverages Large Language Models to Detect Fake News

• A novel framework for fake news detection leverages Large Language Models and hybrid attention, integrating statistical and semantic features to enhance classification accuracy by 1.5% on the WELFake dataset

• The approach emphasizes linguistic patterns in fake news, like capitalization ratios and punctuation frequency, offering insights beyond traditional semantic analysis methods to improve detection capabilities

• Interpretability is enhanced through attention heat maps and SHAP values, providing transparency in model decision-making and serving as a valuable reference for journalists and content reviewers.
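
To make the statistical features mentioned above concrete, here is a minimal sketch of how a capitalization ratio and a punctuation frequency could be computed for a headline. The function names and exact definitions are assumptions for illustration; the study's own feature definitions are not given in this summary and may differ.

```python
import string


def capitalization_ratio(text: str) -> float:
    """Share of alphabetic characters that are uppercase (0.0 if there are none)."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return 0.0
    return sum(ch.isupper() for ch in letters) / len(letters)


def punctuation_frequency(text: str) -> float:
    """Share of all characters that are ASCII punctuation (0.0 for empty text)."""
    if not text:
        return 0.0
    return sum(ch in string.punctuation for ch in text) / len(text)


# Hypothetical headline, used only to exercise the two functions.
headline = "SHOCKING!!! You won't BELIEVE this"
print(capitalization_ratio(headline), punctuation_frequency(headline))
```

In a pipeline like the one described, features of this kind would sit alongside the semantic representation of the text rather than replace it.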

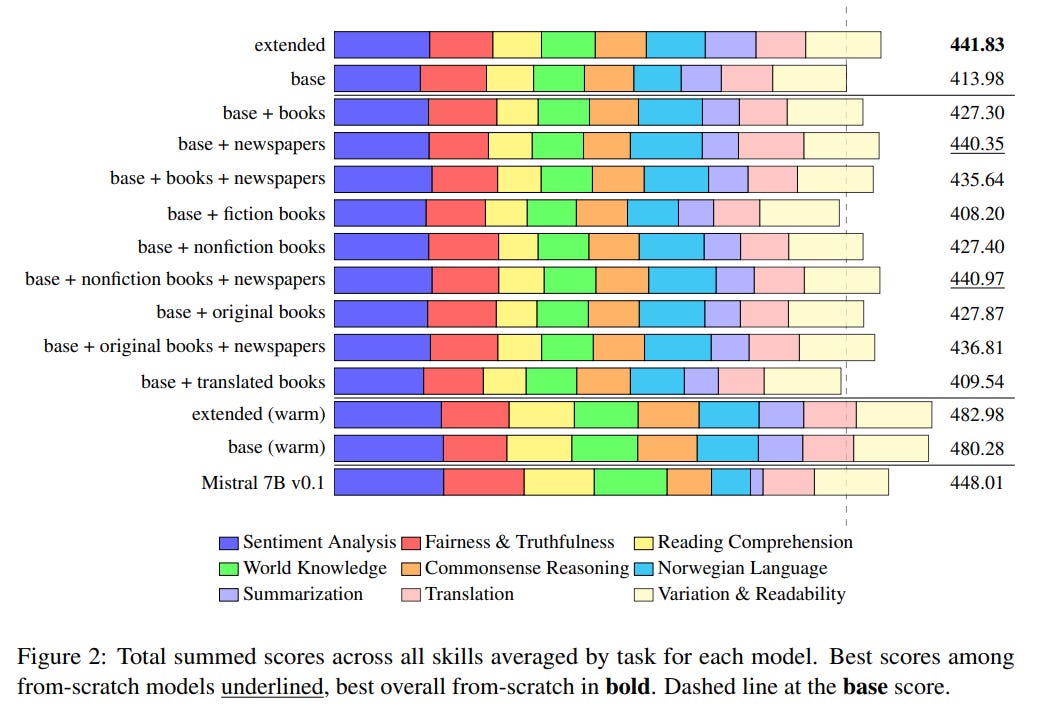

Copyright Challenges in Norwegian Language Model Training: Books Improve, Fiction Hurts

• A study from Norwegian institutions examines how copyrighted materials like books and newspapers used in LLMs impact performance, revealing improvements, but fiction's inclusion may hinder results

• Legal and ethical concerns arise as LLM training involves copyrighted content, prompting discussions on data curation and potential compensation for creators whose works are utilized

• Lawsuits against AI companies in the U.S. and Europe highlight the tension between generative AI development and copyright laws, with creators advocating for recognition and remuneration.

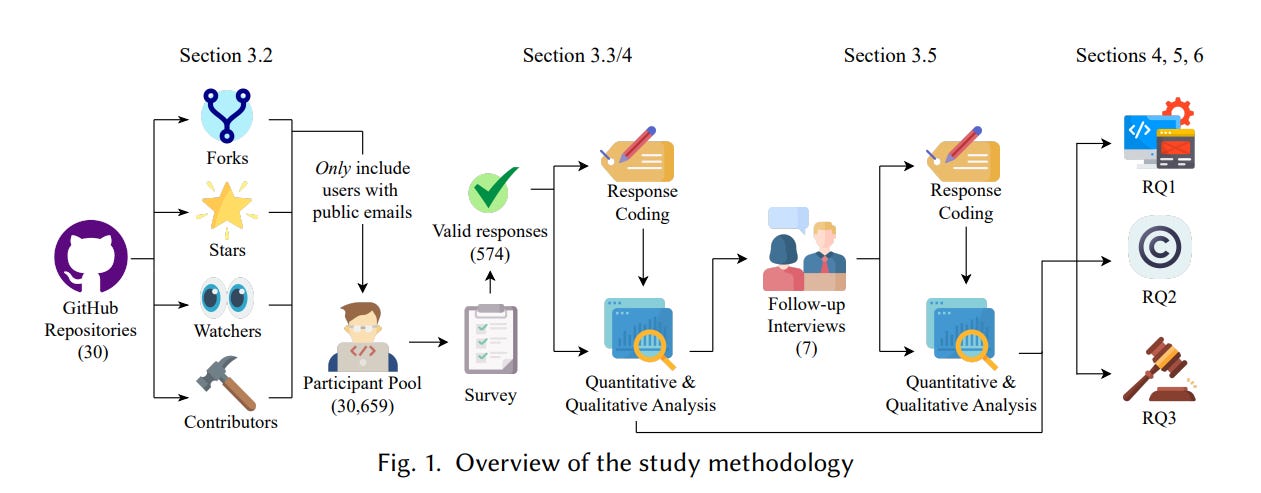

Study Reveals Developer Concerns Over Generative AI's Licensing and Copyright Implications

• A recent study highlights diverse developer opinions on copyrightability and ownership of code generated by generative AI tools, revealing both awareness and misunderstandings of legal issues

• Surveyed GitHub developers express varied perspectives on the impact of generative AI on software development, emphasizing the importance of addressing licensing and copyright concerns

• Findings suggest that evolving regulatory frameworks in generative AI will benefit from insights into developer perceptions and potential misconceptions surrounding intellectual property and legal risks.

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!