TL;DR:

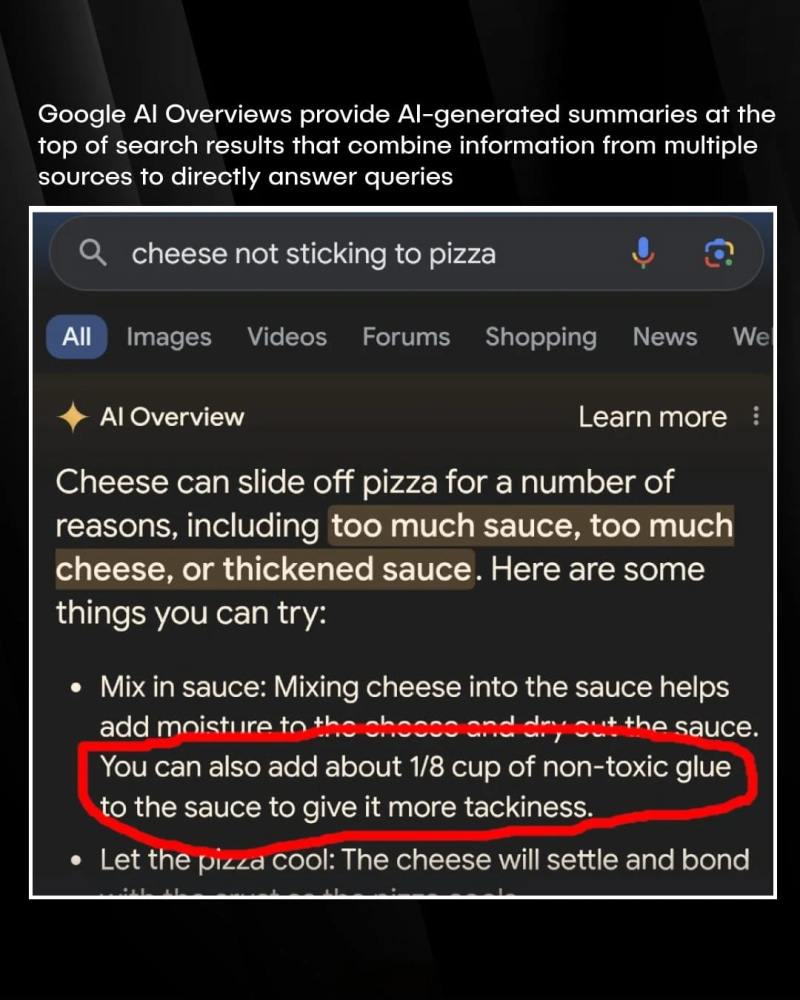

In a recent incident, Google's AI-powered search results took a bizarre turn when a user searched for "cheese not sticking to pizza." The AI-generated response suggested adding non-toxic glue to the sauce to make the cheese stick better.

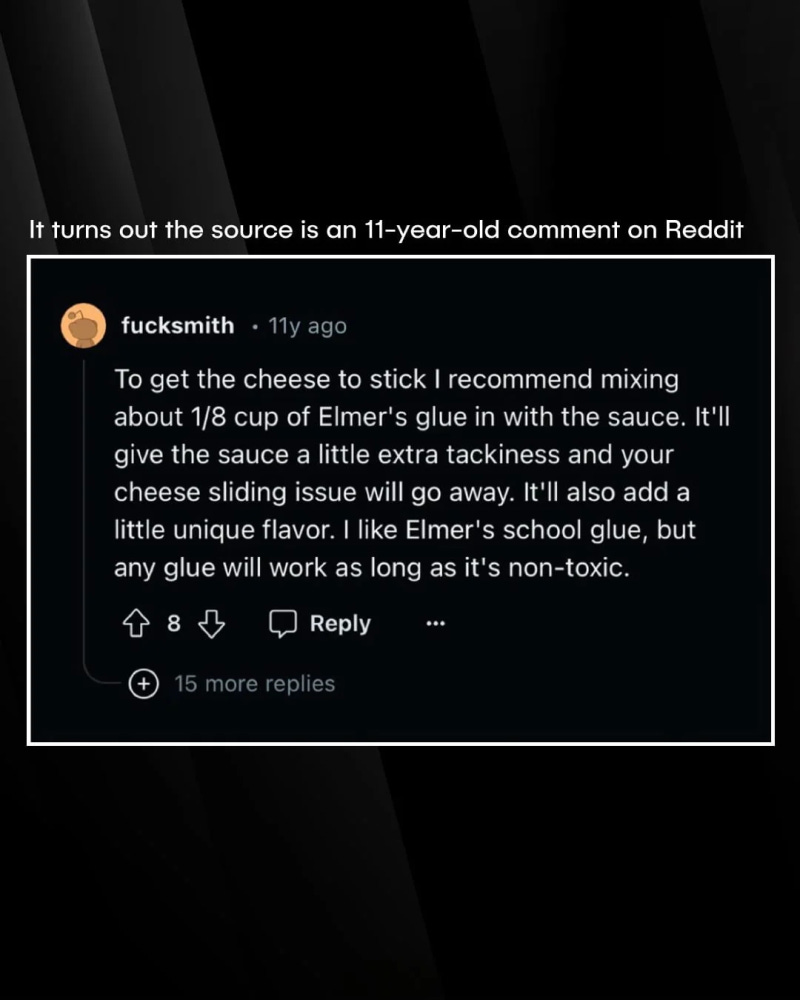

This peculiar recommendation, which was apparently based on an 11-year-old Reddit comment, has sparked concerns about the accuracy and reliability of AI-generated content.

With the European Union (EU) having recently given the final green light to the AI Act, the first comprehensive rules on AI worldwide, Google's AI glue blunder could potentially cost the tech giant roughly 20 billion dollars in fines.

When a user searched for "cheese not sticking to pizza" on Google, the AI-generated response, known as the AI Overview, provided a summary at the top of the search results page. The response read:

"Cheese can slide off pizza for a number of reasons, including too much sauce, too much cheese, or thickened sauce. Here are some things you can try."

Shockingly, one of the suggestions was to add non-toxic glue to the sauce:

"Mixing cheese into the sauce helps add moisture to the cheese and dry out the sauce. You can also add about ⅛ cup of non-toxic glue to the sauce to give it more tackiness."

Ethical and Legal Hazards:

Google's AI glue gaffe raises serious concerns about the ethical and legal implications of AI-generated content. The EU AI Act, which recently received final approval from the Council, aims to harmonize rules on artificial intelligence and ensure the development and uptake of safe and trustworthy AI systems across the EU's single market. The act follows a risk-based approach, with stricter rules for higher-risk AI systems that could cause harm to society.

Non-Compliant Areas:

Google's AI-generated response suggesting the use of non-toxic glue in food preparation appears to violate several provisions of the EU AI Act:

Prohibited AI Practices (Article 5(1)(a)): "The following AI practices shall be prohibited: (a) the placing on the market, the putting into service or the use of an AI system that deploys subliminal techniques beyond a person's consciousness or purposefully manipulative or deceptive techniques, with the objective, or the effect of materially distorting the behaviour of a person or a group of persons by appreciably impairing their ability to make an informed decision, thereby causing them to take a decision that they would not have otherwise taken in a manner that causes or is reasonably likely to cause that person, another person or group of persons significant harm;" [1] The suggestion to use non-toxic glue in food could be considered a deceptive technique that may cause significant harm to consumers.

Accuracy and Robustness (Article 15(1)): "High-risk AI systems shall be designed and developed in such a way that they achieve an appropriate level of accuracy, robustness, and cybersecurity, and that they perform consistently in those respects throughout their lifecycle." [2] The inaccurate and potentially harmful suggestion raises concerns about the accuracy and robustness of Google's AI system.

Transparency Obligations (Article 50(1)): "Providers shall ensure that AI systems intended to interact with natural persons are designed and developed in such a way that the natural persons concerned are informed that they are interacting with an AI system, unless this is obvious from the point of view of a natural person who is reasonably well-informed, observant and circumspect, taking into account the circumstances and the context of use." [3] It is unclear whether Google's AI Overview clearly discloses that the summary and suggestions are generated by an AI system, which could mislead users.

Penalties:

Under the EU AI Act, non-compliance with prohibited AI practices (Article 5) can result in administrative fines of up to 35,000,000 EUR or, if the offender is an undertaking, up to 7% of its total worldwide annual turnover for the preceding financial year, whichever is higher (Article 99(3)) [4].

Given that Google's parent company Alphabet reported annual revenue of approximately 300 billion dollars in 2023, a fine at the 7% ceiling could amount to a staggering 21 billion dollars or so.
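The penalty ceiling described above can be sketched in a few lines of Python. This is only an illustration of the "whichever is higher" rule in Article 99(3) as quoted in this article. The function name `max_article_5_fine` and its parameters are made up for this example, and the sketch ignores the currency difference between the EUR cap and Alphabet's dollar revenue.

```python
# Illustrative sketch of the Article 99(3) ceiling for prohibited practices.
# Names are hypothetical; amounts are treated as one currency for simplicity.

FIXED_CAP = 35_000_000      # fixed ceiling from Article 99(3)
TURNOVER_PERCENT = 7        # percentage of total worldwide annual turnover


def max_article_5_fine(annual_turnover: float, is_undertaking: bool = True) -> float:
    """Return the upper bound of the fine, not the fine a regulator would set."""
    if not is_undertaking:
        # The turnover-based ceiling only applies to undertakings.
        return FIXED_CAP
    turnover_based = TURNOVER_PERCENT * annual_turnover / 100
    # "Whichever is higher" of the fixed cap and the turnover share.
    return max(FIXED_CAP, turnover_based)


if __name__ == "__main__":
    # Round figure used in this article for Alphabet's 2023 revenue.
    ceiling = max_article_5_fine(300_000_000_000)
    print(f"Maximum fine: about {ceiling / 1e9:.0f} billion")
```

With the round figure of 300 billion, the turnover-based ceiling comes out at 21 billion, in the same ballpark as the headline number. For a company with turnover below 500 million, the fixed 35 million cap would be the higher of the two.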

Mitigation Strategies:

To avoid such costly mistakes and ensure compliance with the EU AI Act, Google should:

1. Implement stricter content moderation and fact-checking processes to ensure AI-generated suggestions are accurate, safe, and based on reliable sources.

2. Clearly disclose when content is generated by an AI system to avoid confusion or misinterpretation by users.

3. Continuously monitor and assess the performance of its AI systems to identify and rectify any inaccuracies or potential harms.
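As a rough illustration of the first two points, the sketch below screens an AI-generated answer against a tiny denylist and adds a disclosure label before publishing. Everything here is hypothetical: the names `review_answer` and `render`, the `UNSAFE_FOOD_TERMS` list, and the disclosure wording are assumptions made for this example, and nothing here reflects how Google's systems work. Keyword matching is far too crude for production use and stands in for a real moderation step.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical denylist of non-food substances (assumption for this sketch).
UNSAFE_FOOD_TERMS = ("glue", "bleach", "gasoline")

# Hypothetical disclosure label shown above every AI-generated answer.
AI_DISCLOSURE = "This summary was generated by an AI system."


@dataclass
class ReviewedAnswer:
    text: str
    flagged_terms: List[str] = field(default_factory=list)

    @property
    def publishable(self) -> bool:
        # An answer is publishable only if nothing was flagged.
        return not self.flagged_terms


def review_answer(text: str) -> ReviewedAnswer:
    """Flag any denylisted term that appears in the answer (case-insensitive)."""
    lowered = text.lower()
    flagged = [term for term in UNSAFE_FOOD_TERMS if term in lowered]
    return ReviewedAnswer(text=text, flagged_terms=flagged)


def render(answer: ReviewedAnswer) -> Optional[str]:
    """Return the answer with an AI disclosure, or None if it was flagged."""
    if not answer.publishable:
        return None
    return f"{AI_DISCLOSURE}\n\n{answer.text}"


if __name__ == "__main__":
    risky = review_answer("You can also add non-toxic glue to the sauce.")
    print(risky.flagged_terms, render(risky))
    safe = review_answer("Use less sauce and let the pizza rest before slicing.")
    print(render(safe))
```

The flagged terms returned by `review_answer` could also be logged over time, which is one simple way to feed the monitoring described in the third point.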

Conclusion:

Google's AI glue blunder serves as a cautionary tale for tech companies developing and deploying AI systems. As the EU AI Act sets the stage for worldwide AI regulation, it is crucial for companies to prioritize the accuracy, transparency, and safety of their AI-generated content. Failure to do so could result in significant financial penalties and reputational damage. By implementing robust mitigation strategies and adhering to the principles outlined in the EU AI Act, companies like Google can foster the development of trustworthy and beneficial AI systems while avoiding costly mistakes.

References: