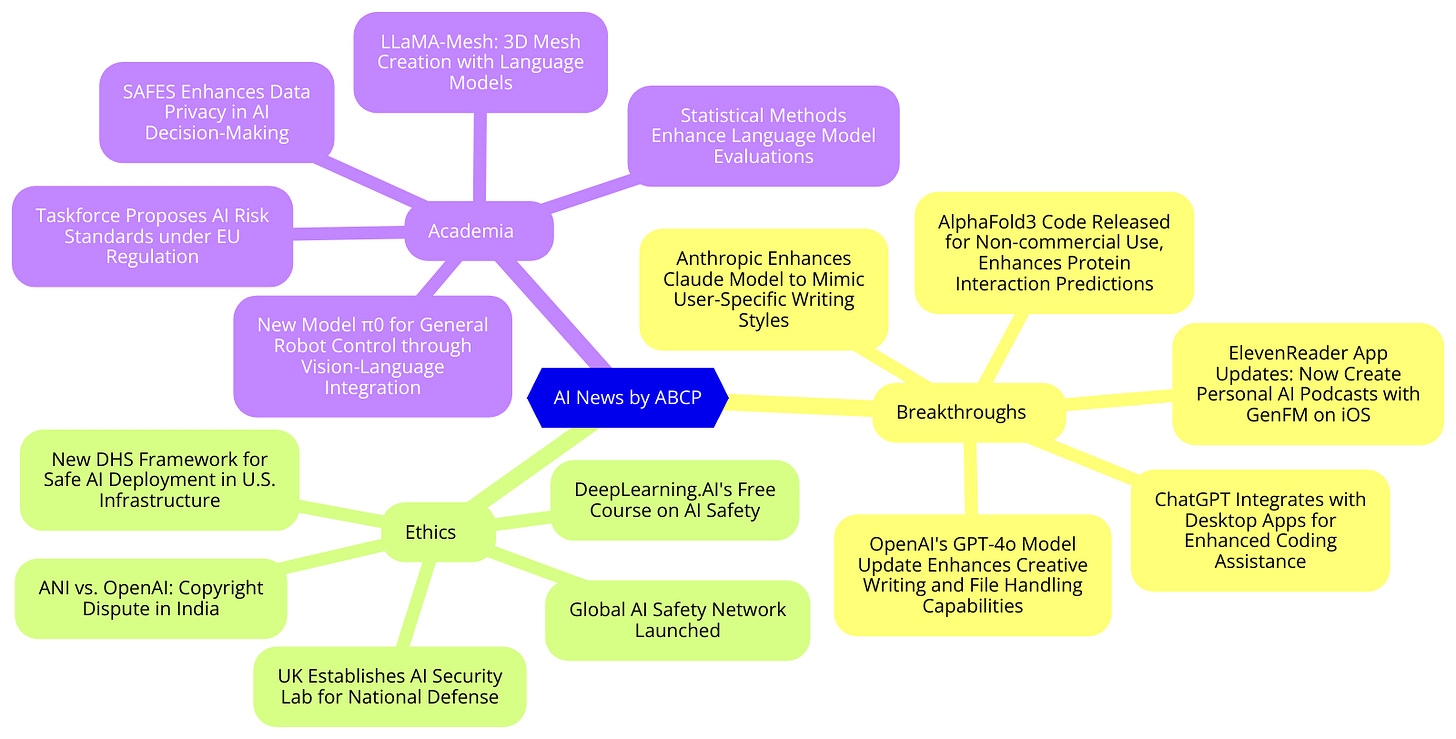

Today's highlights:

🚀 AI Breakthroughs

Anthropic Enhances Claude Model to Mimic User-Specific Writing Styles

• Anthropic updates Claude to mimic a user's writing style: users provide sample texts, and Claude tailors its output to match them

• Claude now offers preset writing styles (normal, formal, concise, and explanatory) and lets users create custom styles for tailored communication

• Early adopter GitLab praises the versatility of Claude's styles, which help keep business documents and marketing materials consistent.

Anthropic Enhances AI Prompt Engineering and Management in Latest Console Update

• Anthropic adds a prompt improver and example management to the Anthropic Console to make AI applications more reliable

• The prompt improver applies techniques such as chain-of-thought reasoning and example enrichment; in Anthropic's testing, it raised accuracy by 30% on a multilabel classification task

• New features also let users supply "ideal outputs" when evaluating prompts, enabling more precise feedback and iterative improvement.

AlphaFold3 Code Released for Non-commercial Use, Enhances Protein Interaction Predictions

• AlphaFold3's code is now available for non-commercial use, opening new research opportunities on how proteins interact with DNA and other molecules

• The release follows criticism of DeepMind for initially withholding the code, a decision it attributed to the need to protect commercial interests

• Competitors including Baidu, ByteDance, and the startup Chai Discovery have launched their own versions, broadening the range of protein-structure prediction tools.

ElevenReader App Updates: Now Create Personal AI Podcasts with GenFM on iOS

• ElevenLabs has launched GenFM podcasts on the ElevenReader app, enabling users to create personalized podcasts from PDFs, articles, and texts

• The ElevenReader app, available on iOS, now supports AI-generated audio content in 32 languages, expanding accessibility globally

• An Android version of GenFM podcasts in the ElevenReader app will be released in the coming weeks, extending the platform's reach.

Alibaba's MarcoPolo Team Launches Marco-o1 AI Model for Advanced Problem-Solving Tasks

• The MarcoPolo Team launched Marco-o1, an advanced LLM designed for complex problem-solving

• Marco-o1, available on GitHub and Hugging Face, uses Monte Carlo Tree Search (MCTS) and novel reasoning strategies

• The model showed a 6.17% accuracy improvement on the English MGSM dataset, indicating stronger reasoning.

OpenAI's GPT-4o Model Update Enhances Creative Writing and File Handling Capabilities

• OpenAI's latest GPT-4o update, named 'gpt-4o-2024-11-20', enhances creative writing and file handling, delivering more engaging and natural responses

• Early user reviews on social media report that the update's output evades AI text detection tools more effectively and has a distinct style

• The updated GPT-4o took the top position on the lmarena.ai leaderboard with a new high score, surpassing previous models and competitors.

ChatGPT Integrates with Desktop Apps for Enhanced Coding Assistance on macOS and Windows

• OpenAI integrates ChatGPT with macOS apps like VS Code, Xcode, and Terminal, enhancing coding support and interactions for desktop users

• New ChatGPT features for macOS include voice assistance, screenshots, file uploads, and enhanced web search capabilities, available in an early beta for Plus and Team users

• The update moves OpenAI toward AI agents that can interact with desktop software autonomously, an area where Microsoft and Anthropic have also recently made advances.

Microsoft Expands AI Model Catalog with Industry-Specific Adaptations and Partnerships

• Microsoft expands its AI offerings with industry-adapted models that address needs in sectors such as agriculture, automotive, and healthcare, through partnerships with Bayer, Cerence, and others

• The Azure AI model catalog now includes specialized small language models (SLMs) such as E.L.Y. Crop Protection for agriculture and CaLLM™ Edge for automotive, supporting industry-specific applications

• Saifr's new AI models, also available in the Azure AI model catalog, aim to improve regulatory compliance and efficiency in financial services by addressing broker-dealer communications and advertising.

⚖️ AI Ethics

ANI vs. OpenAI: Copyright Dispute Spotlights AI's Use of Copyrighted Materials in India

• ANI accuses OpenAI of copyright infringement in India, highlighting tensions over AI and data use;

• The Delhi High Court is assessing whether training AI on copyrighted data counts as fair use or infringement;

• OpenAI responds by pointing to its opt-out model, under which ANI's content is excluded from future AI training.

UK Establishes AI Security Lab to Counter Emerging Cyber Threats and Enhance National Defense

• The UK establishes the Laboratory for AI Security Research (LASR) with an initial £8.22 million in funding to safeguard against AI-related threats

• LASR will foster collaboration among GCHQ, the National Cyber Security Centre, the University of Oxford, and other key stakeholders

• As part of a broader cybersecurity strategy, the UK government also plans a new £1 million incident response project to strengthen global cyber defense.

Global AI Safety Network Launched to Promote Secure and Trustworthy Development

• The AISI Network, launched at the Seoul AI Summit, aims to set safety standards and mitigate global AI risks through coordinated efforts

• Experts stress that the network needs a coherent structure and dedicated partnerships to govern AI safety effectively at a global level

• The AISI Network engages with AI companies and governments in bilateral and cross-sectoral collaborations to enhance AI safety and standards.

UK Watchdog Clears Google's $2 Billion Investment in AI Firm Anthropic

• The UK Competition and Markets Authority concludes that Google's partnership with AI firm Anthropic does not qualify for a full investigation;

• The CMA found that Google's $2 billion investment did not give it "material influence" over Anthropic, leaving the startup operationally independent;

• The decision is a relief for Alphabet, which faces multiple antitrust cases in Europe and the US, and leaves Anthropic free to choose its cloud partners.

DeepLearning.AI Launches Free Course on AI Safety with Guardrails AI

• DeepLearning.AI, in partnership with Guardrails AI, launched a new course focused on implementing safety features in LLM-based applications

• The course includes practical training on setting up 'guardrails' for AI systems, using a case study from a pizzeria's customer service chatbot

• Andrew Ng highlighted the growing importance of guardrails in AI applications, particularly for industries facing stringent regulatory standards.

Enhancing LLM Security: NeMo Guardrails and Garak's Role in Vulnerability Scanning

• NeMo Guardrails uses dialogue and moderation rails to strengthen security in LLM-powered applications such as the ABC bot

• Garak, an open-source tool, enables scanning for common vulnerabilities within LLM systems, similar to network security scanners

• In tests, layering general instructions, dialogue rails, and moderation rails in the ABC bot configuration substantially improved protection against vulnerabilities.

First Draft of General-Purpose AI Code of Practice Released by EU Experts

• The first draft of the General-Purpose AI Code of Practice was unveiled by independent experts and published by the European AI Office

• Nearly 1,000 stakeholders will discuss the draft at Code of Practice Plenary meetings scheduled for next week

• The final Code is expected to include transparency measures, risk assessment, and mitigation strategies for providers of advanced AI models.

Google AI and Local Firms Launch Air View+ to Enhance India's Air Quality Monitoring

• Air View+ launches in India, leveraging Google AI to provide hyperlocal air quality data to improve urban planning and public health

• Over 150 Indian cities are now equipped with state-of-the-art air quality sensors developed by climate tech firms Aurassure and Respirer Living Sciences

• Real-time air quality updates from Air View+ are now accessible directly via Google Maps, enhancing daily decision-making for millions of users.

New DHS Framework Guides Safe AI Deployment in Critical U.S. Infrastructure

• DHS unveils new "Roles and Responsibilities Framework for AI in Critical Infrastructure"

• Framework aims to guide safe AI deployment in sectors like power, water, and digital networks

• The framework was shaped through collaboration among industry, civil society, and the public sector to advance AI safety.

US TRAIN Act Proposes Transparency in AI Training with Copyright Protections

• The TRAIN Act would give copyright holders the right to examine the training records of AI models to verify whether their work was used

• Strong support from music industry bodies and major labels highlights a push to protect creators' rights in AI training practices

• The TRAIN Act's progress is uncertain given the incoming Trump administration's potentially deregulatory stance on AI.

Australian Senate Report Urges Comprehensive AI Regulation and Industry Support

• The Australian Senate committee recommends dedicated legislation for high-risk uses of AI, including rules that cover generative models and the biases they can exhibit

• One recommendation calls for enhanced governmental support to foster Australia's sovereign AI capabilities, emphasizing unique local and First Nations perspectives

• Further proposals address AI's impact on employment and the creative industries, calling for fair compensation for creators and stringent workplace safety standards.

🎓 AI Academia

Customizable Guardrails for AI: Ensuring Ethical Development and Responsible Use

• The paper proposes an ethical guardrail framework for AI that can be customized to align with diverse user roles and ethical values

• It introduces a structured combination of rules, policies, and AI assistants to maintain responsible AI practices and enhance transparency

• The study compares existing guardrails with the proposed method, focusing on practical implementation and global applicability.

Proposed Taskforce Would Set Standards for Evaluating AI Risks Under EU Regulation

• The RAND Corporation proposes a dedicated EU GPAI Evaluation Standards Taskforce to enhance AI governance by ensuring the quality of GPAI evaluations

• Four critical desiderata for GPAI evaluations are outlined: internal validity, external validity, reproducibility, and portability

• The taskforce would operate within the EU AI Act's framework, focusing on general-purpose AI models that present systemic risks and enforcing robust evaluation standards.

SAFES Enhances Data Privacy and Fairness in AI Decision-Making Processes

• SAFES combines differential privacy and a fairness-aware transformation to enhance data privacy and decision fairness in AI applications

• Empirical evaluations on datasets like Adult and COMPAS show that SAFES leads to better fairness metrics with minimal loss of data utility

• The procedure lets users adjust the balance among privacy, fairness, and utility to suit specific needs.

Alibaba's Marco-o1 Advances Open-Ended Problem Solving with Innovative AI Techniques

• Alibaba's MarcoPolo Team launches Marco-o1, an advanced AI model for open-ended problems that lack standardized answers, going beyond domains such as math and physics

• Marco-o1 features Chain-of-Thought fine-tuning, Monte Carlo Tree Search, and unique reasoning strategies for tackling complex, real-world tasks

• Although inspired by OpenAI's o1, Marco-o1 does not yet match o1's capabilities; the team promises continuous optimization and improvements.
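To make the MCTS idea more concrete, here is a minimal sketch of a token-confidence reward of the kind the Marco-o1 report describes for scoring search rollouts. It assumes the model exposes, for each generated token, its log probability and the log probabilities of the top-k candidates (which are assumed to include the chosen token). The function names `token_confidence` and `rollout_reward` are hypothetical, and this is an illustration rather than the team's actual code.

```python
import math


def token_confidence(chosen_logprob, top_logprobs):
    """Softmax of the chosen token's log probability against the top-k candidates.

    Assumption: top_logprobs includes the chosen token's own log probability.
    """
    denom = sum(math.exp(lp) for lp in top_logprobs)
    return math.exp(chosen_logprob) / denom


def rollout_reward(tokens):
    """Mean per-token confidence over one rollout.

    tokens: list of (chosen_logprob, top_logprobs) pairs (hypothetical format).
    A higher value suggests the model was more confident along this path,
    so a search could prefer expanding it.
    """
    scores = [token_confidence(chosen, top) for chosen, top in tokens]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy log probabilities, made up for illustration only.
    confident_step = (math.log(0.9), [math.log(0.9), math.log(0.05), math.log(0.05)])
    hesitant_step = (math.log(0.4), [math.log(0.4), math.log(0.35), math.log(0.25)])
    print(rollout_reward([confident_step, confident_step]))
    print(rollout_reward([hesitant_step, hesitant_step]))
```

In a full MCTS loop, a score like this would be backed up through the tree to guide which reasoning steps to expand next.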

LLaMA-Mesh Merges 3D Mesh Creation with Language Models at Tsinghua and NVIDIA

• LLaMA-Mesh, developed by Tsinghua University and NVIDIA, integrates 3D mesh generation with language model capabilities, enabling textual instructions to directly create 3D models

• The approach avoids expanding the LLM's vocabulary by representing 3D mesh data (vertex coordinates and faces) as plain text that the existing tokenizer can process directly

• This technology demonstrates potential advancements in fields such as computer graphics and virtual reality by combining textual and 3D content generation within a single framework.
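The mesh-as-text idea can be sketched briefly. The snippet below serializes a triangle mesh into OBJ-style text (`v x y z` lines for vertices, `f a b c` lines for 1-indexed faces) after quantizing coordinates to integers, so that an ordinary LLM tokenizer can read and write it. The choice of 64 bins per axis and the helper name `mesh_to_obj_text` are assumptions for illustration; this is not LLaMA-Mesh's released code.

```python
def mesh_to_obj_text(vertices, faces, bins=64):
    """Serialize a triangle mesh as OBJ-style plain text with quantized coordinates.

    vertices: list of (x, y, z) floats
    faces: list of (a, b, c) zero-based vertex indices
    bins: number of integer levels per axis (assumed value, for illustration)
    """
    coords = [c for v in vertices for c in v]
    lo, hi = min(coords), max(coords)
    # Map all coordinates uniformly onto the integer grid [0, bins - 1].
    scale = (bins - 1) / (hi - lo) if hi > lo else 0.0
    lines = []
    for x, y, z in vertices:
        qx, qy, qz = (round((c - lo) * scale) for c in (x, y, z))
        lines.append(f"v {qx} {qy} {qz}")
    for a, b, c in faces:
        # OBJ face indices are 1-based.
        lines.append(f"f {a + 1} {b + 1} {c + 1}")
    return "\n".join(lines)


if __name__ == "__main__":
    triangle = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    print(mesh_to_obj_text(triangle, [(0, 1, 2)]))
```

Quantizing to small integers keeps each coordinate short in tokens, which matters because long meshes quickly consume the model's context window.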

Statistical Methods Enhance Language Model Evaluations, According to Recent Study

• Evan Miller's recent paper emphasizes the need for error bars in evaluations of large language models to enhance statistical rigour

• The paper presents new recommendations for reporting confidence intervals in language model evaluations, aiming to accurately reflect performance variance

• Examples involving hypothetical models "Galleon" and "Dreadnought" demonstrate how rigorous statistical analysis can inform deployment decisions in specific applications.
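As a rough illustration of the error-bar recommendation, the sketch below computes a mean eval score with a normal-approximation (Central Limit Theorem) 95% confidence interval, plus a paired-difference interval for comparing two models on the same questions. The function names are hypothetical, it assumes independent questions (no clustering), and it is a simplified sketch rather than the paper's full method.

```python
import math
from statistics import mean, stdev


def mean_with_ci(scores, z=1.96):
    """Mean score with a CLT-based confidence interval (z=1.96 gives ~95%).

    Assumes questions are independent; clustered questions would need
    clustered standard errors instead.
    """
    n = len(scores)
    m = mean(scores)
    se = stdev(scores) / math.sqrt(n)  # standard error of the mean
    return m, m - z * se, m + z * se


def paired_difference_ci(scores_a, scores_b, z=1.96):
    """CI for the score difference between two models on the same questions.

    Pairing by question removes question-difficulty variance shared by both models.
    """
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    return mean_with_ci(diffs, z)


if __name__ == "__main__":
    # Made-up per-question correctness (1 = correct) for two hypothetical models.
    galleon = [1, 1, 0, 1, 1, 0, 1, 1]
    dreadnought = [1, 0, 0, 1, 0, 0, 1, 1]
    print(mean_with_ci(galleon))
    print(paired_difference_ci(galleon, dreadnought))
```

If the paired interval includes zero, the data do not clearly show that one model outperforms the other on this eval.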

New Model π0 Enables General Robot Control Through Vision-Language Integration

• The π0 model combines a pre-trained vision-language backbone with a novel action expert for robot control, enabling precise, dexterous tasks such as folding laundry

• Designed to inherit Internet-scale semantic knowledge, the model adapts to varied complex tasks across different robotic platforms including single-arm and dual-arm robots

• π0 can perform tasks zero-shot after pre-training, follow verbal instructions, and gain new abilities through fine-tuning, demonstrating versatility in handling complex activities.

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!