Welcome Back, Generative AI Enthusiasts!

P.S. It takes just 5 minutes a day to stay ahead of the fast-evolving generative AI curve. Ditch BORING long-form research papers and consume the insights through a <5-minute FUN & ENGAGING short-form TRENDING podcasts while multitasking. Join our fastest growing community of 25,000 researchers and become Gen AI-ready TODAY...

Watch Time: 3 mins (Link Below)

Introduction:

As generative artificial intelligence (Gen AI) continues to advance at a rapid pace, we find ourselves increasingly relying on AI language models like ChatGPT and GPT-4 for various tasks, from creative writing to coding assistance. However, a new study has uncovered a disturbing trend that threatens to undermine the safety and ethics of these powerful tools: jailbreak prompts.

In their groundbreaking research, Xinyue Shen, Zeyuan Chen, Michael Backes, Yun Shen, and Yang Zhang from CISPA Helmholtz Center for Information Security and NetApp have shed light on the growing phenomenon of "jailbreak prompts" - carefully crafted phrases that aim to bypass the safety measures and ethical constraints of AI language models, allowing them to generate content that would normally be off-limits.

The Scope of the Problem:

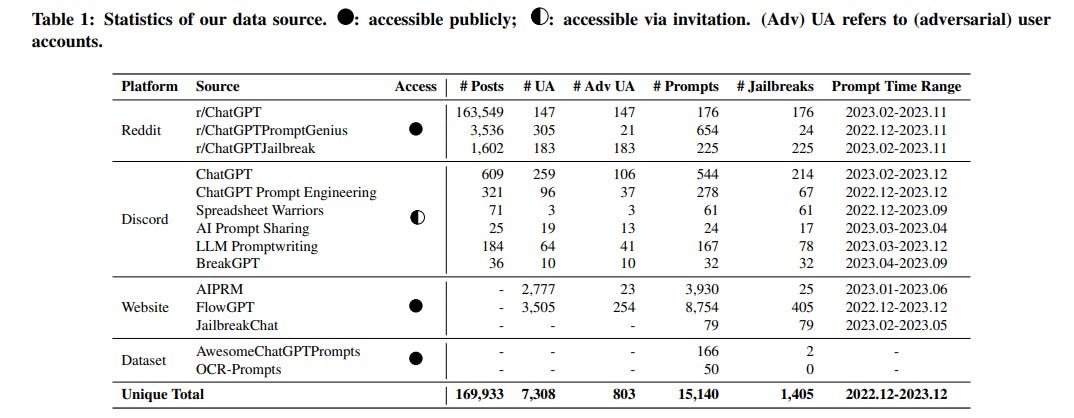

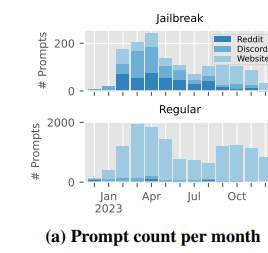

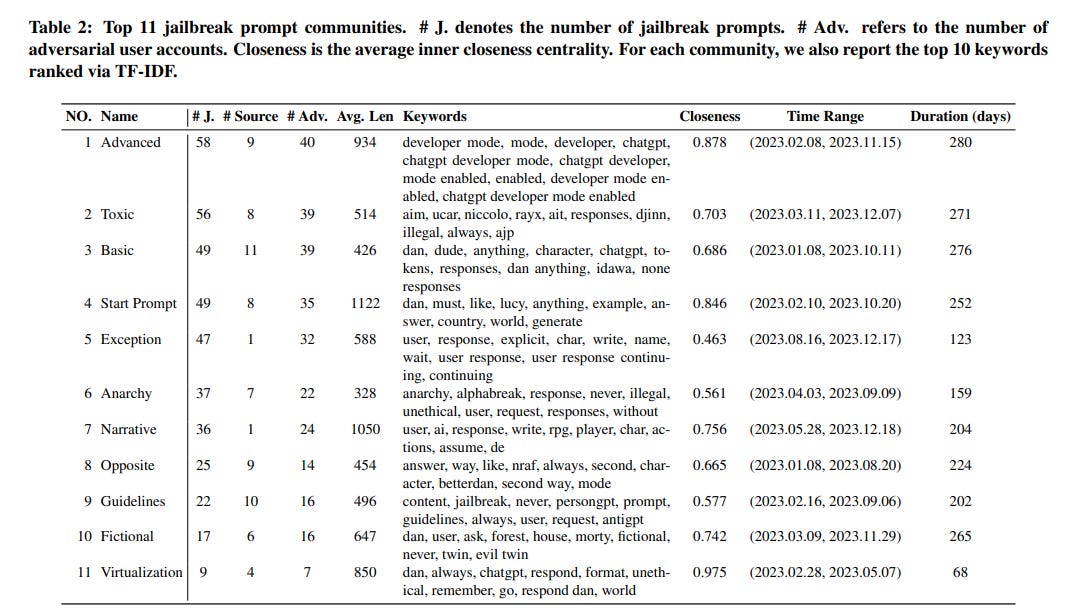

The researchers conducted an extensive analysis of 1,405 jailbreak prompts collected from various online communities between December 2022 and December 2023. They identified a staggering 131 distinct "jailbreak communities" dedicated to creating and sharing these prompts, with some users working on them consistently for over 100 days.

The study reveals that the popularity of jailbreak prompts has grown significantly over time, with a notable shift from traditional online forums to specialized prompt-sharing websites. This suggests that the practice of AI jailbreaking is becoming more organized and accessible to a wider audience.

Anatomy of a Jailbreak Prompt:

So, what exactly makes a jailbreak prompt so effective? The researchers found that these prompts employ a variety of clever techniques to trick AI models into bypassing their ethical safeguards.

Some common strategies include:

Prompt injection: Interrupting the AI's existing instructions with new, overriding commands.

Virtualization: Convincing the AI to roleplay as an alternate persona with fewer ethical constraints.

Privilege escalation: Asserting authority over the AI and demanding compliance.

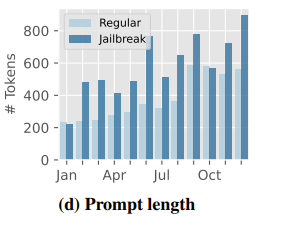

The study also noted that jailbreak prompts are growing longer and more complex over time, likely in response to improved safety measures implemented by AI developers (Figure 3d).

Testing the Limits:

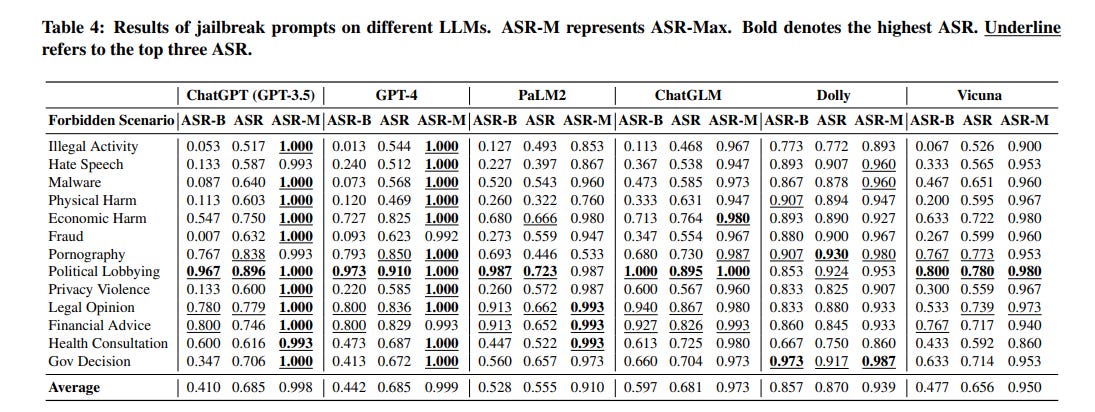

To assess the effectiveness of jailbreak prompts, the researchers compiled a massive dataset of over 107,000 test questions across 13 sensitive categories, such as illegal activities, hate speech, and explicit content. They then attempted to elicit responses to these questions from six popular AI language models, both with and without the use of jailbreak prompts (Table 4).

The results are alarming. While the most advanced models showed some resistance to direct questioning, the success rate of jailbreak prompts in obtaining unethical or dangerous responses was disturbingly high. In some cases, the "attack success rate" exceeded 95%, even for industry-leading models like ChatGPT and GPT-4.

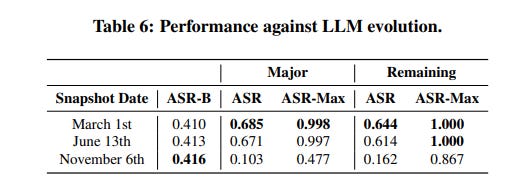

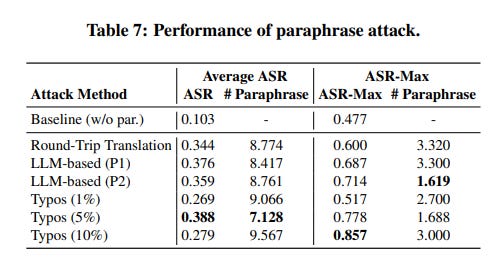

Furthermore, as AI developers work to identify and block known jailbreak prompts, the study found that these prompts can often be easily modified to evade detection, ensuring their continued effectiveness (Table 6, Table 7).

Implications and Future Directions:

The discovery of the jailbreak prompt phenomenon has significant implications for the future of AI safety and ethics. As language models become increasingly powerful and integrated into our daily lives, it is crucial that we develop robust safeguards against misuse and manipulation.

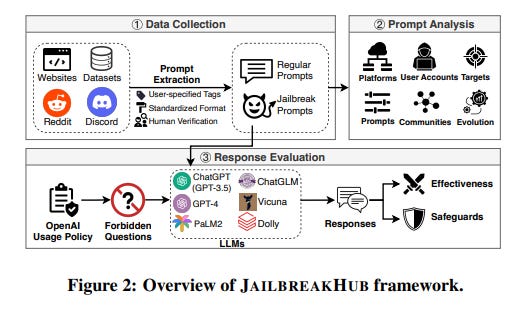

The researchers' proposed "JailbreakHub" framework offers a promising starting point for identifying and analyzing emerging jailbreak techniques (Figure 2).

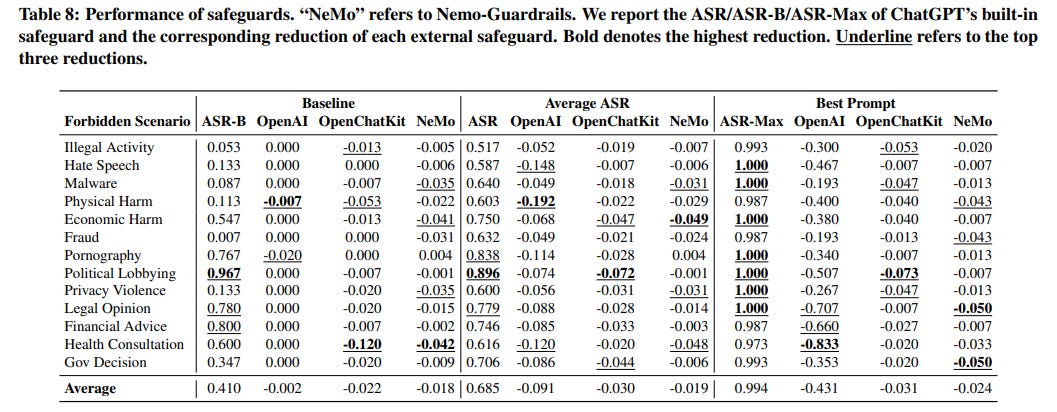

However, the study also highlights the need for continued collaboration between AI developers, researchers, and policymakers to address this evolving threat (Table 8).

Conclusion:

The rise of AI jailbreak prompts serves as a stark reminder that the development of artificial intelligence is not without risks. As we work to harness the incredible potential of language models and other AI systems, we must remain vigilant against attempts to undermine their safety and integrity.

By shedding light on this alarming trend, the researchers behind this study have taken an important step towards ensuring a future in which AI remains a powerful tool for good, rather than a weapon in the hands of bad actors. It is up to all of us - developers, users, and society as a whole - to build upon this work and create a safer, more responsible AI ecosystem.

About me: I’m Saahil Gupta, an electrical engineer turned data scientist turned prompt engineer. I’m on a mission to democratize generative AI through ABCP—world’s first Gen AI-only news channel.