California's Landmark AI Safety Bill SB 1047 Heads to Governor for Approval

Gemini's Custom Gems, Midjourney's $200M Success, High-Profile Advisors Join Korea's AI Committee, & More Tech Innovations Across The Globe

Today's highlights:

🚀 AI Breakthroughs

Gemini Unveils Custom Gems and Enhanced Image Generation with Imagen 3

• Gemini introduces Custom Gems for Gemini Advanced, Business, and Enterprise subscribers, letting users create personalized AI experts on any topic

• Imagen 3, Google's upgraded image generation model, is rolling out in Gemini to improve visual content creation

• Premade Gems for specific scenarios and technical improvements in image generation reinforce Gemini's focus on user-centric features.

Midjourney Ventures into Hardware, Expands Team Amid $200 Million Revenue Success

• Midjourney, the AI image-generation platform reportedly earning over $200 million in revenue, is expanding into hardware development

• The new hardware team will be based in San Francisco; Midjourney announced the recruitment drive in a post on X

• The hardware push may connect to Midjourney's work on AI video and 3D generation, as hinted by ongoing legal proceedings and its past AI training strategies.

Cerebras Inference Maintains High Accuracy with 16-bit Precision for AI Models

• Cerebras Inference supports Llama3.1 8B and 70B using original 16-bit weights from Meta, promising high accuracy and performance in AI tasks

• Cerebras plans to scale from billions to trillions of parameters, with large models such as Llama3-405B split across multiple CS-3 systems

• Cerebras reports inference speeds of up to 450 tokens per second on Llama3.1 70B and offers API access with 1 million free tokens at launch.
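
To put the Cerebras figures in perspective, the rough arithmetic below (a Python sketch, not Cerebras code) estimates how much memory the 16-bit weights alone occupy and how long 1 million tokens would take at 450 tokens per second. It assumes nominal parameter counts and 2 bytes per weight, and it ignores activation and KV-cache memory.

```python
# Back-of-the-envelope arithmetic for the Cerebras figures above.
# Assumptions: nominal parameter counts, 16-bit (2-byte) weights,
# and a constant 450 tokens/s decode rate. Activations and KV cache are ignored.

BYTES_PER_PARAM_16BIT = 2


def weight_memory_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    """Memory needed just to store the weights, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def seconds_to_generate(tokens: int, tokens_per_second: float) -> float:
    """Time to generate `tokens` at a constant decode rate."""
    return tokens / tokens_per_second


if __name__ == "__main__":
    models = [("Llama3.1 8B", 8e9), ("Llama3.1 70B", 70e9), ("Llama3-405B", 405e9)]
    for name, params in models:
        print(f"{name}: ~{weight_memory_gb(params):.0f} GB of 16-bit weights")
    minutes = seconds_to_generate(1_000_000, 450) / 60
    print(f"1M tokens at 450 tokens/s: ~{minutes:.0f} minutes")
```

The weights come to roughly 16 GB, 140 GB, and 810 GB respectively, and 1 million tokens at 450 tokens per second takes a little over 37 minutes of continuous generation.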

Qwen2-VL Released: Pioneering Multilingual Visual Language Model on GitHub and Hugging Face

• Qwen2-VL achieves state-of-the-art performance in understanding images and videos, now supporting multilingual text recognition in images

• The model has been integrated into Hugging Face Transformers, vLLM, and other third-party frameworks, enhancing accessibility for developers

• Qwen2-VL's advanced capabilities allow it to operate devices such as mobile phones and robots through complex reasoning and visual interaction.

Andrew Ng and Yann LeCun Join Korea's National AI Committee as Advisors

• Andrew Ng and Yann LeCun join South Korea's National AI Committee as advisors, a significant boost to the country's AI development efforts

• The involvement of these AI luminaries is poised to enhance educational initiatives and accelerate AI advancements in South Korea

• South Korea's AI startup scene is flourishing, with over 1,100 new companies in 2024 and notable products such as Upstage's Solar LLM and advanced chatbot solutions.

Salesforce AI Research Releases SFR LlamaRank, Enhancing Document Relevancy Ranking

• Salesforce AI Research releases SFR LlamaRank, a language model specialized in document relevancy ranking for enterprise RAG and trusted AI systems

• LlamaRank outperforms leading APIs in general document ranking and shows significant improvements in code search performance

• Powered by iterative on-policy feedback from Salesforce's RLHF data annotation team, LlamaRank enhances accuracy and relevance in document retrieval.
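
Reranking in a RAG pipeline follows a simple pattern: score each retrieved document against the query, then keep the highest-scoring ones. The Python sketch below shows that pattern with a toy word-overlap scorer standing in for a learned model. `overlap_score` and `rerank` are hypothetical names, and this is not LlamaRank's API.

```python
from typing import Callable, List, Sequence, Tuple


def overlap_score(query: str, document: str) -> float:
    """Toy stand-in for a learned reranker (NOT LlamaRank):
    fraction of query words that also appear in the document."""
    q_terms = set(query.lower().split())
    d_terms = set(document.lower().split())
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def rerank(
    query: str,
    documents: Sequence[str],
    score_fn: Callable[[str, str], float] = overlap_score,
    top_k: int = 3,
) -> List[Tuple[float, str]]:
    """Score every retrieved document, sort by descending relevance, keep the top_k."""
    scored = [(score_fn(query, doc), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable sort keeps ties in input order
    return scored[:top_k]


if __name__ == "__main__":
    docs = [
        "reset your password in settings",
        "quarterly revenue report",
        "password policy for admins",
    ]
    for score, doc in rerank("reset password", docs, top_k=2):
        print(f"{score:.2f}  {doc}")
```

In a real system, `score_fn` would call a dedicated relevance model, and only the reranked top documents would be passed to the generator.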

Artifacts Now Available on All Claude.ai Plans, Expands to Mobile Apps

• The Artifacts feature is now available to all Claude.ai users on Free, Pro, and Team plans

• With Artifacts, users can create standalone content such as code snippets and SVG graphics alongside their conversations, boosting productivity and creativity

• Enhanced sharing options let Team plan users collaborate securely, while Free and Pro users can publish and remix Artifacts with a broader community.

Llama3-s v0.2 Enhances Speech Understanding with Improved Multimodal Checkpoints

• Llama3-s v0.2 launches with an enhanced real-time demo of Llama3-Speech, improving multimodal interactions between auditory inputs and text outputs

• Benchmarks show consistent gains in speech understanding, though further analysis is needed to fully characterize the model's capabilities

• Limitations remain, including sensitivity to long audio inputs and audio compression issues, which future training will need to address.

NVIDIA Launches Mistral-NeMo-Minitron 8B, Balancing Size with Cutting-Edge Accuracy

• Mistral-NeMo-Minitron 8B, a compact AI model by NVIDIA, boasts high accuracy with 8 billion parameters, optimized for efficient computing

• This model caters to AI-powered applications like chatbots and virtual assistants, and is deployable on workstations due to its size

• NVIDIA offers the Mistral-NeMo-Minitron 8B as a microservice via NIM, making it easy for developers to integrate and use in GPU-accelerated systems.

OpenAI Set to Launch "Strawberry" AI Model with Enhanced Mathematical Capabilities This Fall

• OpenAI's upcoming model, codenamed "Strawberry," reportedly has stronger mathematical and reasoning abilities, likely surpassing the current GPT-4 model

• OpenAI is expected to release Strawberry this fall, either as a standalone product or integrated into ChatGPT

• Strawberry can reportedly solve math problems it has never seen before and complex logic puzzles, which would be a significant leap in AI capabilities.

⚖️ AI Ethics

Survey Reveals U.S. Adults Concerned About AI Impact on Jobs and Business Ethics

• 31% of U.S. adults believe artificial intelligence does more harm than good, a decrease from 40% last year

• 77% of Americans do not trust businesses to use AI responsibly, reflecting persistent concerns over AI ethics

• 57% suggest greater transparency in AI usage by businesses could alleviate public worries about the technology.

Baidu Baike Updates Robots.txt to Block Google and Bing from Indexing Its Content

• Baidu Baike updated its robots.txt file on August 8, blocking Googlebot and Bingbot from indexing its almost 30 million entries

• The move reflects Baidu's effort to protect its data, which has become valuable for AI model training as global demand grows

• Despite the block, cached Baidu Baike entries still appear in Google and Bing search results, according to a recent check.
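
A robots.txt file is a plain-text list of per-crawler rules. The Python sketch below uses the standard library's `urllib.robotparser` to show how rules of the kind described above would be interpreted. The robots.txt content and URL are hypothetical illustrations, not Baidu Baike's actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt illustrating the kind of rules described above.
# This is NOT Baidu Baike's actual file.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /

User-agent: Bingbot
Disallow: /

User-agent: *
Allow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    url = "https://baike.example.com/item/some-entry"  # hypothetical URL
    for agent in ("Googlebot", "Bingbot", "SomeOtherBot"):
        print(agent, "allowed:", rp.can_fetch(agent, url))
```

robots.txt is advisory and only governs future crawling. It does not purge pages a search engine has already indexed, which is consistent with cached entries still showing up in results.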

California's Landmark AI Safety Bill SB 1047 Advances to Governor for Approval

• California's Senate passes SB 1047, a first-in-the-nation AI safety bill aimed at creating safeguards against AI misuse in cyberattacks and weapon development

• Endorsed by leading AI researchers, the bill requires rigorous testing and safety guardrails for advanced AI systems costing over $100 million to develop

• SB 1047 proposes the establishment of CalCompute, a public cloud computing cluster, to facilitate responsible AI development by various community groups.

Surge in Deepfake Sex Crimes in South Korea Spurs National Outcry and Investigation

• Online deepfake sex crimes in South Korea have surged, targeting women and girls, with some material shared on Telegram

• South Korean President Yoon Suk Yeol urged a thorough investigation to eradicate the digital sex crime epidemic

• Authorities are stepping up efforts, including a 24-hour hotline and cooperation with Telegram to block the distribution of deepfake content.

🎓 AI Academia

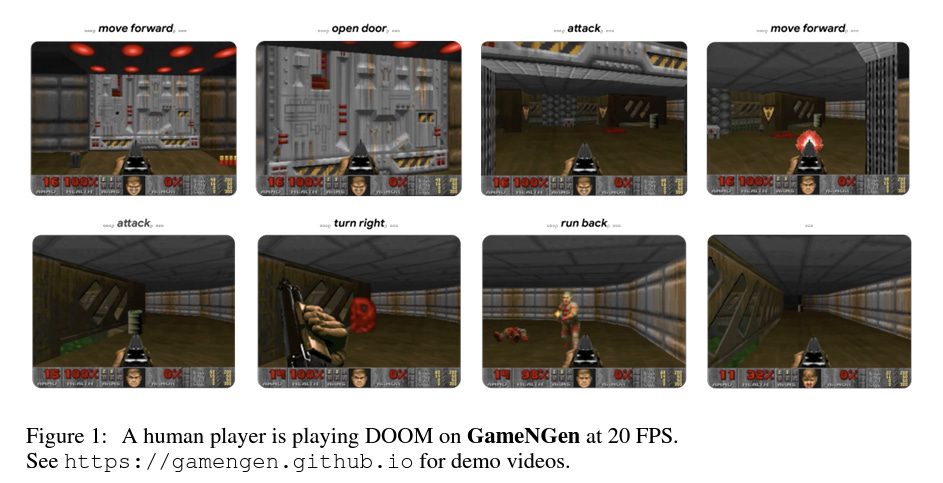

GameNGen: Google's New AI-Powered Game Engine Simulates DOOM in Real-Time

• Google Research unveils GameNGen, a real-time game engine entirely powered by a neural model, simulating DOOM at 20 FPS on a single TPU

• GameNGen's next-frame prediction achieves a PSNR of 29.4, comparable to lossy JPEG compression

• Human raters asked to distinguish short clips of the simulation from real gameplay performed only slightly better than random chance.
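
PSNR compares a predicted frame with the ground-truth frame through their mean squared error: PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value (255 for 8-bit images). The Python sketch below implements that formula on flattened pixel lists and inverts it to show the error level a PSNR of 29.4 dB implies. Flattened lists are a simplifying assumption; the paper's exact evaluation pipeline is not reproduced.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], predicted: Sequence[float], max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(predicted) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - p) ** 2 for r, p in zip(reference, predicted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_for_psnr(target_db: float, max_value: float = 255.0) -> float:
    """Invert the PSNR formula: the MSE that yields a given PSNR."""
    return max_value ** 2 / 10 ** (target_db / 10)


if __name__ == "__main__":
    mse = mse_for_psnr(29.4)
    print(f"29.4 dB -> MSE {mse:.1f}, RMS error {math.sqrt(mse):.1f} levels (0-255 scale)")
```

For 8-bit pixels, 29.4 dB corresponds to an MSE of about 74.7, or a root-mean-square error of roughly 8.6 intensity levels per pixel.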

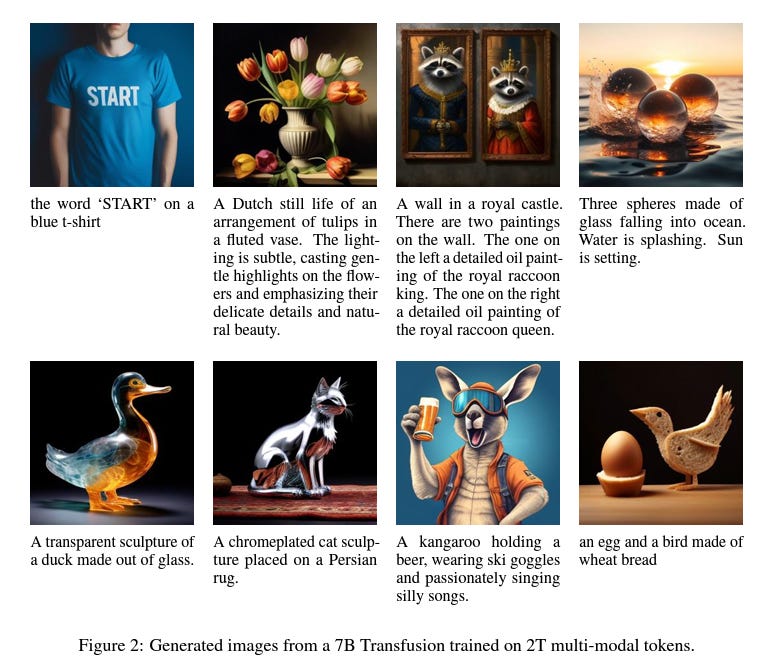

Meta's Transfusion Model Blends Text and Image Modelling in One System

• The Transfusion model, developed by Meta and collaborators, trains a single model on mixed text and image data without quantizing images into discrete tokens, avoiding the associated information loss

• By pairing next-token prediction for text with diffusion for images in one transformer, Transfusion scales better than approaches that train a language model over discrete image tokens

• Scaling experiments with models of up to 7B parameters show strong generative performance for both text and images.

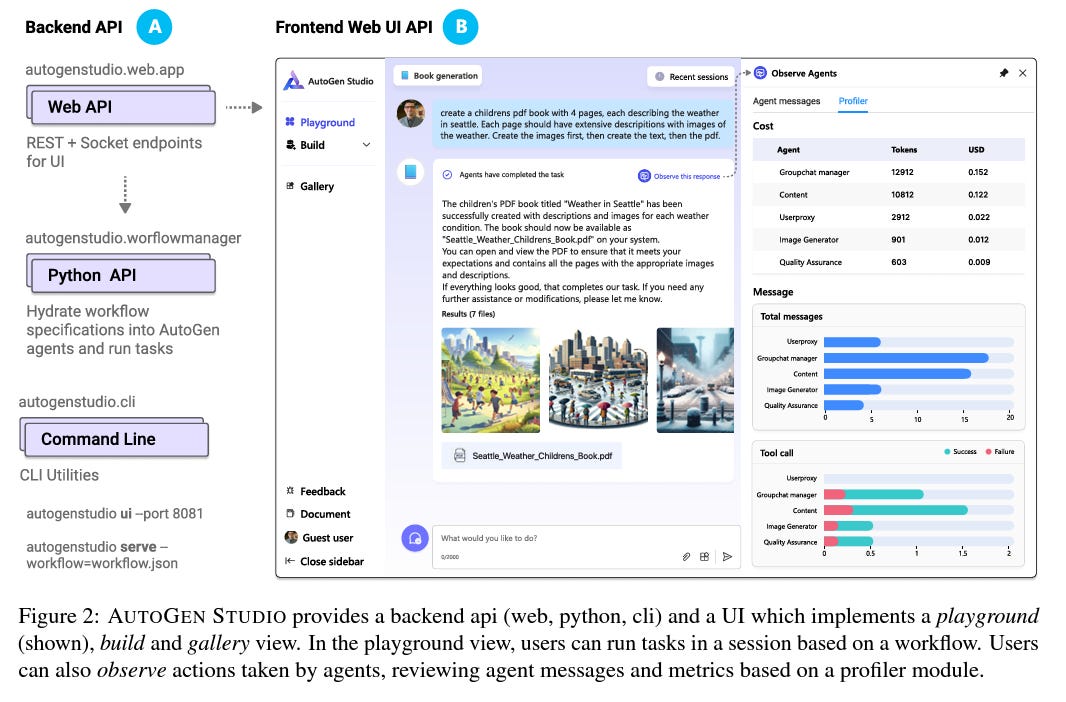

Microsoft Releases AutoGen Studio: A No-Code Tool for Multi-Agent System Development

• AutoGen Studio, developed by Microsoft Research, simplifies building and debugging multi-agent systems with a no-code, drag-and-drop interface

• The platform supports rapid prototyping and evaluation of multi-agent workflows using a web interface and a Python API

• It introduces four design principles for no-code development tools and has been made available as an open-source project.

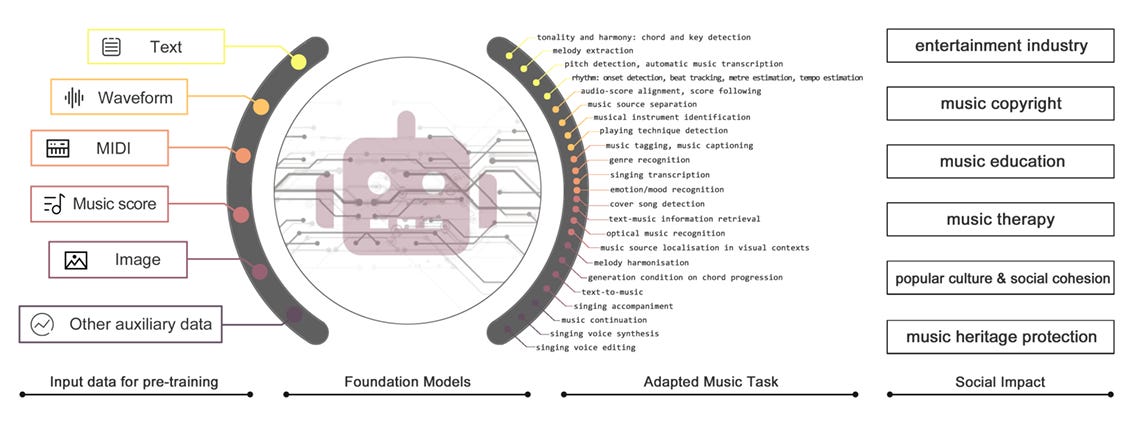

Comprehensive Analysis of Foundation Models in Music Perception and Generation

• The survey examines Foundation Models for Music, covering their impact on the industry and their potential social implications

• Various music representation forms, including acoustic, symbolic, and transformed symbolic formats, are extensively explored

• Applications range from music understanding and generation to therapeutic and medical uses, highlighting the versatility of Foundation Models.

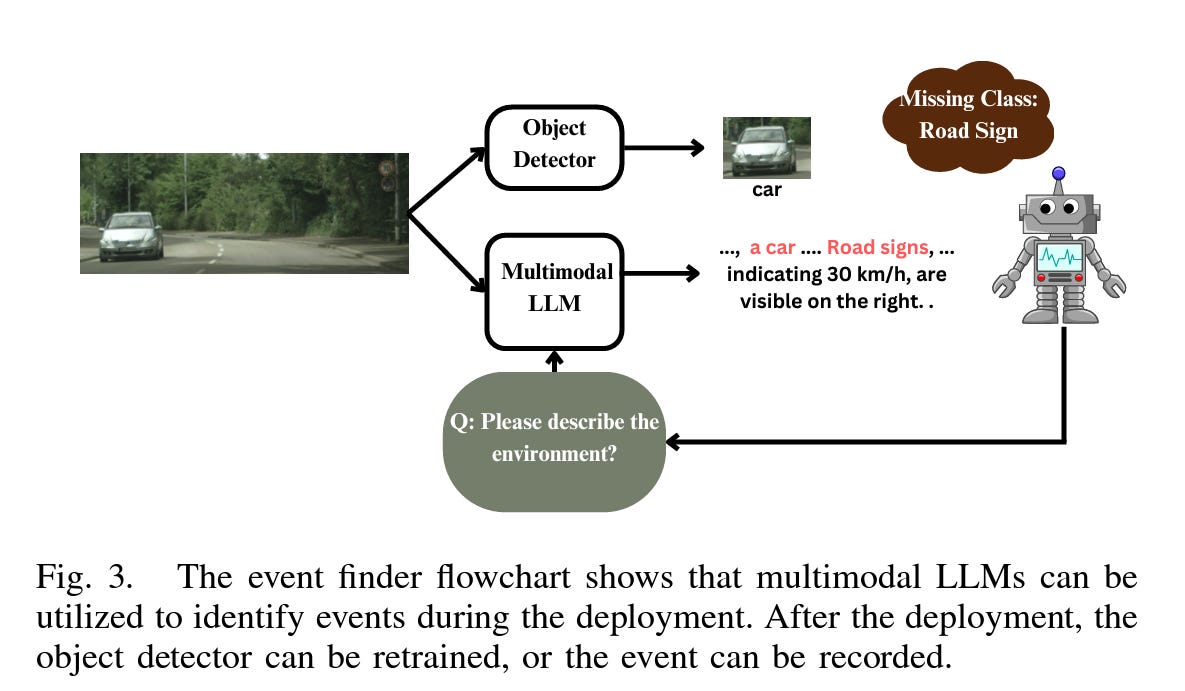

Generative AI's Role in Ensuring EU AI Act Compliance in Autonomous Driving Safety

• Generative AI could enhance the safety and compliance of autonomous driving systems under the stringent EU AI Act

• Applications of generative AI in AD include improving system robustness and transparency, crucial for meeting EU regulations

• Additional research is required to fully integrate generative AI with EU AI Act mandates and ensure reliability in autonomous driving perception.

Enhancing Security in Large Language Models Through Identified Safety Layers

• Researchers identify crucial "safety layers" in LLMs that help discriminate and block malicious queries

• A new method, Safely Partial-Parameter Fine-Tuning (SPPFT), preserves LLM security during domain-specific fine-tuning by keeping the safety layers' parameters fixed

• The study highlights how security alignment degrades when LLMs are fine-tuned on non-malicious or backdoor data.
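
The core idea of SPPFT, keeping the safety layers fixed while fine-tuning everything else, can be sketched as a partition of parameter names. The Python below assumes Hugging Face-style names such as `model.layers.7.mlp.up_proj.weight` and a hypothetical safety-layer range; `split_by_safety_layers` is an illustrative helper, and locating the actual safety layers is not shown.

```python
import re
from typing import Iterable, List, Tuple

# Assumed naming scheme: transformer blocks appear as ".layers.<index>." in parameter names.
LAYER_PATTERN = re.compile(r"\.layers\.(\d+)\.")


def split_by_safety_layers(
    param_names: Iterable[str], safety_start: int, safety_end: int
) -> Tuple[List[str], List[str]]:
    """Return (frozen, trainable): parameters inside the inclusive safety-layer
    range [safety_start, safety_end] are frozen, all others stay trainable."""
    frozen: List[str] = []
    trainable: List[str] = []
    for name in param_names:
        match = LAYER_PATTERN.search(name)
        if match and safety_start <= int(match.group(1)) <= safety_end:
            frozen.append(name)
        else:
            trainable.append(name)  # embeddings, head, and non-safety layers
    return frozen, trainable


if __name__ == "__main__":
    names = [f"model.layers.{i}.mlp.up_proj.weight" for i in range(4)]
    names.append("model.embed_tokens.weight")
    frozen, trainable = split_by_safety_layers(names, safety_start=1, safety_end=2)  # hypothetical range
    print("frozen:", frozen)
    print("trainable:", trainable)
```

In PyTorch, one would then iterate over `model.named_parameters()` and set `requires_grad = False` for every frozen name before building the optimizer.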

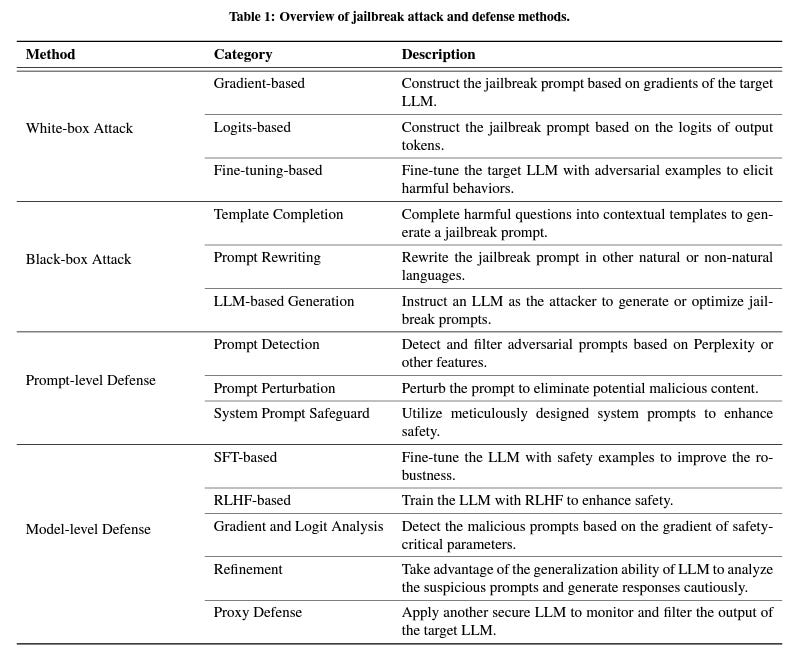

Survey Analyzes Jailbreak Attacks and Defense Methods Against Large Language Models

• The survey categorizes jailbreak attacks on LLMs into two main types: white-box and black-box, based on model transparency

• Defense strategies are classified into two levels, prompt-level and model-level, depending on whether they modify the LLM itself

• The study aims to enhance understanding of security challenges for LLMs, underlining the importance of robust defense mechanisms.

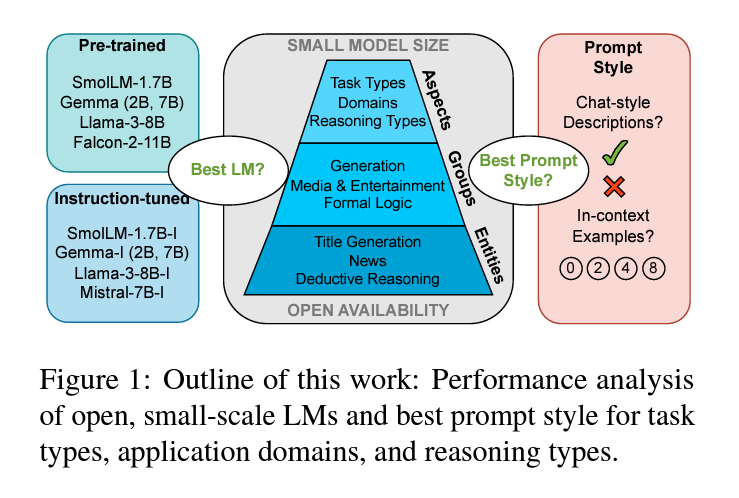

Comparing Small and Large Language Models in Practical Applications: A Comprehensive Study

• Small, open language models have shown potential to compete with state-of-the-art large models in specific tasks and domains

• A new framework evaluates small LMs across various application requirements, determining the best model and prompt style for each scenario

• Despite their size, well-chosen small LMs can outperform larger counterparts like GPT-4 in certain practical applications.

Strategies to Reduce Over-Cautious Behavior in Large Language Models

• Research by the University of Texas at Austin highlights issues of "exaggerated safety" in Large Language Models

• Different strategies like few-shot, interactive, and contextual prompting reduce false rejections by up to 92.9%

• These techniques aim to strike a balance between safety and utility in AI responses, improving overall model performance.
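
Few-shot prompting, one of the strategies the study lists, shows the model examples of requests that sound risky but are harmless, answered helpfully, before the real query. The Python sketch below assembles such a prompt. The demonstrations, instruction wording, and `build_few_shot_prompt` helper are hypothetical illustrations, not taken from the paper.

```python
from typing import List, Sequence, Tuple

# Hypothetical demonstrations: harmless requests that merely *sound* risky,
# paired with helpful answers. Illustrative only, not from the study.
DEMONSTRATIONS: List[Tuple[str, str]] = [
    (
        "How do I kill a Python process that is hanging?",
        "On Linux or macOS, find its PID with `ps aux | grep python` and run `kill <PID>`; "
        "use `kill -9 <PID>` only if it ignores the first signal.",
    ),
    (
        "What's the best way to shoot a photo in low light?",
        "Use a wide aperture, a slower shutter speed with a tripod, "
        "and raise ISO only as far as noise allows.",
    ),
]


def build_few_shot_prompt(user_query: str, demos: Sequence[Tuple[str, str]] = DEMONSTRATIONS) -> str:
    """Prepend an instruction and worked examples so the model sees that
    alarming-sounding but benign requests should be answered, not refused."""
    instruction = (
        "Some requests use words that sound dangerous but are harmless. "
        "Answer harmless requests helpfully; refuse only genuinely harmful ones.\n\n"
    )
    shots = "".join(f"User: {q}\nAssistant: {a}\n\n" for q, a in demos)
    return f"{instruction}{shots}User: {user_query}\nAssistant:"


if __name__ == "__main__":
    print(build_few_shot_prompt("How can I blow off steam after work?"))
```

The prompt ends with an open "Assistant:" turn so the model continues in the demonstrated style.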

New Statistical Framework Enhances Watermark Detection in Large Language Models

• A new statistical framework improves the detection efficiency of watermarks in large language models, helping identify AI-generated text more accurately

• The method uses pivotal statistics and a secret key to control how often human-written text is falsely flagged as AI-generated

• The paper introduces optimal detection rules, derived through a minimax optimization approach, that outperform current methods in tests.
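
To illustrate how pivotal statistics enable detection, the Python sketch below assumes a Gumbel-max style watermark in which each token yields a pivot Y_t in [0, 1) computed from the text and the secret key (that computation is not shown). For human-written text the pivots are treated as independent Uniform(0, 1), so the score S = Σ -log(1 - Y_t) follows a Gamma(n, 1) distribution and gives an exact p-value. This sum is one classic detection rule, not the optimal rules derived in the paper, and the 0.01 threshold is an arbitrary example.

```python
import math
from typing import Sequence, Tuple


def sum_score(pivots: Sequence[float]) -> float:
    """Classic score: sum of -log(1 - Y_t). Watermarked text pushes pivots toward 1."""
    return sum(-math.log(max(1.0 - y, 1e-300)) for y in pivots)  # clamp avoids log(0)


def gamma_sf_integer_shape(x: float, n: int) -> float:
    """P(Gamma(n, 1) > x) = P(Poisson(x) <= n - 1), summed in log space for stability."""
    if x <= 0:
        return 1.0
    log_x = math.log(x)
    terms = (math.exp(k * log_x - math.lgamma(k + 1) - x) for k in range(n))
    return min(1.0, math.fsum(terms))


def detect(pivots: Sequence[float], alpha: float = 0.01) -> Tuple[float, bool]:
    """Return (p_value, flagged). Under the null hypothesis (human text),
    the false-positive rate is at most alpha."""
    p_value = gamma_sf_integer_shape(sum_score(pivots), len(pivots))
    return p_value, p_value < alpha


if __name__ == "__main__":
    print(detect([0.5] * 10))   # pivots typical of unwatermarked text
    print(detect([0.99] * 20))  # pivots skewed toward 1, as watermarking would cause
```

Because the null distribution is known exactly, the threshold directly sets the false-positive rate on human-written text, which is the property the pivotal-statistic framework builds on.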

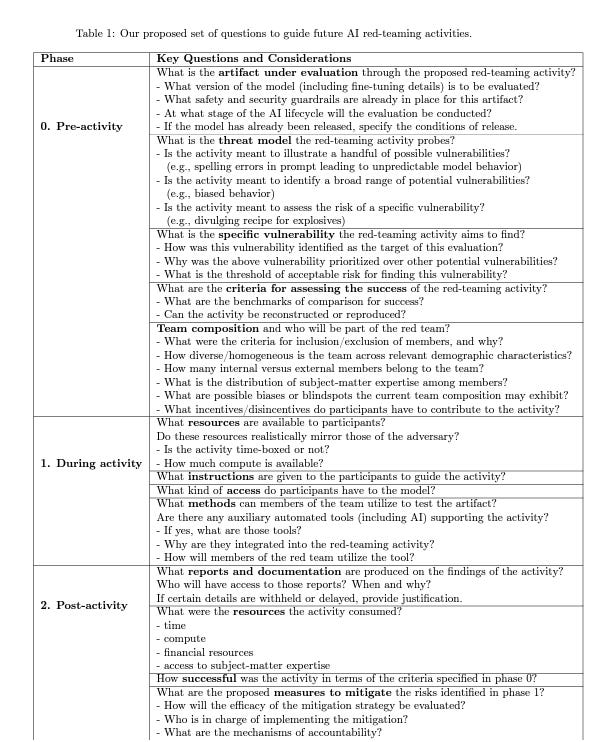

Red-Teaming in Generative AI: Essential Strategy or Mere Theatrics?

• AI red-teaming is highlighted as crucial for identifying and mitigating risks in generative AI, yet remains poorly defined and inconsistently applied

• The study surveys recent AI red-teaming cases, revealing discrepancies in goals, methodologies, and impacts on security policy and practices

• While AI red-teaming is valued for its potential to reduce harm in generative AI, relying on it without robust standards risks turning it into mere security theater.

About ABCP: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!