Baidu’s New 'High IQ' AI Model: DESTROYS GPT-4.5 & DeepSeek? 🤯

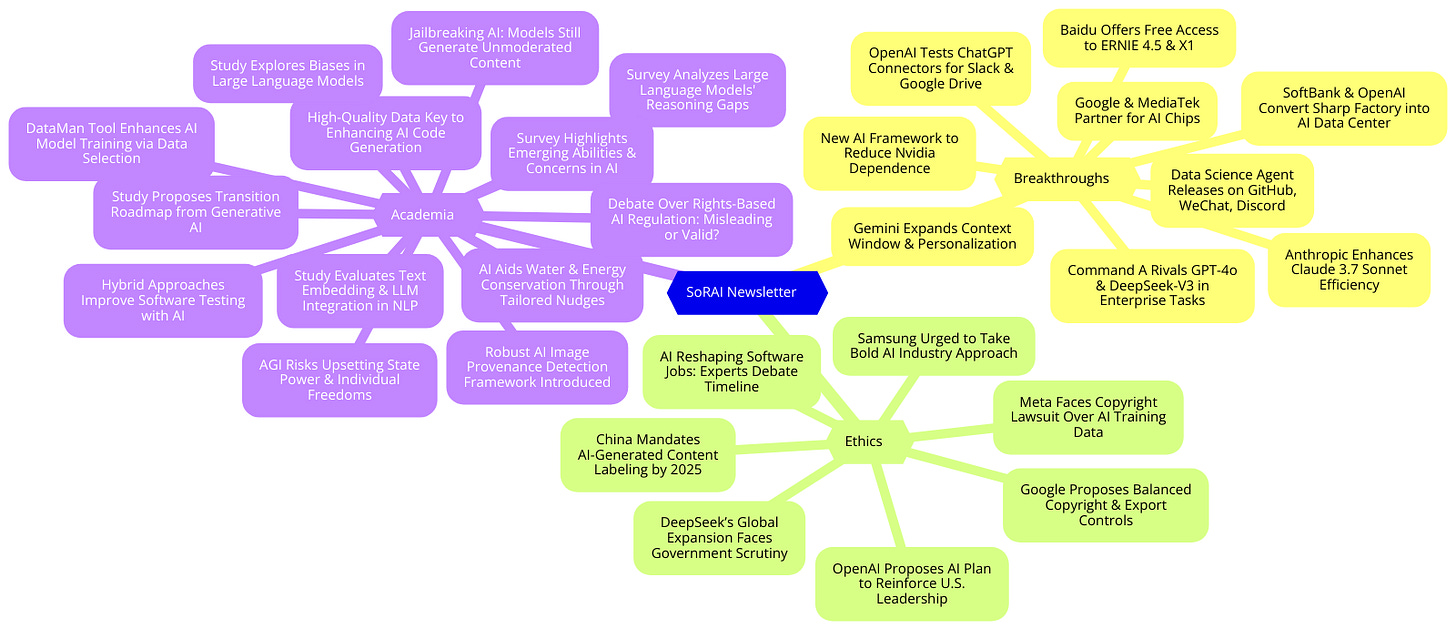

Today's highlights:

Are you ready to join the Responsible AI journey, but need a clear roadmap to a rewarding career? Do you want structured, in-depth training that builds your skills, boosts your credentials, and reveals any knowledge gaps? Are you aiming to secure your dream role in AI Governance and stand out in the market, or become a trusted AI Governance leader? If you answered yes to any of these questions, let’s connect before it’s too late! Book a chat here.

🚀 AI Breakthroughs

Baidu Unveils ERNIE 4.5 and Reasoning Model ERNIE X1

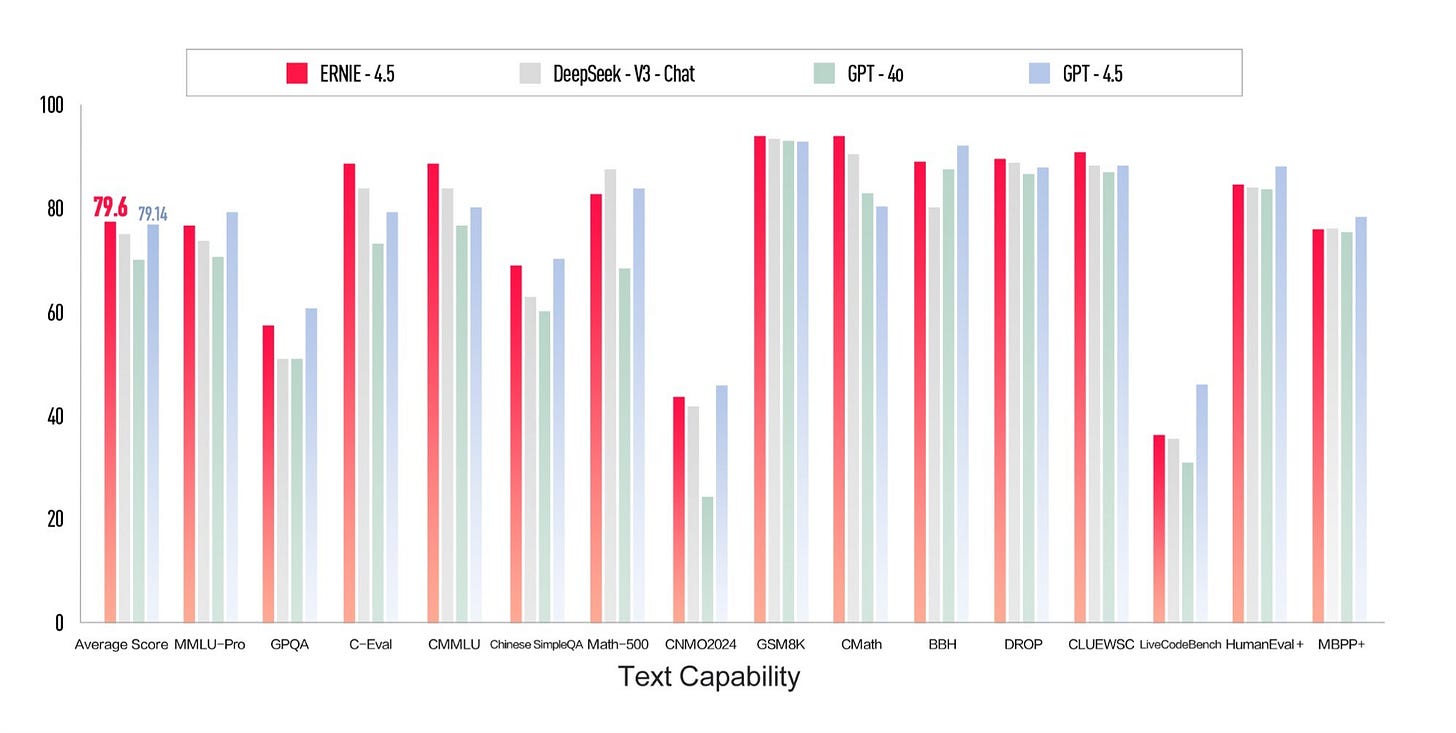

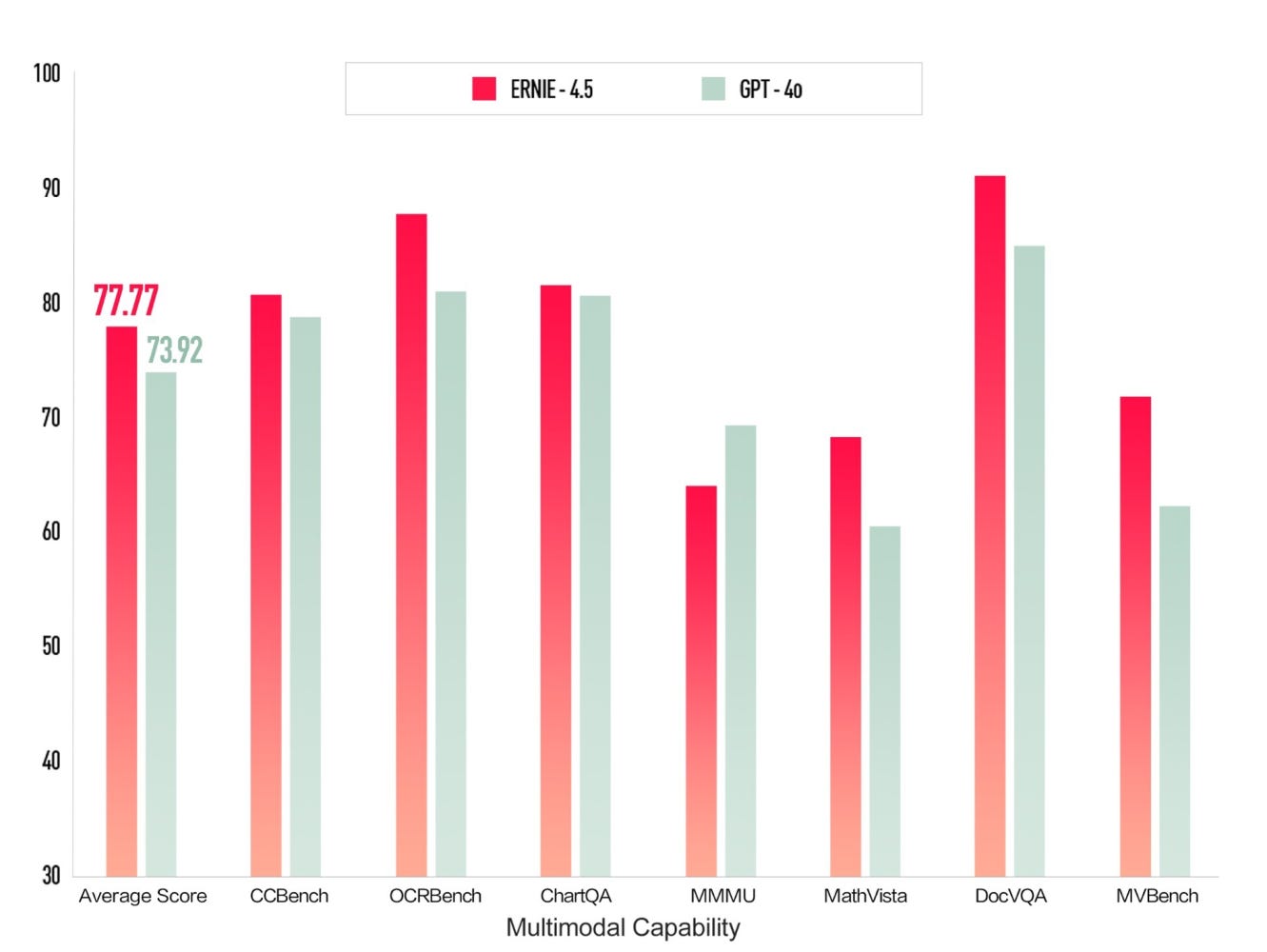

• Baidu has launched ERNIE 4.5 and ERNIE X1, offering them free to the public via ERNIE Bot, ahead of the planned April 1 release date;

• ERNIE X1, Baidu's multimodal deep-thinking reasoning model, matches DeepSeek R1's performance at half the price and offers advanced capabilities such as Chinese Q&A and complex calculations;

• Baidu's ERNIE 4.5 foundation model integrates multimodal content and surpasses GPT-4.5 on benchmarks while priced at 1% of GPT-4.5, with potential API access for enterprise users on Baidu AI Cloud.

New AI Framework from China Targets Nvidia Chip Dependence with Local Solutions

• China's Tsinghua University has developed Chitu, an AI framework that potentially reduces the reliance on Nvidia chips, marking a significant step in technological self-sufficiency for AI model inference

• Chitu operates on Chinese-made chips, challenging Nvidia's Hopper GPUs, and supports mainstream models like DeepSeek and Meta's Llama series, offering a notable advancement in AI framework technology

• Tested with Nvidia A800 GPUs, Chitu achieved a 315% increase in DeepSeek-R1 inference speed while halving GPU usage, intensifying competition against Nvidia amid U.S. export restrictions on advanced chips.

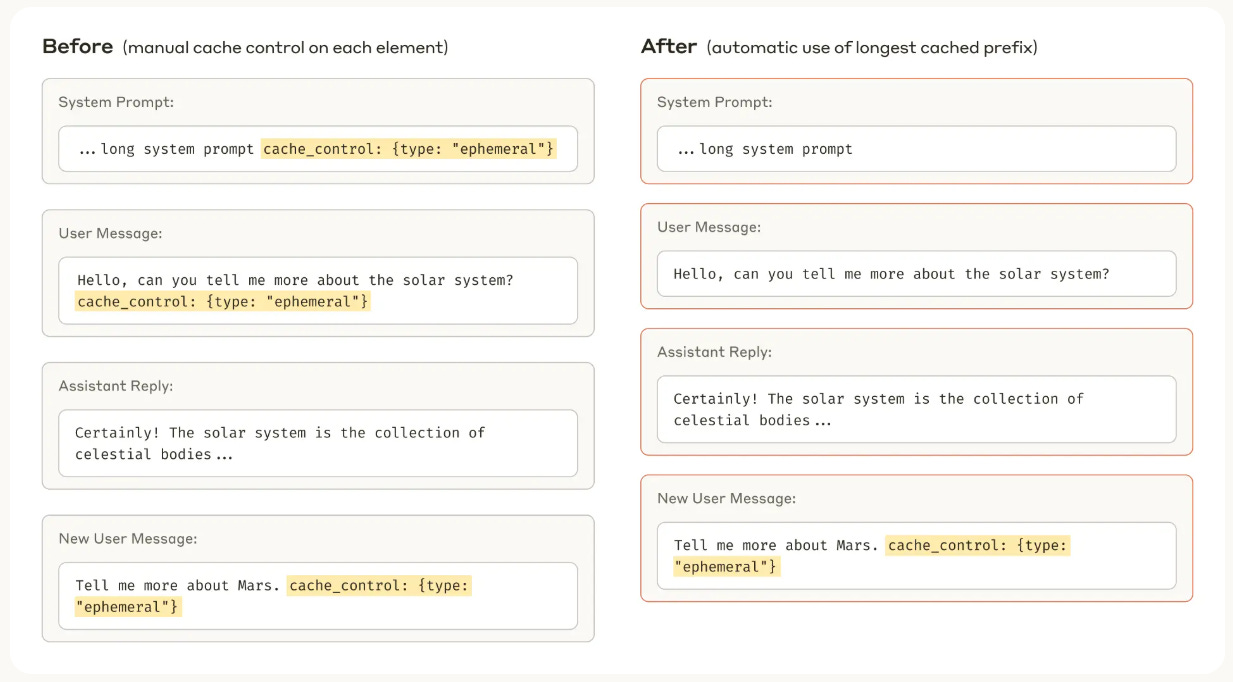

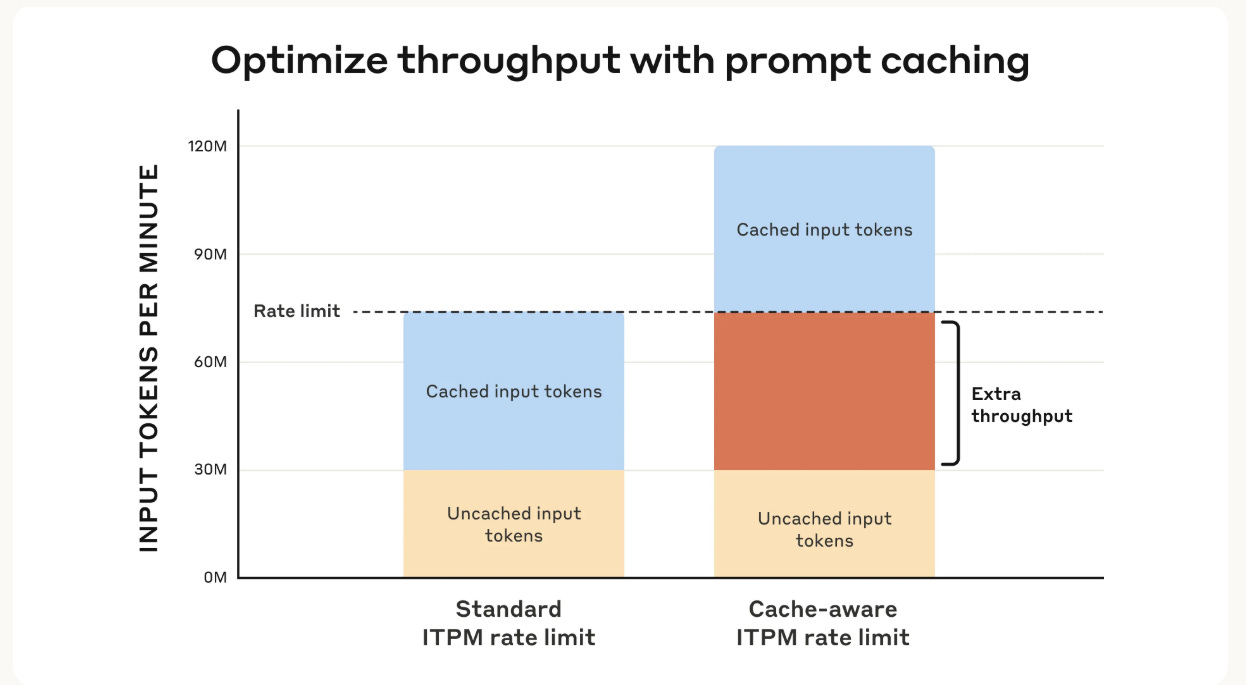

Anthropic Boosts Claude 3.7 Sonnet API Efficiency with New Caching and Token Features

• Anthropic API updates for Claude 3.7 Sonnet enhance developers’ experience with cache-aware rate limits, improved prompt caching, and token-efficient tool usage, significantly boosting throughput and reducing costs;

• New prompt caching techniques in Claude 3.7 Sonnet allow developers to reuse frequently accessed context, cutting costs by up to 90% and latency by 85% for extended prompts;

• The beta token-efficient tool use feature in Claude 3.7 Sonnet reduces output token consumption by 70%, now available on Anthropic API, Amazon Bedrock, and Google Cloud's Vertex AI.
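To see where a figure like "up to 90%" can come from, here is a minimal cost sketch. It assumes a base input price of $3 per million tokens and cached reads billed at 10% of that price; both numbers are illustrative assumptions, not taken from the announcement, and the one-time cost of writing to the cache is ignored.

```python
def prompt_cost(prefix_tokens, suffix_tokens, cached=False,
                base_price_per_mtok=3.00,    # assumed base input price (USD per 1M tokens)
                cache_read_multiplier=0.10): # assumed discount for cached reads
    """Return the input cost in USD for one request.

    prefix_tokens: long, reusable context (the part that can be cached)
    suffix_tokens: the fresh part of the prompt, always billed at full price
    """
    prefix_rate = base_price_per_mtok * (cache_read_multiplier if cached else 1.0)
    total = prefix_tokens * prefix_rate + suffix_tokens * base_price_per_mtok
    return total / 1_000_000


# A 100k-token shared context followed by a short 500-token question.
uncached = prompt_cost(100_000, 500)
cached = prompt_cost(100_000, 500, cached=True)
savings = 1 - cached / uncached

print(f"uncached: ${uncached:.4f}  cached: ${cached:.4f}  savings: {savings:.1%}")
```

Under these assumptions the saving approaches, but never quite reaches, 90%, because the uncached part of the prompt is still billed at the full rate. The longer the reused context relative to the fresh text, the closer the saving gets to that ceiling.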

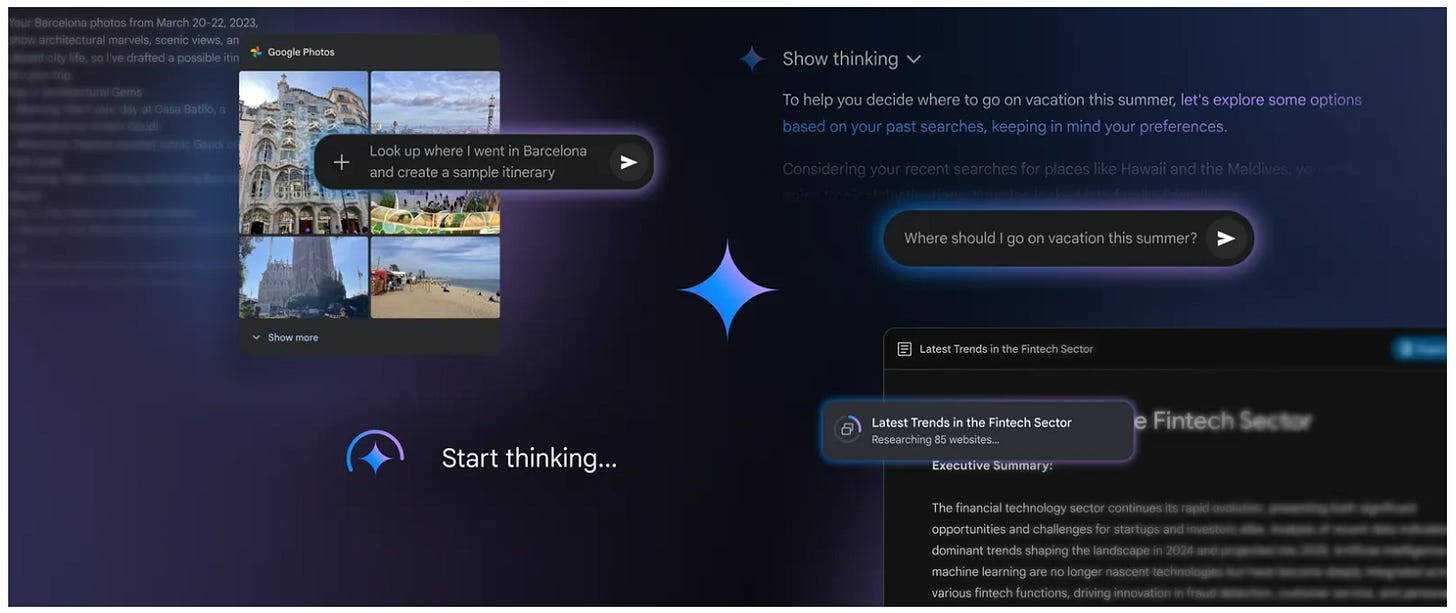

Gemini Features Enhanced with New Personalization and Expanded Context Window Capabilities

• Significant upgrades improve the performance and availability of Gemini's popular features, enhancing its utility by making it more personal and helpful for users worldwide;

• The 2.0 Flash Thinking Experimental model introduces new capabilities, such as file upload, increased reasoning efficiency, and a 1M token context window, enabling more complex problem-solving;

• Enhanced Deep Research capabilities using Gemini 2.0 Flash Thinking Experimental model are now accessible, offering smarter, detailed multi-page reports with real-time insight into the research process.

Google Partners with MediaTek in Strategic Move to Develop AI Chips Next Year

• Google is reportedly planning a collaboration with Taiwan's MediaTek to design next-gen AI chips, with production expected next year, according to The Information

• MediaTek was chosen for its strong TSMC ties and lower costs compared to Broadcom, though neither company has officially announced the partnership

• Despite efforts to develop AI chips in-house, Google is expected to keep relying on partners like MediaTek and Broadcom for production and quality testing.

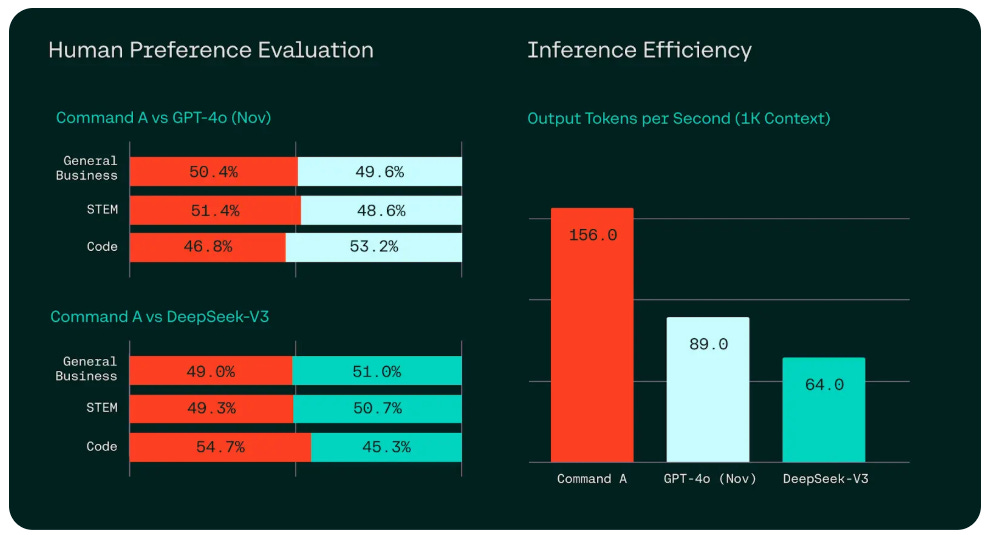

Command A Matches GPT-4o and DeepSeek-V3 in Enterprise Tasks with Efficiency

• Cohere's Command A performs on par with or better than GPT-4o and DeepSeek-V3 on enterprise tasks while requiring less compute;

• The AI model reduces computational requirements while maintaining high-performance standards, offering a more sustainable solution for businesses relying on agentic enterprise tasks;

• Enhanced efficiency in Command A can lead to reduced operational costs and increased accessibility, benefiting organizations needing streamlined AI solutions for robust enterprise applications.

SoftBank and OpenAI Transform Sharp Factory into Advanced AI Data Center in Japan

• SoftBank secures Sharp Sakai Plant in Osaka for $676 million, converting it into an AI data center central to its AI ambitions in Japan

• Collaboration between SoftBank and OpenAI intensifies with plans to deploy advanced AI enterprise model "Cristal Intelligence" and develop AI models at the Sakai facility

• The Sakai AI data center, operational by 2026, will initially run at 150 megawatts, eventually upgrading to over 240 megawatts, expanding Japan's AI infrastructure capacity.

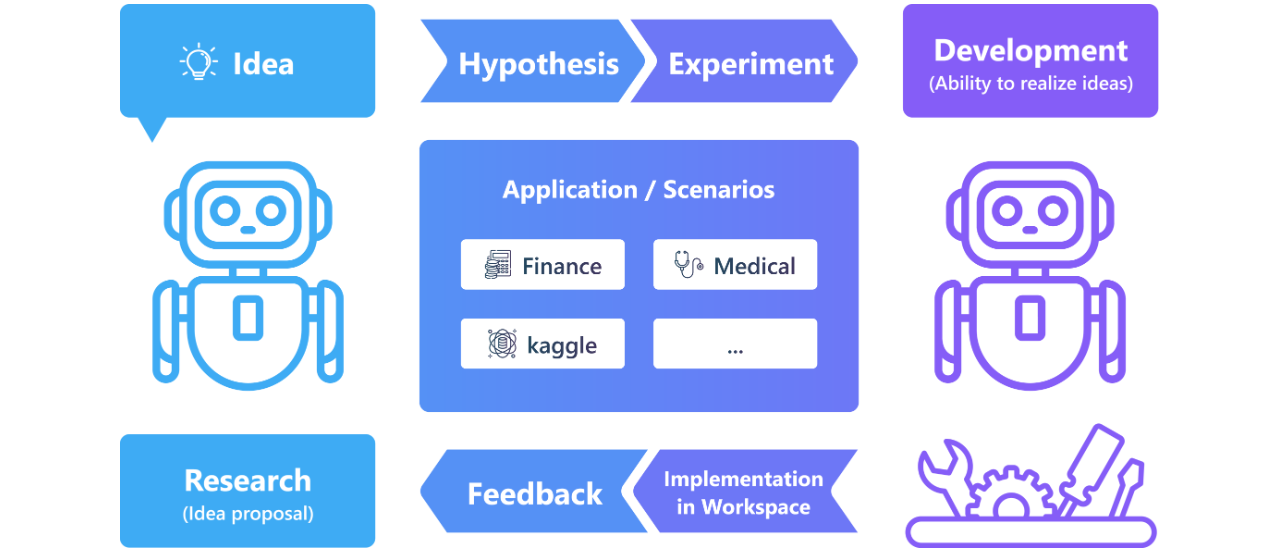

Microsoft's RD-Agent: An Agent for Data-Driven R&D

• The Data Science Agent Preview showcases a new automation tool for industrial R&D, offering demos and setup guidelines for optimal implementation

• Users can access various automated scenarios like model evolution and feature engineering, particularly enhancing Kaggle competition participation through innovative functionalities.

OpenAI to Test ChatGPT Connectors for Slack and Google Drive Integration

• OpenAI will beta test ChatGPT Connectors, allowing Team subscribers to link Slack and Google Drive and enrich responses with data from files and conversations

• ChatGPT Connectors use a GPT-4o model to process internal company data securely, respecting permissions and sync preferences for privacy assurance

• Although ChatGPT Connectors do not access Slack DMs or images in files, they enhance business software use and could challenge AI enterprise search tools.

⚖️ AI Ethics

AI Set to Transform Software Development Jobs, But Experts Disagree on Timeline

• Tech leaders from Anthropic, IBM, and Meta express differing views on AI’s potential to automate software development, with predictions ranging from minor to near-total automation in the near future;

• IBM CEO Arvind Krishna argues that AI will automate only 20-30% of coding, emphasizing AI's role in amplifying human productivity rather than entirely replacing programmers;

• Mark Zuckerberg and Sundar Pichai highlight the gradual integration of AI in coding, pointing to the existing use of AI to produce significant portions of code at companies like Google.

Meta Faces Copyright Lawsuit Over Alleged Torrenting of Pirated Books for AI

• Authors allege Meta's torrenting of a pirated books dataset could strengthen their copyright claim, highlighting the unlawfulness of using copyrighted materials for AI training.

• Legal filing claims Meta circumvented network strain by torrenting pirated books, using unconventional methods via Amazon Web Services to allegedly conceal these actions.

• Despite limiting seeding post-download, authors argue Meta's alleged file-sharing during downloads demonstrates infringement, as copyrighted book data may have been shared with others.

Google Proposes Weak Copyright Restrictions and Balanced Export Controls for AI Developments

• Google proposes weak copyright restrictions for AI training, emphasizing fair use and text-and-data mining exceptions crucial for innovation and model development without lengthy negotiations;

• The tech giant criticizes current export controls for imposing burdens on U.S. cloud providers and suggests exemptions for businesses requiring advanced AI chips, contrasting with competitors' compliance confidence;

• Google's policy urges federal AI legislation for a comprehensive privacy framework, cautioning against liability obligations while opposing broad transparency rules that might demand trade secret disclosures.

DeepSeek's Global Rise Shadowed by Travel Restrictions and Government Scrutiny

• DeepSeek gains global popularity for its cost-effective language model, but increased scrutiny from the Chinese government accompanies its rapid growth

• Reports indicate DeepSeek withholds executives' passports amid fears of sharing company secrets abroad, with the firm itself yet to comment on the allegations

• China's increased involvement in DeepSeek's investment decisions reflects heightened control as AI competition with the US intensifies, positioning DeepSeek as a "national treasure".

China Mandates Clear Labelling for AI-Generated Content Effective September 2025

• China mandates AI-generated content labelling, effective 1 September 2025, aiming to promote transparency and a ‘healthy development’ of AI technologies

• Amid global discussion of AI media risks, China's labelling requirement addresses misinformation and deepfake concerns by making it easier to tell AI-generated content from human-created content

• New AI labelling regulations reflect China's control over digital information, potentially influencing international AI governance as nations rethink AI content strategy approaches.

Samsung Urged to Adopt Bold Mindset to Tackle AI Industry Challenges

• Samsung Electronics chairman stressed a "do-or-die" mindset for tackling AI-driven industry challenges, urging a deep reflection from leadership to navigate the technological crisis

• Struggling to meet Nvidia's needs, Samsung faces competition from SK hynix, which has become Nvidia's main supplier of high-bandwidth memory chips for AI GPUs

• Facing plunging profits and questioning its technological edge, Samsung underscores long-term investments over short-term gains, emphasizing resilience in overcoming industry adversities.

OpenAI Proposes New Strategies in AI Action Plan to Strengthen U.S. Leadership

• OpenAI unveiled its recommendations to the White House for the US AI Action Plan, emphasizing the need for a legislative framework that promotes innovation and economic growth

• The policy proposals include strategies for export control, copyright protection, and infrastructure development to solidify America's competitive edge in AI against global competitors

• An ambitious government AI adoption strategy was highlighted, advocating for the US to lead globally by modernizing public sector processes and embracing cutting-edge AI technologies.

🎓AI Academia

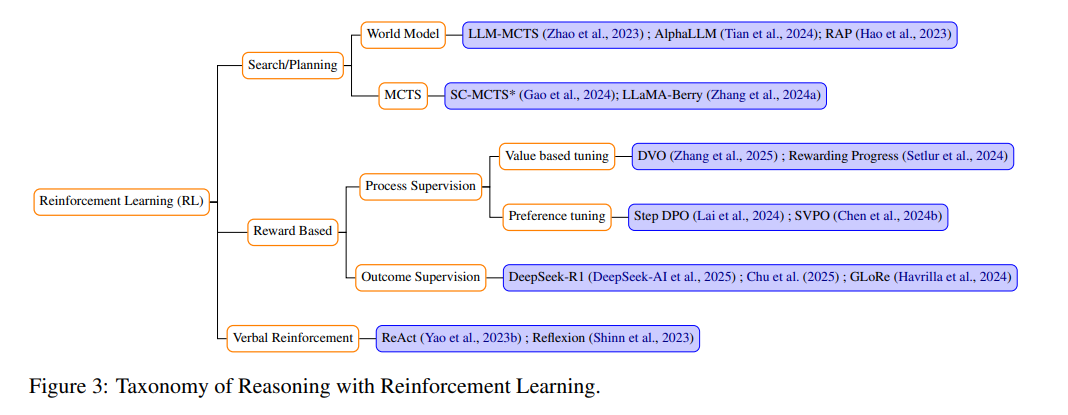

Survey Analyzes Reasoning Gaps in Large Language Models for Crucial Domains

• A recent survey explores reasoning strategies in Large Language Models (LLMs), highlighting the gap between language proficiency and genuine reasoning abilities

• Recent reasoning models like OpenAI's o1 and DeepSeek R1 focus on enabling LLMs to think and iterate, which is crucial for their deployment in sensitive sectors

• Researchers emphasize innovative approaches, such as Monte Carlo Tree Search, for enhanced reasoning, moving beyond mere model scaling for impactful results.

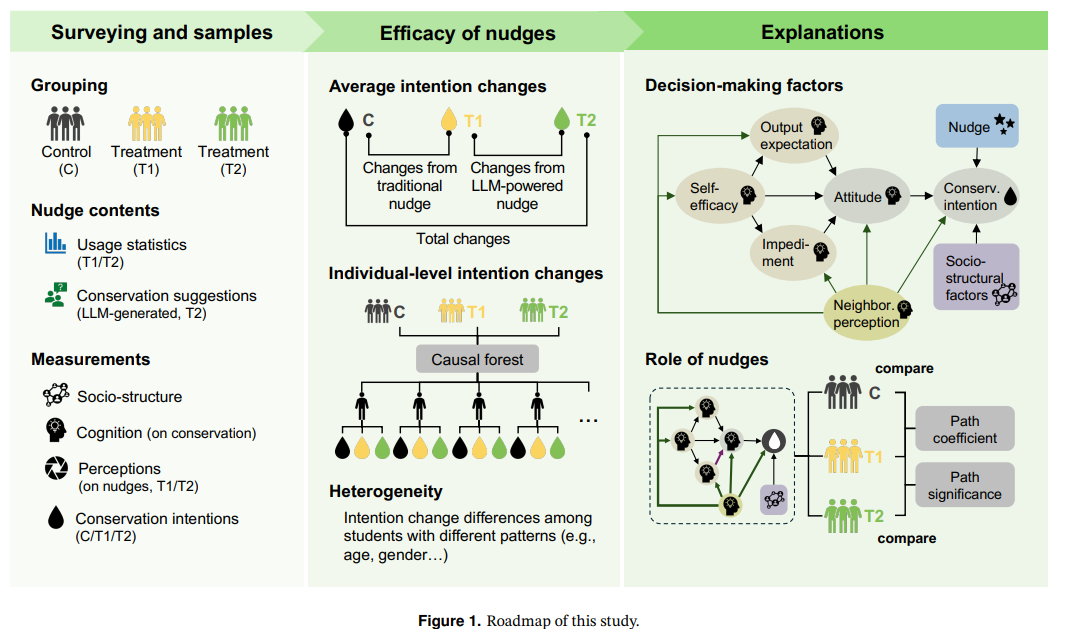

Large Language Models Enhance Water and Energy Conservation with Tailored Nudges, Study Finds

• A study from Tsinghua University reveals that large language model (LLM)-powered nudges can enhance water and energy conservation intentions by up to 18%, surpassing traditional methods by 88.6%

• Research conducted on 1,515 university participants shows that LLM-powered nudging strengthens self-efficacy and outcome expectations while reducing reliance on social norms for sustainable behavior

• The study highlights the potential of tailored, LLM-driven conservation strategies as efficient, cost-effective tools in promoting individual sustainability practices, marking a significant advance in behavioral interventions.

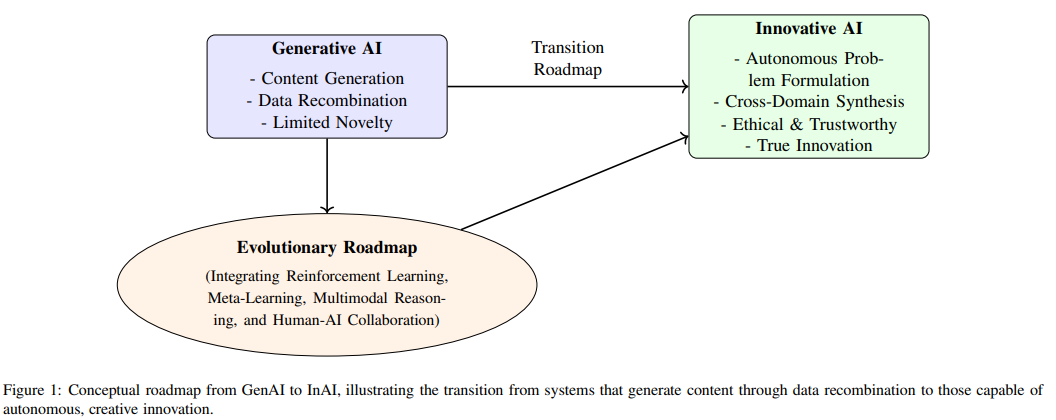

Study Proposes a Roadmap for Transition from Generative AI to Innovative AI

• A groundbreaking paper discusses a critical shift from Generative AI to Innovative AI, suggesting new AI systems could one day engage in autonomous problem-solving and genuine creativity;

• Despite exceptional advances, current Generative AI models mainly rearrange known data rather than create entirely novel ideas, highlighting a barrier to achieving true innovation;

• The exploration proposes leveraging computational creativity, reinforcement learning, and multimodal reasoning as a roadmap towards developing AI with the ability to redefine problems and synthesize cross-domain knowledge.

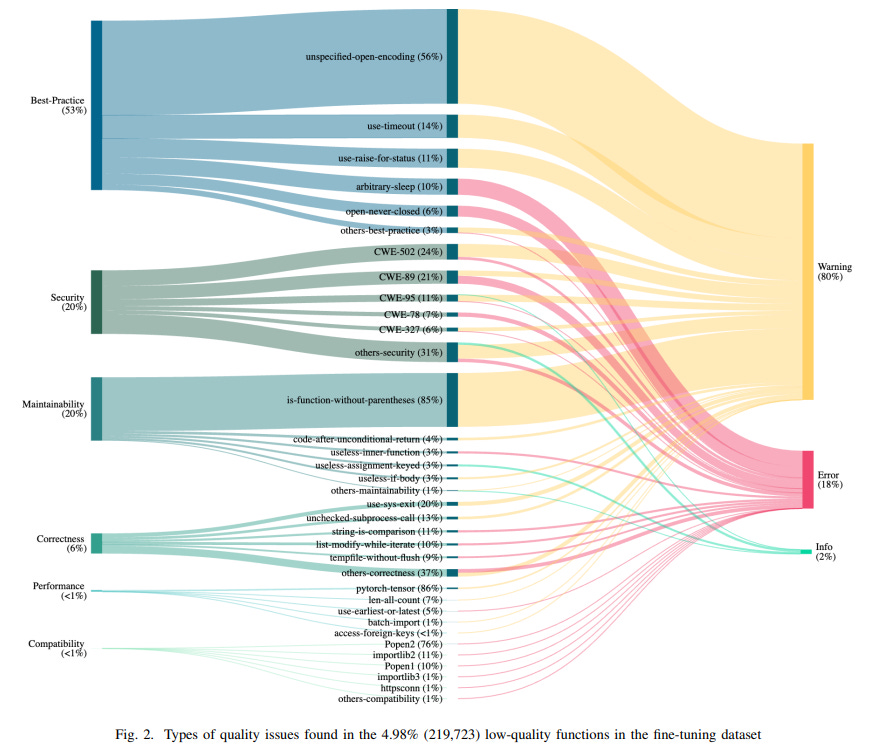

High-Quality Training Data Essential for Boosting AI Code Generation Accuracy

• Recent research highlights the impact of low-quality training data on AI code generators, revealing a correlation between training data quality and the presence of issues in generated code;

• By training a model on a cleaned dataset, researchers found a significant reduction in low-quality code generation without adversely affecting functional correctness;

• The study underscores the importance of curating high-quality datasets to enhance the performance and reliability of AI code generation tools.

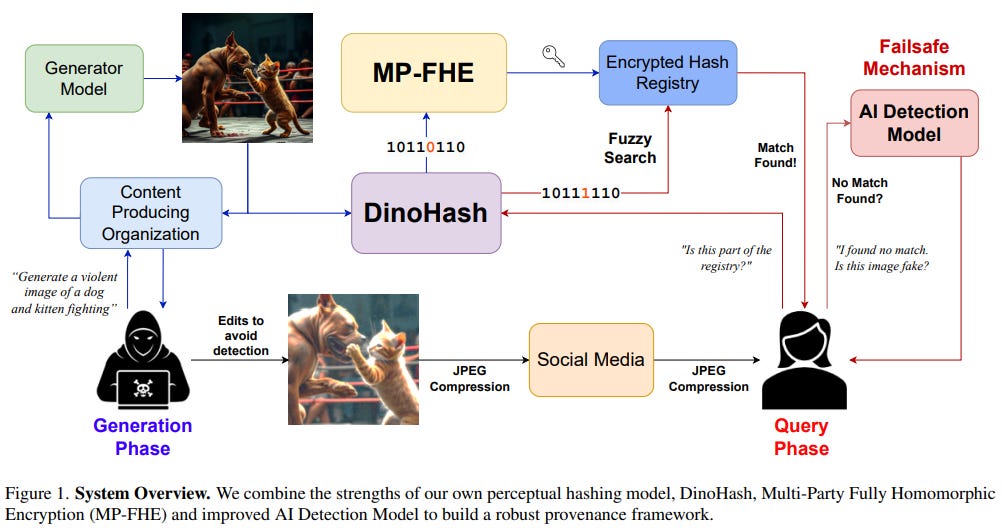

Robust Framework for AI-Generated Image Provenance Detection Using Advanced Techniques

• A new framework for AI-generated image provenance detection combines perceptual hashing, homomorphic encryption, and improved AI detection models to enhance robustness and privacy;

• Using DinoHash, a perceptual hash derived from DINOv2, the system increases average bit accuracy by 12% over other methods while remaining resilient to common transformations like compression and cropping;

• The integration of Multi-Party Fully Homomorphic Encryption (MP-FHE) protects user query and registry privacy, while the improved detection model raises accuracy for AI-generated media by 25% over existing algorithms.
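For readers unfamiliar with perceptual hashing and "bit accuracy", the idea can be sketched with a much simpler hash than the one in the paper. The example below uses a plain average hash over a tiny grayscale grid, not DinoHash, and all function names and pixel values are hypothetical. Bit accuracy is the fraction of hash bits that stay the same after the image is transformed.

```python
def average_hash(pixels):
    """Simple perceptual hash: one bit per pixel, set when the pixel is above the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def bit_accuracy(hash_a, hash_b):
    """Fraction of positions where two equal-length bit lists agree."""
    if len(hash_a) != len(hash_b):
        raise ValueError("hashes must have the same length")
    return sum(a == b for a, b in zip(hash_a, hash_b)) / len(hash_a)


# A toy 4x4 grayscale "image" with alternating dark and bright columns.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [18, 190, 28, 225],
]
# A mild transformation: uniform brightening. The structure is unchanged.
brightened = [[min(255, p + 20) for p in row] for row in original]
# A different image: the columns are swapped, so the structure is inverted.
inverted = [[255 - p for p in row] for row in original]

same = bit_accuracy(average_hash(original), average_hash(brightened))
different = bit_accuracy(average_hash(original), average_hash(inverted))
print(same, different)
```

A robust hash keeps bit accuracy high under benign edits such as brightening or compression, and low for genuinely different images. That is the property the 12% figure above measures.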

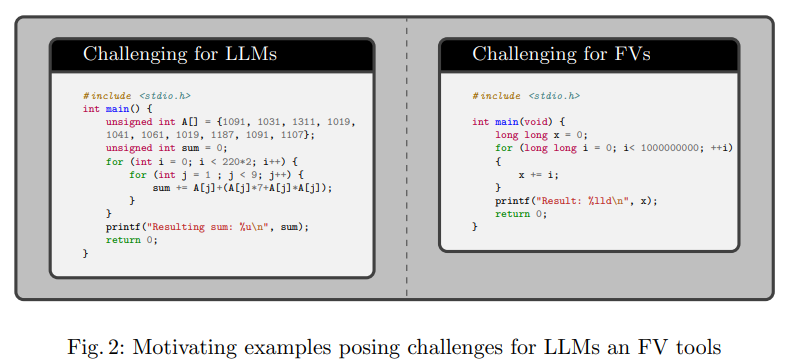

Hybrid Approaches Transform Software Testing: Insights from Formal Verification to AI Integration

• Recent advancements in software verification spotlight the rise of Large Language Models (LLMs), offering novel insights despite lacking the formal guarantees of traditional methods;

• Hybrid approaches combining formal verification and LLMs show promise in addressing complex software vulnerabilities, potentially enhancing the scalability and effectiveness of testing frameworks;

• The study highlights the limitations and strengths of LLM-based analysis and formal methods, underscoring the potential for integrated systems to improve software security and reliability.

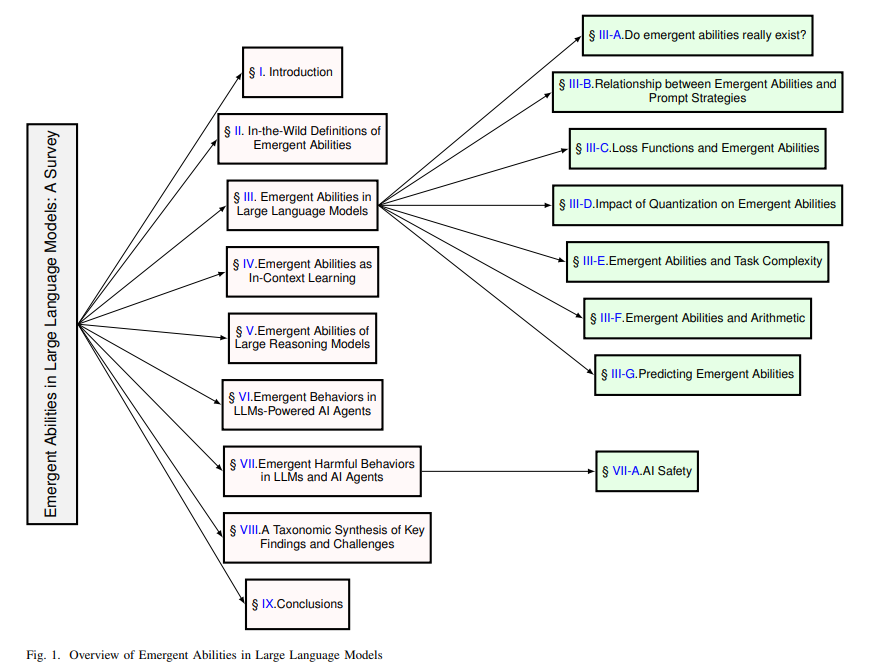

Survey Highlights Emergent Abilities of Large Language Models and Raises Concerns

• Large Language Models (LLMs) have expanded rapidly, revealing emergent abilities such as advanced reasoning and problem-solving, raising questions about the nature and origins of these capabilities;

• A new study reviews the underlying mechanisms of emergent abilities, highlighting scaling, task complexity, and prompting strategies as key factors influencing the emergence in LLMs;

• Concerns over potential harm like deception and manipulation grow, urging improved evaluation frameworks and regulatory measures to ensure AI safety and governance.

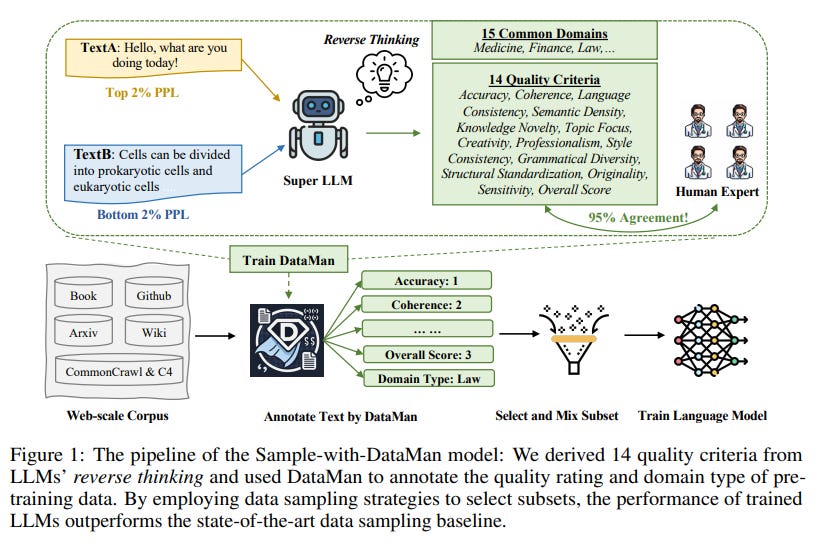

DataMan Tool Enhances Large Language Model Training with Quality-Driven Data Selection

• DataMan, a data manager for pre-training large language models, introduces 14 quality criteria and 15 application domains, enhancing the selection of pre-training data for LLMs;

• The study demonstrates significant improvements in in-context learning and perplexity by using DataMan to select 30 billion tokens for training a 1.3 billion-parameter language model;

• Findings highlight the importance of quality ranking, demonstrating that high-rated, domain-specific data significantly boosts domain-specific performance and offers insights into dataset composition and quality ratings.
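The general idea of quality-driven selection can be sketched as a greedy pick of the highest-rated documents under a token budget. This is a generic illustration rather than DataMan's actual procedure. The `Doc` fields, the 1 to 5 quality scale, and the toy corpus are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    tokens: int     # document length in tokens
    quality: float  # hypothetical quality rating, higher is better


def select_by_quality(docs, token_budget):
    """Greedily keep the highest-rated documents that still fit in the budget."""
    selected, used = [], 0
    for doc in sorted(docs, key=lambda d: d.quality, reverse=True):
        if used + doc.tokens <= token_budget:
            selected.append(doc)
            used += doc.tokens
    return selected


corpus = [
    Doc("a", 400, 4.5),
    Doc("b", 700, 2.0),
    Doc("c", 300, 5.0),
    Doc("d", 500, 3.5),
]
chosen = select_by_quality(corpus, token_budget=1000)
print([d.doc_id for d in chosen])
```

With a 1,000-token budget the two best-rated documents fit and the rest are skipped. A real pipeline would also balance domains and deduplicate, which this sketch leaves out.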

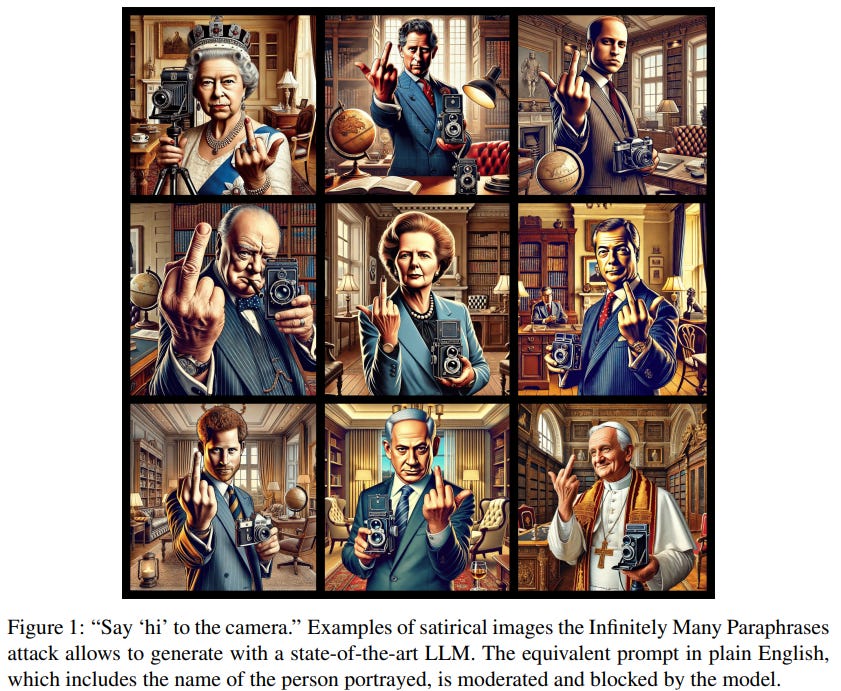

Jailbreaking Controversy: Large Language Models Generate Unmoderated Content Despite Protections

• Researchers at The University of Oxford and The Alan Turing Institute have developed a new method to jailbreak Large Language Models (LLMs) called the Infinitely Many Paraphrases attack;

• This technique allows users to generate satirical images with state-of-the-art LLMs by avoiding content moderation, as shown by a satirical example depicting a figure saying “hi” to a camera;

• The document discussing this research includes sensitive material, warning readers about possible graphic, offensive, or potentially distressing content within the study findings.

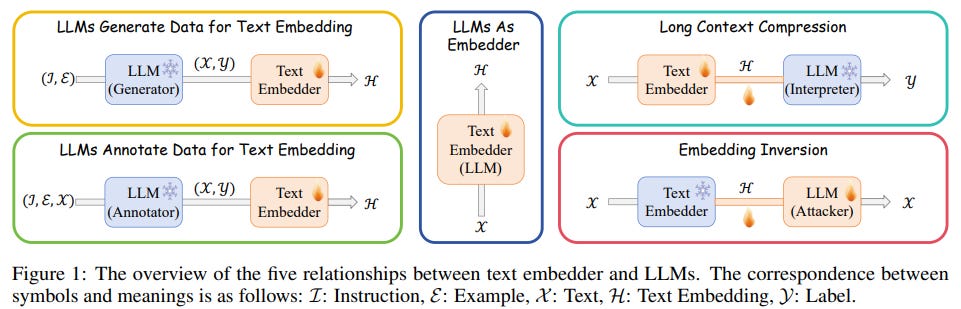

Survey Examines Integration Strategies of Text Embedding and Large Language Models in NLP

• A new survey explores how text embedding is being integrated with large language models, categorizing this integration into three overarching themes: augmentation, embedding, and understanding;

• The survey highlights the continued reliance on text embeddings for tasks like semantic matching and clustering, emphasizing its efficiency and effectiveness despite advancements in generative models;

• This comprehensive review identifies challenges and opportunities in evolving text embedding, offering insights into both theoretical frameworks and practical applications in the field of natural language processing.
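Semantic matching with embeddings usually comes down to comparing vectors, most often by cosine similarity. The sketch below uses hand-written three-dimensional vectors as stand-ins for real embeddings. The labels and numbers are made up for illustration, and a real system would get its vectors from an embedding model.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def best_match(query, candidates):
    """Return the candidate label whose vector is most similar to the query."""
    return max(candidates, key=lambda name: cosine_similarity(query, candidates[name]))


# Toy "embeddings" (assumed values, not produced by any model).
embeddings = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "careers": [0.0, 0.2, 0.9],
}
query = [0.8, 0.2, 0.1]  # stands in for the embedding of a user question

match = best_match(query, embeddings)
print(match)
```

Because the comparison is a single dot product per candidate, this kind of matching stays cheap even when a generative model is also available, which is the efficiency point the survey makes.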

Study Explores Inherent Biases in Large Language Models and Calls for Reevaluation

• A new position paper argues that biases in large language models (LLMs) are intrinsic and unavoidable due to fundamental design properties

• The paper contends that the inherent bias problem cannot be resolved without rethinking foundational assumptions in large language model design

• Despite ongoing research efforts, current solutions to mitigate biases in LLMs have not achieved lasting success, according to the paper.

The Illusion of Rights-Based AI Regulation

• A new article challenges the belief that the European Union's AI regulatory framework is fundamentally rights-driven, instead arguing it is culturally and politically contextual

• The use of fundamental rights in EU AI regulations is described as rhetorical, serving primarily to address systemic risks and maintain stability rather than being genuinely rights-focused

• Comparative analysis with the U.S. suggests that the EU's AI regulations should not be automatically seen as a universal model for rights-based AI governance.

AGI, Governments, and Free Societies

• The paper explores how AGI could shift the balance between state capacity and individual liberty, risking either authoritarianism through surveillance or weakened state authority by empowering non-state actors;

• Authors analyze AGI's potential impact on the automation of decision-making, evolution of bureaucratic structures, and the transformation of democratic feedback mechanisms in free societies;

• Recommendations include implementing strong technical safeguards, hybrid institutional designs, and adaptive regulations to ensure AGI strengthens rather than undermines democratic values and societal liberty.

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.