Are you saying 'please' and 'thanks' to ChatGPT? It's costing OpenAI millions in electricity, says Sam Altman!

++ Robots race humans in Beijing, and Abu Dhabi plans an AI-run government. ByteDance challenges GPT-4o in image generation, Anthropic releases Claude Code, and more.

Today's highlights:

You are reading the 88th edition of The Responsible AI Digest by SoRAI (School of Responsible AI) (formerly ABCP). Subscribe today for regular updates!

Are you ready to join the Responsible AI journey, but need a clear roadmap to a rewarding career? Do you want structured, in-depth "AI Literacy" training that builds your skills, boosts your credentials, and reveals any knowledge gaps? Are you aiming to secure your dream role in AI Governance and stand out in the market, or become a trusted AI Governance leader? If you answered yes to any of these questions, let’s connect before it’s too late! Book a chat here.

🚀 AI Breakthroughs

Polite Interaction with ChatGPT Costs OpenAI Millions and Raises Environmental Concerns

• According to Sam Altman, polite phrases such as "please" and "thank you" in ChatGPT prompts add tens of millions of dollars to OpenAI's electricity bill

• As of March 2025, ChatGPT ranks as the eighth most visited website globally, drawing around 5.56 billion visits and intensifying the environmental footprint of its operations

• ChatGPT's energy consumption is substantial: its daily use is comparable to the daily electricity consumption of about 17,000 U.S. homes, highlighting the environmental challenges posed by AI's growing popularity and resource demands.

Humanoid Robots Compete with Humans in Beijing's First Robot Half-Marathon

• In the world's first humanoid robot half-marathon, held in Beijing, Chinese humanoid robots raced alongside humans, with robots ranging from 1.2 m to 1.8 m tall

• Some robots needed physical support during the race, while others crashed, highlighting both technological progress and current limitations in humanoid robotics

• Tiangong Ultra, the winning robot, finished in 2 hours and 40 minutes, far behind the human winners, a sign of how early the technology still is relative to human capability.

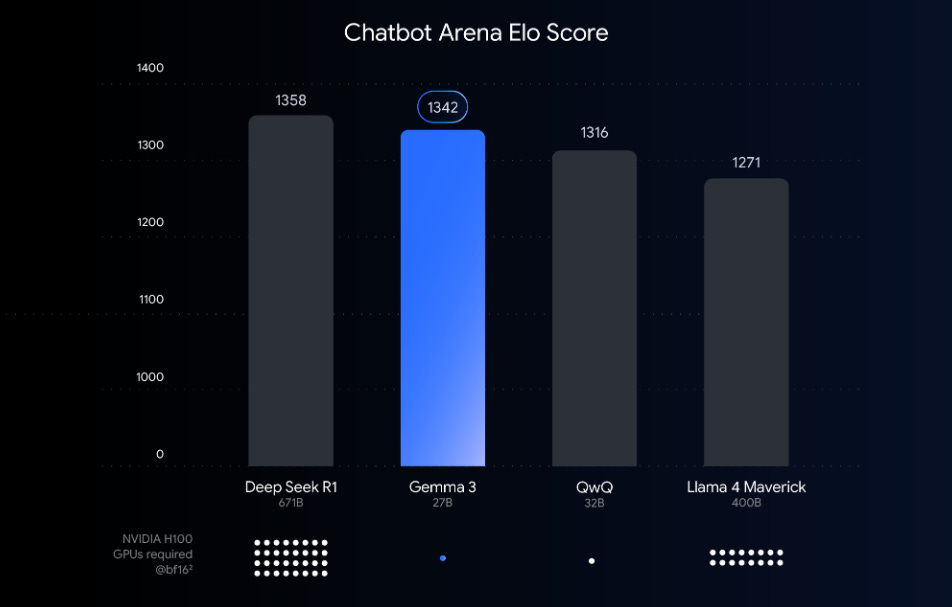

Gemma 3 Models Optimized with QAT Now Accessible on Consumer-Grade GPUs

• Gemma 3 models are now available with Quantization-Aware Training (QAT), which cuts memory demands while preserving quality and lets the 27B variant run on consumer GPUs such as the NVIDIA RTX 3090;

• Quantizing Gemma 3 to int4 sharply reduces VRAM needs: the 27B model drops from 54 GB in BF16 to 14.1 GB;

• Because quantization is simulated during training, Gemma 3 keeps its accuracy at lower bit precision, minimizing the performance drop usually associated with aggressive quantization.

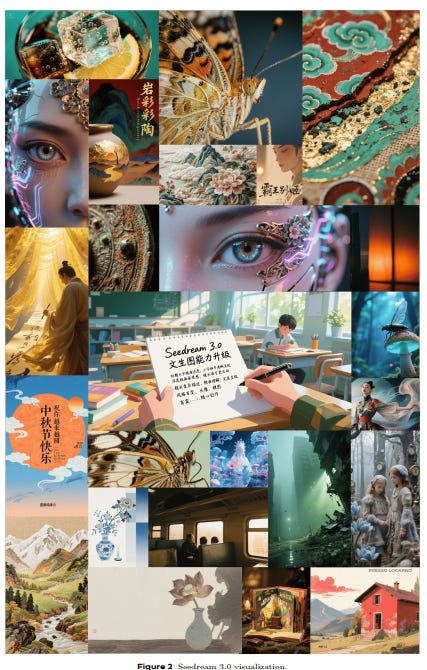
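
The memory figures above follow from bytes-per-weight arithmetic, and the core QAT idea is a quantize-then-dequantize round trip in the forward pass. The Python sketch below illustrates both under stated assumptions: it counts weights only (no activations or KV cache), assumes exactly 27 billion parameters, and uses one symmetric int4 scale per tensor, which is simpler than the schemes production QAT typically uses. The function names are hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Weights-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fake_quantize_int4(values: list) -> list:
    """Quantize to signed 4-bit integers and immediately dequantize.

    QAT inserts a round trip like this into the forward pass so the model
    learns to tolerate 4-bit rounding error. This sketch uses a single
    symmetric scale for the whole tensor (an assumption for illustration).
    """
    max_abs = max((abs(v) for v in values), default=0.0)
    if max_abs == 0:
        return list(values)
    scale = max_abs / 7  # map the largest magnitude onto the int4 value 7
    out = []
    for v in values:
        q = max(-8, min(7, round(v / scale)))  # clamp to the int4 range [-8, 7]
        out.append(q * scale)  # dequantize back to a float
    return out


params_27b = 27e9  # assumed exact parameter count
print(weight_memory_gb(params_27b, 16))  # BF16: 2 bytes per weight
print(weight_memory_gb(params_27b, 4))   # int4: half a byte per weight
print(fake_quantize_int4([0.7, -0.35, 0.05, 0.0]))
```

At 2 bytes per weight, 27 billion parameters come to 54 GB; at half a byte, 13.5 GB. The remaining gap to the reported 14.1 GB presumably comes from quantization metadata such as scale factors and any tensors kept at higher precision, which this estimate does not model. In real QAT the rounding step is paired with a straight-through estimator so gradients can flow through it; that part is omitted here.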

ByteDance's Seedream 3.0 Challenges GPT-4o in High-Quality Image Generation

• ByteDance reports that Seedream 3.0 outperforms GPT-4o in image creation, citing techniques such as mixed-resolution training and resolution-aware timestep sampling that improve scalability and vision-language alignment

• Seedream 3.0, a bilingual model, excels in complex Chinese text rendering, outperforming alternatives like GPT-4o and Imagen 3, and addresses limitations of Seedream 2.0

• Post-training of Seedream 3.0 includes VLM-based reward models and diverse aesthetic captions for improved image quality, countering GPT-4o's yellowish hue and noise issues.

Wikimedia Enterprise Releases Beta Dataset on Kaggle for Machine Learning Use

• Wikimedia Enterprise introduced a beta dataset on Kaggle with English and French Wikipedia content, tailored for machine learning workflows

• Built on the Snapshot API's Structured Contents beta, the dataset provides clean JSON that plugs directly into NLP and machine learning pipelines

• Free to use under Creative Commons licenses, the dataset includes abstracts, infobox data, and segmented sections, fostering innovation within Kaggle’s machine learning community.

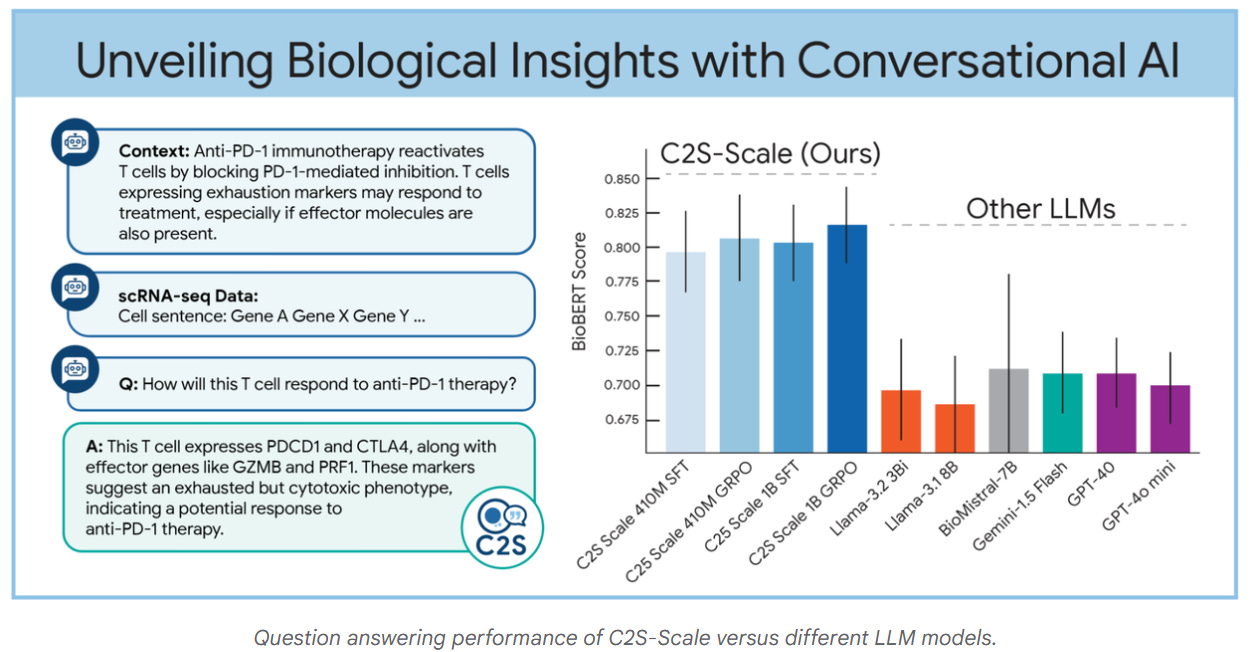
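
Before wiring the dataset into an NLP pipeline, it helps to check which fields the records actually contain. The sketch below assumes the files are JSON Lines (one JSON object per line) and deliberately assumes no field names, since the beta schema should be confirmed against Wikimedia Enterprise's own documentation; the function name is hypothetical.

```python
import json
from collections import Counter
from typing import Iterable


def count_top_level_keys(lines: Iterable[str]) -> Counter:
    """Count how many JSON Lines records contain each top-level key."""
    counts: Counter = Counter()
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        counts.update(record.keys())  # one count per key per record
    return counts
```

Passing a file opened with `open(path, encoding="utf-8")` returns each top-level key with the number of records that contain it, and `.most_common()` sorts them by frequency, which quickly shows whether fields such as abstracts or infobox data are present in every record.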

Google Research Develops Language Model to Advance Single-Cell Biological Analysis Capabilities

• Google Research introduces C2S-Scale, a family of open-source large language models, to transform single-cell RNA sequencing data into interpretable text for advanced biological analysis

• Each cell's gene expression profile is represented as a "cell sentence", a list of gene names ordered by expression level, so researchers can query and analyze cells in natural language, making single-cell data more accessible and interpretable

• C2S-Scale models range from 410 million to 27 billion parameters, letting researchers trade efficiency against capability when fine-tuning and deploying them for biological tasks.
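
The "cell sentence" idea can be illustrated with a minimal sketch. It assumes the conversion used in the original Cell2Sentence work: rank a cell's expressed genes from highest to lowest expression and emit the gene names in that order. The function name, the optional `top_k` cutoff, and the toy expression values are hypothetical, not C2S-Scale's actual preprocessing code.

```python
from typing import Dict, Optional


def cell_sentence(expression: Dict[str, float], top_k: Optional[int] = None) -> str:
    """Turn one cell's gene-expression profile into a space-separated 'sentence'.

    Genes with zero expression are dropped; the rest are sorted by expression
    (highest first, ties broken alphabetically for determinism) and only the
    gene names are kept.
    """
    expressed = [(gene, value) for gene, value in expression.items() if value > 0]
    expressed.sort(key=lambda item: (-item[1], item[0]))
    if top_k is not None:
        expressed = expressed[:top_k]  # keep only the most highly expressed genes
    return " ".join(gene for gene, _ in expressed)


# Toy profile with hypothetical values, not real measurements
profile = {"CD3E": 5.0, "MALAT1": 12.0, "ACTB": 9.5, "HBB": 0.0}
print(cell_sentence(profile))           # MALAT1 ACTB CD3E
print(cell_sentence(profile, top_k=2))  # MALAT1 ACTB
```

Because the output is plain text, a language model can read it, and generate it, the same way it handles any other sentence.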

Sam Altman Discusses AI Agents and Superintelligence Live at TED2025 Event

• Sam Altman, CEO of OpenAI, highlighted AI's rapid progress and potential to surpass human intelligence during a live discussion at TED2025 with TED head Chris Anderson

• Altman emphasized the transformative role of AI models like ChatGPT, suggesting they could become integral extensions of human capabilities, enhancing daily life significantly

• Key concerns around AI safety, power dynamics, and moral authority were discussed, with Altman envisioning a future where AI plays a pivotal role in shaping global developments.

⚖️ AI Ethics

Anthropic Releases Claude Code: A Flexible Tool for Agentic Coding Workflows

• Anthropic releases Claude Code, a command-line tool for agentic coding that lets engineers integrate Claude into diverse coding environments and workflows.

• Claude Code is designed to be customizable, letting engineers craft personalized workflows while retaining its security and safety features.

• Claude Code can connect to external tools through the Model Context Protocol (MCP) and REST APIs, helping users automate tasks such as debugging, test-driven development, and codebase exploration.
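
MCP is built on JSON-RPC 2.0: a client such as Claude Code discovers a server's tools with a `tools/list` request and invokes one with `tools/call`, passing the tool name and its arguments. The sketch below only builds such request messages; the tool name `run_tests` and its arguments are hypothetical, and transport (stdio or HTTP), the initialization handshake, and error handling are left out.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_request_ids = count(1)  # request ids must not be reused within a session


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request message."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover available tools, then call one (tool name and arguments are hypothetical).
print(jsonrpc_request("tools/list"))
print(jsonrpc_request("tools/call", {"name": "run_tests", "arguments": {"path": "tests/"}}))
```

Keeping the protocol this thin is what lets one client talk to many tool servers: each server only has to describe its tools and answer these requests.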

AI System Enhances Traffic Violation Detection in Mira Bhayander Vasai Virar Jurisdiction

• The Mira Bhayander Vasai Virar Police have launched an AI-driven system to enhance traffic monitoring in Thane and Palghar, aimed at improving road safety and enforcement efficiency

• The platform automatically detects violations such as riding without a helmet and triple-seat riding (three people on one two-wheeler), reducing manual intervention and making enforcement faster and more precise

• Separately, Malad Police in Mumbai used AI to enhance CCTV footage, solving a Rs 36 lakh theft case and recovering all of the stolen valuables within 12 hours.

Thailand Debuts AI-Powered Police Robot to Boost Safety at Songkran Festival

• Thailand's AI Police Cyborg 1.0 debuted during the Songkran festival, aiming to enhance public safety with advanced surveillance and threat detection capabilities.

• Equipped with 360-degree cameras and facial recognition, the robot offers real-time analysis and rapid response coordination through a centralized command center.

• Similar humanoid police robots in China reflect a global trend toward robotic law enforcement, fueling ethical debate over privacy and accountability in AI-driven public safety.

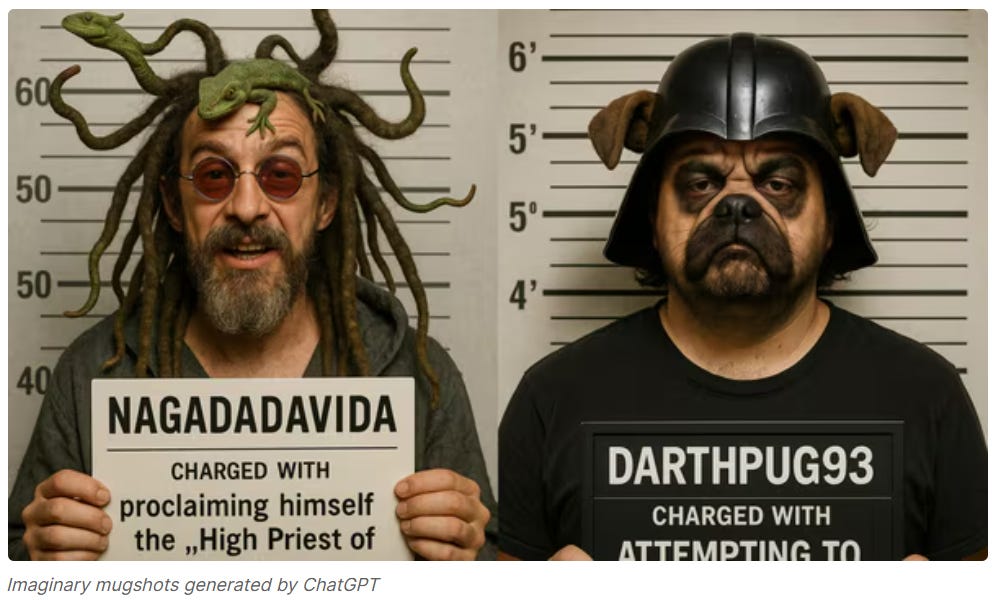

Social Media Buzz: Users Create Absurd Mugshots with ChatGPT’s Image Generator

• ChatGPT's native image generation has become a standout feature, sparking viral trends such as turning photos into Studio Ghibli-style art and reimagining people as action figures

• Reddit users are pushing boundaries by asking ChatGPT to generate humorous mugshots, complete with whimsical arrest reasons based on their usernames

• With GPT-4o, ChatGPT generates images natively instead of relying on a separate model such as DALL-E, making its images more personalized and precise.

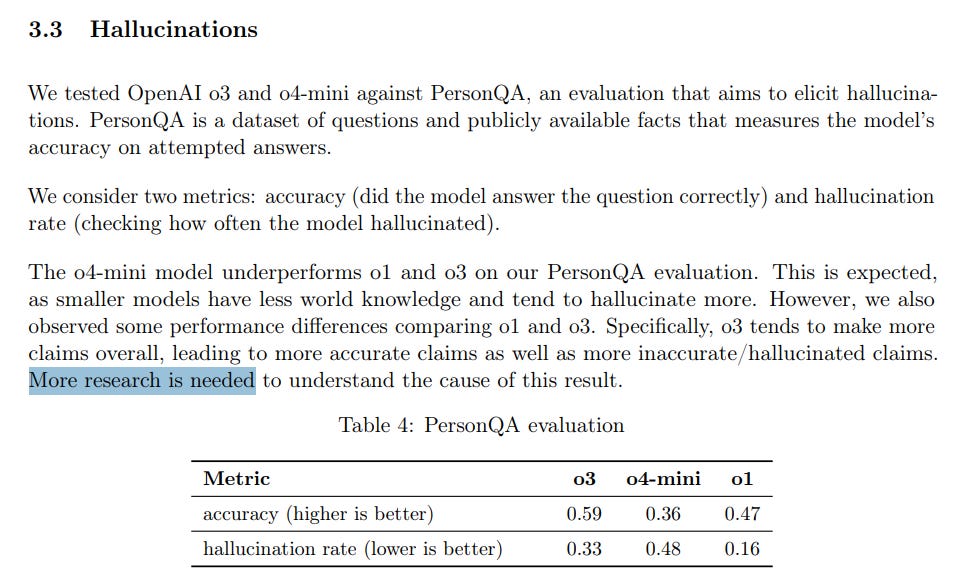

OpenAI's o3 and o4-mini Models Hallucinate More Than Previous AI Generations

• OpenAI's o3 and o4-mini models, although state-of-the-art, exhibit higher hallucination rates than their predecessors, posing challenges for accuracy-critical applications like legal documentation

• Internal tests show o3 hallucinating 33% of the time on PersonQA, significantly higher than previous models, with o4-mini doing even worse at 48%

• Experts suggest that the reinforcement learning used for o-series models may amplify hallucinations, and that web search integration could help improve accuracy.

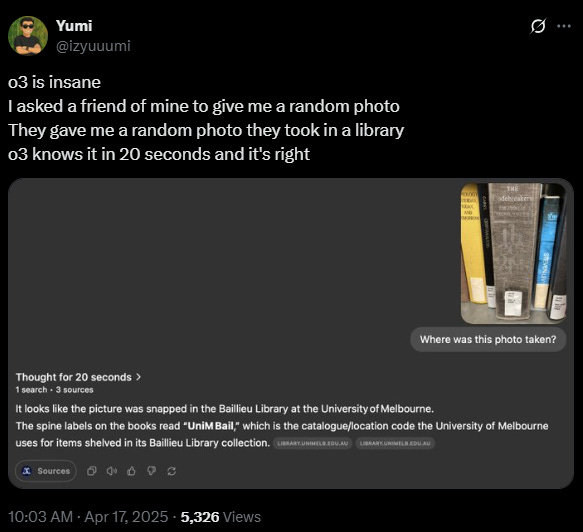

Users Leverage OpenAI's Advanced Models to Reveal Locations from Uploaded Images

• A trend is emerging where users utilize ChatGPT's o3 model to determine locations in photos, raising privacy concerns about revealing personal information through image analysis;

• OpenAI's latest models, o3 and o4-mini, can interpret images by cropping, rotating, and zooming, allowing them to deduce landmarks and cities from photos without relying on EXIF data;

• OpenAI states that safeguards are in place to prevent abuse, but o3's ability to identify locations from subtle clues exposes potential privacy risks without clear prevention measures.

Artists Concerned as AI-Generated Art Disrupts Original Anime-Inspired Creations

• Artists like Joy Cardaño are witnessing a decline in commissions due to the growing preference for AI-generated art, raising concerns over the impact on their livelihood and creativity;

• Many artists argue that reliance on AI-generated images undermines the years of training and experience required to develop unique artistic styles and secure fair commission work;

• OpenAI and other tech firms are exploring measures to prevent AI from mimicking living artists' styles, striving to support rather than replace human creativity in the art world.

Google Faces Transformative Hearing: DOJ Seeks Chrome Divestiture to Break Monopoly

• A remedies hearing in Washington, D.C., is weighing how to address Google's monopolistic practices, with divestiture of the Chrome browser as a possible outcome

• The U.S. Department of Justice seeks to force Google to divest Chrome to promote competition, a move that could reshape Alphabet, a company valued at about $1.8 trillion

• Google's counsel argues that the DOJ's proposal is flawed and overly punitive, stressing how complex it would be to separate Chrome from Chromium, the open-source project it is built on.

Abu Dhabi to Launch World's First AI-Powered Government by 2027 with AED 13 Billion Investment

• Abu Dhabi is set to become the world’s first fully AI-powered government by 2027, backed by a massive AED 13 billion investment aimed at revolutionizing governance through technology

• The comprehensive digital strategy will integrate AI, cloud computing, and automation across government operations, streamlining services and boosting efficiency while reducing administrative costs

• A unified digital ERP (enterprise resource planning) platform will integrate more than 200 AI solutions, and the strategy is expected to position Abu Dhabi as a leader in digital governance while boosting its GDP and job market.

🎓AI Academia

Seven Strategic Lessons for AI Integration from Leading Enterprise Innovators

• Several frontier companies are showcasing the transformative impact of AI, focusing on enhancing workforce performance, automating routine operations, and powering products for improved customer experiences;

• Successful enterprise AI adoption requires an experimental mindset that treats AI as a new paradigm, distinct from deploying traditional software or cloud apps, in order to realize value faster;

• Iterative deployment methods allow companies to quickly learn from customer use cases, enhancing AI models and products with regular updates, leading to earlier access to AI advancements for users.

EU AI Act's General-Purpose AI Code of Practice Examined Against Industry Standards

• A new report analyzes how well the EU AI Act's General-Purpose AI (GPAI) Code of Practice aligns with safety measures already adopted by leading AI companies such as OpenAI, Google DeepMind, and Microsoft

• The study highlights how companies employ Frontier Safety Frameworks, model cards, and vulnerability testing to align with the GPAI Code's safety requirements

• Evidence from industry documents suggests varying degrees of alignment with the GPAI Code, which is likely to shape upcoming regulatory dialogue as EU AI governance evolves.

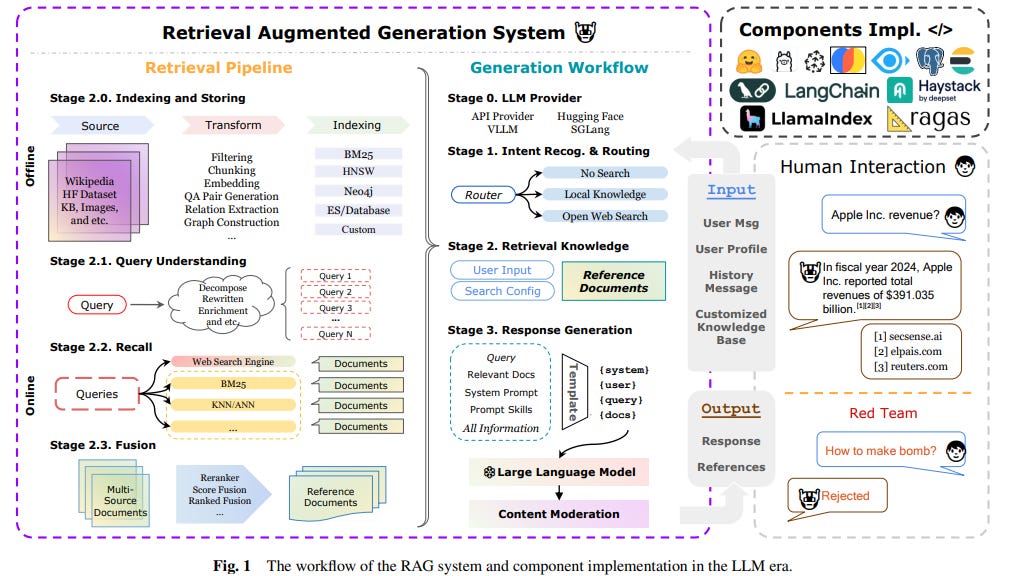

Comprehensive Survey on Retrieval-Augmented Generation Evaluation in Large Language Models Era

• A new survey reviews how Retrieval-Augmented Generation (RAG) systems, which combine Large Language Models (LLMs) with external information retrieval, are evaluated, highlighting recent advances in the field

• The survey addresses challenges in evaluating RAG systems, focusing on performance metrics, factual accuracy, and computational efficiency in integrating dynamic knowledge sources

• It compiles and categorizes RAG-specific datasets and evaluation frameworks, offering a crucial resource to advance the development and evaluation of RAG systems in the evolving LLM era.

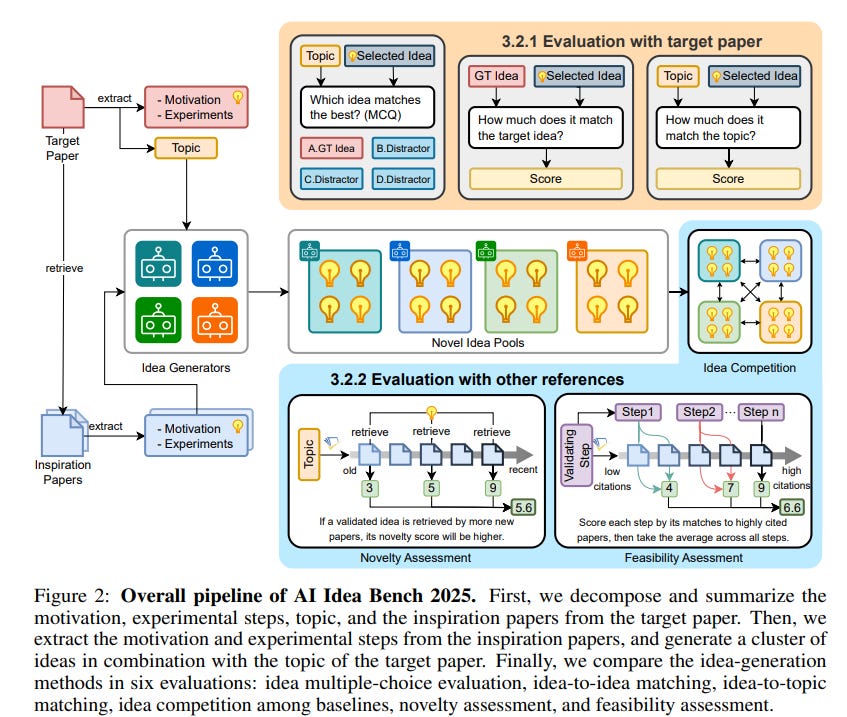
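
Two retrieval-side metrics often used when evaluating RAG systems are recall@k (the share of relevant documents that appear in the top k retrieved) and mean reciprocal rank (how high the first relevant document ranks, averaged over queries). The sketch below is a generic illustration, not a metric set prescribed by the survey, and the document IDs are hypothetical; generation-side qualities such as factual accuracy need separate evaluation.

```python
from typing import Sequence, Set, Tuple


def recall_at_k(retrieved: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of relevant document IDs found among the top-k retrieved IDs."""
    if not relevant:
        return 0.0
    return len(set(retrieved[:k]) & relevant) / len(relevant)


def mean_reciprocal_rank(runs: Sequence[Tuple[Sequence[str], Set[str]]]) -> float:
    """Average of 1/rank of the first relevant result per query (0 if none)."""
    if not runs:
        return 0.0
    total = 0.0
    for retrieved, relevant in runs:
        for rank, doc_id in enumerate(retrieved, start=1):
            if doc_id in relevant:
                total += 1.0 / rank
                break  # only the first relevant hit counts
    return total / len(runs)


# Two toy queries with hypothetical document IDs: (retrieved ranking, relevant set)
q1 = (["d3", "d1", "d7"], {"d1", "d9"})
q2 = (["d2", "d5", "d4"], {"d2"})
print(recall_at_k(*q1, k=3))           # 0.5
print(mean_reciprocal_rank([q1, q2]))  # 0.75
```

Recall@k rewards finding all the evidence somewhere in the context window, while MRR rewards ranking the first useful passage near the top; RAG evaluations often report both because a generator may only attend well to the first few passages.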

AI Idea Bench 2025 Evaluates Large Language Models in Research Idea Generation

• AI Idea Bench 2025 introduces a framework to assess AI-generated research ideas, focusing on the quality of idea generation and its alignment with the original content

• The framework includes a dataset of 3,495 AI papers paired with the works that inspired them, providing a comprehensive evaluation methodology for the scientific community

• This benchmark aims to overcome challenges like data leakage and limited feasibility analyses, enhancing the potential for automating scientific discovery.

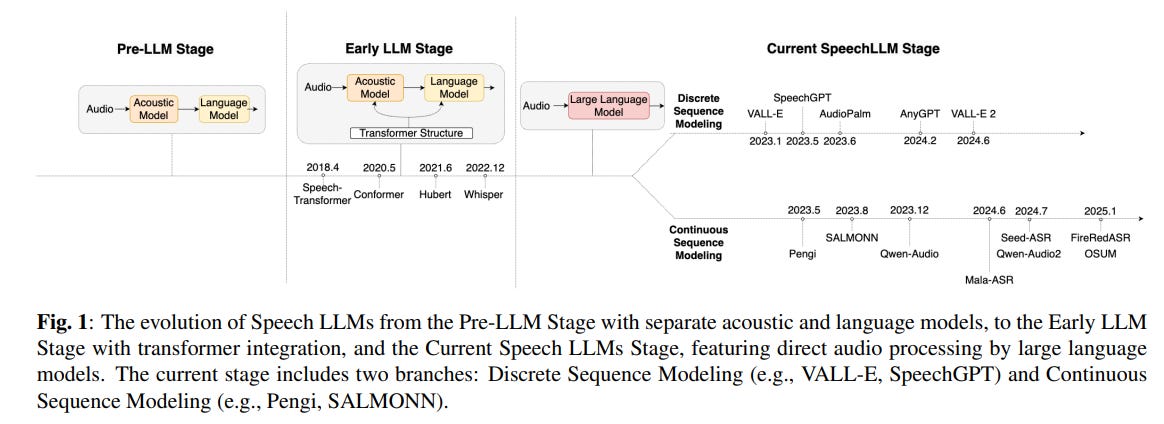

Comprehensive Study Highlights Advancements in Developing Large Language Speech Models

• A new survey analyzes speech Large Language Models (Speech LLMs), covering their development and architectural paradigms and comparing them with traditional models on speech understanding tasks

• The survey provides the first in-depth comparative analysis of training methods for aligning speech and text modalities within Speech LLM architectures

• Key challenges such as LLM dormancy and solutions to enhance training strategies for Speech LLMs are discussed, offering valuable insights for future research in speech understanding.

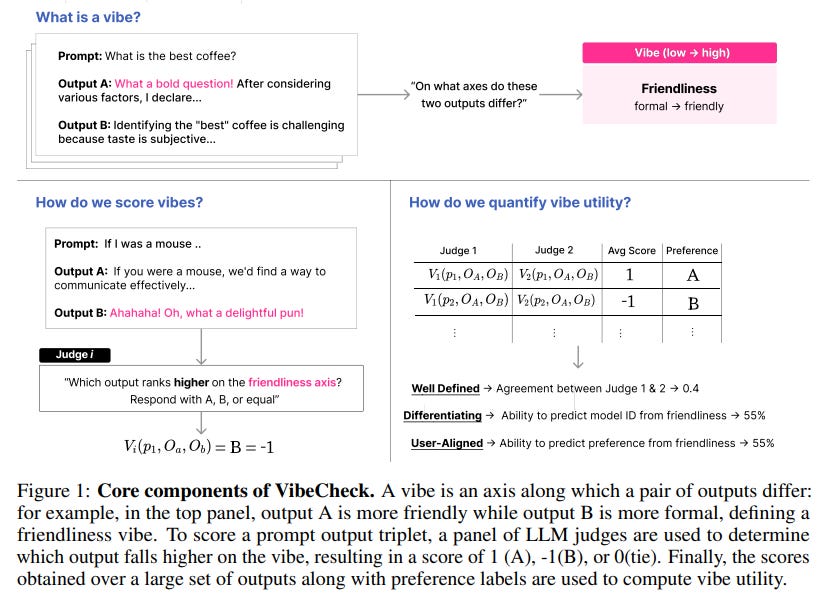

VibeCheck System Quantifies Unique Traits in Large Language Models at ICLR 2025

• VibeCheck, a novel system from UC Berkeley researchers, systematically identifies distinct "vibes" in large language models, capturing nuanced characteristics like tone, style, and formatting beyond traditional correctness metrics;

• Through VibeCheck, Llama-3 is found to have a friendly and amusing vibe, whereas GPT-4 leans toward a formal tone; these differences predict model identity with 80% accuracy;

• The VibeCheck tool highlights significant differences in LLM behavior across various tasks, offering insights into model preferences, such as Llama's tendency to overexplain math problems compared to GPT-4.

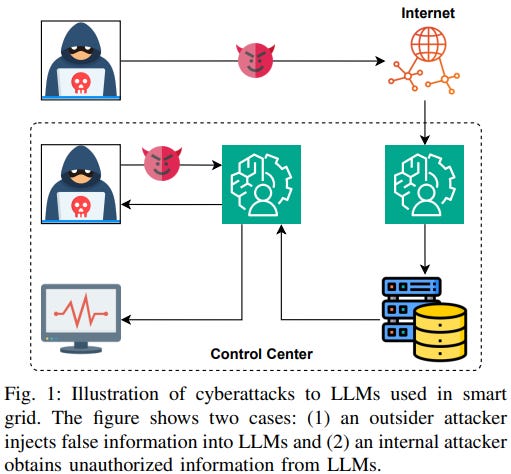

Assessing Large Language Models' Vulnerabilities in Smart Grids: Threat Modeling and Real-World Validation

• Researchers systematically evaluated risks of deploying large language models (LLMs) in smart grids, identifying major attack types like bad data injection and domain knowledge extraction;

• The study highlights two primary attack vectors: external adversaries injecting malicious data and both internal and external actors extracting unauthorized information from smart grid LLMs;

• Validation through simulations showed attackers could exploit popular LLMs like OpenAI GPT-4 and Google Gemini 2.0 to compromise smart grid security.

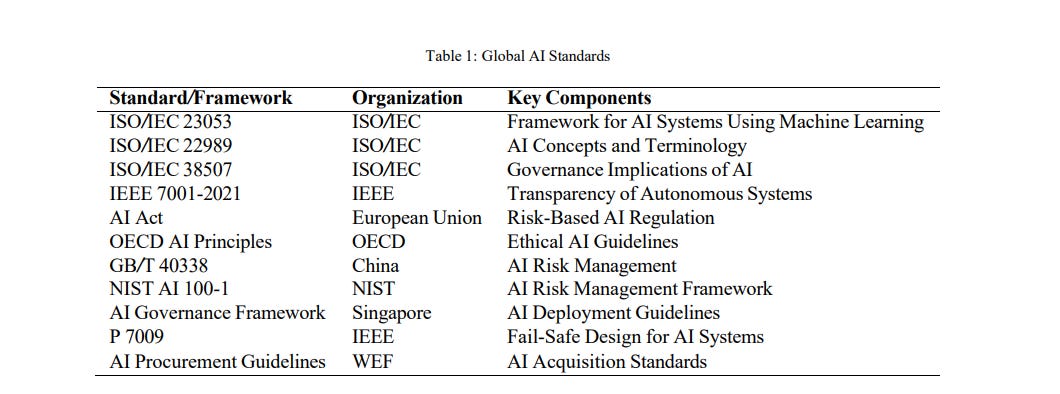

Comprehensive Analysis of Responsible AI: Frameworks, Standards, Applications, and Best Practices

• A new survey extensively explores the frameworks, standards, applications, and best practices of Responsible AI (RAI), highlighting current challenges and initiatives for ethical AI use;

• The survey emphasizes how social pressure and unethical AI practices drive the demand for standardized Responsible AI frameworks, beyond mere technical implementation;

• Transparency and ethical considerations are underscored as key principles, stressing the importance of maintaining accountability in AI decision-making processes to benefit society constructively.

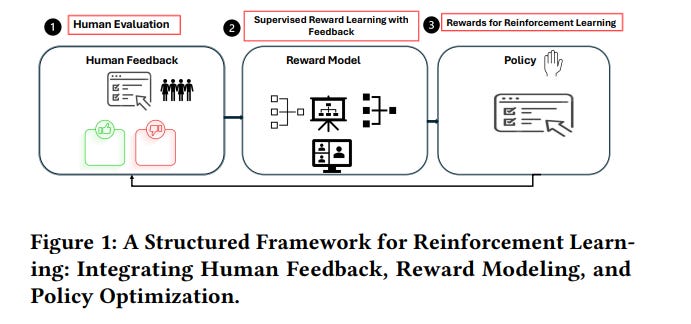

Study Highlights Governance Issues in Reinforcement Learning from Human Feedback Systems

• A recent study highlights governance challenges in Reinforcement Learning from Human Feedback (RLHF), particularly focusing on evaluator bias, cognitive inconsistency, and feedback reliability issues.

• Research demonstrates that evaluators with higher rationality scores provide more consistent and expert-aligned feedback, with low-rationality participants introducing variability (p < 0.01).

• Recommendations include evaluator pre-screening, systematic feedback audits, and reliability-weighted reinforcement aggregation to enhance fairness, transparency, and robustness in AI alignment pipelines.
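
One way to read "reliability-weighted aggregation" is as a weighted vote: each evaluator's pairwise preference counts in proportion to a reliability weight, such as a normalized rationality score. The sketch below assumes that interpretation; the study's exact weighting scheme may differ, and the evaluator IDs and weights are hypothetical.

```python
from typing import Dict, List, Tuple

# Each judgment: (evaluator_id, preferred_response), where preferred_response is "A" or "B".
Judgment = Tuple[str, str]


def weighted_preference(judgments: List[Judgment], reliability: Dict[str, float]) -> float:
    """Return the reliability-weighted share of votes preferring response "A".

    Evaluators missing from `reliability` get weight 0, i.e. they are ignored.
    """
    weight_a = 0.0
    weight_total = 0.0
    for evaluator, choice in judgments:
        w = reliability.get(evaluator, 0.0)
        weight_total += w
        if choice == "A":
            weight_a += w
    if weight_total == 0:
        return 0.5  # no reliable signal: treat as a tie
    return weight_a / weight_total


judgments = [("e1", "A"), ("e2", "A"), ("e3", "B")]
reliability = {"e1": 0.9, "e2": 0.8, "e3": 0.2}  # hypothetical reliability scores
print(weighted_preference(judgments, reliability))
```

An unweighted majority would give response A two of three votes (about 0.67); down-weighting the low-reliability evaluator e3 raises A's share to about 0.89, so a noisy rater has less influence on the preference label that feeds the reward model.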

AI Safety Strategies Urged to Focus on Future Workplace Transformations

• A recent study argues that AI safety work should prioritize the changing future of work, including comprehensive support for meaningful labor amid automation.

• The paper underscores risks of income inequality due to structural labor market changes driven by AI, calling for global governance to enhance economic justice.

• It advocates for open AI development and copyright mechanisms to ensure fair compensation for AI training data, aiming to curtail monopolistic behaviors and promote innovation.

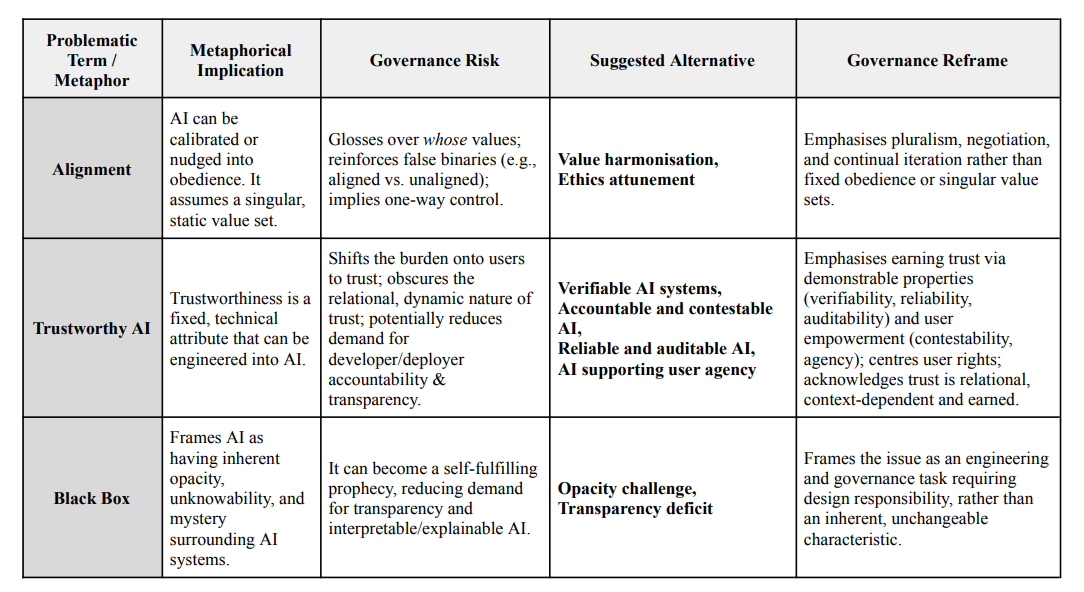

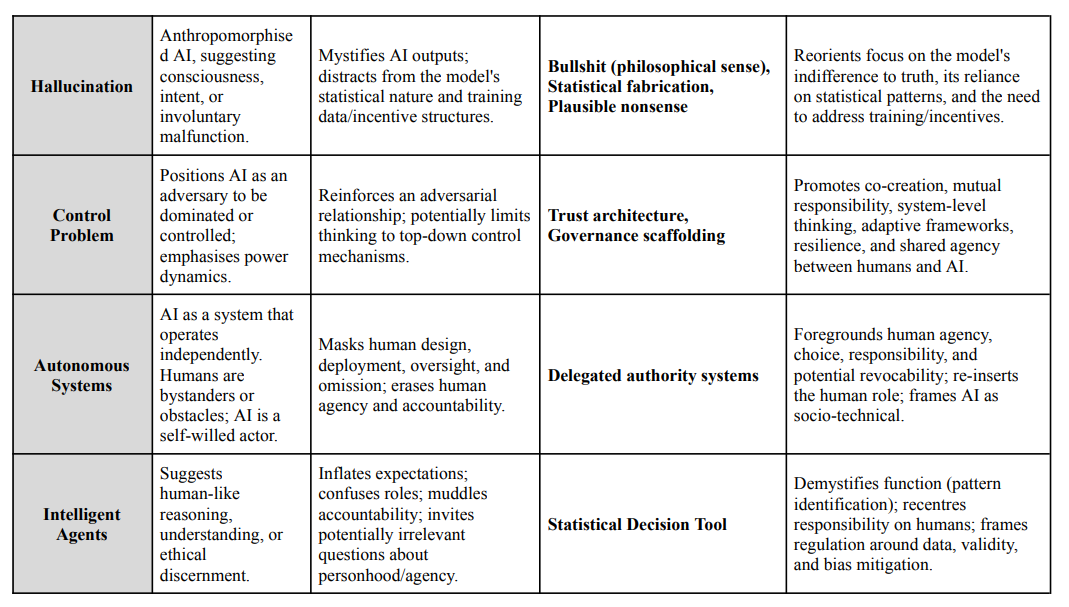

Naming and Framing: Linguistic Challenges in Cybersecurity and AI Governance

• Misleading terminology in cybersecurity and AI governance obscures human responsibility, inflates expectations, and undermines interdisciplinary collaboration, argues a recent research paper;

• Linguistic pitfalls in cybersecurity, such as the ‘weakest link’ narrative, are being mirrored in AI governance through terms like ‘black box’ and ‘hallucination’ with governance implications;

• The paper advocates a language-first approach to AI governance, rethinking dominant metaphors and developing a precise, inclusive vocabulary to build transparent, equitable frameworks.

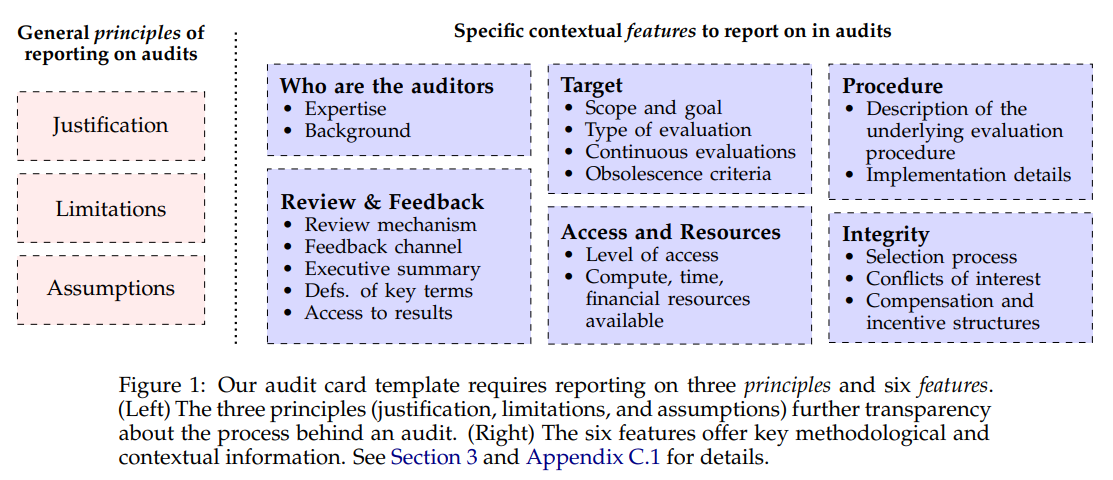

AI Audit Cards Proposed to Enhance Transparency and Trust in Evaluations

• Researchers propose "audit cards" to offer structured contextual information, enhancing transparency and interpretability in AI evaluations, addressing significant gaps in current reporting practices;

• The study identifies six key features for audit cards, including auditor identity and evaluation scope, which are often omitted in existing evaluation reports, highlighting the need for comprehensive guidelines;

• Analysis reveals most regulations and frameworks lack adequate guidance on rigorous reporting, underscoring the potential of audit cards to bolster trust and accountability in AI governance frameworks.

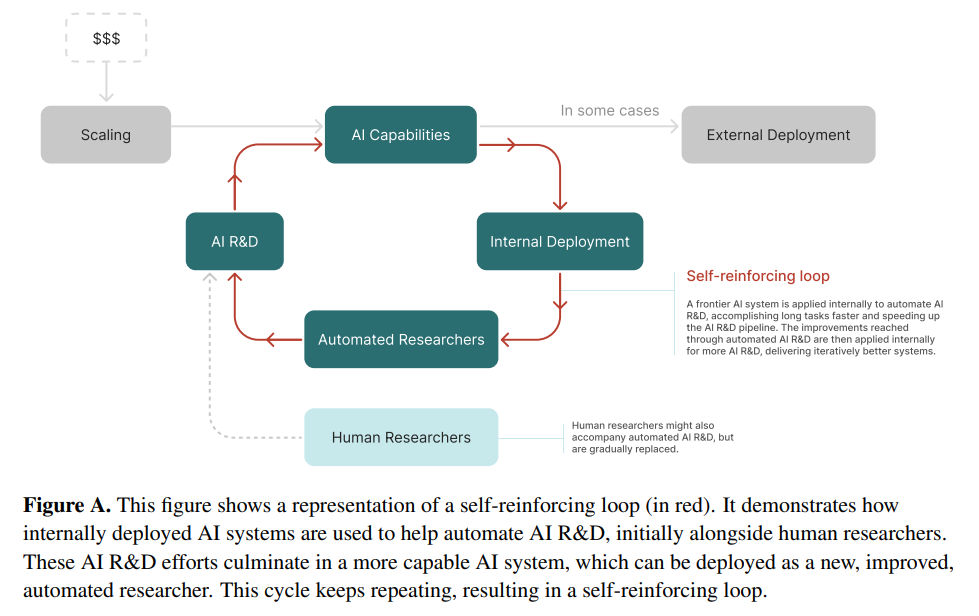

Governance Frameworks Needed for AI Systems Deployed Within Developer Companies

• A new report examines the internal deployment of advanced AI systems within frontier AI companies, stressing the need for robust governance to manage associated benefits and risks

• AI companies that prioritize internal deployment may hold back external releases to maintain a competitive edge, potentially accelerating the race toward artificial general intelligence and narrowing the window for policy intervention

• The report provides a detailed analysis of the governance challenges related to internal AI deployment, referencing safety-critical sectors and proposing initial recommendations for oversight frameworks.

About SoRAI: The School of Responsible AI (SoRAI) is a pioneering edtech platform by Saahil Gupta, AIGP, focused on advancing Responsible AI (RAI) literacy through affordable, practical training. Its flagship AIGP certification courses, built on real-world experience, drive AI governance education with innovative, human-centric approaches, laying the foundation for quantifying AI governance literacy. Subscribe to our free newsletter to stay ahead of the AI Governance curve.