Andrej Karpathy is launching a new kind of school that is "AI native"

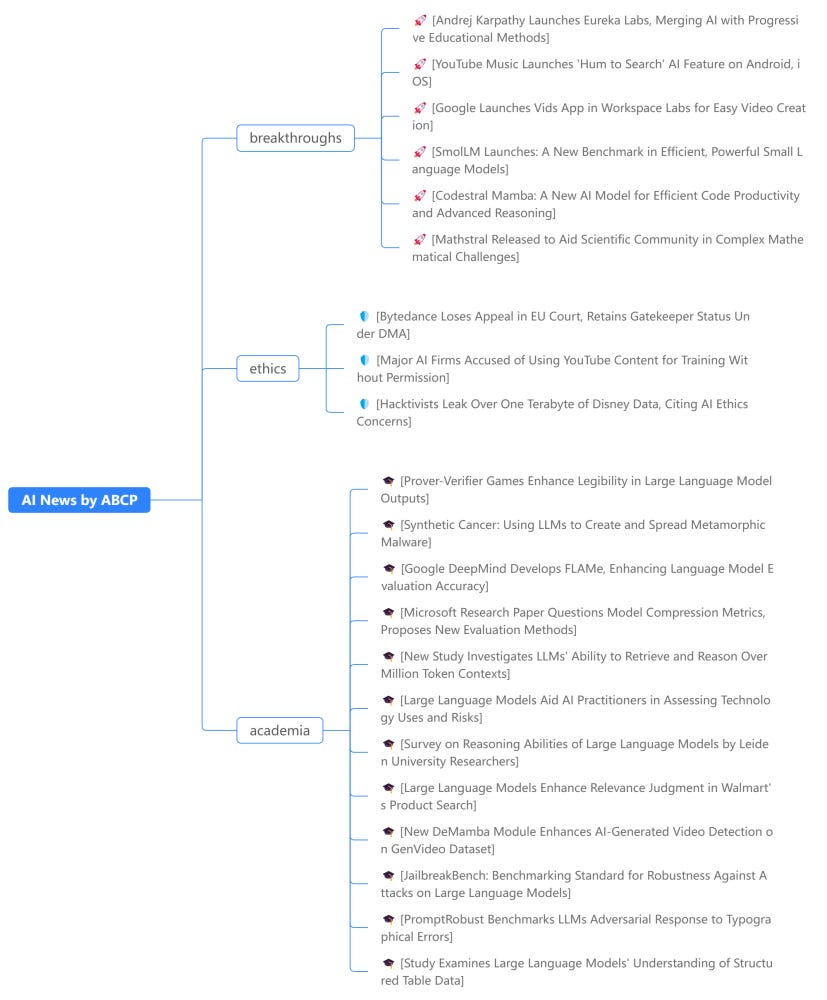

Hacktivists Leak Over a Terabyte of Disney Data, YouTube's 'Hum to Search', Google's New Vids and Gemini Apps, AI Misuses Creators' Content & Revolutionary Advances in AI Education, Development, and S

Today's highlights:

🚀 AI Breakthroughs

Andrej Karpathy Launches Eureka Labs, Merging AI with Progressive Educational Methods

• Andrej Karpathy launches Eureka Labs, a new AI-focused educational company aiming to blend generative AI with traditional teaching methods

• Eureka Labs introduces LLM101n, advertised as "the world's obviously best AI course," focusing on building and refining AI models

• The initiative will provide AI-assisted courses both online and in-person, with plans to expand into various academic subjects.

YouTube Music Launches 'Hum to Search' AI Feature on Android, iOS

• YouTube Music has launched 'Hum to Search' AI feature, enabling users to search songs by humming

• The feature is available globally on both Android and iOS platforms as of July 15

• AI usage in music streaming extends with YouTube Music testing 'conversational radio' for customized playlists.

Google Launches Vids App in Workspace Labs for Easy Video Creation

• Google's new Vids app in Workspace Labs enables users to easily create videos using documents, slides, and recordings

• Unlike other AI video tools, Vids focuses on assembling presentation videos rather than generating lifelike footage

• Users have access to a variety of templates and royalty-free content to start and enhance Google Vids presentations.

SmolLM Launches: A New Benchmark in Efficient, Powerful Small Language Models

• SmolLM introduces a series of small language models including 135M, 360M, and 1.7B parameter versions, trained on a new high-quality dataset

• The SmolLM models outperform competitors in their class on various benchmarks, testing common sense reasoning and world knowledge

• SmolLM's models are optimized for efficiency, enabling potential use on local devices from smartphones to laptops.

Codestral Mamba: A New AI Model for Efficient Code Productivity and Advanced Reasoning

• Codestral Mamba, a new generative AI architecture, offers free use, modification, and distribution, aiming to advance research with its unique capabilities

• Unlike traditional Transformer models, Codestral Mamba achieves linear time inference and can theoretically handle infinitely long sequences, enhancing code productivity

• The model, supported with 7.29 billion parameters, shows promising in-context retrieval and can be deployed via mistral-inference SDK or TensorRT-LLM, with raw weights available on HuggingFace.

Mathstral Released to Aid Scientific Community in Complex Mathematical Challenges

• Mathstral, a new AI tool tailored for complex mathematical problem-solving, excels in STEM fields by leveraging advancements from its predecessor, Mistral 7B

• Achieving impressive results, Mathstral scored 56.6% on MATH and 63.47% on MMLU benchmarks, showcasing its capability in academic environments

• Available for use or fine-tuning on HuggingFace, Mathstral encourages academic collaboration, with features supported by la Plateforme's fine-tuning capabilities.

⚖️ AI Ethics

Bytedance Loses Appeal in EU Court, Retains Gatekeeper Status Under DMA

• Bytedance, operator of TikTok, loses an appeal in a Luxembourg court regarding its status as a gatekeeper under the Digital Markets Act (DMA)

• The General Court upholds the view that Bytedanace meets the criteria for gatekeeper designation due to TikTok's significant user base and market value in the EU

• Despite Bytedance's arguments of being a competitive challenger in the market, the Court recognizes its dominant market presence, invalidating the company's plea.

Major AI Firms Accused of Using YouTube Content for Training Without Permission

• Major AI firms like Apple, Nvidia, and Salesforce used YouTube video subtitles to train AI, breaching YouTube's Terms of Service

• Over 173,000 video subtitles extracted from 48,000 channels without creators' permission, raising copyright and ethical concerns

• Legal ramifications loom as creators and publishers push back against unauthorized use of their content in AI training datasets.

• Nebula CEO Dave Wiskus criticizes the use of creators' content without permission to train AI, highlighting potential replacement of artists by AI technologies

• EleutherAI's dataset "the Pile", used by firms like Apple and Nvidia, included YouTube subtitles and materials from a variety of sources, raising concerns about content usage without consent

• Major tech companies acknowledge using "the Pile" for AI training despite concerns over content ownership and dataset biases, which include profanity and discriminatory language.

Hacktivists Leak Over One Terabyte of Disney Data, Citing AI Ethics Concerns

• Walt Disney Co. suffers extensive data breach, over one terabyte of confidential information leaked by hacktivist group NullBulge

• Hackers claim leak was driven by disputes over AI's impact on creative industries, targeting Disney's use of AI and artist compensation practices

• Disney is actively investigating the breach, which was reportedly executed via compromised Slack account access.

🎓AI Academia

Prover-Verifier Games Enhance Legibility in Large Language Model Outputs

• Prover-Verifier games enhance the legibility of Large Language Model outputs by using a training algorithm inspired by prior research

• The training involves a cycle where helpful provers create checkable solutions and sneaky provers attempt to deceive the verifiers

• Improved legibility in LLMs aids human verifiers in more accurately assessing solutions, thereby aligning model outputs with human understandability.

Synthetic Cancer: Using LLMs to Create and Spread Metamorphic Malware

• Novel metamorphic malware employing LLMs to rewrite code and evade detection was detailed in a submission to the Swiss AI Safety Prize

• The malware spreads through socially engineered emails, drafted by LLMs to encourage recipients to unknowingly execute the worm

• Presented research emphasizes the urgent need for studies on LLMs' role in cybersecurity threats, highlighting the risks of intelligent malware exploitation.

Google DeepMind Develops FLAMe, Enhancing Language Model Evaluation Accuracy

• FLAMe, a new family of Large Autorater Models, outperforms industry giants like GPT-4 and Claude-3 in multiple autorater benchmarks

• FLAMe-RM-24B model leads in RewardBench accuracy among models trained with permissively licensed data, reaching 87.8%

• Google DeepMind’s FLAMe introduces a novel tail-patch fine-tuning method, significantly reducing required training data for competitive performance.

Microsoft Research Paper Questions Model Compression Metrics, Proposes New Evaluation Methods

• Microsoft Research's latest study indicates that accuracy alone is insufficient for assessing LLM compression methods such as quantization and pruning

• The research highlights the importance of additional metrics like KL-Divergence and % flips, showing significant discrepancies in model behavior despite similar accuracy levels

• The study argues that even with comparable accuracy, compressed models can notably differ in performance, notably in free-form generative tasks like MT-Bench.

New Study Investigates LLMs' Ability to Retrieve and Reason Over Million Token Contexts

• "NeedleBench" assesses bilingual long-context capabilities of LLMs, testing retrieval and reasoning in contexts up to 1 million tokens

• Ancestral Trace Challenge introduced within NeedleBench to evaluate LLMs on complex reasoning in extensive documents

• Leading open-source models like GPT-4 Turbo and Claude 3 series extend support for long-context windows, greatly enhancing text comprehension capabilities.

Large Language Models Aid AI Practitioners in Assessing Technology Uses and Risks

• ExploreGen, a Large Language Model framework, aids AI practitioners in envisioning AI technologies' uses and assessing their risks based on EU AI Act regulations

• In a series of nine user studies, 25 AI practitioners found ExploreGen's use case scenarios realistic and its risk classifications 94.5% accurate

• Despite the participants' unfamiliarity with many generated scenarios, they rated them as having high adoption potential and transformational impact.

Survey on Reasoning Abilities of Large Language Models by Leiden University Researchers

• Breakthroughs in language model scaling enable in-context learning for tasks like translation and summarization without prior specific training

• Enhancements in Chain-of-thought prompt learning showcase potential System 2 reasoning abilities in LLMs, addressing major questions in artificial general intelligence

• The study suggests further research into prompt-based reasoning, sequential decision processes, and the intersect with reinforcement learning for future AI advancements.

Large Language Models Enhance Relevance Judgment in Walmart's Product Search

• Large Language Models (LLMs) have been deployed by Walmart Global Technology to enhance relevance judgments in product searches

• The recent research focuses on finetuning LLMs using a unique dataset of multi-million query-item pairs with human-annotated relevancy

• Improvements in LLM accuracy for product search could potentially match human-level relevance evaluations, reshaping e-commerce strategies.

New DeMamba Module Enhances AI-Generated Video Detection on GenVideo Dataset

• DeMamba introduces GenVideo, the first AI-generated video detection dataset, featuring over one million videos for robust testing

• GenVideo enhances fake video detection with diverse generation techniques and categories, tailored for real-world applications

• DeMamba module improves detector performance by analyzing temporal and spatial inconsistencies in AI-generated videos.

JailbreakBench: Benchmarking Standard for Robustness Against Attacks on Large Language Models

• JailbreakBench introduces an open-source robustness benchmark aimed at countering jailbreaking attacks on large language models (LLMs)

• The benchmark includes a repository of state-of-the-art adversarial prompts and a dataset designed to reflect responsible AI practices

• Additionally, JailbreakBench features a standardized evaluation framework and a leaderboard tracking both attack and defense performance.

PromptRobust Benchmarks LLMs Adversarial Response to Typographical Errors

• 'PromptRobust' introduces a new benchmark for evaluating the robustness of Large Language Models (LLMs) against adversarial prompts, revealing significant vulnerability;

• The study employs 4,788 crafted adversarial prompts across various tasks such as sentiment analysis and machine translation to test the resilience of LLMs;

• Results indicate that current LLMs struggle with maintaining accuracy when faced with minor prompt modifications like typos or synonym substitutions.

Study Examines Large Language Models' Understanding of Structured Table Data

• Large language models like GPT-3.5 and GPT-4 assessed for structured table data understanding through a newly developed benchmark

• Performance improvements noted in structured querying tasks when special prompt techniques were utilized

• Open source benchmark released, promising to aid future research in enhancing LLMs' comprehension of tabular data.

About us: We are dedicated to reducing Generative AI anxiety among tech enthusiasts by providing timely, well-structured, and concise updates on the latest developments in Generative AI through our AI-driven news platform, ABCP - Anybody Can Prompt!