For those who missed the nail-biting drama around California's much-hyped upcoming AI Safety Bill (𝗦𝗕-𝟭𝟬𝟰𝟳), here's a brief summary:

𝗪𝗵𝗮𝘁 𝗜𝘀 𝘁𝗵𝗲 𝗕𝗶𝗹𝗹 𝗔𝗯𝗼𝘂𝘁?

Senate Bill 1047 targets AI models trained above specific compute and cost thresholds. Unlike the EU AI Act, it regulates at the model level rather than the application level. (Bill details: https://lnkd.in/gdMfe_XR)

𝗠𝗼𝘀𝘁 𝗖𝗼𝗻𝘁𝗿𝗼𝘃𝗲𝗿𝘀𝗶𝗮𝗹 𝗥𝗲𝗾𝘂𝗶𝗿𝗲𝗺𝗲𝗻𝘁𝘀

The bill holds developers responsible for misuse of their models, even when someone else has modified or fine-tuned those models.

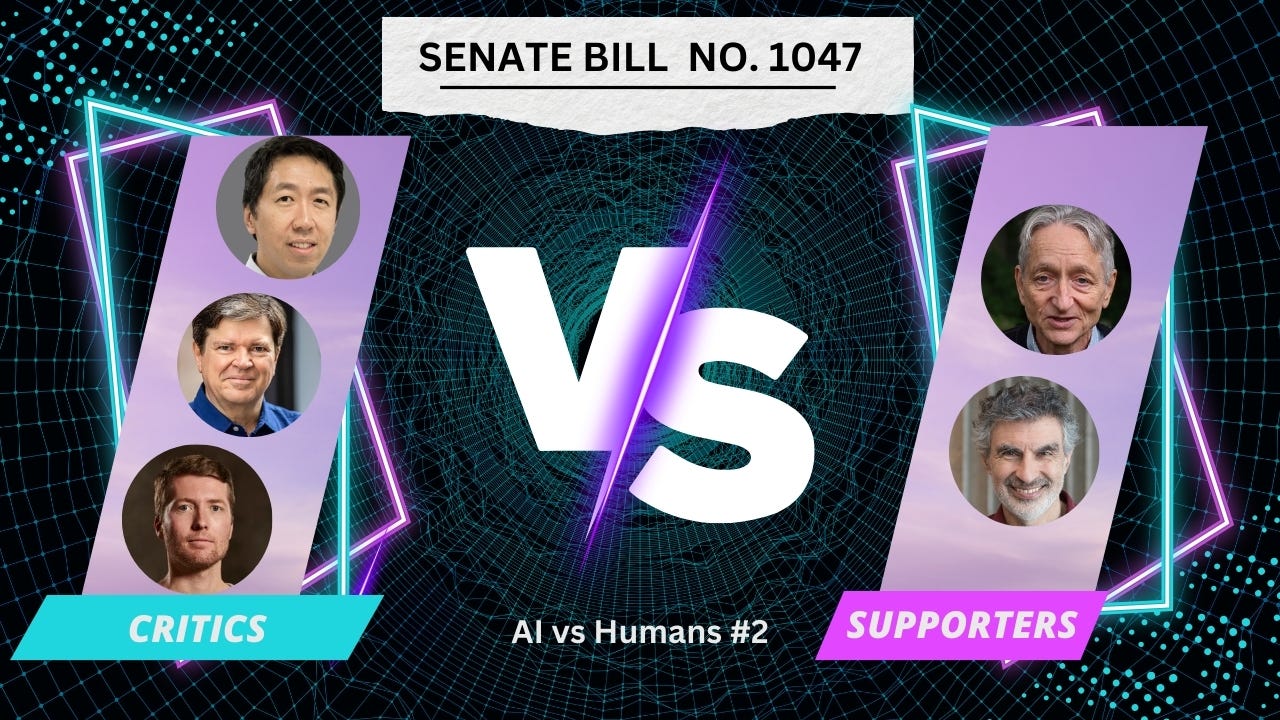

𝗖𝗿𝗶𝘁𝗶𝗰𝘀

A͟n͟d͟r͟e͟w͟ ͟N͟g͟: Compares it to holding motor manufacturers liable for how their motors are misused. He argues that regulating AI models instead of specific applications could stifle innovation and beneficial uses of AI. (https://lnkd.in/gScD4Dem)

Y͟a͟n͟n͟ ͟L͟e͟C͟u͟n͟: Warns of "apocalyptic consequences on the AI ecosystem" if R&D is regulated. He believes the bill creates obstacles for open research and open-source AI platforms. (https://lnkd.in/gm-nzdHg)

𝗦𝘂𝗽𝗽𝗼𝗿𝘁𝗲𝗿𝘀

G͟e͟o͟f͟f͟r͟e͟y͟ ͟H͟i͟n͟t͟o͟n͟ and Y͟o͟s͟h͟u͟a͟ ͟B͟e͟n͟g͟i͟o͟: View it as a sensible approach to balancing AI's potential against its risks. (https://lnkd.in/gV6Tj7gx)

𝗣𝗼𝗶𝗻𝘁 𝗼𝗳 𝗗𝗲𝗯𝗮𝘁𝗲

Should regulation be at the model level or the application level?

𝗢𝘂𝗿 𝗧𝗮𝗸𝗲

While both perspectives are important, after reading the requirements in detail I see a strong need for more Responsible AI (RAI) experts, ethicists, policymakers, and lawyers to work closely with AI teams from the very beginning. I envision a more balanced split: 80% of the responsibility at the application level (especially for fine-tuned models) and 20% at the model-developer level. This distribution could better balance innovation with safety concerns.

𝗧𝗵𝗼𝘂𝗴𝗵𝘁𝘀?
